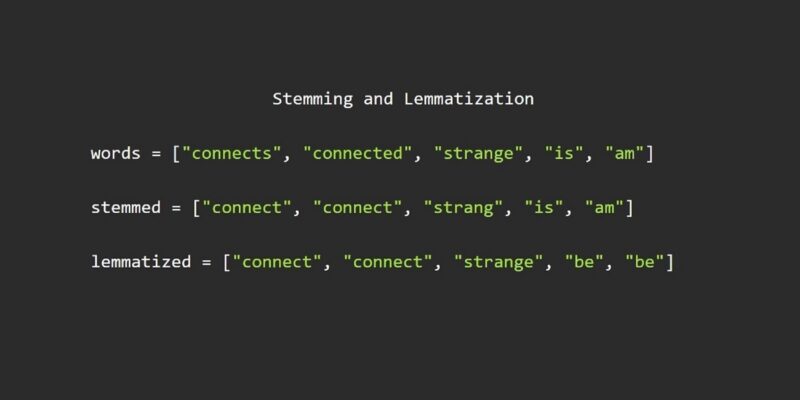

Stemming and Lemmatization

Stemming and Lemmatization is the process of converting inflectional words into their root form. It is the next step in pre-processing of NLP after Stopwords and Filtering.

Stemming

Stemming refers to the crude chopping of words to reduce into their stem words. A Stemmer follows a set of pre-defined rules to remove affixes from inflectional words. For example: connects, connected, connection can be converted to connect.

Porter’s Stemmer

There are multiple stemming algorithms to chose from, Porter’s Stemmer being one of the most used. NLTK provides this algorithm as PorterStemmer. To use this stemmer, we need to download it through Python Shell.

import nltk

nltk.download("punkt")Once the download is complete, we can use the PorterStemmer directly.

from nltk.stem import PorterStemmer

# Initialize the Stemmer instance

stemmer = PorterStemmer()

words = ["connects", "connected", "strange", "is", "am"]

stemmed = [stemmer.stem(word) for word in words]

print(stemmed)Output

["connect", "connect", "strang", "is", "am"]Due to being crude in nature, a Stemmer may return a result that is not a word. For example, strange was stemmed to strang, which has no meaning. Similarly, the root words for is and am is be. A Stemmer algorithm fails to handle such instances.

Lemmatization

Lemmatization is similar to Stemming, however, a Lemmatizer always returns a valid word. Stemming uses rules to cut the word, whereas a Lemmatizer searched for the root word, also called as Lemma from the WordNet. Moreover, lemmatization takes care of converting a word into its base form; i.e. words like am, is, are will be converted to “be”.

WordNetLemmatizer

Again, NLTK provides a WordNetLemmatizer to use off-the-shelf. However, this requires the POS tags of the word for correct results. For now, we manually provide the POS tags. Further, you can refer the blog on Part Of Speech Tagging – POS Tagging in NLP to know about POS tags automate the process of POS tagging.

from nltk.stem import WordNetLemmatizer

from nltk.corpus import wordnet

# Initialize the Lemmatizer instance

lemmatizer = WordNetLemmatizer()

words = [("connects", "v"), ("connected", "v"), ("strange", "a"), ("is", "v"), ("am", "v")]

lemmatized = [lemmatizer.lemmatize(*word) for word in words]

print(lemmatized)Output

["connect", "connect", "strange", "be", "be"]This, using a Lemmatizer we get the correct root words as expected.

Stemming vs Lemmatization

Now that we know what Stemming and Lemmatization are, one may ask why to use Stemming at all if Lemmatization provides correct results?

A Stemmer is very fast in comparison to Lemmatization. Moreover, Lemmatization requires POS tags to perform correctly. In our example, we manually provided the POS tags. Although when dealing in an application, we need to perform this POS tagging. Then, each word is searched for its base form from the WordNet. This increases the computation time and may not be optimal.

In some cases, it might be better to use a Stemmer than to wait for Lemmatization. Whereas, if precision is important in an application, one can use Lemmatization over Stemming.

Further in the series: N-Grams in Natural Language Processing

wordnetlemmatizer use default noun POS,

one you could consider running lemma first on default then stemmer second. could be a good trade-off on speed/accuracy?