For machines to understand natural language, we first need to divide the input text into smaller chunks. Breaking paragraphs into sentences, and sentences into individual words, makes the text easier for machines to interpret. This is where tokenization comes into Natural Language Processing (NLP).

If you’re new to Natural Language Processing, we recommend reading A Complete Introduction to Natural Language Processing first.

Natural Language Toolkit (NLTK)

NLTK is a leading platform for building Python programs that work with human language data. It has built-in support for dozens of corpora and trained models, and it provides a suite of text processing tools for classification, tokenization, summarization, stemming, tagging, parsing and other NLP tasks.

It is a free, open-source, community-driven project. NLTK is suitable for linguists, engineers, students, educators, researchers, and industry users alike. To get started with NLTK, you need to have Python installed. You can then install the NLTK library using pip.

pip install nltk

While NLTK comes with various useful tools, our focus in this series will be on text processing.

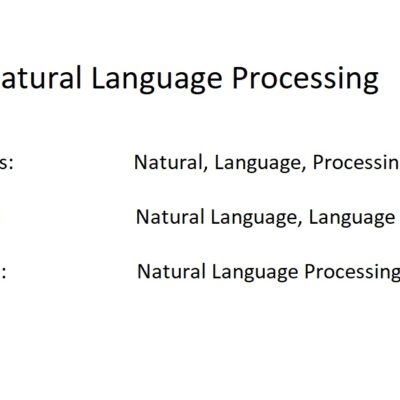

Tokenization

Tokenization is one of the most common tasks in text processing. It is the process of separating a given text into smaller units called tokens.

An input text is a sequence of words that together form sentences. We need to break this text into units that a machine can process, and tokenization does exactly that.

Tokenization can be classified into two types:

Sentence Tokenization

Word Tokenization

Sentence tokenization is the process of dividing a text into its component sentences. The basic idea is simple. In layman’s terms: split the text wherever there is an end-of-sentence punctuation mark. For example, English has three punctuation marks that indicate the end of a sentence: !, . and ?. Other languages have their own closing punctuation marks.
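The punctuation rule above can be sketched in a few lines of plain Python. This is only a minimal illustration of the idea, not how NLTK works internally; the helper name naive_sent_split is hypothetical, and the sketch uses nothing beyond the standard re module.

```python
import re

def naive_sent_split(text):
    """Split text after '.', '!' or '?' when the mark is followed by whitespace."""
    # The lookbehind keeps each punctuation mark attached to its sentence.
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [part for part in parts if part]

text = (
    "What is this paragraph about? "
    "The paragraph displays use of sentence tokenization. "
    "Notice how all the sentences will break on closing punctuations!"
)

for sentence in naive_sent_split(text):
    print(sentence)

# The same rule also splits after an abbreviation, which is not what we want:
print(naive_sent_split("Dr. Smith arrived. He was late."))
# ['Dr.', 'Smith arrived.', 'He was late.']
```

The last call shows the weakness of a purely punctuation-based rule: a period does not always end a sentence. This is one reason to prefer a dedicated tokenizer over a hand-written split.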

While we can manually break sentences on these punctuation marks, Python’s NLTK library provides the necessary tools, so we need not worry about splitting sentences ourselves.

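import nltk

# One-time download of the sentence tokenizer data
# (recent NLTK releases may ask for "punkt_tab" instead).
nltk.download("punkt")

text = "What is this paragraph about? The paragraph displays use of sentence tokenization. Notice how all the sentences will break on closing punctuations!"

# Split the paragraph into a list of sentences.
sentences = nltk.sent_tokenize(text)

for sentence in sentences:
    print(sentence)

Output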

What is this paragraph about?

The paragraph displays use of sentence tokenization.

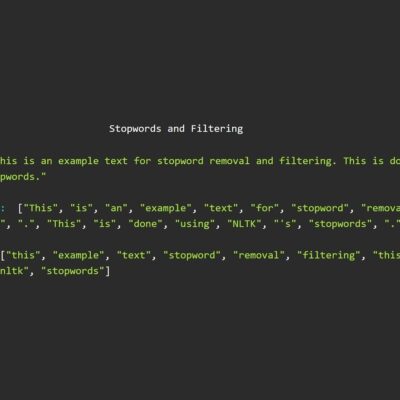

Notice how all the sentences will break on closing punctuations!

Word tokenization is the process of dividing a text into its component words. The basic idea is to split the text at every space, while also taking care of punctuation marks. It is easier to deal with individual words than with a whole sentence, so we further tokenize sentences into words.
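To see why splitting on spaces alone is not enough, here is a minimal sketch in plain Python. The helper name naive_word_tokenize is hypothetical and the regular expression is a simplification chosen for illustration; it is not the rule set NLTK uses.

```python
import re

sample = "Hello, world! Tokenization is fun."

# Splitting on whitespace leaves punctuation glued to the words.
print(sample.split())
# ['Hello,', 'world!', 'Tokenization', 'is', 'fun.']

def naive_word_tokenize(text):
    """Return words and punctuation marks as separate tokens."""
    # \w+      matches a run of letters, digits or underscores
    # [^\w\s]  matches a single character that is neither word nor whitespace
    return re.findall(r"\w+|[^\w\s]", text)

print(naive_word_tokenize(sample))
# ['Hello', ',', 'world', '!', 'Tokenization', 'is', 'fun', '.']
```

A simple pattern like this still handles contractions crudely: it breaks "split's" into three tokens (split, ' and s), whereas a linguistically informed tokenizer can keep the 's together as a single token.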

Along with Sentence Tokenization, NLTK provides tools to perform Word Tokenization.

import nltk

text = "This is an example text for word tokenization. Word tokenization split's texts into individual words."

words = nltk.word_tokenize(text)

print(words)

Output

["This", "is", "an", "example", "text", "for", "word", "tokenization", ".", "Word", "tokenization", "split", "'s", "texts", "into", "individual", "words", "."]

This is an example of word tokenization, but we cannot use this data as-is. It requires further pre-processing steps, which are covered in the next blog: Stopwords and Filtering in Natural Language Processing.