Meta dropped SAM 3 on November 19, and computer vision developers are calling it a “seminal moment.” The third iteration of the Segment Anything Model lets you segment any object in images or videos using simple text prompts like “the red car” or “person’s hand”: no manual selection, no custom model training, no ML PhD required. Roboflow, an early access partner, says this could go down in history as “the GPT Moment” for computer vision. That’s not hyperbole.

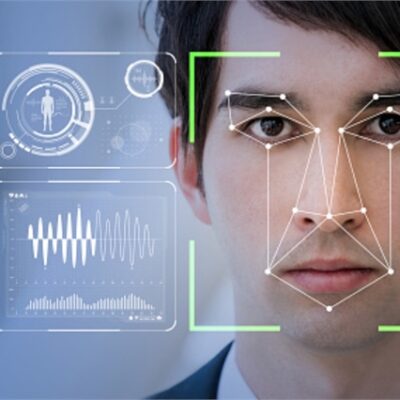

The Breakthrough: Vision Meets Natural Language

Here’s what changed. SAM 1 and SAM 2 required visual prompts: you had to click objects, draw bounding boxes, or manually trace outlines. SAM 3 makes those optional. Type what you want to segment in plain English, and it handles the rest. “Segment the red car.” “Track the person’s hand through this video.” Done.

This is zero-shot segmentation with an interface anyone can use. No training data, no labeled datasets, no weeks of model fine-tuning. You describe what you see, and SAM 3 masks it, on images and videos alike, with streaming support built in.

The parallel to GPT is obvious. Before GPT-3, NLP required linguistics expertise, rule-based systems, or supervised learning on massive labeled datasets. After GPT-3, you write a prompt and get text. SAM 3 does the same for vision, which makes it less an incremental improvement than a different category of tool.

Developers Are Noticing

The Hacker News thread hit 590 points within 24 hours. Roboflow, which had early access, wrote: “This feels like a seminal moment for computer vision. I think there’s a real possibility this launch goes down in history as ‘the GPT Moment’ for vision.”

Another developer described the potential of pairing it with LLMs as “insane unlocks.” Combine SAM 3 with a language model, and multimodal agents can suddenly understand and manipulate visual environments as naturally as they process text. That’s not a future possibility; people are building it now.
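A minimal sketch of that composition, in Python. Everything here is hypothetical: `extract_phrases` stands in for a language-model call that turns a request into short noun phrases, `segment` stands in for a text-prompted segmenter such as SAM 3, and `Detection` and `ground_request` are illustrative names, not part of any Meta or SAM 3 API. The point is the shape of the loop: the language model decides what to look for, and the vision model decides where it is.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Mask = List[List[int]]  # hypothetical mask format: rows of 0/1 pixels


@dataclass
class Detection:
    prompt: str   # noun phrase that produced this result
    mask: Mask    # binary mask for one matching instance
    score: float  # segmenter confidence for the instance


def ground_request(
    request: str,
    extract_phrases: Callable[[str], List[str]],  # stand-in for an LLM call
    segment: Callable[[str], List[Detection]],    # stand-in for a text-prompted segmenter
    min_score: float = 0.5,
) -> Dict[str, List[Detection]]:
    """Map a free-form request to confident segmentation results, keyed by phrase."""
    results: Dict[str, List[Detection]] = {}
    for phrase in extract_phrases(request):
        # Drop low-confidence instances before the agent acts on them.
        results[phrase] = [d for d in segment(phrase) if d.score >= min_score]
    return results


# Fake components so the sketch runs without any model.
def fake_llm(request: str) -> List[str]:
    return ["red car", "person's hand"]


def fake_segmenter(phrase: str) -> List[Detection]:
    return [
        Detection(phrase, [[1, 0], [0, 0]], 0.9),
        Detection(phrase, [[0, 1], [0, 0]], 0.2),
    ]


if __name__ == "__main__":
    found = ground_request("blur the red car, keep the hand sharp", fake_llm, fake_segmenter)
    for phrase, detections in found.items():
        print(phrase, len(detections))
```

Keeping both models behind plain function types means a real LLM client or a real segmentation backend can be swapped in later without touching the orchestration logic.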

One comment stood out: “Text is definitely the right modality.” The commenter is right. Visual interfaces for computer vision tools were always a stopgap: natural language was the goal, and the models just needed to catch up. SAM 3 closes that gap.

What This Means in Practice

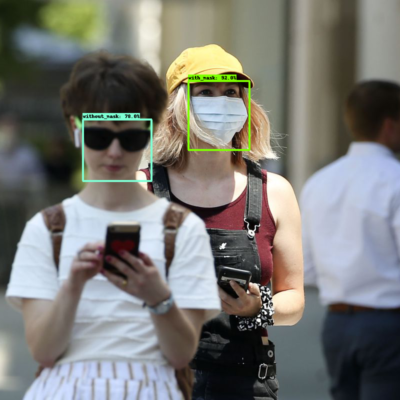

Rapid prototyping is the immediate win: test computer vision ideas in hours instead of months. No dataset collection, no annotation pipeline, no model training. Describe what you want SAM 3 to segment, check whether it works, and iterate. Developers are also already using it to generate training data for specialized models, with SAM 3 as the teacher and custom lightweight models as the students.
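The “see if it works” step and the teacher-student step can both be sketched in a few lines of plain Python. This assumes SAM 3’s output has already been converted to a binary mask (a list of rows of 0/1 pixels). The hypothetical helper `mask_iou` scores a mask against a small hand-labeled set to judge whether a prompt is good enough, and the equally hypothetical `mask_to_yolo` turns an accepted mask into a YOLO-style `class cx cy w h` label (normalized to the image size) that a lightweight detector could train on. Neither is part of SAM 3.

```python
from typing import List, Optional

Mask = List[List[int]]  # assumed format: rows of 0/1 pixels, same size as the image


def mask_iou(a: Mask, b: Mask) -> float:
    """Intersection-over-union of two same-sized binary masks."""
    inter = union = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            inter += 1 if (pa and pb) else 0
            union += 1 if (pa or pb) else 0
    return inter / union if union else 1.0  # two empty masks count as a perfect match


def mask_to_yolo(mask: Mask, class_id: int) -> Optional[str]:
    """Bounding box of a mask as a YOLO-style label: 'class cx cy w h', normalized."""
    h, w = len(mask), len(mask[0])
    coords = [(x, y) for y in range(h) for x in range(w) if mask[y][x]]
    if not coords:
        return None  # nothing was segmented, so there is no label to emit
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    # Pixel indices are inclusive; +1 turns the max index into the box's right/bottom edge.
    x0, x1 = min(xs), max(xs) + 1
    y0, y1 = min(ys), max(ys) + 1
    cx, cy = (x0 + x1) / 2 / w, (y0 + y1) / 2 / h
    bw, bh = (x1 - x0) / w, (y1 - y0) / h
    return f"{class_id} {cx:.4f} {cy:.4f} {bw:.4f} {bh:.4f}"


if __name__ == "__main__":
    predicted = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]  # stand-in for a SAM 3 mask
    hand_label = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 1], [0, 0, 0, 0]]
    print(f"IoU vs hand label: {mask_iou(predicted, hand_label):.2f}")
    print(mask_to_yolo(predicted, class_id=0))
```

One reasonable pattern is to accept generated labels automatically only when spot-checked IoU clears a threshold and to route the rest to human review; the right threshold depends on the task.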

Video editing gets a major upgrade. Rotoscoping, greenscreen effects, and object masking used to require frame-by-frame manual work or expensive software; SAM 3 handles them with a text prompt. Instagram Edits and the Meta AI app are also getting SAM 3 integration soon, which means consumer-grade tools with professional-level capabilities.
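Once a mask exists, a greenscreen-style background swap is ordinary alpha compositing: each output pixel is `alpha * foreground + (1 - alpha) * background`. The sketch below assumes the mask has been turned into a per-pixel alpha matte in [0, 1] (a binary mask gives a hard cut; feathering its edges would give a softer one). The function names are hypothetical, and this illustrates the general technique, not how Instagram Edits or the Meta AI app implement it.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # RGB, each channel 0-255
Image = List[List[Pixel]]     # rows of pixels
Matte = List[List[float]]     # per-pixel alpha in [0, 1]; 1.0 keeps the foreground


def composite(fg: Image, bg: Image, alpha: Matte) -> Image:
    """Blend fg over bg: out = alpha * fg + (1 - alpha) * bg, per channel."""
    out: Image = []
    for fg_row, bg_row, a_row in zip(fg, bg, alpha):
        row: List[Pixel] = []
        for f, b, a in zip(fg_row, bg_row, a_row):
            r, g, bl = (round(a * fc + (1 - a) * bc) for fc, bc in zip(f, b))
            row.append((r, g, bl))
        out.append(row)
    return out


def mask_to_matte(mask: List[List[int]]) -> Matte:
    """Hard matte from a binary mask: 1 keeps the subject, 0 shows the new background."""
    return [[1.0 if v else 0.0 for v in row] for row in mask]


if __name__ == "__main__":
    subject = [[(200, 50, 50), (200, 50, 50)]]  # one row, two red-ish pixels
    backdrop = [[(0, 0, 255), (0, 0, 255)]]     # replacement background (blue)
    mask = [[1, 0]]                             # hypothetical mask: left pixel is the subject
    print(composite(subject, backdrop, mask_to_matte(mask)))
```

For video, the same blend runs once per frame with that frame’s mask, which is exactly the frame-by-frame work that used to be done by hand.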

The technical specs matter for production use. SAM 3 runs on consumer GPUs (it fits on an RTX 3090 with 24GB of VRAM), averages around 4 seconds of latency, and ships under a commercial-friendly license. That latency isn’t real-time for every use case, but it’s close enough for most applications. At 3.5GB, the model is small enough that edge deployment is plausible.
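The spec numbers are easier to reason about with a little arithmetic. This sketch assumes the 3.5GB checkpoint stores weights in fp32 at 4 bytes per parameter (an assumption; the storage precision isn’t stated here). Under that assumption it implies roughly 875M parameters, about 1.75GB of weights at fp16, and around 20GB of headroom on a 24GB card. It also compares the quoted ~4 second latency against the per-frame budget at 30 fps. The figures are back-of-the-envelope: they ignore activations, framework overhead, and the GB/GiB distinction.

```python
# Back-of-the-envelope sizing for a model checkpoint and a latency budget.
# Assumption: standard bytes-per-parameter values for common storage precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def implied_params(checkpoint_gb: float, dtype: str) -> float:
    """Parameter count implied by a checkpoint size, if stored in `dtype`."""
    return checkpoint_gb * 1e9 / BYTES_PER_PARAM[dtype]


def weights_gb(num_params: float, dtype: str) -> float:
    """Memory needed for the weights alone (no activations or overhead)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def frame_budget_ms(fps: float) -> float:
    """Time available per frame to keep up with a video stream."""
    return 1000.0 / fps


if __name__ == "__main__":
    params = implied_params(3.5, "fp32")  # assumes the 3.5GB checkpoint is fp32
    print(f"implied parameters: {params / 1e6:.0f}M")
    print(f"fp16 weights: {weights_gb(params, 'fp16'):.2f} GB")
    print(f"headroom on a 24 GB card: {24 - weights_gb(params, 'fp32'):.1f} GB")
    print(f"4 s latency vs 30 fps budget: {4000 / frame_budget_ms(30):.0f}x slower")
```

Whether ~4 seconds counts as “close enough” depends mostly on the workload: batch labeling and interactive editing tolerate it far better than live video does.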

SAM’s Evolution: Iteration as Strategy

SAM 3 is the third step in Meta’s deliberate progression. SAM 1 (2023) introduced zero-shot image segmentation with visual prompts. SAM 2 (2024) added video tracking but kept the visual interface. SAM 3 (2025) brings open vocabulary and streaming.

Each version built the foundation for the next. SAM 1 proved the concept. SAM 2 extended it to video. SAM 3 made it accessible. That’s how you dominate a category—not with one big launch, but with consistent iteration that compounds. The SAM series is now the de facto standard for segmentation. SAM 3 cements that position.

Meta’s Vision Dominance

This isn’t just about one model. Meta is executing a long game in computer vision. Open-source everything (Apache 2.0 license), partner with key players early (Roboflow, VLM.run), integrate into consumer products (Instagram), and iterate faster than competitors.

Google has Gemini, Anthropic has Claude vision, OpenAI has GPT-4V. But SAM is purpose-built for segmentation, and that focus shows. General-purpose vision-language models do many things adequately; SAM 3 does one thing exceptionally well. In a field where specialization matters, that’s the winning bet.

The next move is obvious: performance improvements to hit true real-time, mobile deployment for on-device processing, and specialized variants for domains like medical imaging or satellite analysis. SAM 4 is probably already in development. If the pattern holds, it’ll be another leap forward.

The Bottom Line

Computer vision just became as accessible as building a chatbot. If you can write a prompt, you can segment objects in images and videos. No ML degree, no training infrastructure, no months of work. That’s the GPT moment—the inflection point where a technology goes from “expert tool” to “developer default.”

SAM 3 crossed that line yesterday. The question now isn’t whether it’s transformative. It’s what developers build with it.