Moonshot AI just dropped a bomb on the AI industry: Kimi K2 Thinking, the first trillion-parameter open-source reasoning model. Released November 6th and already trending #5 on Hacker News with 632 points, Kimi K2 directly challenges OpenAI’s closed o1 model and Anthropic’s Claude Opus. For developers frustrated with API costs and vendor lock-in, this could be the game-changer they’ve been waiting for—if they can actually run it.

The First Trillion-Parameter Open Reasoning Model

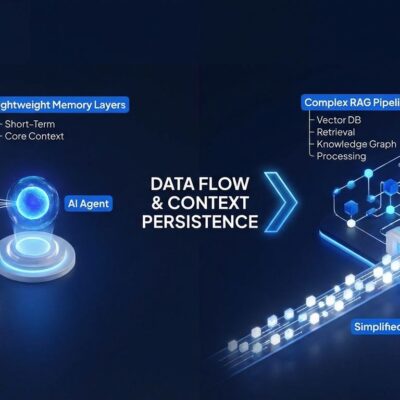

Kimi K2 isn’t just another open-source LLM. It’s a reasoning model—the same class as OpenAI’s o1 and Claude Opus. These models use Chain of Thought (CoT) reasoning, breaking down complex problems into logical steps rather than jumping straight to answers. Think advanced coding problems, mathematical proofs, system architecture design—tasks where you need genuine logical inference, not just pattern matching.

Until now, frontier-scale reasoning models were mostly commercial territory. If you wanted o1’s capabilities, you paid OpenAI per token. If you wanted Claude Opus, you went through Anthropic’s API. Open-weight reasoning models such as DeepSeek-R1 existed, but none at trillion-parameter scale. Kimi K2 changes that equation.

At trillion-parameter scale, Kimi K2 sits in the size range commonly attributed to the closed frontier models, though OpenAI has not published parameter counts for GPT-4 or o1. This isn’t a smaller compromise model; it’s competing head-to-head with the closed giants. Moonshot AI, a Chinese company, is making a direct play for developers who want reasoning capabilities without the commercial strings attached.

Why Developers Should Care About Kimi K2

The cost argument alone is compelling. OpenAI’s o1 charges per token, and for production applications running reasoning-heavy workloads, those costs compound fast. A single complex query might burn through thousands of tokens. Scale that to hundreds or thousands of daily requests, and you’re looking at serious monthly bills.

Self-hosting flips that equation. Yes, you need infrastructure: GPUs, memory, compute power. But once deployed, there are no per-query charges, no rate limits, no unexpected price hikes when OpenAI adjusts its pricing model. For companies already running GPU clusters, adding Kimi K2 becomes a capital expense rather than an unpredictable operational cost.
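To make the trade-off concrete, here is a rough back-of-the-envelope sketch in Python. Every number in it (request volume, tokens per request, price per million tokens, monthly hosting cost) is a made-up placeholder, not a real price from OpenAI or any hosting provider, and the function names are ours. Swap in your own figures.

```python
def monthly_api_cost(requests_per_day, tokens_per_request,
                     usd_per_million_tokens, days=30):
    """Estimated monthly spend on a per-token API."""
    total_tokens = requests_per_day * tokens_per_request * days
    return total_tokens / 1_000_000 * usd_per_million_tokens


def break_even_requests_per_day(fixed_monthly_usd, tokens_per_request,
                                usd_per_million_tokens, days=30):
    """Daily request volume at which API spend equals a fixed hosting cost."""
    cost_per_request = tokens_per_request / 1_000_000 * usd_per_million_tokens
    return fixed_monthly_usd / (cost_per_request * days)


# Hypothetical inputs for illustration only; none of these are real prices.
api_bill = monthly_api_cost(
    requests_per_day=1_000,
    tokens_per_request=5_000,
    usd_per_million_tokens=10.0,
)
threshold = break_even_requests_per_day(
    fixed_monthly_usd=20_000,
    tokens_per_request=5_000,
    usd_per_million_tokens=10.0,
)

print(f"API bill: ${api_bill:,.0f}/month")
print(f"Break-even: {threshold:,.0f} requests/day")
```

With these placeholder inputs the API bill is about $1,500 a month, and self-hosting at a fixed $20,000 a month only breaks even at roughly 13,000 requests a day. The takeaway is the shape of the calculation rather than the numbers: self-hosting pays off at high, steady volume, which is why it suits companies that already run GPU clusters.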

Beyond cost, there’s control. Open weights mean you can fine-tune the model for your specific domain. Need reasoning specialized for your industry? Train it. Want to optimize for your use case? Modify it. Concerned about data privacy? Keep everything on-premises. Commercial APIs give you far less room on all three counts.

This is what open-source AI is supposed to be—not just smaller models for hobbyists, but genuinely competitive alternatives to commercial offerings.

The Reality Check: Can You Actually Run This?

Let’s be honest: democratizing AI doesn’t mean much if only hyperscalers can afford to run it. Trillion-parameter models require serious hardware. We’re talking multiple high-end GPUs, substantial RAM, enterprise-grade infrastructure. Individual developers aren’t spinning this up on their gaming rigs.
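How serious? A quick estimate helps. The sketch below counts only the memory needed to hold the weights, using parameter count times bytes per parameter. It ignores the KV cache, activations, and framework overhead, so real requirements are higher. The 80 GB per-GPU figure is an assumption chosen for illustration, and the function names are ours.

```python
import math


def weight_memory_gb(num_params, bits_per_param):
    """Memory to hold the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def min_gpus_for_weights(num_params, bits_per_param, gpu_memory_gb):
    """Lower bound on GPU count: weights only, no cache or activations."""
    return math.ceil(weight_memory_gb(num_params, bits_per_param) / gpu_memory_gb)


ONE_TRILLION = 1_000_000_000_000

for bits in (16, 8, 4):
    gb = weight_memory_gb(ONE_TRILLION, bits)
    gpus = min_gpus_for_weights(ONE_TRILLION, bits, gpu_memory_gb=80)  # assumed GPU size
    print(f"{bits}-bit weights: {gb:,.0f} GB, at least {gpus} GPUs at 80 GB each")
```

At 16 bits per parameter, a trillion parameters is 2,000 GB of weights, or at least 25 such GPUs before you serve a single token. Even at 4 bits it is 500 GB. That is the gap between a gaming rig and a cluster.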

Who can run Kimi K2 right now? Large companies with existing GPU clusters. Research institutions with compute budgets. Cloud providers who’ll offer hosted versions. Who can’t? Most individual developers and bootstrapped startups without serious hardware investments.

But this is still progress. The open-source community has a track record of making the impossible practical. Expect quantized versions that trade some performance for dramatically reduced resource requirements. Watch for cloud services offering Kimi K2 hosting at competitive rates. The tools will emerge.
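For readers who haven’t seen quantization up close, here is a toy sketch of the idea. It uses plain symmetric uniform quantization on a handful of made-up weight values. It is not the scheme Moonshot or any particular library uses; production methods are considerably more sophisticated. It only shows where the trade-off comes from: fewer bits per weight means coarser rounding.

```python
def quantize(values, bits):
    """Symmetric uniform quantization of floats to signed integer codes."""
    qmax = 2 ** (bits - 1) - 1                      # 127 for 8 bits, 7 for 4 bits
    largest = max(abs(v) for v in values) or 1.0    # avoid dividing by zero
    scale = largest / qmax
    codes = [round(v / scale) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate float values."""
    return [c * scale for c in codes]


weights = [0.82, -0.41, 0.07, -1.27, 0.33]          # made-up example values

for bits in (8, 4):
    codes, scale = quantize(weights, bits)
    restored = dequantize(codes, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print(f"{bits}-bit: codes={codes}, worst-case error={worst:.4f}")
```

Each weight is stored as a small integer plus one shared scale factor, so storage drops roughly in proportion to the bit width. The cost is the rounding error, which grows as the bit width shrinks, and that is the "some performance" being traded away.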

The important shift is this: the model exists, the weights are available, and the community can build around it. That wasn’t true yesterday.

The Bigger Picture: Open Versus Closed AI

There’s a strategic dimension here that goes beyond technical specs. The leading US AI labs (OpenAI, Anthropic, Google) largely keep their flagship models closed. They cite safety, responsible development, and business sustainability. Chinese companies (Moonshot, DeepSeek, Baidu) are pushing open-source aggressively.

It’s not hard to see the calculation. Open-source AI challenges US commercial dominance. Why pay OpenAI when you can run comparable models yourself? Why depend on Anthropic when alternatives exist? For China, open-source is both a philosophical position and a strategic weapon.

Developers benefit from this competition. More choices mean less centralization. The threat of open-source alternatives keeps commercial providers honest on pricing and features. The AI cold war is making strange bedfellows, but the result is better technology and more accessible tools.

Yann LeCun at Meta has been vocal: open-source AI is essential for innovation and safety. Sam Altman at OpenAI argues the opposite: closed development enables responsible AI. Kimi K2 puts weight behind the open-source camp—and does it at a scale that can’t be dismissed.

What Happens Next

The developer community is already evaluating Kimi K2. Watch GitHub for repo activity and documentation quality. Benchmarks comparing performance to o1 and Claude Opus will emerge. The real test is whether Kimi K2 delivers on its promise: reasoning capabilities matching closed models.

Deployment solutions will follow. Quantized versions, cloud hosting options, community tools for easier setup. The gap between “technically available” and “practically usable” will close, as it always does with successful open-source projects.

Strategic questions remain. Will OpenAI respond by opening o1? Will more Chinese companies follow Moonshot’s lead? Can the community build enough tooling to make trillion-parameter reasoning accessible beyond hyperscalers?

For now, Kimi K2 represents a clear milestone: reasoning AI is no longer exclusively commercial territory. The walls are coming down. For developers building the next generation of AI-powered applications, that matters.