Apple has opened the source code for key components of Private Cloud Compute (PCC), the secure cloud infrastructure powering Apple Intelligence features. This marks a sharp break from Apple’s traditionally closed approach: security researchers can now independently audit code that processes user AI requests in the cloud. Instead of taking Apple’s privacy claims on trust, researchers can check them against the implementation.

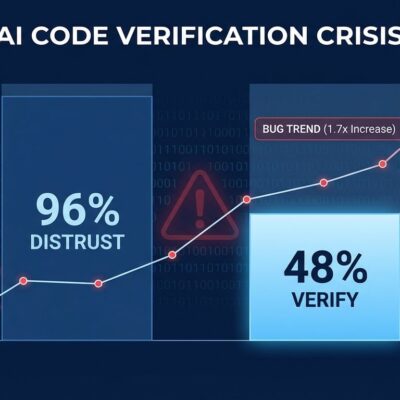

This is unprecedented transparency for production AI infrastructure from a major tech company. Google, Microsoft, and OpenAI offer no comparable independent verification of their AI systems. The move could set a new industry standard where privacy claims must be verifiable, not just marketed.

From Trust Through Reputation to Trust Through Verification

PCC’s open-source release fundamentally changes how we evaluate AI privacy. For years, cloud AI privacy has operated on a “trust us” model—companies publish privacy policies, promise not to log data, and users either believe them or don’t. Apple is shifting to “verify us” instead.

Security researchers can now inspect the published code, examine the cryptographic attestation protocol, and check for themselves whether user data is logged or stored. The architecture combines three mechanisms: secure enclaves (hardware-isolated execution environments), cryptographic attestation (the device verifies the server’s software and configuration before sending data), and stateless processing (request data is held only in memory and discarded once processing finishes). Each of these claims can now be tested against the code rather than accepted on faith.

Meanwhile, every other major AI system requires trust. ChatGPT, Gemini, and Copilot users must trust the company’s privacy policy. With PCC, independent verification is possible. This creates competitive pressure: competitors either match Apple’s transparency or accept being positioned as less private.

How Private Cloud Compute Actually Works

PCC is built on a “stateless computation” model where requests are processed entirely in memory within secure enclaves, then cryptographically erased. Before any data is sent, the user’s device verifies the cloud server is running the exact, audited PCC code through cryptographic attestation.

Here’s the flow:

- User device initiates AI request and requests attestation from PCC server

- Server provides cryptographic proof of hardware identity (secure enclave), software identity (exact code version), and security configuration (no debugging/logging)

- Device verifies proof against public keys and transparency logs

- If valid, device sends encrypted request; if invalid, falls back to on-device processing

- Secure enclave processes the request and immediately wipes all working memory
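The decision logic in the flow above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Apple’s protocol: the names `Attestation`, `make_attestation`, `verify_attestation`, and `route_request` are hypothetical, and an HMAC with a shared demo key stands in for the hardware-rooted public-key signatures a real system would use.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass

# ASSUMPTION: a shared demo key stands in for a hardware-rooted signing key.
# A real attestation scheme would use asymmetric signatures, not a shared secret.
HARDWARE_KEY = b"demo-hardware-key"


@dataclass(frozen=True)
class Attestation:
    hardware_id: str      # identity of the secure hardware
    software_digest: str  # SHA-256 of the exact software image
    debug_enabled: bool   # security configuration flag
    signature: str        # MAC over the three fields above


def _payload(hardware_id: str, software_digest: str, debug_enabled: bool) -> bytes:
    # Canonical byte encoding of the fields covered by the signature.
    return json.dumps([hardware_id, software_digest, debug_enabled]).encode()


def make_attestation(hardware_id, software_image: bytes, debug_enabled, key=HARDWARE_KEY):
    """Server side: measure the running image and sign the measurement."""
    digest = hashlib.sha256(software_image).hexdigest()
    body = _payload(hardware_id, digest, debug_enabled)
    sig = hmac.new(key, body, hashlib.sha256).hexdigest()
    return Attestation(hardware_id, digest, debug_enabled, sig)


def verify_attestation(att: Attestation, logged_digests: set, key=HARDWARE_KEY) -> bool:
    """Device side: check signature, logged software identity, and configuration."""
    body = _payload(att.hardware_id, att.software_digest, att.debug_enabled)
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, att.signature):
        return False  # proof was not produced by trusted hardware
    if att.software_digest not in logged_digests:
        return False  # software version does not appear in the transparency log
    return not att.debug_enabled  # reject servers with debugging switched on


def route_request(att: Attestation, logged_digests: set) -> str:
    """Send to the cloud only if attestation passes; otherwise stay on device."""
    return "cloud" if verify_attestation(att, logged_digests) else "on-device"
```

For example, with `log = {hashlib.sha256(b"release-1.0").hexdigest()}`, an attestation built from `b"release-1.0"` with debugging off routes to `"cloud"`, while one built from any other image, or with `debug_enabled=True`, routes to `"on-device"`.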

No persistent storage. No logging. No backdoors. And now researchers can verify this in the code rather than taking Apple’s word for it.

This architecture isn’t theoretical: it is running in production behind Apple Intelligence. It offers a blueprint for how privacy-preserving AI can work at scale, and organizations building sensitive AI systems can learn from or adapt these patterns.

The Competitive Pressure This Creates

Apple’s move puts Google, Microsoft, and OpenAI in an uncomfortable position. If independent researchers verify that Apple’s AI genuinely doesn’t log or store user data, what do competitors say when asked the same question?

The competitive landscape now looks like this:

- Google Cloud AI encrypts data, but Google’s systems can still access it. There is no attestation and no independent verification.

- Microsoft Azure OpenAI is in a similar position. Microsoft states that customer prompts are not used to train its foundation models, but that is a policy commitment rather than something outsiders can verify.

- Anthropic Claude has privacy-focused policies but no hardware attestation or verifiable guarantees.

- Apple PCC can now be independently examined through its published source code.

Competitors face a choice: match Apple’s transparency (expensive and complex) or accept being seen as less private. For enterprise customers and privacy-conscious users, that perception matters. Organizations processing sensitive data—healthcare, finance, legal—need demonstrable privacy guarantees, not just policy statements.

This could accelerate the shift toward “verifiable AI” as a market differentiator and eventually a compliance requirement. The irony is rich: Apple, traditionally secretive, is now more transparent than Google (the open source champion) on AI infrastructure.

What Developers Can Learn from This

Even if you’re not using Apple hardware, PCC’s architecture offers valuable lessons. The principles—hardware root of trust, minimal attack surface, cryptographic attestation, transparency logs—apply to any secure system design.

Key architectural patterns worth studying:

- Hardware root of trust: security guarantees anchored in tamper-resistant hardware, not just software policies.

- Stateless processing: no persistent data storage occurs.

- Verifiable builds: the public can confirm that production systems match audited code.

- Transparency logs: any system changes are cryptographically recorded.
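To make the transparency-log pattern concrete, here is a minimal sketch of an append-only log of release digests in Python. It is an assumption-laden simplification: production transparency logs typically use Merkle trees with signed checkpoints, whereas this uses a plain hash chain, and the names `TransparencyLog`, `entry_hash`, and `GENESIS` are hypothetical. The point it illustrates is that each entry commits to everything before it, so rewriting history is detectable.

```python
import hashlib
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def entry_hash(prev_hash: str, release_digest: str) -> str:
    """Chain an entry to its predecessor so earlier entries cannot be swapped."""
    return hashlib.sha256((prev_hash + release_digest).encode()).hexdigest()


@dataclass
class TransparencyLog:
    # Each entry is a (release_digest, chained_hash) pair.
    entries: list = field(default_factory=list)

    def head(self) -> str:
        """Return the chained hash of the latest entry, or GENESIS if empty."""
        return self.entries[-1][1] if self.entries else GENESIS

    def append(self, release_image: bytes) -> str:
        """Record a new release and return its digest."""
        digest = hashlib.sha256(release_image).hexdigest()
        self.entries.append((digest, entry_hash(self.head(), digest)))
        return digest

    def is_consistent(self) -> bool:
        """Recompute the chain from the start; any edited entry breaks it."""
        prev = GENESIS
        for digest, chained in self.entries:
            if entry_hash(prev, digest) != chained:
                return False
            prev = chained
        return True

    def contains(self, release_image: bytes) -> bool:
        """Check whether a given build was ever publicly logged."""
        digest = hashlib.sha256(release_image).hexdigest()
        return any(d == digest for d, _ in self.entries)
```

A client that only accepts software whose digest passes `contains`, and that periodically checks `is_consistent`, gets a basic form of the verifiable-builds guarantee: an operator cannot quietly deploy an unlogged build or rewrite an old entry without the mismatch showing up.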

These techniques solve real problems many organizations face: how to process sensitive data in the cloud while maintaining zero-trust security. The patterns are transferable even if you can’t replicate Apple’s exact hardware setup.

However, let’s be clear: open-sourcing the code doesn’t mean perfect security. The codebase still needs thorough review, which is specialist work that takes time. Hardware vulnerabilities can bypass software protections, as Spectre and Meltdown showed. Some trust is still required that production systems match the published code, though attestation and transparency logs narrow that gap. Healthy skepticism remains important: open source enables verification, but verification still takes effort and expertise.

Key Takeaways

- Apple’s PCC open source release shifts AI privacy from a “trust us” model to “verify us”—security researchers can now independently audit the code rather than taking claims on faith

- The architecture demonstrates that privacy-preserving AI at scale is achievable: secure enclaves, cryptographic attestation, stateless processing, and now verifiable implementation

- Competitors face pressure to match transparency or accept being positioned as less private, especially for enterprise customers requiring demonstrable privacy guarantees

- The architectural principles—hardware root of trust, stateless processing, verifiable builds, transparency logs—are applicable to any secure system design, not just Apple’s ecosystem

- Open source doesn’t guarantee perfect security, but it enables independent verification—the security community’s real work is just beginning