If you’ve ever waited seconds for a Python CLI tool to start, you’ve felt the pain of eager imports. Every time you write import pandas, Python immediately loads the entire library—whether you actually use it or not. This becomes especially problematic when building applications with heavy dependencies.

The Python Steering Council has accepted PEP 810, introducing explicit lazy imports as a first-class language feature targeted at Python 3.15 (expected in 2026). As a result, you’ll soon be able to defer loading heavy modules until you actually need them, cutting startup time by as much as 50-80% for runs that never touch those modules.

The Problem: Eager Imports Waste Time

Python’s import system is eager by default. When you import a module, it immediately loads into memory. For applications with heavy libraries, those milliseconds add up fast. Furthermore, this happens regardless of whether you actually use the imported code in your execution path.

    import argparse
    import pandas as pd  # Takes ~200ms to load
    import numpy as np  # Takes ~100ms to load
    import requests

    def fetch_data(url):
        return requests.get(url).json()

    # User runs: python tool.py fetch https://api.example.com
    # Problem: pandas and numpy load anyway, wasting 300ms

Your user just wants JSON data, but they’re waiting for pandas and numpy. For CLI tools and serverless functions, this is pure waste. Moreover, it impacts cold start performance in cloud environments.
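To see what an eager import costs on your own machine, you can time a fresh interpreter with and without it. The following is a minimal sketch, not a rigorous benchmark: the helper names `time_interpreter` and `import_overhead` are made up for this illustration, and `json` is only a stand-in for a heavy library such as pandas.

```python
import subprocess
import sys
import time


def time_interpreter(code: str, repeats: int = 3) -> float:
    """Return the best wall-clock time (seconds) to run `code` in a fresh interpreter."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        subprocess.run([sys.executable, "-c", code], check=True)
        best = min(best, time.perf_counter() - start)
    return best


def import_overhead(module_name: str) -> float:
    """Estimate the extra startup time (seconds) caused by importing `module_name`."""
    baseline = time_interpreter("pass")
    with_import = time_interpreter(f"import {module_name}")
    return with_import - baseline


if __name__ == "__main__":
    # "json" is a stand-in; substitute pandas or numpy if they are installed.
    print(f"json adds roughly {import_overhead('json') * 1000:.1f} ms")
```

Each run starts a new process, so the module cache in `sys.modules` never hides the cost. For small modules the difference can be lost in noise.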

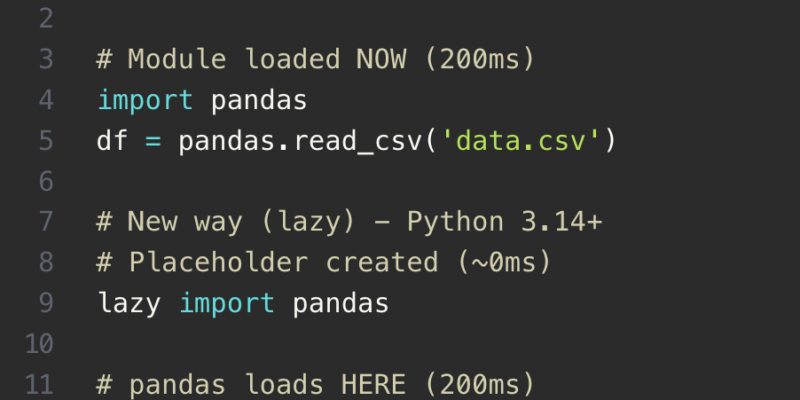

Lazy Imports: Load Only When Needed

Lazy imports create a lightweight placeholder instead of loading the full module. The module only loads when actually accessed. This means you can import everything you need upfront without the performance penalty.

    # Old way (eager)
    # Module loaded NOW (200ms)
    import pandas
    df = pandas.read_csv('data.csv')

    # New way (lazy) - Python 3.15+
    # Placeholder created (~0ms)
    lazy import pandas
    # pandas loads HERE (200ms)
    df = pandas.read_csv('data.csv')

The total load time remains the same. However, it only happens if that code path executes. If you never call the pandas code, pandas never loads.
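You can observe the same "only if the code path runs" behaviour on current Python with a function-level import. This is a different mechanism from PEP 810 (the import statement sits inside the function rather than at the top of the file), so treat it as an illustration of the effect only. `statistics` stands in for a heavy dependency, and `summarize` and `is_loaded` are hypothetical helper names.

```python
import sys


def summarize(values):
    # Function-level import: the module is loaded the first time this runs,
    # not when the enclosing file is imported.
    import statistics  # stdlib stand-in for a heavy dependency such as pandas

    return statistics.mean(values)


def is_loaded(name: str) -> bool:
    """Report whether a module has already been imported in this process."""
    return name in sys.modules


if __name__ == "__main__":
    print("before:", is_loaded("statistics"))
    print("mean:", summarize([1, 2, 3]))
    print("after:", is_loaded("statistics"))
```

If nothing else in the process has imported `statistics`, the first check reports that it is not loaded, and it only appears in `sys.modules` once `summarize` has been called.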

PEP 810 Syntax

PEP 810 makes lazy imports explicit with clean syntax. The lazy keyword comes first and clearly signals your intent:

    # Single lazy import
    lazy import pandas

    # Multiple lazy imports
    lazy import numpy, scipy

    # From imports
    lazy from tensorflow import keras

    # With aliasing
    lazy import matplotlib.pyplot as plt

Real Performance Gains

The performance impact varies by use case, but the savings can be dramatic:

CLI Tools: A data processing tool saw startup drop from 850ms to 120ms (86% improvement) when running commands that didn’t need pandas/numpy. This significantly improves user experience.

Serverless Functions: AWS Lambda cold starts improved by 150-400ms. Because Lambda bills by execution duration (metered in 1ms increments), time saved on billed initialization directly cuts costs. Additionally, faster cold starts mean better responsiveness for end users.

Web Applications: Development dependencies can be lazy imported. Consequently, you save 100-500ms in production startup time.

When to Use Lazy Imports

Lazy imports aren’t a silver bullet. However, they excel in specific scenarios:

Good candidates:

- Heavy libraries: pandas, numpy, tensorflow, torch (>100ms load time)

- Optional features: debug tools, plugins, development dependencies

- Conditional code paths: CLI subcommands, feature flags

- Libraries used in rare error handling or edge cases

Keep these eager:

- Core functionality that always executes

- Lightweight standard library modules (json, os, sys)

- Modules needed immediately after import

Rule of thumb: If it’s heavy and conditionally used, make it lazy. In contrast, if it’s always needed, keep it eager.

Use It Now (Before Python 3.15)

Can’t wait for Python 3.15? You can implement lazy imports today using importlib.util.LazyLoader:

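    import importlib.util
    import sys

    def lazy_import(name):
        # Reuse the module if something has already imported it
        if name in sys.modules:
            return sys.modules[name]
        spec = importlib.util.find_spec(name)
        if spec is None:
            raise ModuleNotFoundError(f"No module named {name!r}")
        # Wrap the real loader so execution is deferred to first attribute access
        loader = importlib.util.LazyLoader(spec.loader)
        spec.loader = loader
        module = importlib.util.module_from_spec(spec)
        sys.modules[name] = module
        loader.exec_module(module)  # Sets up the deferred load; nothing runs yet
        return module

    # Usage
    pandas = lazy_import('pandas')
    df = pandas.read_csv('data.csv')  # Loads here

Once Python 3.15 arrives, you can simply replace this with lazy import pandas. Meanwhile, this workaround works on all currently supported Python versions.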
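Another forward-compatible option is worth knowing about. As far as I can tell from the PEP text, PEP 810 also describes a module-level `__lazy_modules__` list, whose entries are treated as if they had been imported with the lazy keyword. Interpreters without PEP 810 see an ordinary list and import eagerly, so the same file runs everywhere. The sketch below assumes that behaviour, so check it against the final PEP before relying on it. `to_json` is a hypothetical helper and `json` is a stand-in for a heavier module.

```python
# Assumption: PEP 810 treats names listed in __lazy_modules__ as if they had
# been imported with the `lazy` keyword. Interpreters that predate the PEP
# just see a plain list and import eagerly.
__lazy_modules__ = ["json"]

import json  # lazy where PEP 810 is implemented, eager elsewhere


def to_json(payload: dict) -> str:
    # First real use of `json`; under PEP 810 this is where it would load.
    return json.dumps(payload)


if __name__ == "__main__":
    print(to_json({"hello": "world"}))
```

The appeal of this form is that there is no new syntax in the file, so older interpreters do not reject it with a SyntaxError.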

Common Gotchas

Lazy imports introduce some complexity you need to manage:

Runtime Errors: Eager imports fail at startup, which is easy to catch. However, lazy imports fail when accessed, potentially deep in your code execution. Therefore, ensure test coverage for all lazy import code paths.
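One way to recover some of that fail-fast behaviour is a cheap startup check that confirms each lazily imported module can be found, without actually loading it. This is a sketch of one possible approach, not something PEP 810 prescribes. `missing_modules` is a hypothetical helper, and it only proves the module is installed, not that it will import cleanly.

```python
import importlib.util


def missing_modules(names):
    """Return the names that cannot be located, without importing any of them."""
    missing = []
    for name in names:
        # find_spec locates a top-level module without executing it.
        # For dotted names it imports the parent package first, so this
        # sketch is intended for top-level names only.
        if importlib.util.find_spec(name) is None:
            missing.append(name)
    return missing


if __name__ == "__main__":
    # "no_such_module_for_demo" is a deliberately bogus name.
    print(missing_modules(["json", "no_such_module_for_demo"]))
```

Running a check like this at startup or in CI turns a missing dependency back into an early, obvious failure while keeping the import itself deferred.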

First-Use Latency: Instead of slow startup, users experience a delay the first time they use a lazy-loaded feature. For some applications, this tradeoff works well. For others, it may degrade user experience.
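To make the first-use cost visible, you can time the first and second attribute access on a lazily loaded module. The sketch below uses the LazyLoader recipe from the importlib documentation and the small stdlib module `colorsys` as a stand-in for a heavy dependency. `time_access` is a hypothetical helper, and the numbers will vary by machine.

```python
import importlib.util
import sys
import time


def lazy_import(name):
    """LazyLoader recipe from the importlib documentation."""
    spec = importlib.util.find_spec(name)
    loader = importlib.util.LazyLoader(spec.loader)
    spec.loader = loader
    module = importlib.util.module_from_spec(spec)
    sys.modules[name] = module
    loader.exec_module(module)
    return module


def time_access(module, attribute):
    """Return the seconds taken to look up `attribute` on `module`."""
    start = time.perf_counter()
    getattr(module, attribute)
    return time.perf_counter() - start


if __name__ == "__main__":
    sys.modules.pop("colorsys", None)  # make sure we start from an unloaded state
    colorsys = lazy_import("colorsys")
    first = time_access(colorsys, "rgb_to_hsv")   # triggers the real load
    second = time_access(colorsys, "rgb_to_hsv")  # module is already loaded
    print(f"first access: {first * 1e6:.0f} us, second access: {second * 1e6:.0f} us")
```

With a genuinely heavy library the first access absorbs the whole import time, which is the delay your users would notice.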

Don’t Overdo It: Making every import lazy adds unnecessary complexity. First, profile your imports using python -X importtime script.py. Then, identify the actual slow imports worth optimizing.
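The importtime report is long, so it helps to sort it. Here is a rough sketch that runs the profiler in a subprocess and returns the imports with the largest cumulative time. `slowest_imports` is a hypothetical helper, and the parsing assumes the usual "import time: self [us] | cumulative | imported package" layout written to stderr.

```python
import subprocess
import sys


def slowest_imports(module_name: str, top: int = 5):
    """Run `python -X importtime` in a subprocess and return the slowest imports.

    Returns a list of (cumulative_microseconds, imported_name) tuples,
    largest first.
    """
    result = subprocess.run(
        [sys.executable, "-X", "importtime", "-c", f"import {module_name}"],
        capture_output=True,
        text=True,
        check=True,
    )
    rows = []
    # The report goes to stderr, one line per import.
    for line in result.stderr.splitlines():
        if not line.startswith("import time:"):
            continue
        fields = line[len("import time:"):].split("|")
        if len(fields) != 3:
            continue
        try:
            cumulative = int(fields[1])
        except ValueError:
            continue  # header row, not a measurement
        rows.append((cumulative, fields[2].strip()))
    return sorted(rows, reverse=True)[:top]


if __name__ == "__main__":
    # "json" is a stand-in; pass the name of your own heavy dependency.
    for micros, name in slowest_imports("json"):
        print(f"{micros / 1000:8.1f} ms  {name}")
```

Cumulative time includes everything a module pulls in, so the top entries are usually the packages worth deferring.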

Key Takeaways

Python lazy imports solve real performance problems for applications with conditional dependencies. With PEP 810 accepted and targeted at Python 3.15, they’re becoming a standard feature.

Action items:

- Profile your import times using python -X importtime your_script.py

- Make heavy, conditional dependencies lazy (pandas, numpy, tensorflow)

- Keep core functionality eager to catch errors early

- Use the importlib.util workaround if you need it now

- Test all code paths that use lazy imports

For CLI tools and serverless functions, lazy imports deliver dramatic startup improvements. As a result, your users get faster, more responsive applications. Use them strategically where they provide real value.