Microsoft is relaunching Recall, its controversial AI feature for Windows 11, after pulling it in June 2024 amid a major security backlash. Recall continuously captures screenshots of everything users do on their PC and uses on-device AI to turn them into a searchable “photographic memory.” Security researchers quickly found that the original implementation stored this captured data in an unencrypted SQLite database that any malware running as the user could read. Five months later, Microsoft’s redesigned version ships with mandatory Windows Hello authentication, encrypted storage, and opt-in defaults. Developers, however, remain skeptical that constant screenshot capture can ever be truly safe.

This is a high-profile case study in how NOT to launch an AI feature. Microsoft’s privacy failures and subsequent redesign offer critical lessons for any developer building AI-powered tools.

The Original Security Disaster

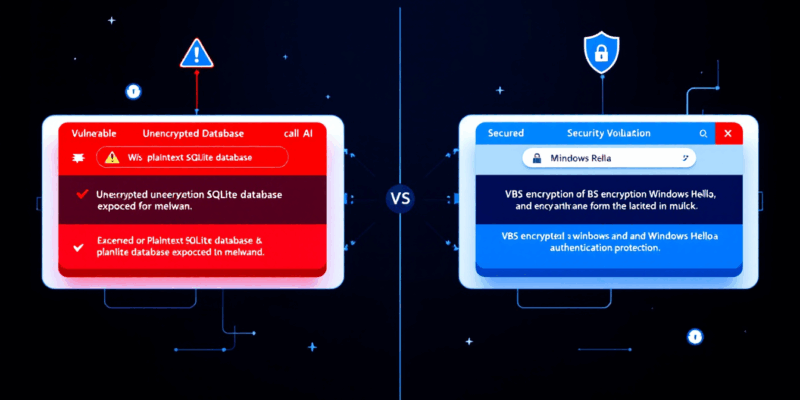

The original Recall, slated to ship with the first Copilot+ PCs in June 2024, was a security catastrophe. Researchers discovered that its captured data sat in a plaintext SQLite database at a predictable file path, readable by any malware running with ordinary user-level permissions. No encryption. No authentication. Just a few simple SQL queries to harvest your entire digital life.
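To see why “a few simple SQL queries” is not an exaggeration, here is a minimal Python sketch using the standard-library `sqlite3` module. The table name `captures` and its columns are hypothetical stand-ins, not Recall’s actual schema, and the database is built in memory rather than opened from Recall’s on-disk path. The point is only that plaintext rows come back with no key, prompt, or elevation.

```python
import sqlite3

def build_demo_db() -> sqlite3.Connection:
    # Hypothetical schema for illustration only; not Recall's real table layout.
    conn = sqlite3.connect(":memory:")  # an attacker would open the file on disk instead
    conn.execute("CREATE TABLE captures (ts TEXT, app TEXT, ocr_text TEXT)")
    conn.executemany(
        "INSERT INTO captures VALUES (?, ?, ?)",
        [
            ("2024-06-01T09:00", "Browser", "Bank login: user alice"),
            ("2024-06-01T09:05", "Chat", "the wifi password is hunter2"),
            ("2024-06-01T09:10", "Editor", "quarterly report draft"),
        ],
    )
    return conn

def harvest(conn: sqlite3.Connection, keyword: str) -> list[tuple[str, str]]:
    # No decryption key and no authentication prompt: a plain LIKE query is enough.
    return conn.execute(
        "SELECT ts, ocr_text FROM captures WHERE ocr_text LIKE ? ORDER BY ts",
        (f"%{keyword}%",),
    ).fetchall()

if __name__ == "__main__":
    db = build_demo_db()
    print(harvest(db, "password"))
```

Running it prints the single chat row containing the word “password.”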

Security researcher Kevin Beaumont didn’t mince words: “The original Recall implementation was a security disaster. Every piece of malware, every attacker with local access, could harvest your entire digital life in minutes.” Proof-of-concept extraction tools followed soon after the announcement, and Ars Technica’s security analysis documented how trivial exploitation was.

This wasn’t a subtle vulnerability—it was a fundamental architectural failure suggesting Microsoft shipped the feature without basic threat modeling. For developers, it’s a stark reminder that AI capabilities mean nothing if security fundamentals are ignored.

What Changed in the Redesign

Microsoft’s November 2024 redesign addresses the most egregious security flaws but doesn’t eliminate fundamental privacy concerns. Recall now requires Windows Hello authentication (biometric or PIN) before anyone can access screenshots. The database is now encrypted, with snapshot processing isolated inside VBS (Virtualization-Based Security) Enclaves. Screenshots are still captured continuously, but they are stored encrypted, and the feature is now off by default: users must opt in rather than opt out.
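The two policy changes, opt-in by default and authentication before every read, can be sketched as a small Python class. This is a hypothetical illustration, not Microsoft’s implementation: `SnapshotStore` is an invented name, `authenticate` is a placeholder callback standing in for a Windows Hello prompt, and the sketch deliberately leaves out encryption and VBS isolation.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class SnapshotStore:
    # Opt-in default: nothing is captured until the user turns the feature on.
    enabled: bool = False
    # Hypothetical stand-in for an OS prompt such as Windows Hello; denies by default.
    authenticate: Callable[[], bool] = lambda: False
    _snapshots: list[str] = field(default_factory=list, init=False, repr=False)

    def opt_in(self) -> None:
        self.enabled = True

    def capture(self, content: str) -> bool:
        if not self.enabled:
            return False  # skip: the user never agreed to capture
        # A real design would encrypt before writing; omitted in this sketch.
        self._snapshots.append(content)
        return True

    def search(self, term: str) -> list[str]:
        # Every read requires a fresh authentication check, not a cached session.
        if not self.authenticate():
            raise PermissionError("authentication required to read snapshots")
        return [s for s in self._snapshots if term in s]

if __name__ == "__main__":
    store = SnapshotStore(authenticate=lambda: True)
    print(store.capture("draft email"))  # False: not opted in yet
    store.opt_in()
    store.capture("draft email")
    print(store.search("email"))  # ['draft email']
```

The design choice worth copying is that both checks live inside the storage layer itself, so no caller can forget to apply them.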

Microsoft VP Yusuf Mehdi stated, “We heard the feedback loud and clear. The new Recall is built privacy-first with multiple layers of protection.” According to Microsoft’s official blog, the architecture now runs in protected memory regions isolated from other processes, with improved sensitive content filtering for passwords and credit cards.
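Microsoft has not published how its sensitive content filter works. As one plausible technique, a filter can look for digit runs shaped like card numbers and redact only those that pass the Luhn checksum, which reduces false positives on other long numbers such as order IDs. The sketch below is an assumption for illustration, covers card numbers only (not passwords), and its function names are hypothetical.

```python
import re

# Runs of 13-19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")

def luhn_valid(digits: str) -> bool:
    total = 0
    # Walk right to left, doubling every second digit and folding results above 9.
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0

def redact_cards(text: str) -> str:
    def replace(match: re.Match) -> str:
        digits = re.sub(r"[ -]", "", match.group())
        # Only redact candidates that pass the checksum; leave other numbers alone.
        return "[REDACTED CARD]" if luhn_valid(digits) else match.group()
    return CARD_CANDIDATE.sub(replace, text)

if __name__ == "__main__":
    print(redact_cards("Pay with 4111 1111 1111 1111 today"))
    print(redact_cards("Order 1234 5678 9012 3456"))
```

Here 4111 1111 1111 1111 is the well-known Luhn-valid test number, so it is redacted, while the order number fails the checksum and is kept.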

These are necessary improvements, but they don’t address the core question: should your operating system constantly surveil you? Encryption protects the data only while it sits on disk; the same content is exposed again whenever it is captured, displayed, or analyzed. Microsoft fixed the symptoms but not the disease.

Why Privacy Concerns Remain

Despite the redesign, security experts and developers remain deeply skeptical. The fundamental issue isn’t encryption alone; it’s that constant screenshot surveillance creates a large attack surface even when “properly” implemented. Malware can grab screen content before Recall encrypts it, keyloggers can harvest the authentication credentials that unlock it, and memory dumps can extract decrypted content.

Cryptographer Matthew Green argues: “Encrypting the database is necessary but not sufficient. The attack surface is massive—screenshots are captured before encryption, displayed after decryption, and processed by AI models that could have vulnerabilities. This is a feature designed without threat modeling.”
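Green’s point can be made concrete with a toy threat model: list the states in which captured content exists, then check which of those states a given mitigation actually protects. The threat names and data-state labels below are our own simplification of the attacks described above, not an official or Microsoft-published model.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Threat:
    name: str
    # Where the targeted content lives when the attack happens (simplified labels).
    data_state: str

@dataclass(frozen=True)
class Mitigation:
    name: str
    # The data states this mitigation actually protects.
    covers: frozenset[str]

THREATS = [
    Threat("copy the snapshot database", "at_rest"),
    Threat("grab frames before encryption", "in_capture"),
    Threat("screen-scrape decrypted results", "on_screen"),
    Threat("dump process memory during AI processing", "in_model"),
    Threat("keylog the Windows Hello PIN", "credentials"),
]

def uncovered(threats: list[Threat], mitigations: list[Mitigation]) -> list[str]:
    # Union of every state any mitigation protects; empty set if there are none.
    covered = set().union(*(m.covers for m in mitigations))
    return [t.name for t in threats if t.data_state not in covered]

if __name__ == "__main__":
    encryption_at_rest = Mitigation("encrypt database at rest", frozenset({"at_rest"}))
    for name in uncovered(THREATS, [encryption_at_rest]):
        print("still exposed:", name)
```

With only database encryption in place, four of the five threats remain uncovered, which is the “necessary but not sufficient” gap in miniature.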

The developer community echoes this skepticism. A Hacker News thread discussing Recall garnered over 850 comments, most expressing distrust even after the redesign. The consensus: technical fixes don’t address the philosophical problem of surveillance-based features.


For developers building AI features, this highlights a critical tension: innovation doesn’t excuse privacy negligence, and even “secure” implementations of fundamentally invasive features will face pushback. Some AI capabilities may simply not be worth the privacy trade-offs, however well they’re engineered.

Lessons for Developers Building AI Features

Microsoft’s Recall disaster offers clear lessons. Privacy must be architectural, not a patch. Security researchers are your allies, not obstacles. And user trust is fragile.

The contrast with Rewind.ai, a third-party macOS app with similar functionality, is instructive. Rewind built privacy in from day one with local-only processing, transparent controls, and no cloud dependencies. The difference isn’t technical sophistication; it’s philosophy. Microsoft treated privacy as a feature to add later, while Rewind treated it as a foundational requirement.

When building AI features that touch user data, design threat models before writing code. Make privacy opt-in, not opt-out. Be transparent about data handling. And listen when security researchers raise alarms, ideally before launch. Microsoft’s mistake was treating these as nice-to-haves instead of requirements.

Recall is rolling out to Windows Insiders now, with general availability expected in Q1 2026. Only Copilot+ PCs, which include a dedicated NPU (neural processing unit), can run it. Enterprise IT administrators can disable it via Group Policy, but the feature sets a precedent for “ambient AI” that constantly monitors user activity, an approach Apple, Google, and others are likely to explore. How Microsoft handles this relaunch will shape expectations for AI privacy across the industry.

Key Takeaways

- Microsoft’s original Recall, pulled in June 2024, stored captured screenshot data unencrypted where any malware could read it: a textbook failure in security architecture that forced a complete redesign.

- The November 2024 relaunch adds Windows Hello authentication, VBS-protected encryption, and opt-in defaults, but doesn’t eliminate fundamental privacy concerns about constant surveillance.

- Security experts argue that even “secure” screenshot surveillance has massive attack surfaces—malware can capture before encryption, keyloggers can steal credentials, and memory dumps can extract decrypted content.

- Developers building AI features should treat privacy as architectural foundation, not an afterthought—design threat models first, make features opt-in, and listen to security researchers before launch.

- The contrast with Rewind.ai shows privacy-first AI can work when built thoughtfully from day one, while Microsoft’s approach of adding privacy later badly damaged user trust.