Simple LLM chains work for demos but collapse in production. They can’t persist state across sessions, recover from errors, or incorporate human oversight—features real-world AI agents require. LangGraph, a graph-based framework from LangChain, fills this gap with production-ready infrastructure for stateful, multi-agent workflows. The numbers back its explosive growth: 21.3k GitHub stars, and the Octoverse 2025 report lists it among the fastest-growing repositories by contributor count. Companies like Rakuten, GitLab, and Cisco already run it in production.

Most LangGraph tutorials show toy examples. This guide focuses on what makes it production-ready: durable execution, human-in-the-loop integration, and persistent state management.

What LangGraph Is and How It Works

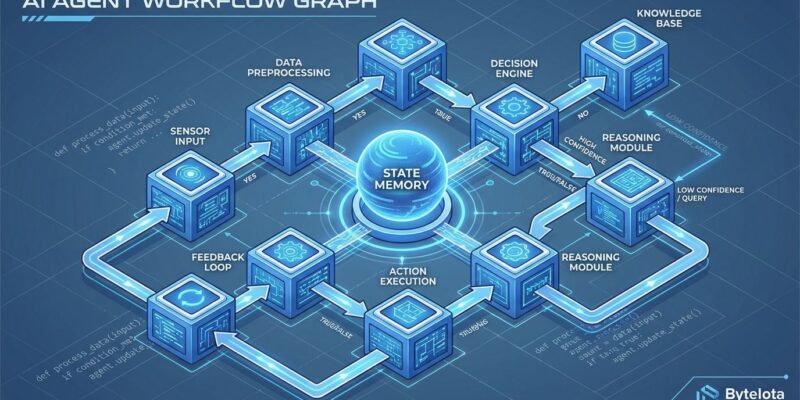

LangGraph represents AI agent workflows as directed graphs with three core building blocks: nodes (individual processing steps), edges (the connections between nodes, either fixed or conditional), and state (shared memory that every node can read and update). Think of it like a flowchart where each box performs a task and arrows decide what happens next based on conditions. Unlike LangChain’s higher-level abstractions for rapid prototyping, LangGraph provides low-level control for production systems requiring complex state management.

Here’s the simplest possible workflow:

    from typing import TypedDict

    from langgraph.graph import StateGraph, START, END

    class GraphState(TypedDict):
        message: str
        count: int

    def greeting_node(state: GraphState):
        return {"message": f"Hello! Processing item {state['count']}"}

    workflow = StateGraph(GraphState)
    workflow.add_node("greeting", greeting_node)
    workflow.add_edge(START, "greeting")
    workflow.add_edge("greeting", END)

    app = workflow.compile()

The code defines a state schema (GraphState), creates a node function that updates state, and connects nodes with edges. When the workflow runs, it flows from START → greeting node → END, carrying state through each step. This graph model enables conditional branching, cyclical workflows, and state persistence, capabilities impossible with linear LLM chains.

Production Features That Actually Matter

LangGraph provides four production-critical features most tutorials skip:

Durable execution means the agent checkpoints its state after each step, once you compile the graph with a checkpointer, and resumes from the last successful checkpoint instead of starting over. Completed steps are not repeated and their results are not lost. If your agent crashes mid-workflow, re-invoking the same thread picks up where it stopped. This matters most for long-running workflows that span hours or days.

Human-in-the-loop integration lets you inject approval steps anywhere in your graph. An agent can draft content, pause for human review, then continue based on feedback. You can inspect state at any point, approve or reject actions, and even “time-travel” to previous states to fix course. This isn’t bolted on—it’s a first-class feature.

Persistent state maintains context across sessions. Your agent remembers previous conversations, tracks progress on multi-day tasks, and stores results in Redis, PostgreSQL, or custom backends. For production deployments, you need this—in-memory state is only for testing.

Native streaming shows token-by-token LLM outputs and intermediate reasoning steps in real time. Users see what the agent is doing as it works rather than waiting 30 seconds for a final response.

Enterprise companies trust these features in production. Rakuten uses LangGraph for e-commerce workflows, GitLab for DevOps automation, Elastic for search optimization, Cisco for networking tasks, and Klarna for fintech operations. Deployment options include fully managed cloud, hybrid (runs in your VPC), or self-hosted on Kubernetes.

Building a Multi-Agent Workflow

A practical example demonstrates how LangGraph’s features connect. Imagine a research agent that searches the web, analyzes results, asks for human input, and continues based on feedback.

The workflow has four nodes: a search node calls a web search API and adds results to state; a processing node analyzes those results and extracts insights; a human review node pauses execution and asks “Does this look good?”; and a continuation node proceeds differently based on approval. Conditional routing handles the decision logic.

Here’s the pattern:

    def should_continue(state):
        if state["human_approved"]:
            return "finalize"
        else:
            return "search_again"

    workflow.add_conditional_edges(
        "human_review",
        should_continue,
        {
            "finalize": "final_output",
            "search_again": "search_node"
        }
    )

The should_continue function checks state and returns a route label, which the mapping passed to add_conditional_edges translates into the next node name. If the human approved the results, "finalize" routes to final_output. Otherwise, "search_again" loops back to search_node. State accumulates updates as it flows through nodes: search results from node 1, analysis from node 2, human feedback from node 3. Each node reads the current state and returns a dict of updates, which LangGraph merges automatically.

This architecture enables complex workflows impossible with simple chains: multi-agent collaboration where specialized agents hand off tasks, cyclical loops for iterative refinement, and human oversight at critical decision points. The official multi-agent tutorial provides a complete implementation of this pattern.

When to Use LangGraph (and When NOT To)

LangGraph is overkill for most projects. Use it when you need complex multi-step workflows with state persistence, production agents that must recover from failures, human-in-the-loop approval processes, or workflows spanning hours or days. For anything simpler, choose a lighter alternative.

Use LangGraph for:

- Complex workflows requiring detailed state management across multiple steps

- Production systems needing fault tolerance and automatic error recovery

- Human-in-the-loop approval processes (legal reviews, content moderation)

- Multi-agent systems with conditional routing between specialized agents

- Long-running workflows that persist for hours, days, or weeks

Skip LangGraph for:

- Simple chatbots with linear conversation flows (use plain LangChain)

- Single LLM call with a prompt template (use Python + API directly)

- Quick MVPs or prototypes (the learning curve wastes time)

- Conversational agents without complex state (use AutoGen instead)

- Structured role-based task delegation (use CrewAI instead)

This honesty saves time. If you’re building a simple support chatbot, LangGraph adds unnecessary complexity. Reach for it when simpler tools can’t handle your state management, error recovery, or orchestration needs.

Common Pitfalls to Avoid

Beginners make three critical mistakes that waste hours of debugging.

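Async/await issues are the most common. LangGraph accepts both plain def and async def node functions, so the trouble comes from mixing the two styles carelessly. A graph that contains async nodes has to be run through the async entry points (ainvoke, astream), and a missing await inside a node puts a coroutine object into state instead of a value, which causes cryptic errors or silent failures. Pick one style per graph, and if you go async, await every coroutine.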

Parallel state conflicts happen when multiple nodes update the same state key without a reducer function. If two nodes run in parallel and both update state["results"], LangGraph throws an InvalidUpdateError because it doesn’t know how to merge the updates. The solution: define a reducer function that specifies how to combine values—append to list, merge dicts, take the latest value, etc.

Dead-end nodes break execution. Every node needs an incoming edge and a path to another node or to END. If you add a node but forget to connect it, the graph either fails validation or stops at that node without reaching the steps you expected, depending on the LangGraph version. Fix this by sketching your graph structure visually before coding (LangGraph Studio helps). Most people struggle to track more than 5-7 nodes mentally, so draw it out. The LangGraph cheatsheet documents these and other common gotchas.

Key Takeaways

- LangGraph solves the production AI agent gap with state persistence, error recovery, and human oversight

- It’s worth the learning curve when you need these features, but overkill for simpler use cases

- Start with the official docs and LangChain Academy’s free course

- Build incrementally—simple workflows first, add complexity gradually

- Remember: most projects don’t need this level of orchestration. Choose tools that match your actual requirements