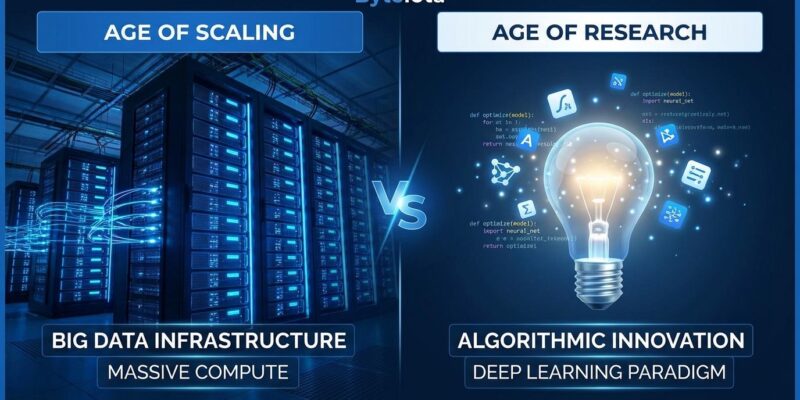

Ilya Sutskever—co-founder of OpenAI, former Chief Scientist, and current CEO of Safe Superintelligence Inc—declared in a November 2025 podcast that AI is shifting from the “age of scaling” back to an “age of research.” His argument: pre-training data is finite, scaling has hit diminishing returns, and models “generalize dramatically worse than people” despite benchmark success. The timing is provocative. While Sutskever claims scaling is over, the industry just committed $7.8 trillion to AI infrastructure through 2030. OpenAI alone pledged $1.09 trillion. Either he’s right and the industry faces a reckoning, or he’s positioning his “research-first” company against his former employer’s scaling bet.

This debate determines where AI investment flows, which approaches get funded, and what skills become valuable for developers. If the “age of research” is real, algorithmic innovation beats compute access. If scaling continues, hyperscalers win and smaller players get squeezed out. Someone’s wrong, and it’s a trillion-dollar question.

The Industry Just Bet $7.8 Trillion That Sutskever Is Wrong

While Sutskever declares scaling dead, the industry is accelerating infrastructure spending. OpenAI has committed $1.09 trillion to compute infrastructure from 2025 to 2035. That total includes $250 billion for Microsoft Azure over six years, $300 billion for Oracle over five, $38 billion for AWS over seven, and $11.9 billion for CoreWeave over five. Sam Altman’s justification: “Scaling frontier AI requires massive, reliable compute.” The company expects to end the year at an annualized revenue run rate of about $20 billion, yet it posted a $7.8 billion operating loss in the first half of 2025.

Hyperscalers are all in. Amazon, Microsoft, Google, and Meta will spend $360 billion on capex in 2025 alone—a 47% year-over-year increase. The Stargate Project, backed by SoftBank, OpenAI, and Oracle, plans $500 billion over four years. Total confirmed AI infrastructure investment through 2030: $7.8 trillion. That’s not the behavior of an industry that believes scaling is over.

Actions speak louder than words. The trillion-dollar bets suggest industry leaders believe scaling continues to deliver returns. Either they’re right and Sutskever’s timeline is too aggressive, or they’re locked into infrastructure contracts before realizing the limits. Developers need to watch what companies do, not just what former employees say.

Why Sutskever Says AI Scaling Is Dead

Sutskever offers three arguments. First, data scarcity. “Pre-training will run out of data. The data is very clearly finite.” Common Crawl and similar datasets have been exhausted. High-quality human-generated text on the internet has been mined out. Future scaling requires fundamentally different data sources—synthetic data, self-improvement loops, or new collection methods.
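To see why finite data bites, consider the widely cited rule of thumb from DeepMind’s Chinchilla study: compute-optimal training uses roughly 20 tokens per model parameter. The sketch below applies that ratio. The 20:1 figure is an approximation, and `available_tokens` is a hypothetical placeholder for your own estimate of usable high-quality text, not a measured stock.

```python
# Rough compute-optimal data requirement, assuming the ~20 tokens-per-parameter
# rule of thumb associated with the Chinchilla scaling study (an approximation).
TOKENS_PER_PARAM = 20


def compute_optimal_tokens(params: float) -> float:
    """Approximate training tokens for a compute-optimal model of `params` parameters."""
    return TOKENS_PER_PARAM * params


def data_shortfall(params: float, available_tokens: float) -> float:
    """Tokens still missing after using all `available_tokens` (0 if there is enough)."""
    return max(0.0, compute_optimal_tokens(params) - available_tokens)


if __name__ == "__main__":
    # Hypothetical stock of usable high-quality text: an illustrative placeholder only.
    available_tokens = 3e14
    for params in (1e11, 1e12, 1e13, 1e14):
        needed = compute_optimal_tokens(params)
        gap = data_shortfall(params, available_tokens)
        print(f"{params:.0e} params -> {needed:.1e} tokens needed, shortfall {gap:.1e}")
```

Because the requirement grows linearly with model size, every 10x larger model needs roughly 10x more text. Once any fixed stock is exceeded, the gap has to come from synthetic data, self-improvement loops, or new collection methods, which is the core of Sutskever’s data argument.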

Second, diminishing returns. In 2025, researchers found that advanced reasoning systems no longer deliver proportionate improvements as more computational steps are added. Training costs grow exponentially while performance gains slow. Training efficiency also stops improving past a point: beyond a “critical batch size,” larger batches yield diminishing speedups. TechCrunch reported that diminishing returns under current scaling laws are forcing labs to change course.
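A minimal sketch of why returns diminish: empirical scaling-law studies typically fit pre-training loss as a power law in compute plus an irreducible floor, L(C) = E + A * C^(-alpha). The constants below (`E`, `A`, `ALPHA`) are hypothetical, chosen only to make the shape visible; they are not fitted values for any published model.

```python
# Illustrative power-law loss curve: L(C) = E + A * C**(-ALPHA).
# E, A, and ALPHA are hypothetical constants, not fitted to any real model.
E = 1.7       # irreducible loss floor
A = 10.0      # scale coefficient
ALPHA = 0.05  # compute exponent


def loss(compute_flops: float) -> float:
    """Predicted pre-training loss for a given training compute budget (FLOPs)."""
    return E + A * compute_flops ** (-ALPHA)


def gains_per_10x(start_flops: float, steps: int) -> list[float]:
    """Loss reduction bought by each successive 10x increase in compute."""
    gains = []
    c = start_flops
    for _ in range(steps):
        gains.append(loss(c) - loss(c * 10))
        c *= 10
    return gains


if __name__ == "__main__":
    for i, g in enumerate(gains_per_10x(1e20, 4)):
        print(f"10^{20 + i} -> 10^{21 + i} FLOPs: loss drops by {g:.4f}")
```

Under this form, each successive 10x of compute buys a gain that is a constant factor 10^(-ALPHA) smaller than the last, so equal improvements require multiplicatively (exponentially) more compute and cost.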

Third, model fragility. “These models somehow just generalize dramatically worse than people. It’s a very fundamental thing.” Models score impressively on benchmarks but fail at basic real-world tasks. They excel at structured tests but collapse when faced with novel situations that require common-sense reasoning. Claude Opus 4.5’s 80.9% on SWE-bench Verified is historic, but production deployment still reveals a gap between test performance and real-world reliability.


The industry consensus is shifting. Epoch AI’s analysis suggests that pre-training is close to exhausting the stock of high-quality, publicly available web text. Few researchers still argue that simply adding more compute and data to pre-training will deliver continuous improvement. The question isn’t whether scaling has limits; it’s when those limits actually bite.

Context Matters: Sutskever Runs a Company Betting Against Scaling

Sutskever’s credentials are unquestionable. He co-invented AlexNet, the 2012 breakthrough that launched modern deep learning. He co-founded OpenAI and served as Chief Scientist from 2015 to 2024, playing an instrumental role in developing ChatGPT. In November 2023, he voted to fire Sam Altman over concerns that OpenAI prioritized commercialization over safety. He left OpenAI in May 2024.

In June 2024, he founded Safe Superintelligence Inc. with a mission to build “safe superintelligence” through research breakthroughs rather than scaling. SSI raised $2 billion at a $32 billion valuation in April 2025 in a round led by Greenoaks, with Alphabet and NVIDIA participating. The company secured a Google Cloud partnership for TPU access. Sutskever became CEO after co-founder Daniel Gross left for Meta in June 2025.

Here’s the uncomfortable question: Sutskever runs a company whose entire business model depends on “research over scaling” being correct. SSI’s $32 billion valuation is a bet that algorithmic innovation can compete with OpenAI’s trillion-dollar compute infrastructure. His message aligns perfectly with his company’s positioning. That doesn’t make him wrong—but context matters. Is this prophecy or positioning?

Follow the evidence, not the narrative. Someone who left OpenAI, the scaling champion, has an incentive to claim that their former employer’s strategy is doomed. The industry’s $7.8 trillion bet suggests it disagrees. Developers should ask what the data shows, not what people with vested interests claim.

What the Age of Research Actually Means for Developers

Sutskever divides AI history into three eras. From 2012 to 2020, the “age of research” discovered deep learning, invented transformers, and proved scale matters. From 2020 to 2025, the “age of scaling” saw everyone pursue the same strategy—just make it bigger. Scaling “sucked the air out of the room, causing everyone to do the same thing.” Now, with compute infrastructure established, he argues we’re entering a new age of research where progress comes from algorithmic breakthroughs and novel architectures, not just adding more GPUs.

The shift has practical implications for developers. If algorithmic innovation matters again, smaller teams with better ideas can compete with hyperscalers. Research skills—architecture design, training efficiency, novel approaches—become more valuable than access to compute or prompt engineering expertise. Diversity returns to AI development instead of everyone following the same scaling playbook.

However, the “age of research” doesn’t necessarily mean “no more scaling.” It might mean smarter scaling—better algorithms that make compute go further, synthetic data generation that extends training, or test-time compute that improves reasoning without larger models. The binary framing (scaling OR research) is probably wrong. The future might be both: research breakthroughs that enable new forms of scaling.
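One common form of test-time compute is to sample several answers to the same question and take a majority vote (the “self-consistency” technique). The sketch below rests on strong simplifying assumptions: each sample is independently correct with probability `p`, and all wrong answers count as a single competing answer. Under those assumptions, the probability that the majority is right is a binomial sum.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that a strict majority of n independent samples is correct.

    Assumes each sample is correct with probability p, independently, and that
    all incorrect samples agree with each other (a pessimistic simplification).
    """
    if n < 1 or n % 2 == 0:
        raise ValueError("n must be a positive odd number")
    # Sum the binomial probabilities of getting more than n/2 correct samples.
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n // 2 + 1, n + 1))


if __name__ == "__main__":
    for n in (1, 5, 25, 125):
        print(n, round(majority_vote_accuracy(0.6, n), 3))
```

With p = 0.6, a single sample is right 60% of the time and five samples raise that to about 68%. Accuracy keeps climbing with more samples, but the gain per extra sample shrinks while inference cost grows linearly, so test-time compute has diminishing returns of its own. Real model samples are correlated, so actual gains are typically smaller than this idealized model predicts.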

What Developers Should Do: Watch the Evidence, Not the Claims

The debate won’t settle through arguments; it’ll settle through evidence. GPT-5 shipped in August 2025, and the next wave of frontier models, including Gemini 4, Claude Opus 5, and GPT-5’s successors, is expected in 2026. If those models show substantial improvements over GPT-5, Gemini 3, and Claude Opus 4.5, Sutskever is wrong and scaling continues. If improvements plateau or require exponentially more compute for marginal gains, he’s vindicated. Until then, the question remains open.

Developers should hedge their bets. Build skills in both application development (using LLMs effectively) and algorithmic fundamentals (understanding how they actually work). The industry’s trillion-dollar infrastructure spending suggests leaders believe scaling continues. SSI’s $32 billion valuation suggests investors believe research matters. Both camps could be partially right.

Watch infrastructure spending trends. If hyperscaler capex accelerates, the industry disagrees with Sutskever’s timeline. If spending slows or shifts from raw compute to R&D, the pivot is happening. Watch SSI’s first model release—if “research-first” delivers competitive results without trillion-dollar infrastructure, the thesis has merit. Most importantly, watch actual model performance trajectories, not marketing claims or founder statements.

Key Takeaways

- Sutskever declares the “age of scaling” over; industry bets $7.8 trillion it continues through 2030

- His arguments (data scarcity, diminishing returns, model fragility) are echoed by TechCrunch reporting, Epoch AI’s data analysis, and other technical analyses

- He runs SSI, a company betting on “research over scaling”—context matters when evaluating claims

- The next frontier models (Gemini 4, Claude Opus 5, and GPT-5’s successors) will test whether scaling improvements continue or plateau

- Developers should hedge: build skills in both application development and algorithmic fundamentals, watch evidence not narratives