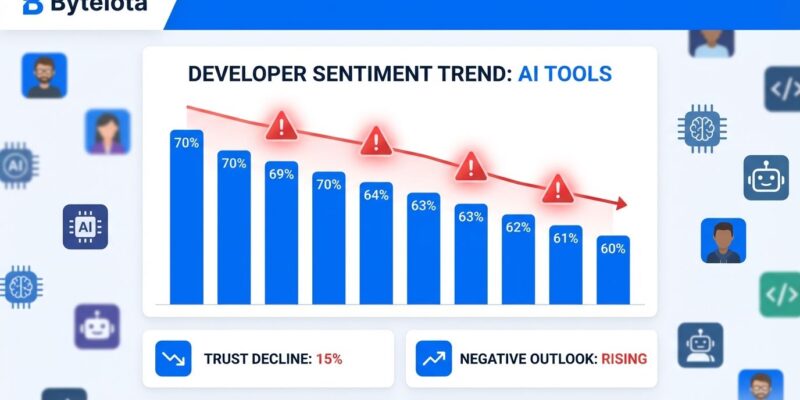

The 2025 Stack Overflow Developer Survey revealed a troubling paradox: 84% of developers now use or plan to use AI coding tools, up from 76% in 2024, yet positive sentiment has dropped from over 70% to just 60%. Even more concerning, 46% actively distrust the accuracy of AI-generated code, a sharp rise from 31% last year. Although 51% of professional developers use these tools daily, enthusiasm is eroding faster than adoption is growing.

This marks the first major sentiment decline during the AI coding boom, and the reasons are clear in the data. Developers aren’t rejecting AI tools outright—they’re adopting them out of necessity while growing increasingly frustrated with the reality of using them.

The “Almost Right” Problem: Why Developers Are Frustrated

The biggest source of friction shows up in a devastating statistic: 66% of developers struggle with AI solutions that are “almost right, but not quite.” These near-misses require extensive debugging, and 45% report that fixing AI-generated code takes more time than writing it from scratch. When your productivity tool creates more work than it eliminates, satisfaction drops fast.

Trust has collapsed alongside sentiment. Only 3% of developers “highly trust” AI outputs, while 46% actively distrust them—a 15-point jump from 2024’s 31%. This isn’t developers being cautious; it’s developers learning from experience. The promise of AI coding assistance was faster development. The reality is often slower development with questionable code quality.

Consider what this means in practice. A developer uses GitHub Copilot to generate a function. It looks right. The syntax checks out. But there’s a subtle bug, perhaps an unhandled edge case or a newly introduced security vulnerability. The developer spends 30 minutes debugging what would have taken 15 minutes to write correctly from scratch. This happens repeatedly, and trust erodes.
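A quick note on reading these numbers: the 15-point jump in distrust is a change in percentage points, not a relative increase. The sketch below computes both for the distrust and adoption figures quoted above. The helper names are hypothetical and exist only for illustration.

```python
def point_change(before: float, after: float) -> float:
    """Absolute change in percentage points."""
    return after - before


def relative_change(before: float, after: float) -> float:
    """Change relative to the starting value, in percent."""
    return (after - before) / before * 100


# Figures quoted in this article (percent of respondents).
distrust_2024, distrust_2025 = 31, 46
adoption_2024, adoption_2025 = 76, 84

for label, before, after in [
    ("distrust", distrust_2024, distrust_2025),
    ("adoption", adoption_2024, adoption_2025),
]:
    points = point_change(before, after)
    relative = relative_change(before, after)
    print(f"{label}: {points:+.0f} points, {relative:+.1f}% relative")
```

Measured this way, distrust grew by roughly 48% relative to its 2024 level, while adoption grew by roughly 11%.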

The Productivity Paradox: Slower but Feeling Faster

Here’s where perception diverges from reality. A July 2025 study revealed that experienced developers took 19% longer to complete tasks when using AI tools. However, these same developers expected to be 24% faster before the study, and believed they were 20% faster after completing it—despite being measurably slower.

This perception gap matters enormously. Developers are adopting tools they think accelerate their work, when data suggests the opposite for experienced practitioners. The tools may generate code quickly, but the subsequent review, debugging, and refactoring overhead eliminates any time savings—and then some.
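To make the gap concrete, here is a minimal sketch that applies the study’s percentages to a hypothetical 60-minute task. It assumes “X% faster” means X% less time than working without AI, which is only one possible reading of the figures. The baseline and the function name are illustrative and do not come from the study.

```python
def minutes_with_change(baseline_minutes: float, percent_change: int) -> float:
    """Task time after a percent change in duration (negative means less time)."""
    return baseline_minutes * (100 + percent_change) / 100


BASELINE = 60.0  # hypothetical task length without AI, in minutes

expected = minutes_with_change(BASELINE, -24)   # forecast before the study
perceived = minutes_with_change(BASELINE, -20)  # belief after the study
measured = minutes_with_change(BASELINE, 19)    # observed: 19% longer

gap = measured - perceived
print(f"expected {expected:.1f} min, perceived {perceived:.1f} min, measured {measured:.1f} min")
print(f"perceived vs. measured gap: {gap:.1f} min")
```

Under these assumptions, the developer believes the task took about 48 minutes when it took about 71, a gap of roughly 39% of the baseline time.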

The productivity paradox also reveals a deeper issue: AI coding tools optimize for code generation speed, not code quality or maintainability. Experienced developers spend significant time reviewing generated code for bugs, security issues, and architectural problems, but that overhead doesn’t feel like “using AI”; it feels like regular work. As a result, developers perceive the speed of generation while underestimating the cost of cleanup.

Trust Declines Steepest Among Senior Developers

The experience gap tells its own story. Overall trust in AI accuracy dropped from 40% to 29% in a single year, and the data suggest skepticism is strongest among those who understand code quality best. Context issues, where AI fails to understand broader codebase architecture and team standards, affect 41% of junior developers but 52% of senior developers.

Sentiment follows a different pattern: developers who are learning to code show 53% positive sentiment toward AI tools, while professionals report 61%. Professionals are more favorable overall, yet the more senior among them report more context problems. Taken together, this suggests AI tools excel at simple, isolated tasks but struggle with the complex, context-dependent work that experienced developers handle daily.

Senior developers recognize what AI-generated code misses: architectural consistency, security implications, performance considerations, maintainability concerns. Indeed, when you’ve debugged production incidents caused by subtle bugs, you develop healthy skepticism toward code you didn’t write yourself. AI tools haven’t earned that trust, and the data shows experienced developers know it.

Willing But Reluctant: The Forced Adoption Phenomenon

Stack Overflow captured the trend perfectly: “Developers remain willing but reluctant to use AI.” This isn’t enthusiasm—it’s resignation. The JetBrains 2025 survey found that 68% of developers expect employers to require AI proficiency, transforming these tools from optional productivity enhancers to mandatory job requirements.

FOMO drives adoption as much as genuine utility. When 84% of developers use or plan to use AI tools and most Fortune 100 companies have adopted them, individual developers feel pressure to adopt regardless of personal experience. The choice becomes “use AI tools and stay employable” rather than “evaluate whether AI tools improve my work.”

This forced adoption creates a bubble. Developers adopt tools not because they improve outcomes, but because everyone else is adopting them. Consequently, usage statistics rise while satisfaction falls, creating the exact paradox visible in the Stack Overflow data. Adoption metrics look strong, but they’re masking declining confidence in the technology’s value.

The Critical Inflection Point for AI Coding Tools

The market tells one story while developer experience tells another. AI coding tools are projected to grow from a $4-5 billion market in 2025 to $12-15 billion by 2027. Meanwhile, GitHub Copilot added 5 million users in just three months, reaching 20 million total. Ninety percent of Fortune 100 companies have adopted it. Growth looks unstoppable.

Yet the foundation is shaky. Trust is declining, not improving. Quality concerns persist: research shows AI-generated code introduces 322% more privilege escalation paths and contributes to a doubling of technical debt. The “almost right” problem remains unsolved, and experienced developers remain skeptical for good reason.

The AI coding revolution faces a critical choice: address quality issues or face backlash when enterprises start measuring actual productivity instead of adoption rates. Right now, companies are betting on AI tools based on hype and competitor pressure. If the productivity gains don’t materialize—or worse, if they turn into productivity losses—the adoption trend could reverse quickly.

Tools need to earn developer trust through quality improvements, not marketing claims. That means solving the “almost right” problem, improving context awareness, and delivering on the promised speed gains. Until then, the gap between 84% adoption and 60% positive sentiment will keep widening.

Key Takeaways

- Adoption doesn’t equal satisfaction: 84% of developers use or plan to use AI coding tools, but only 60% express positive sentiment, the first major decline in sentiment even as usage keeps growing.

- The “almost right” problem drives frustration: 66% struggle with AI solutions requiring extensive debugging, and 45% find fixing AI code takes longer than writing it manually.

- Perception diverges from reality: Developers believe AI makes them faster when data shows experienced developers are 19% slower, revealing a massive productivity perception gap.

- Trust erodes fastest among experts: senior developers report more context issues than juniors (52% vs. 41%), suggesting AI tools work better for simple tasks than for complex work.

- Forced adoption creates a bubble: With 68% expecting employer requirements, developers adopt AI tools out of necessity and FOMO rather than proven value, masking quality concerns with usage statistics.