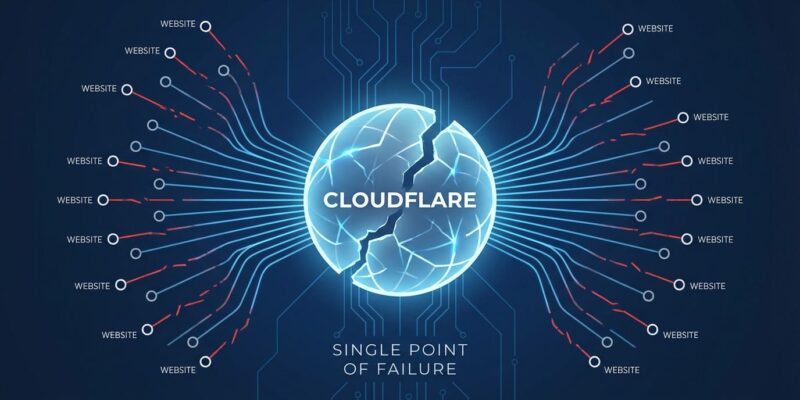

On November 18, 2025, a single database permissions change at Cloudflare triggered a four-hour global outage that took down X (Twitter), ChatGPT, Spotify, Discord, and thousands of other websites. The culprit? A configuration file that doubled in size, exceeded a hardcoded 200-feature limit, and triggered a Rust panic across Cloudflare’s entire global network. This wasn’t an isolated technical glitch: Cloudflare was the third major infrastructure provider to fail in just 30 days, following AWS (October 20) and Azure (October 29). When three providers underpinning roughly two-thirds of internet infrastructure all fail within a month, we’re witnessing a systemic problem the industry has been willfully ignoring.

Three Strikes in 30 Days: A Pattern We Can’t Ignore

Between October 20 and November 18, 2025, three catastrophic failures exposed the fragility of centralized infrastructure. AWS’s us-east-1 region went dark due to DNS and DynamoDB failures, taking Snapchat, Reddit, and Venmo offline. Nine days later, Azure’s global DNS outage hit Azure Front Door and dependent services worldwide. Then Cloudflare’s Bot Management system brought down the web’s CDN backbone for four hours.

These weren’t unrelated accidents. Technical analysts noted they “followed a shared structural pattern”: subtle internal changes cascading through control-plane primitives like DNS, routing fabrics, and bot mitigation systems into global multi-service outages. A database query behaving differently. A permissions update triggering unexpected duplicates. Trusted internal data that wasn’t validated defensively.
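To make "validated defensively" concrete, here is a minimal sketch of how a consumer of an internally generated feature file might reject an oversized update and keep running on the last known good version instead of crashing. This is not Cloudflare's code. The names `FeatureConfig`, `validate`, and `apply_update` are hypothetical, and the de-duplication step is an assumption about what a reasonable guard might do. Only the 200-feature limit comes from the incident as described above.

```python
from dataclasses import dataclass

MAX_FEATURES = 200  # the hardcoded limit mentioned in the article


@dataclass(frozen=True)
class FeatureConfig:
    """Hypothetical container for a validated feature list."""
    features: tuple[str, ...]


class ConfigRejected(Exception):
    """Raised when a candidate config fails validation."""


def validate(raw_features: list[str]) -> FeatureConfig:
    # Assumption: duplicate rows are safe to collapse. dict.fromkeys keeps
    # the first occurrence of each name and preserves order.
    unique = tuple(dict.fromkeys(raw_features))
    if len(unique) > MAX_FEATURES:
        raise ConfigRejected(
            f"{len(unique)} features exceeds limit of {MAX_FEATURES}"
        )
    return FeatureConfig(unique)


def apply_update(current: FeatureConfig, raw_features: list[str]) -> FeatureConfig:
    """Return the new config if it validates, otherwise keep the current one."""
    try:
        return validate(raw_features)
    except ConfigRejected as exc:
        # Fail safe: log and continue serving with the last known good config.
        print(f"rejected config update, keeping previous: {exc}")
        return current
```

The design choice worth noting is that internally generated data gets the same suspicion as user input: a bad file degrades to "stale config" rather than "process down".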

The uncomfortable truth: your code can be perfect, your architecture pristine, but when AWS, Azure, or Cloudflare fail, you fail anyway. No amount of individual resilience matters when the infrastructure itself is the single point of failure.

The Numbers Don’t Lie: Two-Thirds Concentration Is a Systemic Risk

As of Q3 2025, AWS commands 29% of the global cloud market, Microsoft Azure holds 20%, and Google Cloud captures 13%. Combined, these three hyperscalers control 62% of the $106.9 billion quarterly cloud infrastructure market. Add Cloudflare’s CDN dominance (64% by website usage) and the picture is stark: roughly two-thirds of digital infrastructure depends on three cloud providers plus one CDN giant.

The Cloudflare outage illustrated this concentration perfectly. When their network failed, users couldn’t even Google “Cloudflare market penetration” and read the answers, because many of the sites in the results sat behind Cloudflare. The irony is almost poetic: the infrastructure we rely on to explain infrastructure failures is itself centralized on that same infrastructure.

Economists call this “systemic risk”: the failure of one entity threatens the entire system. In finance, we regulated “too big to fail” banks after 2008. In infrastructure, we’re still pretending concentration is fine because individual uptime hovers around 99.9%. But the math is brutal: 99.9% uptime still allows roughly 8.8 hours of downtime per year, and when that downtime lands on billions of users at once, it is a catastrophic failure. Three outages in 30 days suggest that 99.9% figure might be optimistic.
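The arithmetic behind that claim is easy to check. The sketch below converts an availability figure into a downtime budget, and converts a single four-hour outage (the duration cited above) into the availability it implies over a 30-day window. The function names are illustrative, and the year is taken as 365 days.

```python
HOURS_PER_YEAR = 365 * 24  # 8760, ignoring leap years


def downtime_budget_hours(availability: float,
                          period_hours: float = HOURS_PER_YEAR) -> float:
    """Hours of downtime permitted in a period at a given availability (0 to 1)."""
    return (1.0 - availability) * period_hours


def implied_availability(outage_hours: float, period_hours: float) -> float:
    """Availability over a period, given the total hours of outage within it."""
    return 1.0 - outage_hours / period_hours


annual_budget = downtime_budget_hours(0.999)            # yearly allowance
monthly_budget = downtime_budget_hours(0.999, 30 * 24)  # 30-day allowance
after_outage = implied_availability(4.0, 30 * 24)       # one 4-hour outage

print(f"99.9% allows {annual_budget:.2f} h per year")
print(f"99.9% allows {monthly_budget * 60:.1f} min per 30 days")
print(f"one 4 h outage in 30 days implies {after_outage:.2%} availability")
```

At 99.9%, the allowance is about 8.76 hours per year, or about 43 minutes per 30 days. A single four-hour outage therefore consumes several months of budget at once.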

Why Centralization Persists Despite the Risks

Developers don’t centralize out of laziness. Hyperscalers offer unbeatable economics through scale, a superior developer experience with unified dashboards and APIs, genuine technical excellence (99.9% uptime is impressive), and massive network effects: everyone uses them because everyone uses them. The velocity advantage is real. Building on AWS beats architecting for multi-cloud when you’re racing to market.

Multi-cloud exists as an alternative, but the barriers are formidable. Over 70% of enterprises cite management complexity as their top challenge. The cost premium runs 25-40% higher than single-cloud. Finding engineers proficient in AWS, Azure, and GCP is like hunting unicorns. Cross-cloud egress fees punish you for moving data between providers.

The result: 81% of enterprises claim multi-cloud adoption, but most run one primary cloud plus secondary workloads for specific use cases, which is not true diversification. A developer on Hacker News admitted, “We got lazy” by putting DNS, CDN, security, edge workers, caching, and load balancing “into one basket.” It’s an honest assessment. The problem is that rational decisions at the individual company level create irrationality at the system level. We’ve architected ourselves into dependency.

The Uncomfortable Question: Should We Force Diversification?

Three possible paths forward exist. First, accept occasional catastrophic failures as the cost of convenience: the status quo, which perhaps 60% of enterprises will continue to follow. Second, pursue voluntary, market-driven diversification as multi-cloud tools improve: a slow march that perhaps 30% will attempt despite real economic barriers. Third, implement forced regulatory diversification that requires backup providers for critical infrastructure: the controversial option, with perhaps a 10% chance of happening, but likely the most effective.

The banking sector faced this after 2008. “Too big to fail” led to capital requirements, stress testing, and regulatory oversight. Infrastructure might need similar guardrails. Matthew Hodgson, CEO of Element, argues: “The trouble with big centralised systems is that they suffer global outages because they have single points of failure. True resilience comes from decentralisation and self-hosting.”

Here’s the opinion that will make some uncomfortable: waiting for the market to self-correct isn’t working. Three outages in 30 days, billions of users affected, and the economic incentives still favor centralization. Maybe voluntary diversification isn’t enough. Maybe we need regulatory requirements for critical infrastructure: mandated backup providers, tested failover procedures, and penalties for single-vendor dependency in regulated sectors like finance and healthcare.

It’s controversial. It adds complexity and cost. But precedent exists, and the alternative is accepting that occasionally, half the internet will simply stop working because someone changed a database permission or updated a DNS configuration.

What Developers Can Do Now

Full multi-cloud is expensive and complex, but partial diversification is achievable. Split critical services across providers: don’t put authentication, CDN, and hosting on the same vendor. Adopt a primary-plus-fallback CDN model (Cloudflare primary, Fastly fallback), which costs far less than full multi-cloud. Self-host critical landing pages, too: a static HTML page on a $5 VPS survives provider outages and keeps customers informed.
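As a rough illustration of the primary-plus-fallback idea, the sketch below tries an ordered list of base URLs and returns the first successful response. In production, CDN failover is more commonly handled at the DNS or load-balancer layer, so treat this as an application-level sketch under stated assumptions. The hostnames are placeholders, and `fetch_with_fallback` and `http_fetch` are hypothetical helper names.

```python
import urllib.request
from typing import Callable, Sequence


class AllProvidersFailed(Exception):
    """Raised when every provider in the list returned an error."""


def http_fetch(url: str, timeout: float = 3.0) -> bytes:
    # urlopen raises on connection errors and on HTTP 4xx/5xx responses.
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read()


def fetch_with_fallback(
    path: str,
    base_urls: Sequence[str],
    fetch: Callable[[str], bytes] = http_fetch,
) -> tuple[str, bytes]:
    """Try each base URL in order; return (base_url_used, body) on first success."""
    errors = []
    for base in base_urls:
        url = base.rstrip("/") + "/" + path.lstrip("/")
        try:
            return base, fetch(url)
        except Exception as exc:  # a real client would catch narrower errors
            errors.append(f"{base}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Example call (placeholder hostnames, not real endpoints):
# fetch_with_fallback("/status.html", [
#     "https://cdn-primary.example.com",
#     "https://cdn-fallback.example.com",
# ])
```

Passing the fetcher in as a parameter keeps the failover logic testable without a network, which matters because failover code that is never exercised tends not to work when it is finally needed.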

Monitor from outside your stack with external uptime checks that catch provider failures your internal monitoring won’t see. Document incident playbooks: know exactly what to do when each provider fails, including DNS updates, cache purges, and customer communication. These aren’t theoretical exercises. Three outages in 30 days means you’ll use these playbooks.
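One way to approach external monitoring is to probe several endpoints, tag each with the provider it depends on, and roll the results up per provider, so an alert says "the CDN is down" rather than "forty pages are down". The sketch below assumes it runs on infrastructure that does not share a provider with the things it checks. The function names, provider labels, and URLs are hypothetical.

```python
import urllib.error
import urllib.request
from collections import defaultdict
from typing import Callable, Mapping


def http_probe(url: str, timeout: float = 5.0) -> bool:
    """Return True when the URL answers with a 2xx or 3xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except OSError:  # URLError, HTTPError, and socket timeouts all derive from OSError
        return False


def classify(
    targets: Mapping[str, str],              # url -> provider label
    probe: Callable[[str], bool] = http_probe,
) -> dict[str, str]:
    """Label each provider 'ok', 'degraded', or 'down' from its probe results."""
    outcomes = defaultdict(list)
    for url, provider in targets.items():
        outcomes[provider].append(probe(url))
    status = {}
    for provider, results in outcomes.items():
        if all(results):
            status[provider] = "ok"
        elif any(results):
            status[provider] = "degraded"
        else:
            status[provider] = "down"
    return status


# Example call (placeholder URLs and labels):
# classify({
#     "https://www.example.com/health": "cdn-primary",
#     "https://status.example.net/health": "self-hosted-vps",
# })
```

The per-provider status maps naturally onto the playbooks described above: each label that can read "down" should have a documented response.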

The trend toward edge computing offers hope. One widely cited forecast held that by 2025, 75% of enterprise data would be processed outside traditional data centers, reducing cloud dependency. The decentralized cloud storage market is projected to grow from $1.8 billion in 2024 to $8.44 billion by 2032. These aren’t silver bullets, but they represent movement away from pure centralization.

You can’t fix the system alone, but you can diversify your own stack. Partial diversification beats none. Start with primary-plus-fallback. Split critical services. Monitor externally. Plan for failure. Because November 18 proved that failure isn’t a possibility—it’s a pattern.

The Real Question

The question isn’t “What caused the Cloudflare outage?”—Cloudflare’s post-mortem answered that. The question is: Why do we accept single points of failure for critical infrastructure?

The industry has made a bet: convenience trumps resilience. Three hyperscalers are more efficient, cheaper, and faster than distributed alternatives. We’ve voluntarily centralized an internet designed to be decentralized because the economic incentives overwhelmingly favor it.

November 18 suggests we might be wrong. Three outages in 30 days, billions affected, and the fundamental architecture unchanged. Maybe 99.9% uptime isn’t good enough when 0.1% downtime can break ChatGPT, X, Spotify, and Discord simultaneously. Maybe the cost of convenience is higher than we thought.

Key Takeaways

- The pattern is systemic, not coincidental: AWS (Oct 20), Azure (Oct 29), and Cloudflare (Nov 18) all failed within 30 days, each following the same structural pattern of control-plane cascades

- Concentration creates fragility: 62% cloud market share across three hyperscalers plus 64% CDN share on Cloudflare means two-thirds of internet infrastructure depends on four entities

- Economic barriers are real: Multi-cloud adds 25-40% in cost, over 70% of enterprises cite management complexity, and multi-cloud engineers are hard to find, so centralization persists for legitimate reasons

- Voluntary diversification isn’t working: 81% claim multi-cloud adoption but most don’t truly diversify, suggesting market forces alone won’t solve concentration risk

- Partial diversification is achievable: Primary-plus-fallback CDN, split critical services across providers, external monitoring, and incident playbooks provide practical risk reduction within realistic budgets