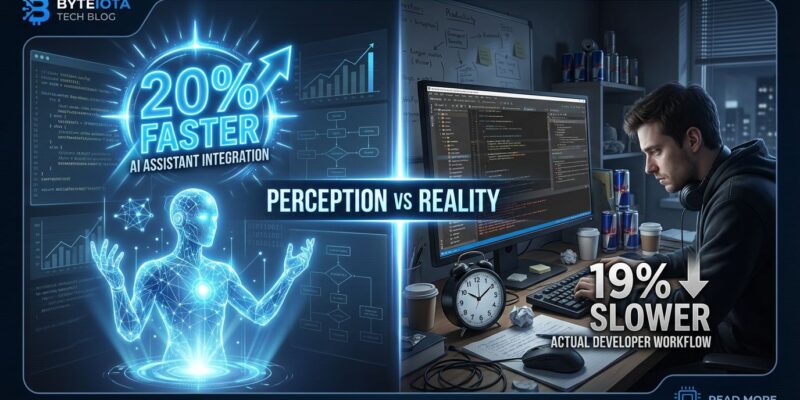

Experienced developers using AI coding tools took 19% longer to finish their tasks than they did without AI, yet they believed the tools had made them 20% faster. This stunning 39-point gap between perception and reality, revealed by a rigorous METR study, exposes the dirty secret of the AI productivity boom: the gains are in our heads, not in the data. While the industry pours billions into AI developer tools promising revolutionary speedups, one of the most careful studies to date found the opposite. We’re not just believing the hype; we’re hallucinating our own productivity.

The Study That Breaks the Illusion

METR recruited 16 experienced open-source developers, all contributors to repositories averaging 22,000+ stars and over a million lines of code, to work on 246 real issues from their own projects. Each issue was randomly assigned to either allow AI tools (primarily Cursor Pro with Claude 3.5/3.7 Sonnet) or forbid them, so roughly half the work was done each way. The results shattered expectations.

Before the study, developers expected AI to make them 24% faster. After completing tasks both with and without AI, they still believed they were 20% faster when using these tools. The screen recordings and time logs told a different story: they were 19% slower.

This isn’t a rounding error. Twenty percent faster in their heads, 19% slower on the clock: that is a 39-point gap between perception and reality. Developers couldn’t trust their own experience.

Why AI Creates More Work Than It Saves

The slowdown stems from a fundamental shift in how developers spend their time. Without AI, most time goes to writing and thinking about code. With AI, developers spend less time coding and more time managing the AI itself: prompting it, waiting for its output, and reviewing what it produced.

Here’s what really happens: Developers accepted less than 44% of AI suggestions. Three-quarters read every line of AI output—because they had to. More than half made major modifications to clean up what the AI generated. The time “saved” by instant code suggestions gets consumed by reviewing, debugging, and fixing unreliable output.

The reliability problem is real. Research on tools like Copilot and Ghostwriter found hallucination rates as high as 42% in complex scenarios. Twenty percent of AI-generated code references packages that don’t even exist: phantom APIs and ghost functions that look legitimate but break as soon as someone tries to install or run them. In July 2025, Google’s Gemini CLI misread the result of a failed command, then ran a series of file moves that overwrote and destroyed a user’s files.

AI doesn’t eliminate work—it shifts work from creation to validation. For experienced developers in complex codebases, that’s a terrible trade.

The Technical Debt Time Bomb

The immediate slowdown is only part of the problem. Features built with more than 60% AI assistance take 3.4 times longer to modify six months later. You’re not just slower today—you’re building a maintenance nightmare for tomorrow.

This makes sense when you consider what AI struggles with: large, mature codebases with intricate dependencies and strict coding standards. AI excels at isolated, simple tasks. It fails at understanding system-wide context. The code it generates might work now, but it creates friction for every future change.

Forty-five percent of developers say debugging AI-generated code takes more work than writing it themselves. The most common frustration, cited by 66% of developers? AI solutions that are “almost right, but not quite”—which might be worse than completely wrong.

The Industry’s Data-Free Hype Machine

Gartner predicts the AI software market will hit $297.9 billion by 2027, with 92% of organizations planning AI investments. Yet the same analysts project that 40% of agentic AI projects will be canceled by 2027 due to unclear business value. IDC reports that 90% of generative AI projects end prematurely.

The disconnect is stark. More than 50% of Fortune 500 companies use Cursor. Enterprise adoption spreads “like wildfire.” Individual developers report feeling 30% faster. But when you control for perception bias with screen recordings and time logs, experienced developers are slower.

Stack Overflow’s surveys show favorable sentiment toward AI coding tools dropping from over 70% in 2023 to around 60% in 2025. Only 3% of developers say they “highly trust” these tools. Even CIOs cite generative AI as overhyped for the third consecutive year.

The AI coding boom is built on vibes, not evidence. We’re investing billions in tools that make experienced developers slower, all because the user experience creates an illusion of speed.

When AI Actually Helps

The METR study focused on experienced developers working in familiar, complex codebases—the exact scenario where AI struggles most. The story changes for junior developers, unfamiliar code, or simple, repetitive tasks like generating boilerplate, documentation, or test scaffolding.

Sixty-nine percent of study participants continued using Cursor after the research ended. They found value somewhere, even if it wasn’t in raw speed. The tools are also improving rapidly—the researchers acknowledged their findings might be obsolete within months as AI models advance.

AI isn’t useless. It’s oversold. The problem isn’t the technology—it’s the universal productivity claims that ignore context.

Demand Evidence, Not Vibes

The 39-point perception gap should terrify anyone making tool decisions. If developers can’t accurately gauge their own productivity (if something that feels 20% faster is actually 19% slower), then we can’t rely on intuition or anecdotes.

CTOs are spending millions on enterprise AI coding licenses based on testimonials and vendor benchmarks, not randomized controlled trials. Developers adopt tools because they feel faster, not because they are faster. The entire market runs on perception.

It’s time for a reality check. Demand data, not marketing claims. Let developers choose tools based on their actual measured results, not hype. And maybe—just maybe—trust that if experienced developers working in complex codebases perform worse with AI, the answer isn’t to mandate the tools anyway.
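Measuring this does not require a vendor benchmark. Below is a minimal sketch in Python. It assumes a team logs completion hours per issue and asks developers afterward how much faster they felt. The script randomizes which issues allow AI, compares mean completion times, and reports the gap between felt and measured speedup. The helper names (`assign_conditions`, `measured_speedup`, `perception_gap`) and the sample hours are hypothetical. The analysis is a simple ratio of means, much cruder than METR’s, and a real evaluation would need far more issues than this toy run.

```python
import random
from statistics import mean


def assign_conditions(issue_ids, seed=None):
    """Hypothetical helper: randomly split issues into equal 'ai' and 'no_ai' groups.

    Random assignment keeps developers from steering easy tasks toward one condition.
    With an odd number of issues, the extra one lands in 'no_ai'.
    """
    ids = list(issue_ids)
    random.Random(seed).shuffle(ids)
    half = len(ids) // 2
    return {issue: ("ai" if n < half else "no_ai") for n, issue in enumerate(ids)}


def measured_speedup(hours_by_issue, conditions):
    """Fractional speedup from logged hours: positive = faster with AI, negative = slower.

    A simple ratio of mean completion times, not a full statistical model.
    """
    ai = [hours_by_issue[i] for i, c in conditions.items() if c == "ai"]
    no_ai = [hours_by_issue[i] for i, c in conditions.items() if c == "no_ai"]
    return 1 - mean(ai) / mean(no_ai)


def perception_gap(self_reported_speedup, measured):
    """Gap in percentage points between the speedup developers report and the measured one."""
    return 100 * (self_reported_speedup - measured)


if __name__ == "__main__":
    issues = ["#101", "#102", "#103", "#104"]
    conditions = assign_conditions(issues, seed=42)
    # Made-up hours for illustration only; real logs would come from time tracking.
    hours = {"#101": 2.0, "#102": 1.5, "#103": 3.0, "#104": 2.5}
    actual = measured_speedup(hours, conditions)
    print(f"measured speedup with AI: {actual:+.0%}")
    # Compare against a hypothetical self-reported 20% speedup.
    print(f"perception gap: {perception_gap(0.20, actual):.0f} points")
```

With only a handful of issues per condition, the measured number will be dominated by noise. What matters is the shape of the measurement: random assignment, logged time, and self-reports checked against the logs rather than taken on faith.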

The emperor has no clothes. The productivity myth is exposed. Now we have to decide: do we keep pretending, or do we start measuring what actually matters?