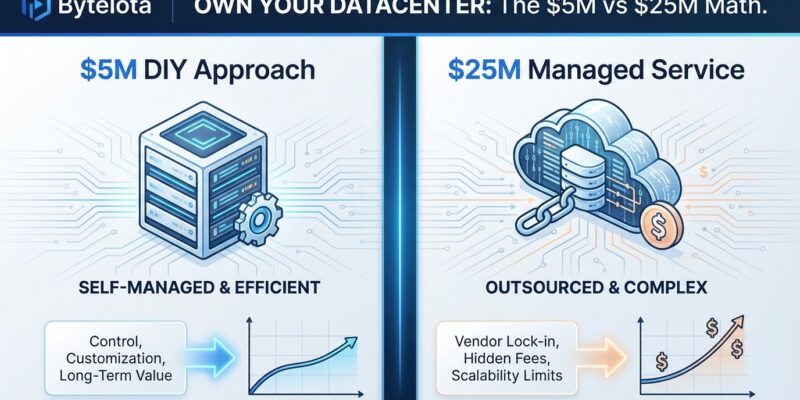

Comma.ai just revealed they spent $5 million building their own datacenter instead of renting cloud infrastructure that would have cost them $25 million or more. The autonomous driving company’s disclosure is trending on Hacker News with 883 points and 360 comments, and for good reason. As AI workloads shift from training to inference in 2026, the economics of datacenter ownership are flipping. Inference now consumes 55% of AI infrastructure spending, runs continuously, and favors predictable high-utilization workloads—exactly the scenario where ownership beats rental by 5x.

The Comma.ai Case: $5M Owned vs $25M Rented

George Hotz’s autonomous driving company didn’t build a datacenter on ideology. They did the math. Their $5 million investment bought 600 GPUs across 75 TinyBox Pro machines, 4PB of storage, and 450kW of power capacity. The same compute in cloud would have cost $25 million or more—a 5x markup for the privilege of renting someone else’s hardware.

The infrastructure is maintained by “only a couple engineers and technicians,” according to comma.ai’s blog post. They pay $540,000 annually for power, a bill inflated by San Diego’s 40¢/kWh rate, which is about triple the global average. Despite the power premium, ownership still wins decisively.
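
As a rough sanity check on the power figures, the annual bill and the per-kWh rate imply an average electrical draw, which can be compared with the 450kW capacity. The sketch below assumes the whole $540,000 is energy billed at a flat 40¢/kWh (no demand charges or tiered pricing); the function name is illustrative only.

```python
HOURS_PER_YEAR = 8760  # 365 days * 24 hours


def average_draw_kw(annual_cost_usd: float, rate_usd_per_kwh: float) -> float:
    """Average power draw implied by a yearly bill at a flat energy rate."""
    annual_kwh = annual_cost_usd / rate_usd_per_kwh
    return annual_kwh / HOURS_PER_YEAR


# Figures quoted in the article.
draw = average_draw_kw(540_000, 0.40)
share = draw / 450  # fraction of the 450kW capacity

print(f"average draw: {draw:.0f} kW ({share:.0%} of capacity)")
```

Under that flat-rate assumption, the bill corresponds to an average draw of roughly a third of the site's rated capacity.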

Why? Engineering incentives. When you own the datacenter, optimization is mandatory. When you rent cloud, throwing money at the problem is always an option until the CFO objects. Comma.ai’s approach forces genuine efficiency—and saves millions.

The AI Workload Shift Changes Everything

Timing matters. Five years ago, this analysis might have concluded differently. But 2026 marks a fundamental shift. Inference workloads now consume over 55% of AI infrastructure spending, surpassing training for the first time. By 2027, Deloitte estimates inference will account for two-thirds of all AI compute.

The economics are brutal. GPT-4 cost $150 million to train. Within two years, inference costs hit $2.3 billion—15x the training expense. This pattern holds broadly: inference represents 80-90% of a production AI system’s lifetime costs. Training is an occasional capital investment. Inference runs continuously, directly tied to revenue.
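
The GPT-4 figures can be checked with two lines of arithmetic: the ratio of inference to training spend, and inference as a share of the combined total. This is only a sketch using the two dollar amounts quoted above; it ignores every other lifetime cost.

```python
def inference_share(training_usd: float, inference_usd: float) -> float:
    """Inference spend as a fraction of training plus inference spend."""
    return inference_usd / (training_usd + inference_usd)


training = 150e6    # quoted training cost
inference = 2.3e9   # quoted inference cost within two years

ratio = inference / training
share = inference_share(training, inference)

print(f"inference is {ratio:.1f}x training, {share:.1%} of the combined spend")
```

On these two numbers alone, inference comes out slightly above the 80-90% range cited for production systems in general.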

Sustained, predictable workloads favor ownership. Variable, bursty workloads favor rental. Inference is sustained and predictable. The math becomes obvious.

The Breakeven Timeline: Four Months

Lenovo’s 2026 Total Cost of Ownership analysis for generative AI infrastructure found that on-premise systems reach breakeven in under four months for workloads that keep utilization above 20%. Previous generations required 12-18 months. The acceleration comes from NVIDIA’s Hopper and Blackwell architectures delivering better performance per dollar.
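
The idea behind a breakeven figure can be sketched as upfront capital divided by the monthly savings from not renting. This is a simplified model, not Lenovo's methodology, and the dollar amounts in the example are hypothetical values chosen purely for illustration.

```python
from typing import Optional


def breakeven_months(
    capex_usd: float,
    cloud_monthly_usd: float,
    owned_monthly_usd: float,
) -> Optional[float]:
    """Months until cumulative savings cover the upfront purchase.

    Returns None when owning is not cheaper per month, because the
    purchase is then never paid back.
    """
    monthly_savings = cloud_monthly_usd - owned_monthly_usd
    if monthly_savings <= 0:
        return None
    return capex_usd / monthly_savings


# Hypothetical numbers: $400k of hardware, $120k/month to rent the
# equivalent capacity, $20k/month for power and staff when owned.
months = breakeven_months(400_000, 120_000, 20_000)
print(months)
```

Lower utilization shrinks the effective cloud bill that ownership replaces, which is why the breakeven point depends so heavily on how busy the hardware is kept.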

The advantage compounds over time. Owned infrastructure yields an 18x cost advantage per million tokens compared to Model-as-a-Service APIs. For companies running sustained inference at scale, the choice is binary: own or hemorrhage cash.

Cloud still wins for experimentation, one-off training runs, and variable workloads. Startups testing ideas shouldn’t build datacenters. But companies with predictable AI inference loads above 20% utilization are effectively subsidizing hyperscalers’ profits by renting.

NeoClouds: The 4x Cheaper Middle Ground

Not every company can front $5 million for a datacenter. Enter the NeoClouds—GPU-focused cloud providers like CoreWeave, Lambda Labs, Crusoe, and Nebius that specialize in AI workloads without the legacy baggage of general-purpose clouds.

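CoreWeave charges $1.39 per hour for an NVIDIA A100 40GB GPU. Azure charges $3.67 for the same hardware, which makes CoreWeave about 62% cheaper (equivalently, Azure carries a 164% premium). For DGX H100 systems, NeoClouds deliver at $34 per hour versus $98 on hyperscaler platforms. On those two list prices the gap is about 2.9x, a little short of the 4x headline figure.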
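
A price gap can be stated as a discount relative to the higher price, a premium relative to the lower one, or a plain ratio, and the three numbers differ. The sketch below computes all three for the hourly prices quoted above; the helper name is an illustration only.

```python
def compare_prices(cheaper: float, pricier: float) -> dict:
    """Express the gap between two hourly prices three ways."""
    return {
        # How much less the cheaper option costs, relative to the pricier one.
        "discount_pct": (1 - cheaper / pricier) * 100,
        # How much more the pricier option costs, relative to the cheaper one.
        "premium_pct": (pricier / cheaper - 1) * 100,
        # Simple multiple.
        "ratio": pricier / cheaper,
    }


a100 = compare_prices(1.39, 3.67)   # A100 40GB: CoreWeave vs Azure
dgx = compare_prices(34.0, 98.0)    # DGX H100: NeoCloud vs hyperscaler

for name, result in (("A100", a100), ("DGX H100", dgx)):
    print(name, {k: round(v, 1) for k, v in result.items()})
```

For the A100 prices, the discount works out to about 62% while the premium is about 164%, so it matters which one is being quoted.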

CoreWeave raised $7 billion and reported nearly $1 billion in quarterly revenue, projecting $5 billion annually. The market is real. NeoClouds provide a bridge for mid-sized enterprises not ready to own but unwilling to pay hyperscaler premiums.

When to Own, When to Rent, When to Use NeoClouds

The decision framework is straightforward:

Own your datacenter when:

- You run sustained AI inference workloads

- Utilization consistently exceeds 20%

- You have engineering capacity to manage infrastructure

- You can invest $5 million or more upfront

- A four-month breakeven justifies the capital

Rent hyperscaler cloud when:

- You’re experimenting and prototyping

- Workloads are variable or bursty

- You’re running infrequent training jobs

- You’re small scale or early stage

- You lack infrastructure expertise

Use NeoClouds when:

- You need GPU compute but lack $5 million for a datacenter

- You want 4x savings over hyperscalers

- You’re a mid-sized enterprise scaling AI workloads

- You’re building toward eventual ownership
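
The three lists above can be condensed into a small rule-of-thumb function. This is a deliberately simplified sketch: the `Workload` fields and the order of the checks are assumptions made for illustration, and the thresholds are the 20% utilization and $5 million figures from the lists.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    sustained: bool            # steady inference rather than bursty jobs
    utilization: float         # average GPU utilization, 0.0 to 1.0
    has_infra_team: bool       # engineers able to run the hardware
    upfront_budget_usd: float  # capital available today


def recommend(w: Workload) -> str:
    """Map a workload profile onto own / neocloud / hyperscaler."""
    steady_and_busy = w.sustained and w.utilization > 0.20
    if not steady_and_busy:
        # Experiments, bursty loads, and infrequent training runs.
        return "hyperscaler"
    if w.has_infra_team and w.upfront_budget_usd >= 5_000_000:
        return "own"
    # Steady GPU demand without the capital or the team to own.
    return "neocloud"


print(recommend(Workload(True, 0.60, True, 6_000_000)))    # own
print(recommend(Workload(True, 0.60, False, 500_000)))     # neocloud
print(recommend(Workload(False, 0.05, False, 50_000)))     # hyperscaler
```

A real decision would also weigh factors the lists only hint at, such as growth plans and how quickly the hardware depreciates.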

The Hyperscaler Reality

Hyperscalers are spending $600 billion on AI infrastructure in 2026, a 36% increase over 2025. Amazon and Microsoft are each planning $80 billion or more. Google revised its 2025 capex guidance upward three times, reaching $91-93 billion compared to $52.5 billion in 2024.

Here’s the problem: aggregate capex now exceeds projected cash flows. Hyperscalers are increasingly relying on debt markets to fund AI infrastructure buildouts. They’re betting they can monetize this investment before the economics collapse.

Meanwhile, companies like comma.ai are proving that ownership works. NeoClouds are capturing market share with 4x better pricing. The “cloud-first” era that defined the 2010s is ending for AI workloads. The 2020s are about hybrid strategies, cost optimization, and challenging vendor lock-in.

Comma.ai’s $5 million datacenter isn’t a curiosity. It’s a signal. When inference is 80-90% of your AI system costs, when breakeven hits in four months, when ownership delivers 18x token cost advantages—the economics aren’t debatable. They’re math.