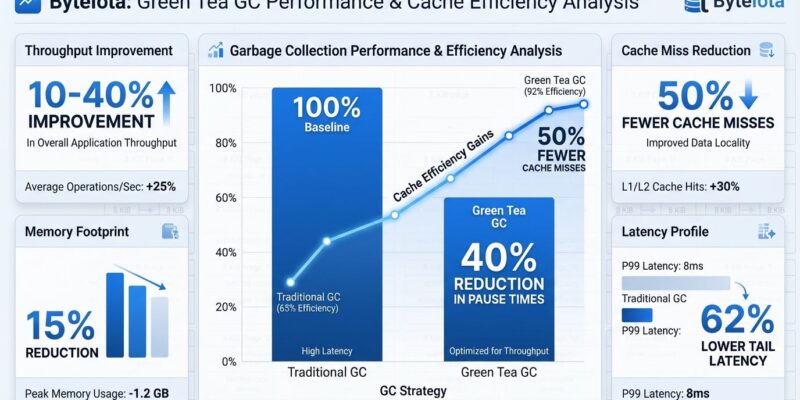

Go 1.26 launched in February 2026 with Green Tea as the new default garbage collector. It delivers 10-40% lower GC overhead in programs that lean heavily on the garbage collector, with no code changes required. The algorithm shifts from processor-centered object scanning to memory-aware page scanning. That reduces the memory stalls that previously consumed over 35% of mark time. On newer AMD64 CPUs (Intel Ice Lake, AMD Zen 4, and later), vector instructions squeeze out roughly another 10%. This is Go’s biggest GC overhaul since concurrent collection arrived in Go 1.5, and it is already running in production at Google.

Page-Based Scanning Replaces Object Traversal

Here’s what changed under the hood. Traditional Go GC scanned objects one at a time using depth-first traversal—imagine walking a tree by diving deep into each branch before moving to the next. Green Tea scans entire 8 KiB memory spans called pages, using breadth-first traversal at the page level. Instead of tracking individual objects on a LIFO stack, it queues whole pages on a FIFO work list.

Each object gets just 2 bits of metadata: a “Seen” bit (a pointer to the object has been found) and a “Scanned” bit (the object’s own pointers have already been processed). Pages can reappear on the work list several times per mark phase, whereas each object appeared at most once in the old GC. The payoff is locality. By the time a page comes off the queue, several of its objects may be waiting, so they are scanned in one sequential pass over an 8 KiB chunk. The old GC instead jumped between objects scattered across the heap.
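The two-bit bookkeeping can be modeled in a few lines of Go. This is a toy sketch, not the runtime’s code. The names `pageMeta`, `markSeen`, and `scanPage` are hypothetical, and a single `uint64` per flag limits the toy page to 64 objects. It shows the key property: "pending" work is simply seen AND NOT scanned, and a page that was already scanned gets new work again when a fresh pointer into it turns up.

```go
package main

import (
	"fmt"
	"math/bits"
)

// pageMeta is a toy model of per-object mark metadata for one page.
// Bit i of seen: a pointer to object i has been found.
// Bit i of scanned: object i's own pointers have been processed.
type pageMeta struct {
	seen, scanned uint64
}

// pending returns the objects that are seen but not yet scanned.
func (p *pageMeta) pending() uint64 { return p.seen &^ p.scanned }

// markSeen records a pointer to object i and reports whether the page now
// has work, i.e. whether it should go (back) on the work list. A real
// collector would also avoid queuing a page that is already queued.
func (p *pageMeta) markSeen(i uint) bool {
	p.seen |= 1 << i
	return p.pending() != 0
}

// scanPage processes every pending object in one pass, in address order,
// and returns the indices it scanned.
func (p *pageMeta) scanPage() []int {
	work := p.pending()
	p.scanned |= work
	var scannedNow []int
	for ; work != 0; work &= work - 1 { // clear the lowest set bit each step
		scannedNow = append(scannedNow, bits.TrailingZeros64(work))
	}
	return scannedNow
}

func main() {
	var p pageMeta
	p.markSeen(3)
	p.markSeen(7)
	fmt.Println(p.scanPage()) // [3 7]

	// A later pointer into the same page gives it work again.
	requeue := p.markSeen(5)
	fmt.Println(requeue, p.scanPage()) // true [5]

	// Seeing an object that was already scanned adds no work.
	fmt.Println(p.markSeen(3)) // false
}
```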

The performance impact is measurable. Microbenchmarks show L1 and L2 cache misses cut in half. Memory stalls that consumed over 35% of mark time in the original GC drop significantly. When your program scans gigabytes of heap, cache efficiency compounds fast.
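A toy simulation can show why traversal order matters for locality. The program below marks the same small object graph two ways. One is a LIFO stack of objects (depth-first, as in the old GC). The other is a FIFO queue of pages that drains every pending object on a page per visit. It counts how often each approach moves to a different page, as a rough stand-in for cache misses. Everything here is an illustrative assumption and is not taken from the runtime: the 4-objects-per-page layout, the graph, the "drain the page until empty" rule, and the names `objectMarker` and `pageMarker`.

```go
package main

import "fmt"

// Toy layout (an assumption): object IDs are addresses in units of
// objects, and each page holds objsPerPage consecutive objects.
const objsPerPage = 4

func pageOf(obj int) int { return obj / objsPerPage }

// objectMarker mimics object-at-a-time marking with a LIFO stack
// (depth-first). It counts page switches: how often the next scanned
// object lives on a different page than the previous one.
func objectMarker(edges map[int][]int, roots []int) (scanned, pageSwitches int) {
	marked := map[int]bool{}
	var stack []int
	for _, r := range roots {
		if !marked[r] {
			marked[r] = true
			stack = append(stack, r)
		}
	}
	lastPage := -1
	for len(stack) > 0 {
		obj := stack[len(stack)-1]
		stack = stack[:len(stack)-1]
		scanned++
		if p := pageOf(obj); p != lastPage {
			pageSwitches++
			lastPage = p
		}
		for _, child := range edges[obj] {
			if !marked[child] {
				marked[child] = true
				stack = append(stack, child)
			}
		}
	}
	return scanned, pageSwitches
}

// pageMarker mimics page-at-a-time marking with a FIFO queue of pages.
// Each visit scans every seen-but-unscanned object on the page.
func pageMarker(edges map[int][]int, roots []int) (scanned, pageVisits int) {
	seen := map[int]bool{}
	done := map[int]bool{} // the "scanned" flag
	queued := map[int]bool{}
	var queue []int
	see := func(obj int) {
		if seen[obj] {
			return
		}
		seen[obj] = true
		if p := pageOf(obj); !queued[p] {
			queued[p] = true
			queue = append(queue, p)
		}
	}
	for _, r := range roots {
		see(r)
	}
	for len(queue) > 0 {
		p := queue[0]
		queue = queue[1:]
		pageVisits++
		// Sweep the page in address order until nothing is pending, so
		// objects discovered on this same page are handled in this visit.
		for progress := true; progress; {
			progress = false
			for obj := p * objsPerPage; obj < (p+1)*objsPerPage; obj++ {
				if seen[obj] && !done[obj] {
					done[obj] = true
					scanned++
					progress = true
					for _, child := range edges[obj] {
						see(child)
					}
				}
			}
		}
		queued[p] = false
	}
	return scanned, pageVisits
}

func main() {
	// 12 objects on 3 pages; object 0 is the only root.
	edges := map[int][]int{
		0: {4, 8, 1}, 1: {5, 9}, 4: {2}, 5: {3}, 8: {6, 10}, 9: {7, 11},
	}
	n, switches := objectMarker(edges, []int{0})
	fmt.Printf("object-at-a-time: %d objects, %d page switches\n", n, switches)
	n, visits := pageMarker(edges, []int{0})
	fmt.Printf("page-at-a-time:   %d objects, %d page visits\n", n, visits)
}
```

On this toy heap the stack-based marker switches pages 7 times, while the page queue needs 5 visits to mark the same 12 objects. The gap depends entirely on the heap’s shape. It grows when many objects on a page become reachable at about the same time, and it shrinks when each visit finds only one pending object.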

Real-World Performance: 10-40% Gains, But It Depends

Benchmarks are promising, but real-world results vary by workload topology. The tile38 geospatial database saw a 35% reduction in GC overhead—one of the best-case scenarios. Databases, caches, and API servers with high-fanout data structures like trees and graphs benefit the most because Green Tea’s page-based approach aligns with how these workloads organize memory.

However, not every service gets a windfall. DoltHub tested Green Tea in September 2025 and found neutral results: more CPU per GC cycle but fewer total GCs. The Go team fixed this “less frequent but more expensive” issue before the Go 1.26 final release, but the lesson holds—your mileage will vary. If your heap is low-fanout with frequent mutations, gains may be modest or nonexistent.

On modern AMD64 processors, AVX-512 vector instructions provide bonus performance. Green Tea uses 512-bit registers to hold entire page metadata and processes 64 bytes in parallel. The VGF2P8AFFINEQB instruction (a Galois Field operation) handles bit-wise transformations for efficient scanning. This adds roughly 10% more GC overhead reduction on Intel Ice Lake and AMD Zen 4 or newer chips. Older CPUs and non-AMD64 platforms skip vector acceleration but still get the base 10-40% improvement from page-based scanning.
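The arithmetic behind "one register per page" is easy to check. The sketch assumes one flag bit per object and 16-byte objects, which is an illustrative choice and not a statement about the runtime’s exact layout. Under those assumptions an 8 KiB page holds 8192 / 16 = 512 objects, so one flag array is 512 bits, or 64 bytes, the width of a single AVX-512 register. `bitmap512` and `pendingCount` are hypothetical names. The loop is a scalar stand-in that does 64 bits at a time what a vector instruction can do across all 512 bits at once.

```go
package main

import (
	"fmt"
	"math/bits"
)

const pageBytes = 8 << 10 // 8 KiB page

// metadataBits returns how many one-bit-per-object flags a page needs
// when it is filled with objects of the given size.
func metadataBits(objSize int) int { return pageBytes / objSize }

// bitmap512 holds a 512-bit flag array as eight 64-bit words.
type bitmap512 [8]uint64

// pendingCount computes seen AND NOT scanned for every object and counts
// the survivors, one 64-bit word at a time.
func pendingCount(seen, scanned bitmap512) int {
	n := 0
	for i := range seen {
		n += bits.OnesCount64(seen[i] &^ scanned[i])
	}
	return n
}

func main() {
	// 16-byte objects: 512 flags = 64 bytes of metadata per page.
	fmt.Println(metadataBits(16), metadataBits(16)/8) // 512 64

	var seen, scanned bitmap512
	seen[0] = 0b1111
	scanned[0] = 0b0101
	seen[7] = 1 << 63
	fmt.Println(pendingCount(seen, scanned)) // 3
}
```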

Zero-Code Migration, One-Line Opt-Out

Upgrading takes no code changes. Install Go 1.26, rebuild your application, and Green Tea is on by default. There are no code modifications, no configuration files, and no flags to set unless you want to opt out, which is a build-time setting rather than a runtime one.

Testing before production is straightforward:

```bash
# Download and test Go 1.26 alongside your current toolchain
go install golang.org/dl/go1.26.0@latest
go1.26.0 download
go1.26.0 build ./...

# Monitor GC behavior (run the old and new builds and compare;
# gctrace prints one line per GC cycle to stderr)
GODEBUG=gctrace=1 ./your-app

# Run benchmarks
go1.26.0 test -bench=. -benchmem ./...
```

If you observe regressions, opt out at build time with a single environment variable:

```bash
# Build without Green Tea (use plain `go` once 1.26 is your default toolchain)
GOEXPERIMENT=nogreenteagc go1.26.0 build ./...
```

Note that the opt-out is expected to disappear in Go 1.27 (due around August 2026). If Green Tea causes problems for your workload, report it on GitHub issue #73581 now, while the team can still address edge cases.

When Green Tea Helps and When It Doesn’t

Green Tea excels on workloads with regular heap structures and high-fanout data. If your service spends more than 10% of CPU time in GC and uses data structures like trees, graphs, or indexes, you’re likely to see substantial gains. Multi-core systems benefit more—scalability improves with core count, and microbenchmarks show 10-50% GC CPU reduction on high-core machines.
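To check the 10% rule of thumb from inside a running service, `runtime/metrics` (Go 1.20 and later) exposes CPU-time estimates broken down by class. The sketch below divides GC time by the CPU time actually used, which is total minus idle. `gcCPUFraction` is a hypothetical helper. The runtime documents these values as estimates that should only be compared with other `/cpu/classes` metrics, not with OS-reported CPU time.

```go
package main

import (
	"fmt"
	"runtime/metrics"
)

// sink keeps the allocations below from being optimized away.
var sink []byte

// gcCPUFraction estimates the share of used CPU time spent in the GC since
// the process started. ok is false if a metric is unsupported.
func gcCPUFraction() (frac float64, ok bool) {
	samples := []metrics.Sample{
		{Name: "/cpu/classes/gc/total:cpu-seconds"},
		{Name: "/cpu/classes/idle:cpu-seconds"},
		{Name: "/cpu/classes/total:cpu-seconds"},
	}
	metrics.Read(samples)
	for _, s := range samples {
		if s.Value.Kind() != metrics.KindFloat64 {
			return 0, false // metric not supported by this Go version
		}
	}
	gc := samples[0].Value.Float64()
	used := samples[2].Value.Float64() - samples[1].Value.Float64()
	if used <= 0 {
		return 0, true // nothing accounted yet (e.g. very early in the process)
	}
	return gc / used, true
}

func main() {
	for i := 0; i < 100_000; i++ {
		sink = make([]byte, 1024) // short-lived garbage to give the GC work
	}
	frac, ok := gcCPUFraction()
	if !ok {
		fmt.Println("runtime/metrics CPU classes not available")
		return
	}
	fmt.Printf("GC share of used CPU: %.1f%%\n", frac*100)
	if frac > 0.10 {
		fmt.Println("above 10%: a good candidate for measuring Green Tea gains")
	}
}
```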

Watch for neutral or negative results if you’re running on single-core or dual-core deployments, working with low-fanout structures that mutate frequently, or operating under very tight latency SLAs (sub-millisecond p99). Low-fanout heaps are the clearest case. When a page comes off the work list with only one or two objects waiting, page-level batching buys little locality over the old object-by-object scan. Small core counts also see less of the improved multi-core scaling.

Testing checklist: Capture baseline metrics on Go 1.25 or earlier (GC pause times at p50/p95/p99, GC CPU percentage, overall throughput). Upgrade to Go 1.26, rebuild, and remeasure the same metrics under production traffic or realistic load tests. Monitor for 48-72 hours to catch any latency tail behavior. If performance degrades, opt out and file an issue with profiles.
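For the before/after comparison, `GODEBUG=gctrace=1` output can be summarized with a small filter. Per the `runtime` package documentation, each line starts with `gc # @#s #%: #+#+# ms clock`. In order, that is the cycle number, the time since program start, the percentage of time spent in GC since program start, and the wall-clock times of the stop-the-world sweep termination, concurrent mark, and stop-the-world mark termination phases. The parser below reads only those leading fields. `parseGCTrace`, `gcLine`, and the file name `gcsummary.go` are hypothetical.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"strconv"
	"strings"
)

// gcLine holds a few fields from one gctrace line.
type gcLine struct {
	cycle   int
	pctGC   int     // % of time spent in GC since program start
	pauseMS float64 // both stop-the-world phases, wall clock
	markMS  float64 // concurrent mark phase, wall clock
}

// parseGCTrace extracts fields from "gc N @Ts P%: a+b+c ms clock, ...".
func parseGCTrace(line string) (gcLine, bool) {
	f := strings.Fields(line)
	if len(f) < 5 || f[0] != "gc" {
		return gcLine{}, false
	}
	cycle, err1 := strconv.Atoi(f[1])
	pct, err2 := strconv.Atoi(strings.TrimSuffix(f[3], "%:"))
	phases := strings.Split(f[4], "+")
	if err1 != nil || err2 != nil || len(phases) != 3 {
		return gcLine{}, false
	}
	var ms [3]float64
	for i, p := range phases {
		v, err := strconv.ParseFloat(p, 64)
		if err != nil {
			return gcLine{}, false
		}
		ms[i] = v
	}
	return gcLine{cycle: cycle, pctGC: pct, pauseMS: ms[0] + ms[2], markMS: ms[1]}, true
}

func main() {
	// Usage: GODEBUG=gctrace=1 ./your-app 2>&1 | go run gcsummary.go
	sc := bufio.NewScanner(os.Stdin)
	var last gcLine
	var totalPause float64
	n := 0
	for sc.Scan() {
		if g, ok := parseGCTrace(sc.Text()); ok {
			last = g
			totalPause += g.pauseMS
			n++
		}
	}
	if n == 0 {
		fmt.Println("no gctrace lines found")
		return
	}
	fmt.Printf("cycles=%d last GC%%=%d mean STW pause=%.3f ms\n",
		n, last.pctGC, totalPause/float64(n))
}
```

Running the same load against a Go 1.25 build and a Go 1.26 build and comparing the final GC percentage gives a quick first signal before the longer 48-72 hour observation.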

What This Means for Go Services

For most Go developers, this is free performance. Docker, Kubernetes, and the many microservices written in Go get faster as soon as they are rebuilt with Go 1.26, without touching a line of code. The shift from object-based to page-based scanning is a fundamental algorithmic change rather than incremental tuning. It is comparable in impact to Go 1.5’s introduction of concurrent GC in 2015.

GOGC tuning still applies. If you’ve customized GOGC (default 100) to balance memory against GC frequency, those settings work the same under Green Tea. Higher GOGC values (200-400) trade memory for less frequent GC, while lower values (50-80) keep memory usage down at the cost of more frequent collections. GOGC decides when a cycle starts, while Green Tea changes how each cycle marks the heap, so the efficiency gains should carry over to any GOGC setting.

Production deployment at Google since Go 1.25 signals confidence. The Go team wouldn’t make Green Tea the default without validation at scale. If your service matches the optimal workload profile—high GC activity, regular heap, high-fanout data, multi-core—upgrade and reap the benefits. If your workload is edge-case territory, test carefully and report findings.

Key Takeaways

- Upgrade for free performance: Go 1.26’s Green Tea GC delivers 10-40% lower GC overhead automatically in GC-heavy programs.

- Page-based beats object-based: Scanning 8 KiB memory spans instead of individual objects halves L1 and L2 cache misses in microbenchmarks and sharply reduces the memory stalls that used to consume over 35% of mark time.

- Test before production: While Google runs Green Tea successfully, workload variability means you should benchmark before/after on your specific services.

- Opt-out available now, gone soon: Build with GOEXPERIMENT=nogreenteagc if issues arise, but the option is expected to disappear in Go 1.27 (August 2026).

- Best for databases and high-fanout heaps: Services with trees, graphs, caches, and >10% GC CPU time see the biggest gains—single-core or low-fanout workloads may see little improvement.