In January 2026, Microsoft began asking thousands of engineers across its most critical product divisions—Windows, Office, Teams, Edge, and Surface—to install Anthropic’s Claude Code and compare it directly against GitHub Copilot, the AI coding assistant Microsoft owns and aggressively markets to developers worldwide. The Verge broke the story on January 22, and it immediately sparked controversy on Hacker News: If Microsoft’s own engineers need to A/B test Copilot against a competitor, what does that say about Microsoft’s confidence in its flagship AI coding tool?

This is corporate irony at its finest: Microsoft sells Copilot to millions of developers while internally hedging its bets on the very product it claims is the future of software development. For developers paying $10/month for Copilot subscriptions, this news raises an uncomfortable question: Does Microsoft know something about Copilot’s limitations that it’s not sharing with customers?

The Hypocrisy: Selling Copilot While Testing Claude

Microsoft is simultaneously selling GitHub Copilot to millions of developers worldwide—generating over 40% revenue growth in Q4 2024—while internally deploying Claude Code to thousands of employees and asking them to provide comparative feedback. The scale is significant: Microsoft’s Experiences + Devices division, responsible for Windows, Microsoft 365, Teams, Bing, Edge, and Surface, received instructions to install Claude Code. Additionally, the CoreAI team was included in the rollout. Even non-technical staff—designers and product managers—were encouraged to use Claude Code for prototyping.

Engineers are expected to use both Claude Code and GitHub Copilot in parallel, then provide structured feedback comparing code quality, performance, user experience, and workflow integration. Microsoft’s communications chief Frank Shaw responded with corporate PR speak: “Companies regularly test and trial competing products to gain a better understanding of the market landscape.” Notice what he didn’t say: that Copilot is superior.

The timing adds another layer of absurdity. Claude officially became a Microsoft subprocessor on January 7, enabling deep integration into Microsoft 365’s security boundary, and just two weeks later Microsoft expanded Claude Code adoption to major engineering teams. All of this follows a November 2025 partnership in which Anthropic committed to $30 billion in Azure purchases, even as Microsoft competes against Anthropic in AI coding tools. Microsoft is now partnering with Anthropic, competing with it, and testing which product actually works better.

What This Actually Reveals About Microsoft Copilot

Microsoft’s internal A/B testing signals that AI coding tools are still unproven, even to their makers. Despite owning GitHub, maintaining a $13B+ partnership with OpenAI, and generating massive Copilot revenue, Microsoft is hedging its bets by testing Anthropic’s competing tool. This isn’t confidence. This is insurance.

Actions speak louder than marketing. If Copilot were clearly the best tool, Microsoft wouldn’t need to conduct large-scale internal comparisons. The internal deployment exposes the gap between what Microsoft does and what its marketing claims. When a vendor won’t fully commit to its own product, that’s a signal customers should notice.

The Hacker News community split into two roughly equal camps on interpretation. About 40% view this as smart engineering culture: “Good companies test everything to avoid blind spots.” Another 40% see it as a damning lack of confidence: “If they don’t trust their own product, why should we?” The February 2 discussion hit 724 points with 223 comments and was the #4 trending story at the time of writing. Top reactions ranged from “Microsoft is being pragmatic and data-driven” to “When Microsoft won’t eat its own dog food, that’s a red flag.”

Both camps have a point, but the trust erosion is real. Developers already skeptical of vendor marketing now have concrete evidence that even Microsoft questions Copilot’s superiority. In fact, the internal testing validates concerns that AI coding assistants are still experimental technology—for everyone, including the companies selling them.

Claude Code vs GitHub Copilot: The Practical Reality

Technical comparisons reveal why Microsoft is testing both: neither Copilot nor Claude Code is clearly superior across all use cases. They excel at different tasks. Specifically, Copilot is faster for routine boilerplate with broader language support, while Claude Code is better for complex reasoning with deeper codebase context and multi-file refactoring.

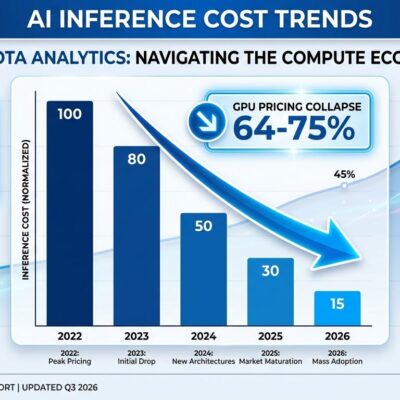

The 2026 benchmarks tell the story: Claude 3.5 completed code 25% faster in complex scenarios and averaged a 30 ms response time versus Copilot’s 50 ms. However, Copilot has broader adoption, lower pricing at $10/month compared to Claude Code’s $15/month, and broader language support. Developer testimonials consistently describe Copilot as “best for quick tasks” and Claude as “better for complex refactoring.”

The existence of genuine trade-offs explains Microsoft’s internal testing. There isn’t a clear winner yet. As a result, many experienced developers are adopting a hybrid approach that mirrors what Microsoft is now doing internally: maintain subscriptions to both tools, use Copilot as the default for routine work, and switch to Claude Code when hitting complex architectural problems. Some rotate tools based on project complexity.

The Takeaway for Developers

Microsoft’s internal testing teaches developers a valuable lesson: Don’t trust vendor marketing. Test tools yourself. If even Microsoft—the company that owns GitHub and sells Copilot—isn’t confident enough to commit exclusively to its own product, developers should feel empowered to evaluate multiple options without vendor loyalty.
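One way to test tools yourself is to keep a simple scorecard while you work. The sketch below is a minimal illustration, not Microsoft's actual feedback process: it borrows the four comparison criteria mentioned earlier (code quality, performance, user experience, workflow integration), while the `Scorecard` class, the `compare` helper, the 1 to 5 rating scale, and the sample ratings are all assumptions made up for this example.

```python
from dataclasses import dataclass, field
from statistics import mean

# The four criteria the article says engineers were asked to compare.
CRITERIA = ("code_quality", "performance", "user_experience", "workflow_integration")


@dataclass
class Scorecard:
    """Hypothetical per-tool scorecard; ratings use an assumed 1-5 scale."""
    tool: str
    ratings: dict = field(default_factory=lambda: {c: [] for c in CRITERIA})

    def rate(self, criterion: str, score: int) -> None:
        if criterion not in self.ratings:
            raise ValueError(f"unknown criterion: {criterion}")
        if not 1 <= score <= 5:
            raise ValueError("score must be between 1 and 5")
        self.ratings[criterion].append(score)

    def averages(self) -> dict:
        # Report only criteria that have at least one rating.
        return {c: mean(v) for c, v in self.ratings.items() if v}


def compare(a: Scorecard, b: Scorecard) -> dict:
    """Name the higher-rated tool for each criterion both tools were rated on."""
    avg_a, avg_b = a.averages(), b.averages()
    result = {}
    for c in CRITERIA:
        if c in avg_a and c in avg_b:
            if avg_a[c] > avg_b[c]:
                result[c] = a.tool
            elif avg_b[c] > avg_a[c]:
                result[c] = b.tool
            else:
                result[c] = "tie"
    return result


if __name__ == "__main__":
    # Placeholder ratings for illustration only; record your own after real tasks.
    copilot = Scorecard("GitHub Copilot")
    claude = Scorecard("Claude Code")
    copilot.rate("performance", 4)
    claude.rate("performance", 3)
    print(compare(copilot, claude))
```

Rating the same set of real tasks with both tools matters more than the exact scale: the point is to compare on your own work rather than on vendor claims.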

The emerging best practice is pragmatic: Use each tool for its strengths. Copilot handles 80% of day-to-day coding tasks efficiently. Meanwhile, Claude Code tackles the 20% of truly difficult problems where deeper reasoning and architectural understanding matter. The $25/month combined cost is reasonable for professional developers who need both capabilities.
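The task-dependent switching described above can be written down as a small routing rule. This is only a sketch under stated assumptions: the `Task` fields, the `pick_tool` function, and the three-file threshold are hypothetical choices for illustration, and the prices are the monthly figures quoted in this article.

```python
from dataclasses import dataclass

# Monthly subscription prices in USD, as quoted in this article.
PRICES = {"GitHub Copilot": 10, "Claude Code": 15}


@dataclass
class Task:
    """Hypothetical description of a coding task."""
    description: str
    files_touched: int = 1
    needs_architecture: bool = False


def pick_tool(task: Task, multi_file_threshold: int = 3) -> str:
    """Default to Copilot; switch to Claude Code for architectural or multi-file work.

    The threshold is an assumed heuristic, not a measured cutoff.
    """
    if task.needs_architecture or task.files_touched >= multi_file_threshold:
        return "Claude Code"
    return "GitHub Copilot"


def combined_monthly_cost() -> int:
    """Cost of subscribing to both tools."""
    return sum(PRICES.values())


if __name__ == "__main__":
    print(pick_tool(Task("add a getter method")))
    print(pick_tool(Task("split the billing module", files_touched=8)))
    print(combined_monthly_cost())
```

In practice the switch is a judgment call rather than a fixed threshold, but writing the rule down makes it easier to check whether the second subscription is earning its cost.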

Key takeaways:

- Microsoft’s internal Claude Code deployment reveals uncertainty about Copilot’s superiority, contradicting external marketing claims

- AI coding tools remain unproven and experimental—even the vendors aren’t sure which is best

- Technical benchmarks show genuine trade-offs: Copilot excels at routine tasks, Claude at complex reasoning

- The hybrid approach (both tools, task-dependent switching) is emerging as the pragmatic strategy

- Evaluate tools independently based on your specific work rather than trusting vendor claims or corporate partnerships

When the company selling you a product won’t commit to using it exclusively itself, that’s all the signal you need to run your own independent evaluation.