Anthropic published research on January 29 showing that developers who used an AI coding assistant while learning scored 17 percentage points lower on a comprehension quiz than those who coded by hand, nearly two letter grades. The randomized controlled trial studied 52 professional developers learning a new programming library: AI users averaged 50% on the quiz, while manual coders averaged 67%. The kicker? AI provided no statistically significant speed improvement, only weaker comprehension.
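
The headline gap is an absolute difference between the two quiz averages, measured in percentage points. Expressed relative to the manual group’s score, the same gap is larger. A quick check using only the two averages reported above (the variable names are illustrative):

```python
# Quiz averages reported for the study, in percent correct.
ai_group = 50.0
manual_group = 67.0

# Absolute gap: the difference between the averages, in percentage points.
gap_points = manual_group - ai_group

# Relative gap: the same difference as a share of the manual group's score.
relative_gap = gap_points / manual_group * 100

print(f"absolute gap: {gap_points:.0f} percentage points")
print(f"relative gap: {relative_gap:.1f}% lower")
```

In other words, the AI group scored 17 points, or roughly a quarter, below the manual group.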

The irony is brutal. Fortune reports that some top engineers at Anthropic and OpenAI now say AI writes 100% of their code, even as Anthropic’s own researchers publish evidence that AI assistance can impair skill formation. If nobody learns to code, who verifies what AI builds?

What Actually Degrades: Debugging, Reading, Understanding

The 17-point comprehension gap isn’t abstract: it hits the skills developers need most. Debugging suffered worst, followed by code reading and conceptual understanding. AI users encountered fewer errors (median 1 versus 3 for manual coders), so they got less practice working through them, and when errors did appear, they struggled to fix them. You can’t debug what you don’t understand.

The productivity myth collapsed too. AI users finished only about two minutes faster, a difference that was not statistically significant. Where did the time go? Query composition. Some participants spent six minutes crafting a single prompt, 17% of the 35-minute task window. Others spent 11 minutes in total on queries, 31% of their time. That’s not a productivity gain. It’s trading coding time for prompt-writing time, then losing comprehension in the deal.
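
The time figures above are simple fractions of the 35-minute window. Laying them side by side shows why the two-minute saving didn’t amount to much: query writing alone could consume several times that. The helper `share_of_window` is illustrative.

```python
TASK_MINUTES = 35  # length of the task window described above


def share_of_window(minutes: float, window: float = TASK_MINUTES) -> float:
    """Return the percentage of the task window consumed by `minutes`."""
    return minutes / window * 100


# Reported durations: one long prompt, total query time, and the time saved.
for label, minutes in [("single prompt", 6), ("all queries", 11), ("time saved", 2)]:
    print(f"{label}: {minutes} min = {share_of_window(minutes):.0f}% of the window")
```

Eleven minutes of query writing is more than five times the two-minute difference in completion time.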

Code reading ability degraded because developers stopped engaging with code mentally. When AI generates output, developers skim rather than parse. The result: surface-level syntax recognition without deep comprehension. This creates “verification debt”—more code to review, less ability to review it effectively.

The Six Patterns: Three Destroy Skills, Three Preserve Them

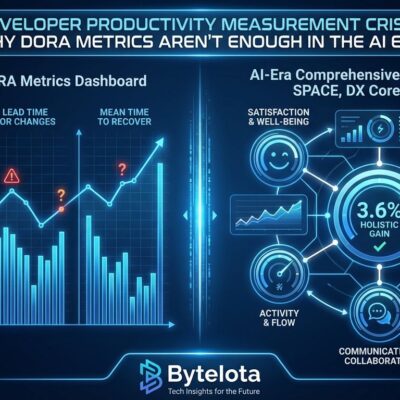

Here’s the breakthrough finding: how you use AI determines whether you learn or lose skills. Anthropic identified six distinct interaction patterns. Three averaged under 40% on the quiz (a failing grade); three scored 65-86% (strong performance).

The three patterns that destroy comprehension:

AI Delegation means relying entirely on code generation. Participants using this pattern finished fastest (about 19.5 minutes) but scored worst on comprehension. The characteristic behavior is “cognitive offloading”: outsourcing the thinking entirely to AI. It feels productive but destroys learning.

Progressive AI Reliance starts with limited questions, then escalates. Developers begin by asking for explanations but gradually shift to full delegation. Participants in this pattern did especially poorly on concepts from the second task, suggesting that AI dependence compounds over time without the developer noticing.

Iterative AI Debugging looks like learning, since participants asked many questions, but it isn’t. They used AI to solve debugging problems rather than to understand them. The result: slower completion, minimal skill retention, and a false sense of mastery.

The three patterns that preserve learning:

Conceptual Inquiry scored highest (86%) and was the fastest of the high-scoring patterns. These developers asked only conceptual questions (“How does async/await work?” rather than “Write an async function for me.”) and wrote the code themselves, using AI for understanding rather than doing. Seven of 52 participants used this pattern, and they dominated the comprehension scores.

Developers using Generation-Then-Comprehension generated code first, then immediately asked follow-up questions to understand it. Execution was slower, but comprehension was high. The key is deliberate learning after generation: don’t just accept AI output; interrogate it.

Hybrid Code-Explanation meant requesting code together with an explanation of it. It was time-intensive but produced comprehensive learning. The difference from AI Delegation: asking “explain this” alongside “write this” keeps the developer cognitively engaged.

The takeaway is stark. Not all AI use destroys skills. Three patterns preserve learning through “cognitive engagement.” Three destroy it through “cognitive offloading.” The choice: use AI for understanding or use AI for doing.
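
One way to apply the taxonomy to your own work is to label each request you make to an assistant and see which pattern a session resembles. The sketch below is a toy self-review aid under stated assumptions: the request labels in `Kind` and the rules in `tag_session` are hypothetical heuristics invented for illustration, not the classification method used in Anthropic’s study.

```python
from enum import Enum


class Kind(Enum):
    """Hypothetical labels for a single request made to an AI assistant."""
    CONCEPT = "conceptual question, no code requested"
    GENERATE = "write this code for me"
    GENERATE_EXPLAINED = "write this code and explain it"
    EXPLAIN_FOLLOWUP = "explain the code you just wrote"
    FIX_ERROR = "fix this error for me"


HIGH_SCORING = {"Conceptual Inquiry", "Generation-Then-Comprehension", "Hybrid Code-Explanation"}
LOW_SCORING = {"AI Delegation", "Progressive AI Reliance", "Iterative AI Debugging"}


def tag_session(log: list[Kind]) -> str:
    """Map a session's request sequence to one of the six patterns.

    Toy heuristics for self-review only; NOT the study's classification method.
    """
    if not log:
        return "No AI use"
    if all(k is Kind.CONCEPT for k in log):
        return "Conceptual Inquiry"  # understanding only, code written by hand
    if all(k is Kind.GENERATE for k in log):
        return "AI Delegation"  # every request hands off the work
    if Kind.GENERATE_EXPLAINED in log:
        return "Hybrid Code-Explanation"  # code and explanation requested together
    gen_positions = [i for i, k in enumerate(log) if k is Kind.GENERATE]
    if gen_positions and all(
        i + 1 < len(log) and log[i + 1] is Kind.EXPLAIN_FOLLOWUP for i in gen_positions
    ):
        return "Generation-Then-Comprehension"  # each generation followed by a "why?"
    if sum(k is Kind.FIX_ERROR for k in log) * 2 > len(log):
        return "Iterative AI Debugging"  # most requests ask the AI to fix errors
    half = len(log) // 2
    if Kind.CONCEPT in log[:half] and all(k is Kind.GENERATE for k in log[half:]):
        return "Progressive AI Reliance"  # questions early, pure delegation later
    return "Unclassified"


def engagement(pattern: str) -> str:
    """Group a pattern by the engagement-versus-offloading split described above."""
    if pattern in HIGH_SCORING:
        return "cognitive engagement"
    if pattern in LOW_SCORING:
        return "cognitive offloading"
    return "unknown"


session = [Kind.CONCEPT, Kind.CONCEPT, Kind.GENERATE, Kind.GENERATE]
pattern = tag_session(session)
print(pattern, "->", engagement(pattern))
```

The example session opens with two conceptual questions and ends in pure delegation, so it is tagged as Progressive AI Reliance, an offloading pattern. The rules are checked in a fixed order, so a real session that mixes behaviors will land on whichever rule matches first; treat the output as a prompt for reflection, not a measurement.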

The Verification Crisis: 96% Distrust AI Code, Only 48% Always Check

Here’s the industry’s dirty secret: 96% of developers don’t fully trust that AI-generated code is functionally correct, yet only 48% say they always verify it before committing. That’s not a minor gap; it’s a crisis. Nearly all developers (95%) spend some effort reviewing, testing, and correcting AI output, and 59% rate that effort as moderate or substantial. So why doesn’t verification happen consistently?

Time pressure, mostly. The same deadlines that make AI appealing also discourage thorough review. Overconfidence plays a role too: AI usually works, so developers skip verification until it spectacularly doesn’t. But the core problem runs deeper: verifying code can be harder than writing it yourself. To review code effectively, you need to reconstruct the mental model its author had. When AI writes the code, you’re reconstructing a model you never built.

This creates a competency feedback loop that spirals downward. Stage one: Developers adopt AI for speed and convenience, feeling productive. Stage two: They stop practicing manual coding, and skills decay—Anthropic’s “Progressive AI Reliance” pattern. Stage three: Degraded skills mean they can’t verify AI output effectively, so they rely more heavily on AI. Stage four: Nobody can fix the systems AI builds. Verification debt compounds. Software quality degrades.

The paradox bites hard: AI assistance requires strong foundational skills to use effectively, but using AI prevents developers from building those skills. You need debugging chops to catch AI mistakes, but delegating debugging to AI erodes debugging chops. It’s a snake eating its tail.
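
The two survey figures at the start of this section can be combined. Assuming both percentages describe the same pool of respondents (the raw data isn’t given here), inclusion-exclusion puts a floor on how many developers distrust AI code and still don’t always check it. The helper name `min_overlap` is illustrative.

```python
def min_overlap(share_a: float, share_b: float) -> float:
    """Smallest possible percentage of respondents in both groups, given only each
    group's percentage (inclusion-exclusion: |A and B| >= |A| + |B| - 100)."""
    return max(0.0, share_a + share_b - 100.0)


distrust = 96.0                   # distrust the functional correctness of AI code
not_always_verify = 100.0 - 48.0  # don't always verify before committing

floor = min_overlap(distrust, not_always_verify)
print(f"At least {floor:.0f}% distrust AI code yet don't always verify it.")
```

With 96% and 52%, the floor is 48%: at minimum, roughly half of all respondents commit code they don’t fully trust without always checking it.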

Junior Developer Pipeline Drying Up

The employment data tells the story. A Harvard study of 62 million workers found that junior developer employment drops 9-10% within six quarters after companies adopt AI. Separately, employment for developers aged 22-25 has declined roughly 20% from its late 2022 peak. Senior developer employment? Barely budged.

Companies justify this with productivity logic: if AI handles entry-level tasks, why hire juniors? The problem is the talent pipeline. Junior years aren’t just about output—they’re about skill formation. You can’t become a senior developer without junior experience. When companies hire 9-10% fewer entry-level developers, where do future senior developers come from in five to ten years? The industry is eating its seed corn.

GitHub’s research on Copilot adds irony. Junior developers see the largest productivity gains from AI tools—and the most vulnerability to skill degradation. They accept more AI suggestions, ask fewer questions, and build less foundational knowledge. The result: new developers “unable to explain how or why their code works,” as IT Pro reported. They generate code without understanding, trading deep comprehension for quick fixes.

Some companies are adapting. Onboarding programs now include “How to Work with AI Assistance” modules. Mentors review AI-generated code to ensure juniors learn the “why” behind implementations. Some require manual coding periods before granting AI access. But these efforts fight against economic incentives. Training costs money. AI-boosted juniors ship faster. The short-term ROI favors delegation over learning.

What Developers Should Do Now

The Anthropic research provides the answer: use Conceptual Inquiry instead of AI Delegation. Ask “How does X work?” not “Write X for me.” Request explanations, not implementations. Use AI as a teacher, not a coder. Then implement the code yourself once you understand it.

If you do generate code, immediately follow up with questions. “Explain what this code does line by line.” “What are the trade-offs of this approach?” “What could go wrong here?” That’s Generation-Then-Comprehension, one of the patterns that scored 65% or higher despite using AI for code generation. The difference: cognitive engagement after generation.

Maintain manual coding practice the way pilots practice manual landings. Dedicate 20-30% of your coding time to writing without AI assistance. Focus on debugging and code reading, the skills Anthropic’s study found degrade most, and on refactoring. When you hit a bug, resist the urge to paste it into Claude. Debug it yourself. You’re not losing time; you’re maintaining skills that will save far more time when AI inevitably produces garbage you need to fix.

Always verify AI output. Don’t join the 52% who don’t always check before committing. Treat AI as a second pair of eyes, not an oracle. Test thoroughly. Understand every line. If you can’t explain how code works, you don’t own it—AI does.
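
Verifying means more than skimming the diff. A minimal sketch of the habit, assuming a hypothetical AI-suggested helper `chunk_suggested` that looks plausible but silently drops data: a handful of edge-case checks exposes the bug before it is committed. The function names and test cases are illustrative, not taken from any study.

```python
def chunk_suggested(items: list, size: int) -> list[list]:
    """Hypothetical AI suggestion: split `items` into lists of length `size`.
    Bug: the range stops early, so a trailing partial chunk is silently dropped."""
    return [items[i:i + size] for i in range(0, len(items) - size + 1, size)]


def chunk_reviewed(items: list, size: int) -> list[list]:
    """Reviewed version: keeps the trailing partial chunk and rejects bad sizes."""
    if size < 1:
        raise ValueError("size must be a positive integer")
    return [items[i:i + size] for i in range(0, len(items), size)]


def check(chunk) -> list[str]:
    """Run edge-case checks a reviewer might write; return descriptions of failures."""
    failures = []
    cases = [
        (([1, 2, 3, 4], 2), [[1, 2], [3, 4]]),          # happy path
        (([1, 2, 3, 4, 5], 2), [[1, 2], [3, 4], [5]]),  # remainder must be kept
        (([], 3), []),                                  # empty input
    ]
    for (items, size), expected in cases:
        got = chunk(items, size)
        if got != expected:
            failures.append(f"chunk({items}, {size}) -> {got}, expected {expected}")
    # Property check: every input element must survive chunking exactly once, in order.
    data = list(range(10))
    if [x for part in chunk(data, 3) for x in part] != data:
        failures.append("flattened output does not match input")
    return failures


print("suggested:", check(chunk_suggested))
print("reviewed:", check(chunk_reviewed))
```

The suggested version passes the happy-path case, which is exactly what a quick glance would test, but fails the remainder case and the round-trip property; the reviewed version passes all four checks. Writing the checks also forces you to understand what the code is supposed to do, which is the point.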

For companies: make code review mandatory for all AI-generated output. Invest in training programs on “how to use AI without losing skills.” Structure onboarding as hybrid—manual coding first, then AI assistance. Assess developers on understanding, not just output speed. Optimize for 10-year talent pipelines, not quarterly velocity metrics.

The harsh truth: AI isn’t going away. The choice isn’t “use AI or don’t.” It’s “use AI effectively or use AI poorly.” Developers who master Conceptual Inquiry will thrive because they can supervise AI, catch mistakes, and architect systems beyond AI’s current capabilities. Those stuck in AI Delegation become obsolete—not because AI replaced them, but because they replaced themselves.

The three high-scoring patterns show you can use AI assistance without destroying comprehension. It just requires intentionality. The 17-point gap isn’t inevitable. It’s a choice.