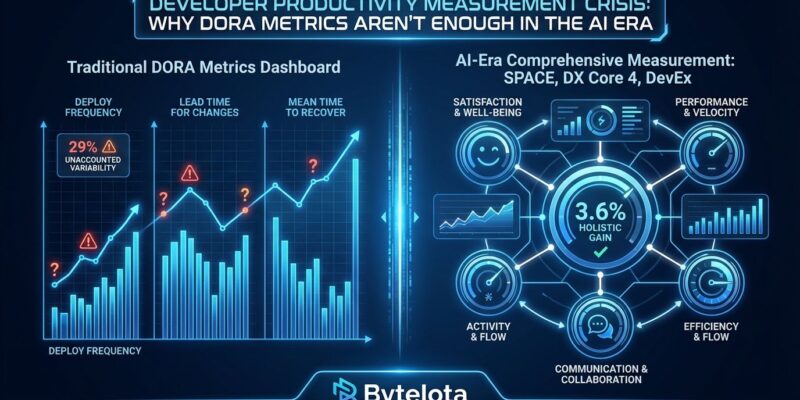

By one estimate, AI now writes 29% of software code, up from just 5% in 2022, yet developer productivity has increased by only 3.6%. This gap exposes a measurement crisis that’s costing organizations millions. Engineering teams tracking only DORA metrics see their numbers climb while actual productivity stagnates. The problem isn’t AI; it’s that traditional DevOps metrics were never designed to capture how AI fundamentally reshapes development work.

This matters now because 2026 marks the inflection point at which AI coding has moved from experimental to mainstream. With 84% of developers using AI tools, organizations are making critical decisions based on incomplete data. The cost is measurable: 77% of organizations can’t track their AI ROI, 40% of agentic AI projects fail, and developer burnout intensifies as teams optimize for the wrong numbers.

The DORA Amplifier Effect: When Good Metrics Go Bad

DORA metrics (deployment frequency, lead time for changes, change failure rate, and mean time to restore) don’t lie. They mislead. According to the 2024 DORA report, every 25% increase in AI adoption correlates with an estimated 1.5% drop in delivery throughput and a 7.2% drop in delivery stability. Teams can still see more deployments and faster lead times on their dashboards while quality erodes beneath the surface.
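To make the point concrete, here is roughly what the four metrics consume. This is a minimal sketch, not the official DORA calculation: the `Deployment` record, the `dora_metrics` helper, the use of medians, and the choice to measure restore time from the failing deploy (rather than from detection) are all assumptions for illustration. Notice that nothing in the input describes review effort, rework, or code quality beyond a binary failure flag, which is why these numbers can improve while those things get worse.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    commit_time: datetime                     # first commit of the change
    deploy_time: datetime                     # when the change reached production
    failed: bool                              # did it cause a failure in production?
    restored_time: Optional[datetime] = None  # when service was restored, if it failed


def dora_metrics(deploys: list[Deployment], window_days: int) -> dict[str, float]:
    """Compute the four DORA metrics for one service over a reporting window."""
    if not deploys or window_days <= 0:
        raise ValueError("need deployments and a positive window")
    lead_times_h = [(d.deploy_time - d.commit_time).total_seconds() / 3600 for d in deploys]
    failures = [d for d in deploys if d.failed]
    # Simplification: restore time is measured from the failing deploy, not from detection.
    restore_times_h = [
        (d.restored_time - d.deploy_time).total_seconds() / 3600
        for d in failures
        if d.restored_time is not None
    ]
    return {
        "deploys_per_day": len(deploys) / window_days,
        "median_lead_time_h": median(lead_times_h),
        "change_failure_rate": len(failures) / len(deploys),
        "median_time_to_restore_h": median(restore_times_h) if restore_times_h else 0.0,
    }


# Usage: two deploys in a week, one of which failed and was restored an hour later.
t0 = datetime(2026, 1, 5, 9, 0)
deploys = [
    Deployment(t0, t0 + timedelta(hours=4), failed=False),
    Deployment(t0, t0 + timedelta(hours=8), failed=True, restored_time=t0 + timedelta(hours=9)),
]
print(dora_metrics(deploys, window_days=7))
```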

The problem is amplification. AI doesn’t fix broken processes; it magnifies them. High-performing organizations with solid pipelines and documentation get better. Struggling teams with flaky tests and fragile infrastructure watch AI “neutralize any gains” by flooding code review queues with plausible-looking code that hides subtle bugs. DORA metrics can’t distinguish between these scenarios.

Consider the reality: 30% of developers don’t trust AI-generated output even as they use it daily. Deployment frequency can climb even when the underlying processes haven’t changed. A fast recovery time means little when the same problems recur because AI-generated fixes clear immediate issues but create new ones downstream. Traditional DORA benchmarks weren’t built for a world where AI works on multiple tasks simultaneously or where the bottleneck shifts from writing code to validating it.
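One way to expose the recurrence problem is to report a recurrence rate next to time to restore. The sketch below assumes each incident carries a root-cause label from post-incident review; the `Incident` record, the `root_cause` field, and the `recovery_report` helper are hypothetical and not part of DORA’s definitions.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Incident:
    root_cause: str            # hypothetical label assigned in post-incident review
    minutes_to_restore: float


def recovery_report(incidents: list[Incident]) -> dict[str, float]:
    """Report mean time to restore alongside how often root causes repeat."""
    if not incidents:
        raise ValueError("need at least one incident")
    mttr = sum(i.minutes_to_restore for i in incidents) / len(incidents)
    counts = Counter(i.root_cause for i in incidents)
    # Every occurrence of a root cause after its first one counts as a repeat.
    repeats = sum(n - 1 for n in counts.values())
    return {"mttr_minutes": mttr, "recurrence_rate": repeats / len(incidents)}


incidents = [
    Incident("cache-config", 10),
    Incident("cache-config", 12),
    Incident("null-handling", 30),
    Incident("cache-config", 8),
]
print(recovery_report(incidents))  # → {'mttr_minutes': 15.0, 'recurrence_rate': 0.5}
```

A 15-minute MTTR looks healthy on a dashboard; half of all incidents being repeats of an earlier root cause does not.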

More deployments don’t equal more value. Faster lead time doesn’t mean sustainable productivity. Organizations need metrics that capture what DORA misses: developer experience, cognitive load, code quality beyond test coverage, and actual business value delivered.

The Multi-Framework Solution: Measuring What Actually Matters

The fix isn’t abandoning DORA—it’s complementing it with frameworks built for the AI era. Three have emerged as industry standards, each addressing what traditional metrics overlook.

The SPACE framework, from researchers at GitHub, Microsoft, and the University of Victoria, measures five dimensions: satisfaction and well-being, performance, activity, communication and collaboration, and efficiency and flow. Its core principle: “Productivity cannot be reduced to a single dimension.” The key to implementation isn’t measuring all five; it’s picking the three that matter most for your context and using them to balance velocity with sustainability.
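A minimal sketch of that balancing idea, assuming each chosen dimension has already been reduced to a normalized 0 to 1 score per period. The shorthand dimension keys, the scoring scheme, the tolerance, and the `space_tradeoffs` helper are hypothetical; SPACE itself does not prescribe them. The check flags any selected dimension that declined, so a rise in activity cannot hide a drop elsewhere.

```python
SPACE_DIMENSIONS = {"satisfaction", "performance", "activity", "communication", "efficiency"}


def space_tradeoffs(
    selected: set[str],
    before: dict[str, float],
    after: dict[str, float],
    tolerance: float = 0.05,
) -> list[str]:
    """Return selected dimensions whose normalized score fell by more than `tolerance`."""
    unknown = selected - SPACE_DIMENSIONS
    if unknown:
        raise ValueError(f"not SPACE dimensions: {sorted(unknown)}")
    if len(selected) != 3:
        raise ValueError("choose three dimensions")
    return sorted(d for d in selected if after[d] < before[d] - tolerance)


selected = {"satisfaction", "activity", "efficiency"}
before = {"satisfaction": 0.70, "activity": 0.50, "efficiency": 0.60}
after = {"satisfaction": 0.60, "activity": 0.80, "efficiency": 0.58}
print(space_tradeoffs(selected, before, after))  # → ['satisfaction']
```

Activity jumped, but the check surfaces the satisfaction drop that a velocity-only view would miss.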

The DX Core 4 framework synthesizes DORA, SPACE, and DevEx research into a practical system deployable “in weeks, not months.” It balances four dimensions: speed (including DORA metrics), effectiveness (measured via the Developer Experience Index), quality (beyond failure rates), and business impact (revenue per engineer, feature vs. maintenance time). Organizations using it report 3–12% efficiency gains, 14% more time spent on feature development, and 15% higher engagement. Critically, it discourages metric gaming by weighting throughput equally with developer experience.
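Why equal weighting resists gaming can be shown with a toy composite. This is a hypothetical illustration, not DX’s published scoring method: it assumes each of the four dimensions has been normalized to a 0 to 1 score, and the `core4_composite` helper and dimension keys are invented for the example.

```python
CORE4_DIMENSIONS = ("speed", "effectiveness", "quality", "business_impact")


def core4_composite(scores: dict[str, float]) -> float:
    """Equal-weight average of four dimension scores, each normalized to [0, 1]."""
    for dim in CORE4_DIMENSIONS:
        if dim not in scores:
            raise ValueError(f"missing dimension: {dim}")
        if not 0.0 <= scores[dim] <= 1.0:
            raise ValueError(f"{dim} must be normalized to [0, 1]")
    return sum(scores[dim] for dim in CORE4_DIMENSIONS) / len(CORE4_DIMENSIONS)


baseline = {"speed": 0.5, "effectiveness": 0.5, "quality": 0.5, "business_impact": 0.5}
# Speed is pushed up while effectiveness and quality slip.
gamed = {"speed": 0.9, "effectiveness": 0.4, "quality": 0.3, "business_impact": 0.5}
print(core4_composite(baseline), round(core4_composite(gamed), 3))
```

Speed rose by 0.4, yet the composite moves only from 0.5 to about 0.525, because the losses in effectiveness and quality offset most of the gain.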

The DevEx framework, published in ACM Queue, focuses on three core elements: feedback loops, cognitive load, and flow state. Its key insight: “Poor feedback loops increase cognitive load, which disrupts flow state.” Where DORA measures system performance, DevEx captures developer perceptions: the attitudes, feelings, and frustrations that predict burnout before it shows up in turnover data.
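Because DevEx relies on perceptions, its raw data is usually survey responses. A minimal sketch of summarizing them, assuming a 1 to 5 scale with every question worded so that a higher answer is better; the element keys, the “4 or 5 is favorable” cutoff, and the `devex_favorable_shares` helper are hypothetical choices, not part of the published framework.

```python
DEVEX_ELEMENTS = ("feedback_loops", "cognitive_load", "flow_state")


def devex_favorable_shares(responses: list[dict[str, int]]) -> dict[str, float]:
    """Share of favorable answers (4 or 5 on a 1-5 scale) for each DevEx element.

    Assumes every question is worded so that a higher answer is better, e.g.
    "I rarely lose time to unclear code" for cognitive load.
    """
    if not responses:
        raise ValueError("no survey responses")
    shares = {}
    for element in DEVEX_ELEMENTS:
        answers = [r[element] for r in responses]
        if any(not 1 <= a <= 5 for a in answers):
            raise ValueError(f"{element} answers must be on a 1-5 scale")
        shares[element] = sum(a >= 4 for a in answers) / len(answers)
    return shares


responses = [
    {"feedback_loops": 4, "cognitive_load": 2, "flow_state": 5},
    {"feedback_loops": 5, "cognitive_load": 3, "flow_state": 4},
    {"feedback_loops": 2, "cognitive_load": 2, "flow_state": 3},
    {"feedback_loops": 4, "cognitive_load": 4, "flow_state": 2},
]
print(devex_favorable_shares(responses))
# → {'feedback_loops': 0.75, 'cognitive_load': 0.25, 'flow_state': 0.5}
```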

What should you measure? Team outcomes, business value, developer experience, full cycle time, and AI adoption and AI impact, tracked separately. What should you avoid? Lines of code, commit counts, story points, and individual velocity. As research across frameworks confirms: “Individual productivity metrics mislead; team performance provides actionable insights.”
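Reporting at the team level can be enforced in code rather than left to policy. The sketch below is one hypothetical approach: the `PullRequest` record, the `team_cycle_times` helper, and the three-author minimum are assumptions. Authors are used only to decide whether a team is large enough that its number does not effectively expose one person.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import median


@dataclass
class PullRequest:
    team: str
    author: str
    cycle_time_hours: float  # first commit to production


def team_cycle_times(prs: list[PullRequest], min_authors: int = 3) -> dict[str, float]:
    """Median cycle time per team; authors are used only to enforce a minimum group size."""
    by_team: dict[str, list[PullRequest]] = defaultdict(list)
    for pr in prs:
        by_team[pr.team].append(pr)
    result = {}
    for team, team_prs in by_team.items():
        if len({pr.author for pr in team_prs}) < min_authors:
            continue  # too few people: a "team" number would really be individual data
        result[team] = median([pr.cycle_time_hours for pr in team_prs])
    return result


prs = [
    PullRequest("payments", "a", 10), PullRequest("payments", "b", 20),
    PullRequest("payments", "c", 30), PullRequest("payments", "a", 40),
    PullRequest("search", "d", 5), PullRequest("search", "e", 7),
]
print(team_cycle_times(prs))  # → {'payments': 25.0}
```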

AI Changes All Dimensions, Not Just Speed

Here’s where AI breaks traditional assumptions: it doesn’t just accelerate coding—it fundamentally changes where developers spend time and where bottlenecks form.

Less experienced developers use AI for 37% of their code, compared with 27% for experienced developers, yet the productivity gains come solely from the experienced cohort. The implication: AI helps existing employees more than newcomers and may reduce entry-level hiring. As one study bluntly noted, AI tools “may help you in your existing job” but “won’t help you land a new one.”

Time allocation has shifted. Developers spend less time writing initial code and more reviewing and validating AI output. Cognitive load increases from constant context-switching and verification work. Traditional productivity metrics miss this entirely—they count the code written, not the mental overhead of ensuring it’s correct.
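Part of this shift is visible in timestamps teams already have. A minimal sketch, assuming each change records when work started, when review was requested, when it was approved, and when it shipped; the `ChangeTimeline` record and `phase_shares` helper are hypothetical. Wall-clock time cannot measure cognitive load, and the review phase lumps waiting together with active reviewing, but it does show where elapsed time goes.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class ChangeTimeline:
    first_commit: datetime
    review_requested: datetime
    approved: datetime
    deployed: datetime


def phase_shares(changes: list[ChangeTimeline]) -> dict[str, float]:
    """Fraction of total elapsed cycle time spent in each phase, across all changes."""
    totals = {"authoring": 0.0, "review": 0.0, "release": 0.0}
    for c in changes:
        totals["authoring"] += (c.review_requested - c.first_commit).total_seconds()
        totals["review"] += (c.approved - c.review_requested).total_seconds()  # waiting + reviewing
        totals["release"] += (c.deployed - c.approved).total_seconds()
    overall = sum(totals.values())
    if overall <= 0:
        raise ValueError("no elapsed time to split")
    return {phase: secs / overall for phase, secs in totals.items()}


change = ChangeTimeline(
    first_commit=datetime(2026, 1, 5, 9, 0),
    review_requested=datetime(2026, 1, 5, 11, 0),
    approved=datetime(2026, 1, 5, 17, 0),
    deployed=datetime(2026, 1, 5, 18, 0),
)
shares = phase_shares([change])
print({phase: round(s, 2) for phase, s in shares.items()})
# → {'authoring': 0.22, 'review': 0.67, 'release': 0.11}
```

Tracked over time, a growing review share alongside a shrinking authoring share is the pattern described above: writing got faster, validating did not.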

The economic disconnect is stark. With 29% of code AI-assisted, even a 3.6% productivity increase represents an estimated $23–38 billion in annual value. Yet AI writes 30% of Microsoft’s code and over 25% of Google’s, so why isn’t the productivity gain higher? Because organizations optimize for the wrong metrics and build on broken processes. Measuring only deployment frequency while ignoring code review bottlenecks and validation overhead is like celebrating sprint speed while your relay team fumbles every handoff.

The Cost of Staying Blind

This isn’t academic. Organizations clinging to DORA-only measurement are making costly mistakes right now. 77% of organizations can’t measure their AI ROI, wasting millions on tools they can’t evaluate. 40% of agentic AI projects fail, often because success criteria are wrong from the start. Developer burnout intensifies as teams optimize for metrics that create perverse incentives: ship faster, regardless of quality or sustainability.

Meanwhile, 300+ organizations using comprehensive frameworks report 3–12% efficiency gains. The competitive gap isn’t about working harder; it’s about measuring and optimizing for the right things.

The path forward is clear: start with one complementary framework. Don’t measure all dimensions at once—pick three that matter for your context. Track AI adoption and impact separately. Never tie throughput metrics to individual performance reviews. Balance speed with effectiveness, quality, and business impact.
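Tracking adoption and impact separately means reporting how much AI is used as one number and how outcomes differ as others, rather than treating adoption itself as success. A minimal sketch, assuming each merged change carries an `ai_assisted` flag (from tool telemetry or self-report) and a rework flag; the `MergedChange` record and `adoption_and_impact` helper are hypothetical. There is deliberately no author field, in line with keeping these numbers out of individual reviews.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class MergedChange:
    ai_assisted: bool        # hypothetical flag, e.g. from tool telemetry or self-report
    cycle_time_hours: float
    reworked: bool           # reverted or substantially rewritten after merge


def adoption_and_impact(changes: list[MergedChange]) -> dict[str, float]:
    """Report AI adoption separately from impact metrics compared across groups."""
    ai = [c for c in changes if c.ai_assisted]
    other = [c for c in changes if not c.ai_assisted]
    if not ai or not other:
        raise ValueError("need both AI-assisted and other changes to compare")

    def rework_rate(group: list[MergedChange]) -> float:
        return sum(c.reworked for c in group) / len(group)

    return {
        # Adoption: how much AI is used.
        "adoption": len(ai) / len(changes),
        # Impact: how outcomes differ between the two groups.
        "median_cycle_ai_h": median([c.cycle_time_hours for c in ai]),
        "median_cycle_other_h": median([c.cycle_time_hours for c in other]),
        "rework_rate_ai": rework_rate(ai),
        "rework_rate_other": rework_rate(other),
    }


changes = [
    MergedChange(True, 10, True),
    MergedChange(True, 6, False),
    MergedChange(False, 12, False),
    MergedChange(False, 14, False),
]
print(adoption_and_impact(changes))
```

In this toy data the AI-assisted changes ship faster but are reworked more often, exactly the kind of trade-off a single adoption number hides. The comparison is correlational: teams may reach for AI on different kinds of tasks, so treat gaps between groups as prompts for investigation rather than proof of cause.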

DORA metrics served us well in the pre-AI era. But relying on them alone in 2026 isn’t just incomplete—it’s negligent. The frameworks to measure what actually matters exist today. The measurement crisis is real, but it’s solvable. The question is whether your organization will adapt before the numbers on your dashboard stop matching reality.