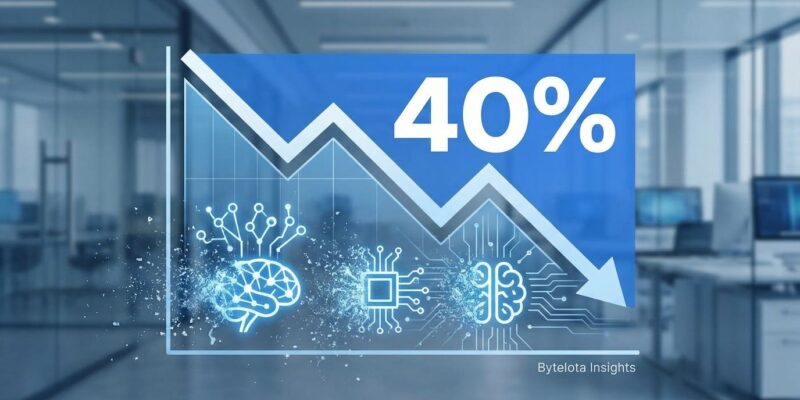

Gartner predicts that over 40% of agentic AI projects will be canceled by the end of 2027 due to escalating costs, unclear business value, and inadequate risk controls. The research firm announced in June 2025 that AI agents sit at the “peak of inflated expectations” on its Hype Cycle and will slide into the “trough of disillusionment” during 2026. That is a reality check for enterprises betting billions on autonomous AI systems that promise to revolutionize operations but often can’t integrate with legacy infrastructure or prove measurable ROI.

The problems run deeper than technical limitations. Only 130 of thousands of vendors claiming “agentic AI” capabilities are legitimate, according to Gartner. The rest engage in “agent washing”—rebranding chatbots and RPA as autonomous agents. Meanwhile, 70% of developers report integration problems with existing systems, and 42% of AI projects show zero ROI due to measurement failures.

Agent Washing Crisis: Only 130 Real Vendors

Gartner estimates that only about 130 of the thousands of agentic AI vendors are genuine. The rest engage in “agent washing”: rebranding existing products such as AI assistants, robotic process automation (RPA), and chatbots that lack substantial agentic capabilities. Vendors market call recording features as “transcription agents” and CRM integrations as “activity mapping agents” even though these tools rely on fixed rules and constant human direction.

Real agents can plan multi-step tasks, use external tools, and adapt when things break. Chatbots can’t. The distinction matters because enterprises are making investment decisions based on vendor claims that don’t match reality. Anushree Verma, Gartner Senior Director Analyst, states: “Most agentic AI projects are currently early-stage experiments or proof of concepts that are mostly driven by hype and are often misapplied.”

Buying rebranded chatbots in the belief that they are autonomous agents leads to failed deployments and wasted budget, and it contributes to the 40% cancellation rate. Developers need to evaluate vendors based on genuine capabilities: Can it decompose complex goals into actionable steps? Does it orchestrate multiple systems independently? Can it adapt its approach when encountering obstacles? If not, it’s agent washing.
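The three evaluation questions can be written down as a simple checklist. The sketch below is only an illustration: `VendorAssessment` and `is_agent_washing` are hypothetical names, and the strict all-three rule is an assumption drawn from the "if not, it's agent washing" test above, not a Gartner methodology.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VendorAssessment:
    """Hypothetical record of what a vendor demo actually showed."""
    name: str
    decomposes_goals: bool      # breaks a complex goal into actionable steps
    orchestrates_systems: bool  # drives multiple systems without human direction
    adapts_to_obstacles: bool   # changes approach when a step fails


def is_agent_washing(vendor: VendorAssessment) -> bool:
    """Return True if any of the three capabilities is missing."""
    return not (
        vendor.decomposes_goals
        and vendor.orchestrates_systems
        and vendor.adapts_to_obstacles
    )


# Illustrative, made-up vendors.
rebranded_chatbot = VendorAssessment("TranscribeBot", False, False, False)
genuine_agent = VendorAssessment("OpsAgent", True, True, True)

if __name__ == "__main__":
    for vendor in (rebranded_chatbot, genuine_agent):
        verdict = "agent washing" if is_agent_washing(vendor) else "agentic"
        print(f"{vendor.name}: {verdict}")
```

In practice each flag would be filled in from a hands-on proof of concept rather than from marketing material.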

The Trust Crisis: Why Enterprises Can’t Deploy

LLM-based agents suffer from hallucinations—wrong answers delivered confidently—and black-box reasoning that can’t explain decisions. Gartner puts it bluntly: “You cannot automate something that you don’t trust, and many of these AI agents are LLM-based right now, which means that their brains are generative AI models, and there is an uncertainty and reliability concern there.”

The problem isn’t theoretical. Hallucinations happen frequently, and in enterprise settings, mistakes aren’t always obvious until damage is done. Leaders can’t defend decisions they can’t trust or verify. As a result, only 1 in 10 use cases reached production in the last 12 months due to trust concerns.

Trust is the fundamental barrier blocking production deployment. Enterprises can tolerate mistakes in dev/test but not in mission-critical systems. Until agents provide explainable reasoning and reliable outputs, they’ll remain in experimental POC limbo rather than in production workflows that drive business value. The gap between agent capabilities and enterprise reliability standards is why Gartner predicts 2026 will be the disillusionment year.

Integration Hell: 70% of Developers Hit the Wall

70% of developers say they’re having problems integrating AI agents with existing systems. The fundamental issue is architectural incompatibility: agents are non-deterministic (different outputs for the same input), while legacy platforms are deterministic (predictable, repeatable). This mismatch isn’t a minor technical hurdle; it’s the primary reason projects get canceled.
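One way to picture the mismatch is a validation boundary: whatever the agent emits is checked against a strict schema before it reaches the deterministic system. This is a minimal sketch and not a technique described in the Gartner research. The command shape, the `ALLOWED_ACTIONS` set, and the function name are all hypothetical.

```python
from dataclasses import dataclass
from decimal import Decimal, InvalidOperation

# Hypothetical set of operations the legacy system accepts.
ALLOWED_ACTIONS = {"create_invoice", "cancel_invoice"}


@dataclass(frozen=True)
class LegacyCommand:
    """Strict, predictable shape that the deterministic system expects."""
    action: str
    invoice_id: str
    amount: Decimal


def to_legacy_command(agent_output: dict) -> LegacyCommand:
    """Validate free-form agent output; raise ValueError on anything unexpected."""
    action = agent_output.get("action")
    if action not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {action!r}")

    invoice_id = str(agent_output.get("invoice_id", "")).strip()
    if not invoice_id.isalnum():
        raise ValueError("invoice_id must be non-empty and alphanumeric")

    try:
        amount = Decimal(str(agent_output.get("amount")))
    except InvalidOperation:
        raise ValueError("amount is not a number") from None
    if not amount.is_finite() or amount < 0:
        raise ValueError("amount must be a finite, non-negative number")

    # Normalize to two decimal places so equal inputs yield equal commands.
    return LegacyCommand(action, invoice_id, amount.quantize(Decimal("0.01")))
```

The agent can still vary its output from run to run, but only commands that pass the same fixed checks ever reach the legacy side.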

Legacy systems like AS/400, IBM z14, or SAP ECC operate in batch cycles with no real-time triggers, event listeners, or execution endpoints. AI agents need real-time data and execution capabilities to act autonomously. Without them, they can only observe, not operate. In addition, 42% of enterprises need access to 8+ data sources to deploy agents successfully, and 79% expect data challenges to impact rollouts.

The failure rate reflects this reality: 42% of AI initiatives failed in 2025, up from 17% in 2024. Most enterprises run on decades-old systems that power mission-critical operations. Bolting modern autonomous agents onto 1990s infrastructure without modernization guarantees failure. API-first architecture or legacy system modernization is a prerequisite to agent success, and most organizations haven’t prioritized either.

Wrong Metrics: Why 42% Show Zero ROI

Traditional ROI metrics focused on cost savings or headcount reduction fall short when assessing agentic AI. 42% of AI projects show zero ROI, often because they lack a meaningful measurement framework: success is defined in vague terms like “improved efficiency” without quantifiable proof. Too often, enterprises also measure agents against narrow cost-cutting metrics and declare failure even when agents deliver real value through productivity gains, proactive issue prevention, and revenue growth.

Meanwhile, 61% of CFOs say AI agents are changing how they evaluate ROI, measuring success beyond traditional metrics to encompass broader business outcomes. Successful deployments show 5x-10x ROI when measuring productivity, not just cost-cutting. In fact, 74% achieve ROI within the first year when using comprehensive frameworks that track innovation velocity, risk reduction, revenue growth, and strategic agility—not just ticket costs or handle time.

The measurement problem creates a self-fulfilling prophecy: projects without proper success metrics get canceled despite actual business impact. Traditional measures like average call handle time are losing relevance as AI changes how work flows across channels and agents. Enterprises need new frameworks that capture the full strategic value of agentic AI.
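A small worked calculation shows how the choice of metric can flip the verdict on the same project. Every figure below is invented for illustration and does not come from the surveys cited above. The `roi` helper uses the standard definition, net benefit divided by cost.

```python
def roi(total_benefit: float, total_cost: float) -> float:
    """Return ROI as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


# Hypothetical annual figures for one agent deployment (illustrative only).
ANNUAL_COST = 100_000
BENEFITS = {
    "cost_savings": 60_000,         # the only line a narrow framework counts
    "productivity_gains": 250_000,  # hours returned to staff, valued in dollars
    "revenue_growth": 190_000,      # attributed incremental revenue
}

narrow_roi = roi(BENEFITS["cost_savings"], ANNUAL_COST)
broad_roi = roi(sum(BENEFITS.values()), ANNUAL_COST)

if __name__ == "__main__":
    print(f"cost savings only:    {narrow_roi:+.0%}")  # -40%
    print(f"all tracked benefits: {broad_roi:+.0%}")   # +400%
```

Under the narrow metric this made-up project looks like a loss and a candidate for cancellation. Under the broader framework it clearly pays for itself. The hard part in practice is attributing the productivity and revenue figures credibly.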

What Works: Agentic AI Success in Constrained Domains

Despite the grim failure prediction, successful agent deployments exist in constrained, well-governed domains. IT operations shows the clearest ROI: 30-50% MTTR reduction, 20-40% fewer tickets via proactive monitoring, and SLA compliance improvements from 85% to 95%+. Finance operations (invoice processing, reconciliation), customer support (routine issue resolution), and sales automation all demonstrate measurable value when properly scoped.

Real success stories prove the model works under the right conditions. Connecteam’s AI SDR “Julian” cut no-shows by 73%, reactivated dormant leads, and doubled call coverage without expanding the sales team. Mercedes-Benz deployed multi-agent orchestration in e-commerce that drove 20% growth in new business. Fujitsu and Genshukai saved 400+ hours in hospital management with $1.4M revenue uplift.

The pattern is clear: narrow scope, clear boundaries, measurable metrics (MTTR, SLA compliance, ticket volume), and hybrid approaches win. Use agents for routine decisions, automation for deterministic workflows, assistants for research, and humans for exceptions. Build governance models with escalation paths, human oversight, and rollback capabilities. Focus on production-ready domains like IT ops, employee service, finance operations, and support workflows.
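The hybrid division of labor with an escalation path can be sketched as a small routing table. The task categories mirror the sentence above. The confidence score and the 0.8 threshold are assumptions added for illustration, as are all the names.

```python
from enum import Enum


class Handler(Enum):
    AGENT = "agent"
    AUTOMATION = "automation"
    ASSISTANT = "assistant"
    HUMAN = "human"


class TaskKind(Enum):
    ROUTINE_DECISION = "routine_decision"
    DETERMINISTIC_WORKFLOW = "deterministic_workflow"
    RESEARCH = "research"
    EXCEPTION = "exception"


# Default owner for each kind of work, following the pattern in the text.
ROUTES = {
    TaskKind.ROUTINE_DECISION: Handler.AGENT,
    TaskKind.DETERMINISTIC_WORKFLOW: Handler.AUTOMATION,
    TaskKind.RESEARCH: Handler.ASSISTANT,
    TaskKind.EXCEPTION: Handler.HUMAN,
}


def route(kind: TaskKind, confidence: float = 1.0,
          min_confidence: float = 0.8) -> Handler:
    """Pick a handler; escalate agent work to a human when confidence is low.

    `confidence` is a hypothetical score in [0, 1] supplied by the caller.
    """
    handler = ROUTES[kind]
    if handler is Handler.AGENT and confidence < min_confidence:
        return Handler.HUMAN  # escalation path
    return handler
```

For example, `route(TaskKind.ROUTINE_DECISION, confidence=0.55)` returns `Handler.HUMAN`, while the same task at full confidence stays with the agent. Rollback and audit logging would sit around this function in a real governance model.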

The 40% cancellation prediction doesn’t mean agents are useless. It means poorly scoped, overpromised, ungoverned projects fail. Enterprises that follow proven patterns (constrained autonomy, strong guardrails, measurable outcomes) achieve real ROI and stay out of the failure statistic. The path forward isn’t abandoning agents; it’s learning from successes and avoiding hype-driven deployments.

Key Takeaways

- Only 130 of thousands of “agentic AI” vendors are legitimate—agent washing is epidemic, and enterprises must verify genuine capabilities (multi-step planning, tool orchestration, adaptive behavior) before investing.

- Trust crisis blocks production: LLM hallucinations, black-box reasoning, and non-deterministic outputs make agents unsuitable for mission-critical systems until reliability and explainability improve dramatically.

- 70% of developers hit integration walls due to the architectural mismatch between non-deterministic agents and deterministic legacy systems; API-first modernization is a prerequisite to agent success.

- 42% show zero ROI because enterprises measure agents with the wrong metrics (cost savings) instead of productivity, innovation velocity, and revenue growth; comprehensive frameworks are essential.

- Constrained domains with strong governance succeed: IT ops, finance, and support workflows deliver 5x-10x ROI when properly scoped with clear boundaries, measurable metrics, and hybrid human-agent models.