A machine learning engineer sparked controversy in July 2025 with a stark prediction: “AI is creating the worst generation of developers in history.” Six months later, the warning is materializing. The 2026 job market isn’t replacing developers with AI; it’s replacing AI-dependent developers with skilled ones. Engineers who learned to code by copy-pasting from ChatGPT and Copilot can’t debug their own code. Companies are starting to notice.

The Data Proves the Quality Crisis

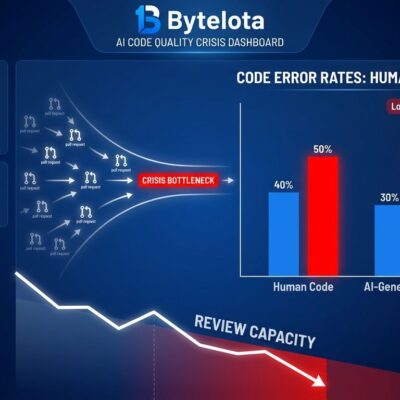

LinearB’s 2026 benchmarks analyzed 8.1 million pull requests from 4,800+ organizations worldwide. The results expose a brutal truth about AI-native developers: their code is failing review at catastrophic rates.

AI pull requests have a 32.7% acceptance rate. Manual pull requests? 84.4%. That’s a 2.6x quality gap. Moreover, AI PRs wait 4.6 times longer before review starts—reviewers are hesitant to even begin. When they do, the problems become obvious: code works in isolation but breaks under edge cases, lacks error handling, or misses architectural considerations.
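The arithmetic behind that gap is easy to check by hand. The sketch below is illustrative only: it uses the two acceptance rates quoted above, and the function and constant names are made up for this example, not taken from LinearB.

```python
# Acceptance rates as quoted in the article (LinearB 2026 benchmarks).
AI_ACCEPTANCE = 0.327      # share of AI pull requests accepted
MANUAL_ACCEPTANCE = 0.844  # share of manual pull requests accepted


def acceptance_gap(manual: float, ai: float) -> float:
    """Return how many times more often manual PRs are accepted than AI PRs."""
    if ai <= 0:
        raise ValueError("ai acceptance rate must be positive")
    return manual / ai


def rejected_share(acceptance: float) -> float:
    """Return the share of PRs that were not accepted."""
    return 1.0 - acceptance


gap = acceptance_gap(MANUAL_ACCEPTANCE, AI_ACCEPTANCE)
print(f"acceptance gap: {gap:.1f}x")                                # 2.6x
print(f"AI PRs not accepted: {rejected_share(AI_ACCEPTANCE):.1%}")  # 67.3%
```

Read the other way around, a 32.7% acceptance rate means roughly two out of three AI pull requests are not accepted.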

One enterprise that deployed AI tools to 300 engineers saw code volume increase 28%. However, 30-40% of that code was AI-generated and needed significant rework. The math doesn’t work in the team’s favor: more volume, lower acceptance, overwhelmed reviewers. Teams face a 40% quality deficit: more code than reviewers can validate. AI tools are boosting volume, not value.

The Dependency Problem: Paste and Pray

Here’s what the AI-native developer workflow actually looks like:

- Ask ChatGPT or Copilot to write a function

- Copy-paste the generated code

- Run tests—three failures

- Paste the error back: “Why is this failing?”

- Copy-paste the new version

- Tests pass—ship it

- Production error two days later

- Can’t debug without asking AI again

This isn’t tool usage. It’s dependency. Remove AI from the equation, and productivity doesn’t just give back a 56% speedup; it drops to zero. These developers can’t explain design decisions in code reviews. They can’t identify root causes when bugs hit production. When AI tools go down or hit rate limits, work grinds to a halt.

The fundamentals are missing: debugging, problem-solving, understanding trade-offs. AI provides answers, but learning happens when you struggle to find them yourself. If you can’t explain your code without AI, you don’t understand it. If you can’t debug without AI, you’re unemployable in 2026’s market.

2026’s Invisible Unemployment Targets the Unskilled

The ML engineer predicted 2026 would see the first wave of AI-native engineers getting fired. It’s happening, but it’s subtle. Tech isn’t seeing mass “AI replaces humans” layoffs. Instead, it’s seeing something quieter: invisible unemployment.

The pattern is simple: when a junior developer leaves, companies try AI first before hiring a replacement. Furthermore, a Yale survey found 66% of CEOs are reducing or maintaining headcount in 2026. IBM’s voluntary attrition rate dropped below 2%—the lowest in three decades. Fewer people leaving means less hiring. And when companies do hire, they’re ruthlessly selective.

Engineering managers are realizing AI-dependent juniors cost more in review time than they save in shipping speed. A developer who ships code with a 32.7% acceptance rate isn’t productive; they’re a net negative. As a result, senior engineers spend more time validating AI-generated logic than shaping system design.

So far in 2026, there have been 28 tech layoffs affecting 5,285 people. The layoffs aren’t announced as “AI replacing workers.” They’re called “performance-based reductions.” But the common thread? Low-performing engineers who can’t debug their way out of a failed build.

AI Is a Force Multiplier, Not a Substitute for Skill

Here’s the take ChatGPT won’t give you: AI tools don’t make everyone productive. They make skilled developers productive and unskilled developers dangerous.

The narrative that “juniors can ship faster with AI” is true but incomplete. They ship more, not better. And when only 32.7% of what you ship is accepted, you’re not creating value; you’re creating technical debt. Additionally, you’re burdening your team with review overhead that cancels out velocity gains.

AI is a calculator for code. A calculator makes math faster for someone who understands math. For someone who doesn’t, it hides ignorance. AI coding tools work the same way. Give Copilot to a senior engineer who knows architecture, and they’ll ship 56% faster. Give it to a junior who skipped fundamentals, and you get low-quality pull requests that tank team productivity.

The solution isn’t to abandon AI tools. It’s to learn the craft first. Master debugging without a safety net. Understand system design and trade-offs. Build things the hard way until you internalize how code works. Then add AI as an accelerator. Treat AI-generated code like a pull request from a junior developer: useful suggestions, but verify everything.
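As a rough illustration of what “verify everything” can mean in practice, here is a minimal sketch. The helper `average_latency_ms` is a hypothetical stand-in for a generated function that works on the happy path but was never checked against an edge case; none of these names come from a real codebase.

```python
from statistics import fmean


def average_latency_ms(samples):
    """Hypothetical generated helper as it might arrive: no input checks."""
    return sum(samples) / len(samples)


def average_latency_ms_reviewed(samples):
    """Same helper after review: the empty-input case is handled explicitly."""
    if not samples:
        raise ValueError("samples must not be empty")
    return fmean(samples)


def error_on_empty(fn):
    """Return the name of the exception fn raises for an empty list, or None."""
    try:
        fn([])
    except Exception as exc:
        return type(exc).__name__
    return None


# Both versions agree on ordinary input...
print(average_latency_ms([10, 20, 30]))           # 20.0
print(average_latency_ms_reviewed([10, 20, 30]))  # 20.0

# ...but only the reviewed one fails with a message a caller can act on.
print(error_on_empty(average_latency_ms))           # ZeroDivisionError
print(error_on_empty(average_latency_ms_reviewed))  # ValueError
```

The point is not this particular function. It is the habit of asking what happens on empty, missing, or malformed input before the code ships, rather than after production finds out.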

The uncomfortable test: Open your editor. Turn off Copilot. Disable ChatGPT. Can you still build? Can you still debug? If the answer is no, you’re not AI-augmented—you’re AI-dependent. And in 2026’s lean hiring market, that’s a liability companies can’t afford.

What Comes Next

The ML engineer’s warning was dismissed by many as alarmist. Six months later, the data backs it up. LinearB’s benchmarks, the invisible unemployment trend, the 40% quality deficit—these aren’t predictions. They’re happening now.

The 2026 job market is ruthless. Companies are hiring fewer developers and demanding higher skill bars. Therefore, if you’re entering the field or early in your career, the message is clear: learn to code without AI, then learn to code faster with it. Fundamentals aren’t optional anymore—they’re the only thing separating employable from expendable.

AI isn’t going away. Neither is the expectation that developers can debug, architect, and think critically. The tools changed. The standards didn’t.