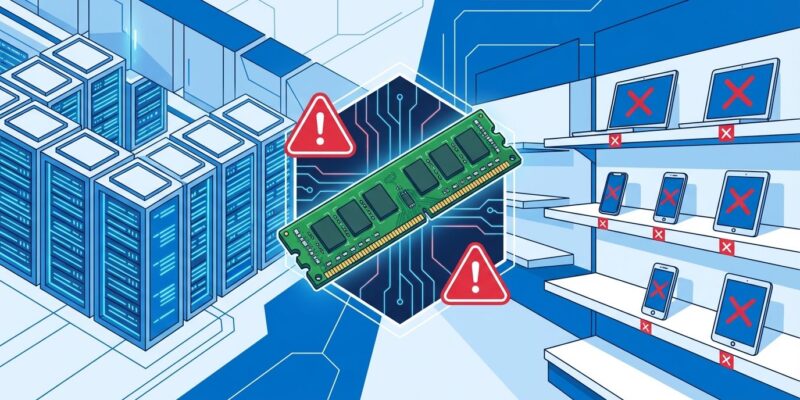

The global memory chip shortage isn’t ending anytime soon. Synopsys CEO Sassine Ghazi told CNBC on January 26 that the chip “crunch” will extend through 2026 and 2027. AI infrastructure is consuming so much production capacity that traditional markets are “starved.” Samsung just raised memory prices by 60%, DRAM surged 172% in 2025, and OpenAI’s Stargate project could consume 40% of global DRAM output. For developers and enterprises, this means higher cloud costs, pricier hardware, and new barriers to AI access.

The Shortage Extends Through 2027

Synopsys CEO Sassine Ghazi confirmed what the industry feared: relief won’t come in 2026. “Most memory from top players is going directly to AI infrastructure,” he told CNBC. “Many other products need memory, so those other markets are starved today because there is no capacity left for them.” Samsung President Wonjin Lee called the shortage “an industry-wide reality” with “inevitable” price increases. Analysts don’t expect relief until new fabrication plants in South Korea and Taiwan reach volume production after 2027.

This isn’t a temporary supply-demand mismatch. It’s a structural reallocation of manufacturing capacity toward high-margin AI products. Memory manufacturers are choosing AI markets over consumer markets because the profit per wafer is significantly higher for high-bandwidth memory (HBM) than for traditional DRAM.

The Price Shock Is Already Here

These aren’t abstract projections. The price increases are hitting every level of the market right now. Samsung raised prices on 32GB DDR5 modules from $149 in September to $239 today—a 60% jump in four months. DRAM prices rose 172% in 2025, outpacing even gold’s price growth.
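The percentages above are easy to check. Here is a minimal sketch, using only the figures quoted in this article; the helper name `percent_change` is illustrative, not part of any library.

```python
def percent_change(old: float, new: float) -> float:
    """Return the percentage change from old to new."""
    if old == 0:
        raise ValueError("old value must be non-zero")
    return (new - old) / old * 100.0


# Prices quoted in the article for a 32GB DDR5 module.
september_price = 149.0
current_price = 239.0

increase = percent_change(september_price, current_price)
print(f"32GB DDR5 module: +{increase:.1f}%")

# A 172% rise means the new price is 2.72x the old one, not 1.72x.
dram_multiplier = 1 + 172 / 100
print(f"DRAM price multiplier for 2025: {dram_multiplier:.2f}x")
```

The second figure is worth pausing on: a 172% increase means prices nearly tripled over the year.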

The damage spreads across the stack. Samsung and SK Hynix are raising server DRAM prices by 60% to 70% for Q1 2026 compared to Q4 2025. Microsoft and Google are absorbing these increases. Consumer DRAM and SSD prices have doubled or tripled in two months. PC and laptop prices are climbing 10-20% industry-wide. Even storage isn’t spared—SSD and HDD costs jumped 30-40% since September.

Lenovo CFO Winston Cheng was blunt: “We will see memory prices going up. There is high demand and not enough supply. We are very confident that the cycle would be such that we could pass on the cost.” Translation: enterprises and consumers will pay more.

AI Infrastructure Is Consuming Everything

The root cause is clear: AI is eating the entire semiconductor supply chain. OpenAI’s Stargate project has inked deals with Samsung and SK Hynix for up to 900,000 DRAM wafers per month. Global DRAM capacity sits at 2.25 million wafer starts per month, meaning Stargate alone could absorb 40% of total output. Data centers will consume 70% of all memory production in 2026, up from historical levels around 30-40%. AI workloads account for roughly 20% of total DRAM when you include the wafer-equivalent of high-bandwidth memory and GDDR7.
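The 40% figure follows directly from the two wafer counts. A quick sketch of that arithmetic, assuming the "up to" ceiling is actually reached; the constant and function names are illustrative.

```python
# Figures quoted in the article (wafers per month).
STARGATE_WAFERS_PER_MONTH = 900_000           # upper bound of the reported deals
GLOBAL_DRAM_WAFER_STARTS_PER_MONTH = 2_250_000


def share_of_capacity(demand: int, capacity: int) -> float:
    """Return demand as a fraction of total capacity."""
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    return demand / capacity


stargate_share = share_of_capacity(
    STARGATE_WAFERS_PER_MONTH, GLOBAL_DRAM_WAFER_STARTS_PER_MONTH
)
remaining_wafers = GLOBAL_DRAM_WAFER_STARTS_PER_MONTH - STARGATE_WAFERS_PER_MONTH

print(f"Stargate share of global DRAM wafer starts: {stargate_share:.0%}")
print(f"Wafers per month left for everyone else: {remaining_wafers:,}")
```

If the deals are filled at the ceiling, every other buyer is competing for the remaining 1.35 million wafer starts per month.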

Hyperscalers like Microsoft, Google, Meta, and Amazon are placing open-ended orders for memory to power AI data centers. Memory manufacturers are shifting cleanroom space and capital expenditure from consumer DDR5 to HBM3E because the margins are drastically higher. As a result, the supply of conventional DRAM for traditional markets is shrinking by design, not by accident.

This explains why manufacturers rejected long-term contracts at lower prices. They can make more money selling to AI infrastructure than to consumer electronics, and they’re optimizing accordingly.

Hardware Production Is Constrained Across the Board

This shortage isn’t just about RAM sticks. It’s hitting all hardware. Nvidia plans to cut RTX 50-series GPU production by 30-40% in early 2026 due to memory shortages—not just GDDR7, but all memory types. Mid-range models like the RTX 5060 Ti and RTX 5070 Ti will be hit hardest. AMD faces similar constraints for its RDNA 4 architecture.

The manufacturing complexity of GDDR7 is slowing its ramp-up, but the real issue is capacity allocation. Gigabyte’s CEO explained that Nvidia’s GPU supply strategy will prioritize products with the highest gross revenue per gigabyte of memory. Translation: high-end datacenter GPUs get priority, and consumer GPUs get scraps.

PC manufacturers are responding with a combination of price increases (15-20%) and spec downgrades. Lenovo’s diversified supply chain, with 30 manufacturing plants worldwide, might help mitigate risks, but the CFO was clear: costs will be passed through.

Developers and Enterprises Face Direct Impact

For developers and enterprises, the implications are immediate. Cloud providers are absorbing 50% higher DRAM costs this quarter compared to last quarter, and they’ll pass those increases on to customers through adjusted service pricing. Infrastructure costs are structurally higher now. That $5,000/month cloud bill? Expect it to land closer to $6,000-6,500 in 2026.
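The $6,000-6,500 range corresponds to a 20-30% increase on a $5,000 bill. Here is a rough sketch for running the same projection against your own spend; the function name is illustrative, and the flat percentage uplift is a simplifying assumption, since real cloud pricing changes vary by service.

```python
def project_monthly_bill(current_monthly: float, increase_pct: float) -> float:
    """Apply a flat percentage increase to a monthly bill."""
    return current_monthly * (1 + increase_pct / 100)


current_bill = 5_000.0  # the article's example bill, in dollars per month

low = project_monthly_bill(current_bill, 20)   # lower bound of the range
high = project_monthly_bill(current_bill, 30)  # upper bound of the range

# Extra spend over a full year at each bound.
extra_per_year_low = (low - current_bill) * 12
extra_per_year_high = (high - current_bill) * 12

print(f"Projected monthly bill: ${low:,.0f} to ${high:,.0f}")
print(f"Extra annual spend: ${extra_per_year_low:,.0f} to ${extra_per_year_high:,.0f}")
```

On the article's example, that works out to roughly $12,000 to $18,000 in additional spend per year.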

Hardware budgets are under siege. Developers looking to refresh development machines face 20%+ price increases. GPU availability for ML/AI workloads is constrained, forcing more reliance on expensive cloud compute precisely when cloud costs are rising. Server purchases for on-premises infrastructure face the same 60-70% DRAM price hikes that hyperscalers are seeing.

This creates access barriers. Large enterprises with deep pockets can absorb cost increases. Smaller teams, startups, and independent developers face real constraints. A two-tier semiconductor economy is emerging: AI infrastructure gets priority access at any price, while everyone else competes for scraps.

Strategic Implications: Plan for 18 Months of Elevated Costs

With shortage timelines extending through 2027, developers and enterprises need strategic responses now. First, budget for 20-30% infrastructure cost increases across cloud, hardware, and storage. These aren’t temporary spikes—multi-year contracts are locking in elevated prices.

Second, optimize for memory efficiency. Projects like DeepSeek’s 30% memory reduction through model optimization suddenly have real economic value. Code that wastes memory costs more money now. Architecture decisions around caching, in-memory computation, and data structures matter more when memory is expensive and scarce.
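To make the data-structure point concrete, here is a minimal sketch, assuming CPython, that compares two ways of holding the same 100,000 integers. The helper names are illustrative, and the list figure is an approximation: it counts every integer object, although CPython shares a small set of cached small integers.

```python
import sys
from array import array


def list_footprint(values: list) -> int:
    """Approximate bytes: the list's pointer table plus each int object."""
    return sys.getsizeof(values) + sum(sys.getsizeof(v) for v in values)


def array_footprint(values: array) -> int:
    """Bytes used by a typed array, which stores raw machine values inline."""
    return sys.getsizeof(values)


n = 100_000
as_list = list(range(n))
as_array = array("q", range(n))  # 'q' = signed 64-bit integers

list_bytes = list_footprint(as_list)
array_bytes = array_footprint(as_array)

print(f"list of ints : {list_bytes / 1e6:.2f} MB")
print(f"array('q')   : {array_bytes / 1e6:.2f} MB")
print(f"ratio        : {list_bytes / array_bytes:.1f}x")
```

The exact numbers depend on the interpreter version and platform, but the typed array is consistently several times smaller because it avoids a separate Python object per element. The same idea applies to choices like NumPy arrays over nested lists, or compact column types in a dataframe.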

Third, re-evaluate cloud versus on-premises economics. The traditional “cloud is cheaper” calculus shifts when cloud providers pass through 60% memory cost increases. Some workloads might justify capital expenditure on servers before prices climb further, though securing the hardware is itself a challenge.
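One way to frame that re-evaluation is a simple break-even calculation. The sketch below uses entirely hypothetical dollar figures and an assumed 20% cloud price increase; it ignores financing, depreciation, staffing, and resale value, so treat it as a starting point rather than a full cost model.

```python
import math
from typing import Optional


def breakeven_months(
    server_capex: float, onprem_monthly_opex: float, cloud_monthly_cost: float
) -> Optional[int]:
    """Months until buying a server costs less than renting cloud capacity.

    Returns None when on-premises running costs are not lower than cloud,
    in which case the purchase never pays for itself.
    """
    monthly_savings = cloud_monthly_cost - onprem_monthly_opex
    if monthly_savings <= 0:
        return None
    return math.ceil(server_capex / monthly_savings)


# All figures below are hypothetical, chosen only to illustrate the shift.
server_capex = 40_000.0         # up-front server purchase
onprem_monthly_opex = 1_500.0   # power, space, maintenance
cloud_before = 3_000.0          # equivalent cloud spend today
cloud_after = cloud_before * 1.20  # assumed 20% cloud price increase

months_before = breakeven_months(server_capex, onprem_monthly_opex, cloud_before)
months_after = breakeven_months(server_capex, onprem_monthly_opex, cloud_after)

print(f"Break-even at current cloud prices: {months_before} months")
print(f"Break-even after a 20% increase:    {months_after} months")
```

With these made-up inputs, the payback period drops from 27 months to 20. The catch is that server prices are rising too, so `server_capex` should reflect current quotes, not last year's.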

Fourth, extend hardware refresh cycles. That three-year laptop replacement policy might need to stretch to four or five years when prices are 20% higher and availability is constrained.
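The refresh-cycle trade-off can be checked with annualized cost. This sketch assumes a hypothetical $2,000 laptop and the 20% price increase mentioned above; it ignores repair costs and lost productivity on aging machines, both of which would narrow the gap.

```python
def annualized_cost(purchase_price: float, years_in_service: int) -> float:
    """Spread a purchase price evenly over its years in service."""
    if years_in_service <= 0:
        raise ValueError("years_in_service must be positive")
    return purchase_price / years_in_service


BASELINE_PRICE = 2_000.0  # hypothetical pre-shortage laptop price
PRICE_INCREASE = 0.20     # the 20% increase cited in the article

new_price = BASELINE_PRICE * (1 + PRICE_INCREASE)
baseline_per_year = annualized_cost(BASELINE_PRICE, 3)

print(f"Old price, 3-year cycle: ${baseline_per_year:,.0f}/year")
for years in (3, 4, 5):
    per_year = annualized_cost(new_price, years)
    print(f"New price, {years}-year cycle: ${per_year:,.0f}/year")
```

Under these assumptions, a four-year cycle at the higher price costs less per year than the old three-year cycle did at the lower one.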

The memory chip shortage through 2027 isn’t just a supply chain hiccup. It represents a fundamental restructuring of semiconductor economics driven by AI’s insatiable appetite for high-bandwidth memory. Developers and enterprises aren’t just planning around a shortage—they’re adapting to a new cost structure that may never fully revert to 2024 levels. The question isn’t when prices normalize. It’s what “normal” means in an AI-first semiconductor market.