Eighty-four percent of developers now use AI code review tools. Only 3% highly trust them. That 81-point gap isn’t an adoption lag; it’s a warning sign. Stack Overflow’s December 2025 survey marked the first-ever decline in AI tool sentiment, dropping to 60% positive from 77% two years ago. Meanwhile, Hacker News discussions trending today openly ask: “Is there an AI code review bubble?” The answer, backed by mounting evidence, is yes.

The Trust Crisis: 84% Use, 3% Believe

Stack Overflow’s December 2025 survey of 49,000 developers revealed a striking paradox: 84% adoption coexists with just 3% who “highly trust” AI output. Among experienced developers—the segment that should benefit most—high trust drops to 2.6%, while 20% actively “highly distrust” AI tools. Overall trust in AI accuracy collapsed from 43% in 2024 to 33% in 2025.

This is bubble indicator #1. When usage is driven by FOMO and hype rather than demonstrated value, the fundamentals have decoupled from the market. Yet 72% use AI tools daily despite this lack of trust: momentum over merit.

The “Almost Right” Problem: Why AI Code Costs More

The top developer frustration in 2025 is AI code that’s “almost right, but not quite”—code that compiles and looks plausible but contains subtle logical flaws, edge case bugs, or security vulnerabilities that only surface at runtime or in production. Sixty-six percent of developers report spending more time debugging AI code than they saved in initial writing.

CodeRabbit’s December 2025 analysis quantified this. Examining 470 GitHub pull requests, they found AI-generated code produces 1.7x more issues than human code: 10.83 issues per PR versus 6.45 for human-only code. The breakdown reveals systemic problems—1.75x more logic errors, 1.64x more maintainability issues, 1.57x more security findings, and 1.42x more performance problems.
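To see what the 1.7x headline means for reviewers, the short sketch below recomputes the ratio from the per-PR figures and scales the difference to a batch of pull requests. It is back-of-the-envelope arithmetic. The 100-PR batch size is an arbitrary assumption, and the sketch assumes CodeRabbit’s per-PR averages carry over unchanged to your codebase.

```python
# Per-PR averages quoted from CodeRabbit's analysis of 470 pull requests.
AI_ISSUES_PER_PR = 10.83
HUMAN_ISSUES_PER_PR = 6.45


def issue_ratio(ai: float, human: float) -> float:
    """How many times more issues AI-generated PRs carry than human-only PRs."""
    return ai / human


def extra_issues(num_prs: int, ai: float, human: float) -> float:
    """Additional issues reviewers must catch if num_prs PRs are AI-generated
    instead of human-written (assumes the averages hold at this scale)."""
    return num_prs * (ai - human)


if __name__ == "__main__":
    ratio = issue_ratio(AI_ISSUES_PER_PR, HUMAN_ISSUES_PER_PR)
    print(f"ratio: {ratio:.2f}x")  # ratio: 1.68x (reported as 1.7x)
    extra = extra_issues(100, AI_ISSUES_PER_PR, HUMAN_ISSUES_PER_PR)
    print(f"extra issues per 100 PRs: {extra:.0f}")  # extra issues per 100 PRs: 438
```

The ratio is what makes headlines. The absolute difference, roughly 4.4 extra issues per PR, is what lands on a reviewer’s desk.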

The mechanism is clear: AI optimizes for plausibility, not correctness, so code that looks right gets committed. Google’s 2025 DORA Report found that 90% AI adoption correlated with a 9% increase in bug rates. Teams celebrate 98% more pull requests while ignoring 9% more bugs. That is productivity theater, not real productivity.


The Productivity Paradox: Feeling Faster, Measuring Slower

In METR’s randomized controlled trial with experienced open-source developers, participants believed AI tools made them 20% faster. Measured completion times showed they were actually 19% slower. That is a 39-percentage-point gap between perception and reality: the emperor has no clothes.
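The perception gap is simple arithmetic once self-reported and measured speedups are expressed on the same scale. The sketch below does that. It is a simplification: METR’s published estimate comes from a statistical analysis across many tasks, not from a single pair of timings. The 100- and 119-minute figures are hypothetical, chosen only to reproduce the reported 19% slowdown.

```python
def percent_time_saved(baseline_minutes: float, ai_minutes: float) -> float:
    """Share of baseline time saved by using AI; negative means AI made the task slower."""
    return 100.0 * (baseline_minutes - ai_minutes) / baseline_minutes


def perception_gap(perceived_pct: float, measured_pct: float) -> float:
    """Percentage-point gap between self-reported and measured time savings."""
    return perceived_pct - measured_pct


if __name__ == "__main__":
    # Hypothetical timings: a task that takes 100 minutes unaided takes 119 with AI.
    measured = percent_time_saved(baseline_minutes=100, ai_minutes=119)
    print(f"measured: {measured:+.0f}%")                      # measured: -19%
    print(f"gap: {perception_gap(20, measured):.0f} points")  # gap: 39 points
```

The sign convention is the point: a perceived +20% and a measured -19% sit on opposite sides of zero, so the gap is their sum, not their difference in magnitude.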

The mechanism is generation-review asymmetry. AI accelerates code generation by 30-40%, but the DORA 2025 report found AI adoption correlated with 91% longer code review times and 154% larger pull requests. With 40% of commits now AI-generated but review capacity flat, review becomes the constraint, and productivity gains evaporate in the merge queue.
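A toy pipeline model makes the bottleneck argument concrete. Merged throughput can never exceed the slower of two stages, writing PRs and reviewing them. This sketch rests on several assumptions. It treats “91% longer review” as 91% more reviewer effort per PR, folds the larger PR size into that figure, holds total reviewer hours flat, and uses a 35% generation speedup as a midpoint of the 30-40% range. The team numbers (10 PRs a day, 20 reviewer-hours, 1.5 hours per PR) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Team:
    prs_generated_per_day: float  # PRs opened per day (the writing stage)
    review_hours_per_day: float   # total reviewer time available, assumed flat
    review_hours_per_pr: float    # average reviewer effort for one PR


def review_capacity(team: Team) -> float:
    """PRs per day reviewers can get through."""
    return team.review_hours_per_day / team.review_hours_per_pr


def merged_per_day(team: Team) -> float:
    """Merged throughput is capped by the slower stage of the pipeline."""
    return min(team.prs_generated_per_day, review_capacity(team))


def backlog_growth_per_day(team: Team) -> float:
    """PRs added to the review queue each day when writing outpaces review."""
    return max(0.0, team.prs_generated_per_day - review_capacity(team))


def with_ai(team: Team, generation_speedup: float, review_slowdown: float) -> Team:
    """Apply a generation speedup (0.35 = 35% more PRs) and a review slowdown
    (0.91 = 91% more effort per PR), keeping reviewer hours unchanged."""
    return Team(
        prs_generated_per_day=team.prs_generated_per_day * (1 + generation_speedup),
        review_hours_per_day=team.review_hours_per_day,
        review_hours_per_pr=team.review_hours_per_pr * (1 + review_slowdown),
    )


if __name__ == "__main__":
    baseline = Team(prs_generated_per_day=10, review_hours_per_day=20, review_hours_per_pr=1.5)
    ai = with_ai(baseline, generation_speedup=0.35, review_slowdown=0.91)
    for name, team in (("baseline", baseline), ("with AI", ai)):
        print(f"{name}: {team.prs_generated_per_day:.1f} PRs opened, "
              f"{merged_per_day(team):.1f} merged, "
              f"queue grows by {backlog_growth_per_day(team):.1f}/day")
```

With these assumed numbers, opened PRs rise from 10 to 13.5 a day while merged PRs fall from 10 to about 7, and the review queue grows by roughly 6.5 PRs daily. Faster generation only pays off if review capacity grows with it.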

DORA’s findings are damning: while 75.9% of respondents relied on AI for at least some of their work, each 25% increase in AI adoption was associated with an estimated 1.5% decline in delivery throughput and a 7.2% drop in delivery stability. When you measure outcomes (deployment frequency, lead time, change failure rate) instead of outputs like code volume, AI adoption is associated with slower, less stable delivery. Vendors, meanwhile, sell productivity illusions measured in lines generated, not value delivered.
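Measuring outcomes doesn’t require a vendor dashboard. Below is a minimal sketch that computes the three DORA metrics named above from plain deployment records. The `Deployment` record shape, the 7-day window, and the sample data are assumptions for illustration. So is what counts as a “failure,” since teams define that differently. DORA also tracks a fourth metric for recovery time, which is omitted here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Deployment:
    deployed_at: datetime
    commit_times: list[datetime]  # when each change shipped in this deploy was committed
    caused_failure: bool          # needed a rollback, hotfix, or incident response


def deployment_frequency(deploys: list[Deployment], window_days: int) -> float:
    """Average production deployments per day over the observation window."""
    return len(deploys) / window_days


def median_lead_time(deploys: list[Deployment]) -> timedelta:
    """Median time from commit to production across every shipped change."""
    lead_times = [d.deployed_at - c for d in deploys for c in d.commit_times]
    return median(lead_times)


def change_failure_rate(deploys: list[Deployment]) -> float:
    """Fraction of deployments that caused a failure in production."""
    return sum(d.caused_failure for d in deploys) / len(deploys)


if __name__ == "__main__":
    day = datetime(2025, 1, 1, 12)  # hypothetical sample data
    deploys = [
        Deployment(day + timedelta(days=1), [day], caused_failure=False),
        Deployment(day + timedelta(days=3),
                   [day + timedelta(days=2), day + timedelta(days=2, hours=12)],
                   caused_failure=True),
        Deployment(day + timedelta(days=5), [day + timedelta(days=4)], caused_failure=False),
    ]
    print(f"deploys/day: {deployment_frequency(deploys, window_days=7):.2f}")
    print(f"median lead time: {median_lead_time(deploys)}")
    print(f"change failure rate: {change_failure_rate(deploys):.0%}")
```

None of these functions counts lines of code or PRs opened. That is deliberate: a team can double its PR count while every one of these numbers gets worse.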

The Quality Gate Breaks: 75% Manual Review Required

Seventy-five percent of developers say they manually review every AI-generated code snippet before committing, a tacit admission that AI output can’t be trusted as-is. Yet 52% admit they don’t always check, meaning more than half of developers sometimes commit AI code they haven’t verified. This creates a verification bottleneck: developers know AI is unreliable but lack the time to review it properly.

The trust erosion is measurable. Ninety-six percent of developers don’t fully trust the accuracy of AI-generated code, according to Sonar’s 2026 survey. Yet The Register reported in January 2026 that only 48% always verify it before committing. The gap between skepticism and action is where bugs slip through.
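One way to close the gap between skepticism and action is to make verification a merge requirement rather than a good intention. The sketch below is a CI-style check that blocks commits which declare AI assistance but name no human verifier. The trailer names `AI-Assisted` and `Verified-by` are hypothetical conventions, not an existing standard. The trailer parser is deliberately simple and only reads “Key: value” lines from the last paragraph of each commit message.

```python
import re

# Hypothetical trailer names; adapt to whatever convention your team adopts.
AI_TRAILER = "AI-Assisted"
VERIFIED_TRAILER = "Verified-by"

TRAILER_RE = re.compile(r"^([A-Za-z][A-Za-z0-9-]*):\s*(.+)$")


def parse_trailers(message: str) -> dict[str, str]:
    """Read 'Key: value' lines from the final paragraph of a commit message."""
    paragraphs = [p for p in message.strip().split("\n\n") if p.strip()]
    if len(paragraphs) < 2:
        return {}  # a subject line alone has no trailer block
    trailers = {}
    for line in paragraphs[-1].splitlines():
        match = TRAILER_RE.match(line.strip())
        if match:
            trailers[match.group(1).lower()] = match.group(2).strip()
    return trailers


def needs_review(message: str) -> bool:
    """True if a commit declares AI assistance but names no human verifier."""
    trailers = parse_trailers(message)
    ai_assisted = trailers.get(AI_TRAILER.lower(), "").lower() in {"yes", "true"}
    return ai_assisted and VERIFIED_TRAILER.lower() not in trailers


def gate(messages: list[str]) -> list[str]:
    """Subject lines of commits that should block the merge."""
    return [m.strip().splitlines()[0] for m in messages if needs_review(m)]


if __name__ == "__main__":
    commits = [
        "Add retry logic\n\nWraps HTTP calls.\n\nAI-Assisted: yes",
        "Fix parser\n\nAI-Assisted: yes\nVerified-by: Dana <dana@example.com>",
        "Update docs",
    ]
    blocked = gate(commits)
    print("blocked:", blocked)
```

In a real pipeline the messages would come from something like `git log --format=%B` over the PR’s commit range, and git’s own `git interpret-trailers --parse` handles trailer edge cases more robustly than this regex does. A gate like this can’t prove a review was careful. It only makes skipping review an explicit, visible choice.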

The consequences compound. Forrester predicts that by 2026, 75% of technology decision-makers will face moderate to severe technical debt, as quick AI fixes accumulate into unmaintainable code that slows future development. Quality degradation is the hidden cost teams ignore while chasing code-volume metrics.

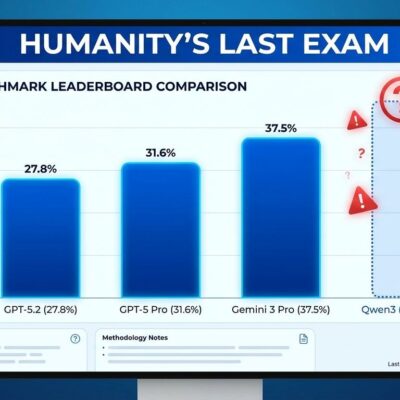

The $202B AI Investment Bubble: Evidence Mounts

Global AI investment, across the whole sector and not just code review, hit $202.3 billion in 2025, or 50% of all venture capital deployed worldwide. That concentration is unprecedented in technology investment history. Developer sentiment is moving the other way: in Stack Overflow’s survey, positive sentiment fell from 77% in 2023 to 60% in 2025. When 84% adoption meets 3% trust and declining sentiment, you have classic bubble dynamics.

France-Épargne’s “State of AI 2026” report warns: “Capital and valuations are running well ahead of fundamentals, particularly for companies without clear customer pull, durable differentiation, or credible paths to profitability.” The combination of extreme valuations, circular financing, and concentrated capital flow creates conditions for meaningful price adjustment.

Even major VCs are positioning. Bloomberg reported January 19 that Andreessen Horowitz placed a $3 billion bet against the AI bubble. When adoption continues despite declining fundamentals (trust, sentiment, measured productivity), momentum is driving the market, not value. Developers already see this, as the first-ever decline in sentiment shows. The correction is inevitable.

What Developers Should Do Next

The pattern is clear: the AI code review market is a bubble waiting to burst. Here’s what matters:

- Don’t trust AI blindly. Seventy-five percent manually review for good reason—be one of them. The 1.7x issue rate demands skepticism.

- Measure outcomes, not outputs. Track DORA metrics (deployment frequency, lead time, change failure rate), not code volume. Productivity theater doesn’t ship products.

- Review capacity is your bottleneck. AI accelerates generation by 30-40%, but review takes 91% longer. The constraint isn’t coding speed; it’s verification throughput.

- Quality gates are mandatory. The 9% bug increase that accompanies AI adoption shows you can’t skip review because “AI wrote it.” The “almost right” problem is real.

- Position for correction. With 84% adoption, 3% trust, declining sentiment, and $202B in concentrated investment, repricing is coming. Developers know it. VCs are positioning. The market will follow.

The bubble will burst—not because AI has no value, but because the value delivered falls far short of the value promised. When reality catches up to hype, markets correct. The only question is timing.