PageIndex, an open-source RAG framework trending #2 on GitHub this week with 1,374 stars, eliminates vector databases and chunking entirely, and Mafin 2.5, a system built on it, achieved 98.7% accuracy on the FinanceBench benchmark. Instead of semantic similarity search, it uses LLM-powered reasoning and tree search, inspired by AlphaGo, to navigate hierarchical document structures the way a human expert would. Traditional vector RAG scores just 50% on the same benchmark, so this is a 48.7-percentage-point improvement that challenges the $2.2 billion vector database market.

For over two years, vector databases have been the default infrastructure for RAG systems. PageIndex questions this orthodoxy with a radical claim: semantic similarity isn’t the same as relevance, and reasoning outperforms searching when accuracy matters.

Why Vector RAG Fails: Similarity Isn’t Relevance

Traditional vector-based RAG has a fundamental problem: it retrieves what’s “similar in meaning” but misses what’s “correct in context.” Ask a vector RAG system for “Q3 2024 revenue” and it might return data from Q2 or Q4 because those quarters are semantically similar—but they’re the wrong answer.
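To make this failure mode concrete, here is a minimal top-k cosine retriever over made-up 3-dimensional vectors (real embedding models produce hundreds or thousands of dimensions). The vectors are invented so that the three quarterly chunks sit close together, as semantically similar passages tend to. Their scores differ only in the third or fourth decimal place, so the ranking hinges on tiny differences that have nothing to do with which quarter the query named; with these particular numbers, the Q4 chunk edges out Q3.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: dot product divided by the product of the norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: list[float], chunk_vecs: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the ids of the k chunks whose vectors are most similar to the query."""
    ranked = sorted(chunk_vecs, key=lambda cid: cosine(query_vec, chunk_vecs[cid]), reverse=True)
    return ranked[:k]


# Made-up "embeddings" for illustration only.
chunks = {
    "q2_revenue": [0.90, 0.40, 0.10],
    "q3_revenue": [0.85, 0.45, 0.12],
    "q4_revenue": [0.88, 0.42, 0.08],
    "hr_policy": [0.05, 0.10, 0.95],
}
query = [0.87, 0.43, 0.10]  # stands in for an embedding of "Q3 2024 revenue"

print(top_k(query, chunks, k=2))  # ['q4_revenue', 'q3_revenue']
```

The unrelated HR chunk is easy to reject; the hard case is telling apart passages that are nearly identical in meaning but differ in the one detail the question depends on.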

Practitioners report the same gap. DigitalOcean’s analysis found that “just because two pieces of text are semantically similar doesn’t mean they’re both useful for answering your specific question.” One RAG practitioner put a number on it: “Even after optimizing the ‘Chunking + Embedding + Vector Store’ pipeline, accuracy is usually below 60%.”

Chunking compounds the issue. Breaking documents into fragments loses context and hides connections between sections. Consequently, the answer to a simple question can end up buried in irrelevant context, while a complex question that spans several sections may not get enough of it. Accuracy suffers.
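A minimal sketch of how structure-blind chunking strips context, assuming a naive splitter that cuts text into fixed windows of words. The report excerpt and the window size of 8 are made up for illustration; production pipelines usually use larger, overlapping chunks, which reduces but does not eliminate the effect.

```python
def fixed_size_chunks(text: str, size: int) -> list[str]:
    """Split text into consecutive windows of `size` words, ignoring document structure."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


# Hypothetical excerpt from a quarterly report (not real data).
report = (
    "Revenue Analysis. Q3 2024 results by geography follow. "
    "North America contributed the largest share this quarter. "
    "Total revenue was 4.3 billion dollars."
)

chunks = fixed_size_chunks(report, size=8)
for i, chunk in enumerate(chunks):
    print(i, chunk)

# The chunk holding the dollar figure no longer mentions "Q3 2024", so a retriever
# that scores chunks in isolation cannot tell which quarter the number belongs to.
figure_chunk = next(c for c in chunks if "4.3" in c)
print("Q3" in figure_chunk)  # False
```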

PageIndex’s 98.7% accuracy on FinanceBench, a benchmark requiring precise extraction from SEC filings, suggests this is more than a marginal improvement. It questions the foundational architecture of a market projected to reach $10.6 billion by 2032.

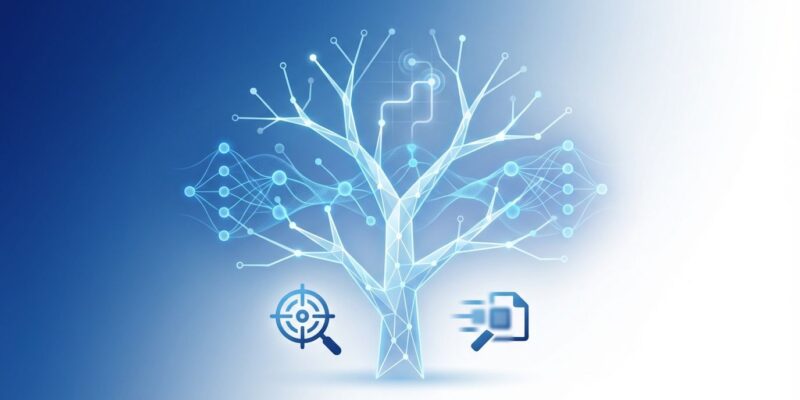

How PageIndex Works: Reasoning Over Searching

PageIndex replaces vector embeddings with a two-step approach. First, it builds hierarchical tree indexes from documents, preserving natural structure rather than chopping content into chunks. Second, it uses LLM reasoning plus tree search to navigate these indexes, mimicking how human experts read complex documents.
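The first step, turning a document into a page-ranged tree, can be sketched as follows. This is not PageIndex’s indexing code: it assumes the section headings, their nesting levels, and their start pages have already been extracted (for example from a table of contents), and it only shows how such a flat list nests into nodes shaped like the JSON example in this article. The `build_tree` function, the `node_id` format, and the sample headings are hypothetical.

```python
from typing import Any

Node = dict[str, Any]


def build_tree(doc_title: str, headings: list[tuple[int, str, int]], last_page: int) -> Node:
    """Nest (level, title, start_page) headings into a page-ranged tree.

    Levels start at 1 for top-level sections. A section ends on the page before
    the next heading at the same or a shallower level starts, or on last_page.
    """
    root: Node = {"title": doc_title, "node_id": "root", "children": []}
    stack: list[tuple[int, Node]] = [(0, root)]  # open ancestors as (level, node)
    for i, (level, title, start) in enumerate(headings):
        end = last_page
        for next_level, _, next_start in headings[i + 1:]:
            if next_level <= level:
                end = max(start, next_start - 1)
                break
        node: Node = {"title": title, "node_id": f"{i:04d}",
                      "start_page": start, "end_page": end, "children": []}
        # Close sections until the top of the stack is this heading's parent.
        while stack[-1][0] >= level:
            stack.pop()
        stack[-1][1]["children"].append(node)
        stack.append((level, node))
    return root


headings = [
    (1, "Revenue Analysis", 15),
    (2, "Q3 Revenue by Product", 15),
    (2, "Q3 Revenue by Geography", 18),
    (2, "Regional Outlook", 21),
    (1, "Operating Expenses", 23),
]
tree = build_tree("Q3 2024 Financial Report", headings, last_page=40)
geo = tree["children"][0]["children"][1]
print(geo["title"], geo["start_page"], geo["end_page"])  # Q3 Revenue by Geography 18 20
```

Each node keeps its page range, so a later retrieval step can fetch a whole section intact instead of reassembling it from fragments.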

Inspired by AlphaGo’s combination of deep learning and Monte Carlo Tree Search, PageIndex treats document retrieval as a reasoning problem. Traditional RAG asks: “Find chunks with high cosine similarity to this query embedding.” PageIndex asks: “If I need Q3 2024 revenue, I’d navigate to Financial Statements → Revenue Analysis → Q3 subsection.”

Here’s what the tree structure looks like:

```json
{
  "title": "Q3 2024 Financial Report",
  "node_id": "root",
  "children": [
    {
      "title": "Revenue Analysis",
      "start_page": 15,
      "end_page": 22,
      "children": [
        {
          "title": "Q3 Revenue by Geography",
          "start_page": 18,
          "end_page": 20
        }
      ]
    }
  ]
}
```

No chunking means context is preserved. No vectors means retrieval is explainable: you can trace the reasoning path and debug failures. However, this architectural shift trades speed and cost for accuracy. Vector search returns results in under a second at minimal API cost, because documents are embedded once up front and each query needs only a single embedding. PageIndex performs LLM reasoning at query time, with multiple API calls per query. It’s slower and pricier, but more accurate, which pays off when mistakes are costly.
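Navigation over a tree like the one above can be sketched as a loop that repeatedly asks “which child should I open?” and records each choice. This is not PageIndex’s API. `keyword_chooser` is a hypothetical stand-in for the LLM call (a real system would prompt a model with the query and the child titles or summaries), and the loop is a plain greedy descent with no backtracking, not the AlphaGo-style search the project describes. The “Q3 Revenue by Product” and “Operating Expenses” nodes are made-up siblings added so that each step involves a real choice.

```python
import re
from typing import Any, Callable, Optional

Node = dict[str, Any]


def words(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


def keyword_chooser(query: str, children: list[Node]) -> Optional[Node]:
    """Hypothetical stand-in for an LLM call: pick the child whose title shares
    the most words with the query, or None if no title overlaps at all."""
    best_score, best = max(((len(words(query) & words(c["title"])), c) for c in children),
                           key=lambda pair: pair[0])
    return best if best_score > 0 else None


def navigate(tree: Node, query: str,
             choose: Callable[[str, list[Node]], Optional[Node]]) -> tuple[list[str], Node]:
    """Descend from the root, asking `choose` which child to open at each level.
    Returns the titles along the way (the explainable trace) and the final node."""
    path, node = [tree["title"]], tree
    while node.get("children"):
        nxt = choose(query, node["children"])
        if nxt is None:  # nothing looks relevant; stop at the current section
            break
        path.append(nxt["title"])
        node = nxt
    return path, node


report = {
    "title": "Q3 2024 Financial Report", "node_id": "root",
    "children": [
        {"title": "Revenue Analysis", "start_page": 15, "end_page": 22, "children": [
            {"title": "Q3 Revenue by Product", "start_page": 15, "end_page": 17},
            {"title": "Q3 Revenue by Geography", "start_page": 18, "end_page": 20},
        ]},
        {"title": "Operating Expenses", "start_page": 23, "end_page": 30},
    ],
}

path, hit = navigate(report, "Q3 revenue by geography", keyword_chooser)
print(" -> ".join(path), hit["start_page"], hit["end_page"])
# Q3 2024 Financial Report -> Revenue Analysis -> Q3 Revenue by Geography 18 20
```

The returned `path` is what makes debugging possible: when an answer is wrong, you can see exactly which branch the chooser took.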

The Scalability Reality Check

Hacker News developers weren’t shy about raising concerns. The discussion thread (432+ points, 278 comments) exposed skepticism about scalability, latency, and cost. One developer noted: “I don’t see this scaling… you’d have to deal with lots of latency and costs.” Another flagged concerns about “document collections beyond a few hundred documents max.”

The critique is valid. PageIndex doesn’t eliminate RAG’s fundamental trade-offs; it shifts them. Traditional RAG offers sub-second latency at pennies per query. At the other extreme, feeding a full 10M-token context to a long-context LLM like Llama 4 Scout costs about $1.10 per inference, with 30-60 seconds of latency. PageIndex sits in between: query-time reasoning is slower than a vector lookup but likely faster than processing millions of tokens.
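Where “somewhere between” lands depends on how many LLM calls a query needs and how many tokens each call carries. The back-of-envelope model below assumes cost = calls × tokens per call × price per token and latency = calls × seconds per call, with calls running one after another. Only the long-context figures come from this article ($1.10 per 10M tokens, a 45-second midpoint of the 30-60 second range); the call counts, token counts, prices, and latencies for the other two strategies are hypothetical placeholders to replace with your own measurements. One-time indexing and embedding costs are ignored.

```python
from dataclasses import dataclass


@dataclass
class Strategy:
    name: str
    llm_calls: int          # LLM calls per query
    tokens_per_call: int    # prompt + completion tokens per call
    usd_per_million: float  # blended price per million tokens
    seconds_per_call: float

    def cost(self) -> float:
        return self.llm_calls * self.tokens_per_call * self.usd_per_million / 1_000_000

    def latency(self) -> float:
        # Assumes calls run sequentially, as in a step-by-step tree descent.
        return self.llm_calls * self.seconds_per_call


vector_rag = Strategy("vector RAG", 1, 4_000, 1.00, 0.8)              # hypothetical
tree_search = Strategy("tree search", 4, 3_000, 1.00, 2.0)            # hypothetical
long_context = Strategy("long context", 1, 10_000_000, 0.11, 45.0)    # $1.10 per 10M tokens

for s in (vector_rag, tree_search, long_context):
    print(f"{s.name:<13} ${s.cost():.3f}/query  {s.latency():.1f} s")
```

Multiplying the per-query cost by daily query volume is usually what settles the decision: a few extra cents per query is negligible for an analyst’s workload and significant for a consumer app.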

Furthermore, the “vectorless” framing drew pushback. As one HN commenter observed, PageIndex “gets there completely and totally with iterative and recursive calls to an LLM.” It’s not eliminating dependencies—it’s replacing vector approximation with LLM reasoning approximation. Both have costs.

PageIndex isn’t a universal RAG replacement. It’s a specialized tool that makes sense when accuracy justifies higher overhead. The real question is when that trade-off pencils out.

When PageIndex Beats Vectors (and When It Doesn’t)

PageIndex excels in precision-sensitive domains where “close enough” isn’t acceptable. Financial analysis is the primary use case: Mafin 2.5, powered by PageIndex, achieved 98.7% accuracy on FinanceBench questions requiring exact numbers from SEC filings. Traditional vector RAG’s 50% accuracy would be disastrous for financial decisions.

Other ideal domains include legal research (contract clause extraction, case law with context dependencies), medical records (patient history retrieval where hallucinations are unacceptable), and regulatory compliance (where traceability matters).

However, PageIndex is a poor fit for consumer-scale applications that need sub-second responses at high volume, and for large document corpora, where vectors still win on speed and cost. Many practitioners are converging on hybrid approaches: use vectors to filter 10,000 documents down to 100, then apply PageIndex for the final, precise retrieval. This combines the scale of vector search with the accuracy of reasoning.
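The hybrid pattern reduces to two stages: a cheap similarity ranking that shortlists `k` documents, followed by an expensive reasoning step that runs only on the shortlist. The toy sketch below uses four documents and `k=2` in place of 10,000 and 100. The document vectors are made up, and `toy_reason` is a hypothetical stand-in for a PageIndex-style tree search over one document; here it simply checks that every query word appears in the document text. The `calls` list records how many times the expensive step ran.

```python
import math
from typing import Callable


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hybrid_retrieve(query: str, query_vec: list[float],
                    corpus: dict[str, tuple[list[float], str]], k: int,
                    reason: Callable[[str, str], bool]) -> list[str]:
    # Stage 1: cheap vector prefilter keeps only the k nearest documents.
    shortlist = sorted(corpus, key=lambda d: cosine(query_vec, corpus[d][0]), reverse=True)[:k]
    # Stage 2: the expensive reasoning step runs on the shortlist only.
    return [d for d in shortlist if reason(query, corpus[d][1])]


calls: list[str] = []


def toy_reason(query: str, text: str) -> bool:
    """Hypothetical stand-in for reasoning-based retrieval within one document."""
    calls.append(text)
    return set(query.split()) <= set(text.split())


# Made-up 2-d document vectors and one-line document "contents".
corpus = {
    "acme_10q_q3": ([0.90, 0.10], "acme q3 2024 revenue by geography"),
    "acme_10q_q2": ([0.88, 0.15], "acme q2 2024 revenue by geography"),
    "beta_10k": ([0.60, 0.50], "beta annual revenue"),
    "acme_hr": ([0.10, 0.90], "acme vacation policy"),
}
hits = hybrid_retrieve("q3 2024 revenue", [0.89, 0.12], corpus, k=2, reason=toy_reason)
print(hits, len(calls))  # ['acme_10q_q3'] 2
```

The vector stage lets the near-duplicate Q2 filing through; the reasoning stage is what rejects it, and it only had to examine two documents instead of four.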

Decision criteria are straightforward: If accuracy is paramount, documents are well-structured, query volume is manageable, and cost/latency are acceptable trade-offs for precision, PageIndex makes sense. Otherwise, vectors remain the pragmatic choice.
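The checklist above can be written down as a single predicate, which makes the thresholds explicit and easy to argue about. This is a sketch: the `Workload` fields mirror the four criteria in the text, but the default limits (10,000 queries per day, a 10-second latency budget) are hypothetical placeholders, not recommendations from PageIndex or anyone else.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    accuracy_critical: bool     # are wrong answers costly (finance, legal, medical)?
    well_structured_docs: bool  # do documents have sections and headings to navigate?
    queries_per_day: int
    latency_budget_s: float     # how long a user can wait for an answer


def prefer_tree_search(w: Workload, max_queries_per_day: int = 10_000,
                       min_latency_budget_s: float = 10.0) -> bool:
    """Return True only when every criterion favors reasoning-based retrieval."""
    return (w.accuracy_critical
            and w.well_structured_docs
            and w.queries_per_day <= max_queries_per_day
            and w.latency_budget_s >= min_latency_budget_s)


analyst_tool = Workload(True, True, queries_per_day=500, latency_budget_s=30.0)
consumer_chat = Workload(False, False, queries_per_day=1_000_000, latency_budget_s=0.5)
print(prefer_tree_search(analyst_tool), prefer_tree_search(consumer_chat))  # True False
```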

RAG Evolves Despite Existential Questions

PageIndex’s timing is strategic. LLMs now support 2M-10M-token context windows (Gemini 1.5 Pro, Llama 4 Scout), sparking debate: “Why retrieve when you can stuff everything into context?” The answer involves cost (processing 10M tokens costs $1.10+ per query vs. RAG’s pennies), latency (30-60 seconds for large contexts vs. under a second for RAG), and accuracy (the “lost in the middle” problem, where models use information buried in the middle of a long context less reliably than information near its start or end).

RAGFlow’s 2025 year-end review points to an industry consensus that RAG and long-context will coexist, with the balance shifting by use case. PageIndex doesn’t just defend RAG’s relevance; it reimagines RAG’s implementation. Whether RAG survives the long-context era may depend on whether innovations like PageIndex can deliver accuracy gains that justify their overhead.

This is RAG’s evolution, not its obituary. PageIndex shows that reasoning-based retrieval can be viable and accurate, but it also exposes the trade-offs. The vector database market isn’t disappearing; it’s being forced to compete on dimensions beyond speed.

Key Takeaways

- PageIndex-powered Mafin 2.5 achieves 98.7% accuracy on FinanceBench by replacing vector search with LLM-powered reasoning and tree search, a 48.7-percentage-point improvement over traditional vector RAG’s ~50%

- Trade-offs matter: Accuracy gains come with higher cost and latency; PageIndex doesn’t suit every use case

- Ideal for precision-critical domains: Finance, legal, and medical applications where mistakes are costly

- Challenges, doesn’t replace: Vector databases (Pinecone, Weaviate, Chroma) still win on speed/cost; hybrid approaches likely

- RAG evolves: Despite long-context LLMs, innovations like PageIndex keep RAG relevant by focusing on accuracy over simplicity