A developer just unlocked a hidden feature in Claude Code that Anthropic doesn’t want you to know about yet. Yesterday, January 24, 2026, Mike Kelly discovered “Swarms” – a feature-flagged capability that transforms Claude Code from a single AI assistant into a team orchestrator. Instead of one AI writing your code, you get multiple specialist agents working in parallel, coordinating via shared task boards, and messaging each other to solve problems. Within hours, his discovery hit 281 points on Hacker News and drew 207 comments fiercely debating whether this is the future of development or a dangerous step too far.

Here’s what makes this discovery strange: Anthropic built this powerful capability, then hid it behind feature flags. No announcement, no documentation, no official release. Kelly had to create an unlock tool called claude-sneakpeek just to access it. Why hide your most advanced feature?

From AI Coder to AI Team Lead

The paradigm shift is stark. Traditional Claude Code writes code when you ask it to. Swarms mode doesn’t work that way. Instead, you talk to a team lead that plans, delegates, and synthesizes – but doesn’t write a single line of code itself.

When you approve a plan, it enters “delegation mode” and spawns specialist background agents. Each agent gets focused context and a specific role. They share a task board tracking dependencies, work on tasks simultaneously, and coordinate via inter-agent messaging using @mentions. Fresh context windows per agent prevent the token bloat that cripples single-agent approaches at scale.
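How a shared task board with dependencies lets work run in parallel can be sketched in a few lines of Python. This is purely illustrative: nothing here reflects how Swarms is implemented, and the `Task`, `TaskBoard`, and `run_in_waves` names, along with the example task names, are hypothetical. The idea is only that a task becomes available once everything it depends on is finished, so independent tasks naturally group into "waves" that separate agents could pick up at the same time.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    name: str
    role: str  # which specialist would pick this up (hypothetical labels)
    depends_on: set = field(default_factory=set)
    done: bool = False


class TaskBoard:
    """Hypothetical shared board: a task is ready once its dependencies finish."""

    def __init__(self, tasks):
        self.tasks = {t.name: t for t in tasks}

    def ready(self):
        """Unfinished tasks whose dependencies are all complete, sorted by name."""
        return sorted(
            (
                t
                for t in self.tasks.values()
                if not t.done and all(self.tasks[d].done for d in t.depends_on)
            ),
            key=lambda t: t.name,
        )

    def complete(self, name):
        self.tasks[name].done = True


def run_in_waves(board):
    """Group tasks into waves; every task in one wave could run in parallel."""
    waves = []
    while True:
        batch = board.ready()
        if not batch:  # nothing left, or the rest is blocked by a cycle
            break
        waves.append([t.name for t in batch])
        for t in batch:
            board.complete(t.name)
    return waves


board = TaskBoard([
    Task("api-schema", role="backend"),
    Task("backend-endpoints", role="backend", depends_on={"api-schema"}),
    Task("frontend-forms", role="frontend", depends_on={"api-schema"}),
    Task("integration-tests", role="testing",
         depends_on={"backend-endpoints", "frontend-forms"}),
    Task("docs", role="documentation", depends_on={"backend-endpoints"}),
])

waves = run_in_waves(board)
for i, wave in enumerate(waves, start=1):
    print(f"wave {i}: {wave}")
```

In this toy example the schema task runs alone, the backend and frontend tasks can then proceed side by side, and tests and docs follow once their inputs exist. A real orchestrator would also need to handle failures, retries, and tasks added mid-run, none of which this sketch attempts.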

Instead of Claude Code building a full-stack feature alone, the team lead might spawn frontend, backend, testing, and documentation specialists who work in parallel. The architecture agent maintains system design while code agents tackle different components. A testing agent validates changes continuously. Workers coordinate amongst themselves, not just with you.
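The worker-to-worker coordination described above, with agents addressing each other by @mention, can be pictured as simple inbox routing. The sketch below is an assumption-laden toy, not Swarms' actual messaging layer: the `MessageBus` class, the agent names, and the mention syntax it parses are all invented for illustration.

```python
import re

# Hypothetical mention syntax: "@" followed by a lowercase agent name.
MENTION = re.compile(r"@([a-z][a-z0-9_-]*)")


class MessageBus:
    """Toy router: a message lands in the inbox of every agent it @mentions."""

    def __init__(self, agents):
        self.inboxes = {name: [] for name in agents}

    def post(self, sender, text):
        """Deliver text to each mentioned, known agent; return the recipients."""
        recipients = []
        for name in MENTION.findall(text):
            known = name in self.inboxes
            if known and name != sender and name not in recipients:
                self.inboxes[name].append((sender, text))
                recipients.append(name)
        return recipients


bus = MessageBus(["frontend", "backend", "testing"])
delivered = bus.post(
    "frontend",
    "@backend the /users response is missing a field; @testing please add a case",
)
print(delivered)
```

The point of the design is that the human is not in the delivery path: the frontend worker reaches the backend and testing workers directly, which is what distinguishes this setup from a single assistant reporting back to you after every step.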

The Community Divide: Brilliant or Premature?

The Hacker News thread reveals a development community split three ways. Optimists report building complete projects in three days with swarms handling 50,000+ line codebases that choke single agents. The specialization creates natural quality checks – one developer loves “when CAB rejects implementations” from the review agent.

However, skeptics raise harder questions. “When Claude generates copious amounts of code, it makes it way harder to review than small snippets,” one commenter noted. Human code review becomes nearly impossible at swarm scale. Worse, agents sometimes make fundamentally wrong decisions – like trying to reimplement the Istanbul code-coverage tool instead of running npm install. The reliability just isn’t there yet.

Then there’s the liability problem. “If a human is not a decision maker in the production of the code, where does responsibility for errors propagate to?” This isn’t theoretical – legislators are already drafting laws requiring documented human accountability for AI-generated code. Knowledge loss concerns are real too. One engineer put it bluntly: “About 50% of my understanding comes from building code.”

The pragmatists split the difference: use swarms for scaffolding and exploration, but keep humans in the loop for production. They’re probably right.

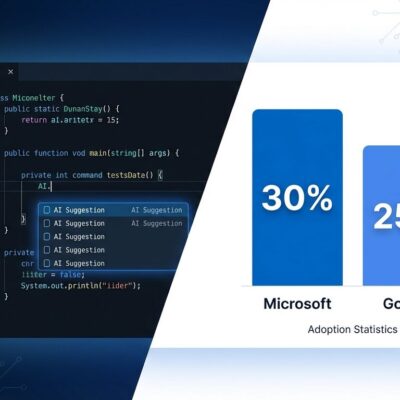

AI Coding Tool Wars Heat Up

This discovery doesn’t happen in a vacuum. GitHub Copilot just announced model deprecations on January 13, pushing hard into multi-model support. Cursor dominates large projects with its $20/month Pro tier and multi-file awareness. Windsurf Cascade pitches autonomous agentic workflows. Claude Code was known for architectural reasoning – now it’s racing to add orchestration.

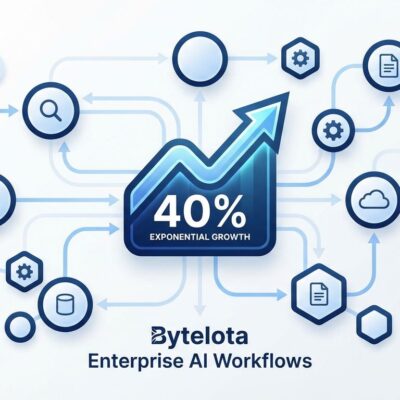

The industry pattern is clear: single powerful agent → orchestrated teams of specialists. It mirrors the shift from monolithic applications to microservices a decade ago. Gartner reports a 1,445% surge in multi-agent system inquiries from Q1 2024 to Q2 2025, and predicts that by the end of 2026, 40% of enterprise applications will include task-specific AI agents, up from less than 5% in 2025.

Anthropic sees where this is going. The question isn’t whether multi-agent systems are coming – it’s whether Swarms is ready now.

Why Anthropic Hid This Feature

Feature-flagging usually means one of three things: testing with power users before general release, waiting for competitive timing, or holding back a feature that isn’t ready for production. Given the reliability concerns developers are reporting, option three seems most likely.

The timing is suspicious though. Kelly’s discovery coincided with a Fortune article the same day about Claude Code’s “viral moment.” Either this is carefully orchestrated hype or Anthropic is scrambling because someone found their secret.

Either way, the cat’s out of the bag. Developers can try claude-sneakpeek now or wait for an official launch, which Anthropic has not yet announced.

What Developers Should Actually Do

If you’re building production systems, wait. Swarms mode is experimental, feature-flagged for good reasons, and has documented reliability issues. The code review problem alone should give you pause.

If you’re exploring or prototyping, Kelly’s unlock tool is on GitHub. Just know you’re using software the vendor hasn’t officially released. Read the 207-comment Hacker News thread first – it’s full of real experiences, not marketing.

Longer term, start learning to work with AI teams, not just AI assistants. The skill shift is happening whether you like it or not: from writing code → orchestrating, delegating, and reviewing AI teams. The developers who figure out when to trust AI, when to question it, and when to ignore it completely will be the ones who win in 2026.

One thing’s certain: the AI coding tool wars just got more interesting.