On January 12, 2026, Mark Zuckerberg announced Meta Compute—a new top-level initiative to build tens of gigawatts of AI infrastructure this decade and hundreds of gigawatts long-term. But this isn’t just about powering Meta’s AI ambitions. When energy access becomes the bottleneck for AI development, infrastructure isn’t just an operational cost. It’s a strategic moat. And Meta is building a wall.

Power Replaced Compute as AI’s Bottleneck

Three tech CEOs have independently reached the same conclusion: the bottleneck in AI has shifted from compute to power. The International Energy Agency projects that global electricity consumption from data centers, AI, and cryptocurrency could more than double, from an estimated 460 terawatt-hours (TWh) in 2022 to over 1,000 TWh in 2026. Goldman Sachs forecasts a 165% increase in data center power demand by 2030 compared to 2023.
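A 165% increase means 2030 demand would be 2.65 times its 2023 level, not 1.65 times. The short Python sketch below converts that forecast into an implied annual growth rate. It assumes smooth compound growth between the two endpoints, which is a simplification because real demand will not rise evenly. The function name `implied_annual_growth` is illustrative and does not come from any of the cited sources.

```python
def implied_annual_growth(percent_increase: float, years: int) -> float:
    """Return the constant yearly growth rate that compounds to the given total increase.

    Assumes smooth compound growth between the two endpoints (a simplification).
    """
    multiplier = 1 + percent_increase / 100  # a 165% increase ends at 2.65x the start
    return multiplier ** (1 / years) - 1


# Goldman Sachs forecast: +165% from 2023 to 2030, i.e. over 7 years.
rate = implied_annual_growth(165, 2030 - 2023)
print(f"Implied growth: {rate:.1%} per year")
```

That works out to roughly 15% compound growth every year for seven straight years.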

The US grid can’t keep pace. Seventy percent of America’s electrical infrastructure was built between the 1950s and 1970s. PJM Interconnection, the largest US grid operator (serving 65 million people across 13 states and the District of Columbia), projects a six-gigawatt shortfall by 2027. Meanwhile, a data center can be built in two to three years, but grid interconnection studies and infrastructure upgrades take four to eight years. That timeline mismatch is stalling AI projects now, not later.

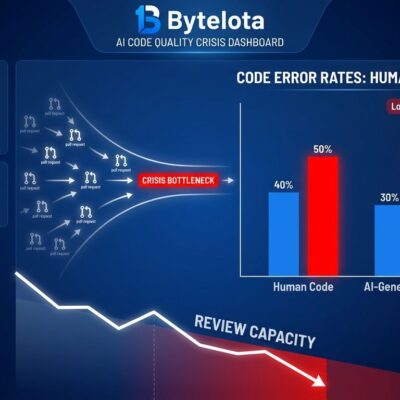

More than half of AI initiatives have been delayed or canceled in the last two years, with infrastructure complexity cited as the cause, according to survey research reported by The Register. Two-thirds of IT decision-makers at US enterprises say their AI environments are too complex to manage. This isn’t a future problem; it’s happening right now.

Meta Compute: Organization, Scale, and Nuclear Partnerships

Meta Compute is a top-level initiative reporting directly to Zuckerberg, consolidating software, hardware, and facilities management under unified leadership. The organization is led by Santosh Janardhan (technical architecture, in-house silicon, operations) and Daniel Gross (supply chain, long-range capacity planning). Dina Powell McCormick, Meta’s President and Vice Chairman, handles government partnerships and financing.

The scale is staggering. Meta plans to build tens of gigawatts this decade and hundreds of gigawatts long-term. For context, one gigawatt powers roughly 750,000 homes. To secure this capacity, Meta has become one of the most significant corporate purchasers of nuclear energy in American history. The company announced agreements with Vistra, TerraPower, and Oklo totaling up to 6.6 gigawatts of nuclear energy for Ohio and Pennsylvania data center clusters, as detailed in Meta’s official announcement.
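The homes-per-gigawatt rule of thumb makes the 6.6 GW nuclear figure easier to picture. The sketch below multiplies capacity by the approximate 750,000 homes per gigawatt quoted above. It ignores capacity factors and regional differences in household consumption, so the output is only an order-of-magnitude comparison.

```python
# Rule of thumb quoted above: one gigawatt powers roughly 750,000 homes.
HOMES_PER_GIGAWATT = 750_000


def homes_equivalent(gigawatts: float) -> int:
    """Approximate number of homes a given generating capacity could supply."""
    return round(gigawatts * HOMES_PER_GIGAWATT)


# Meta's announced nuclear agreements total up to 6.6 GW.
print(f"{homes_equivalent(6.6):,} homes")  # about 4.95 million
# "Tens of gigawatts" starts at 10 GW.
print(f"{homes_equivalent(10):,} homes")
```

By this measure, the nuclear deals alone cover the equivalent of roughly five million homes, and the low end of "tens of gigawatts" corresponds to about 7.5 million.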

“How we engineer, invest, and partner to build this infrastructure will become a strategic advantage,” Zuckerberg wrote in the announcement. That’s the key insight: Meta is treating infrastructure buildout as competitive strategy, not operational necessity. Energy access and supply chain control are now differentiators.

Every Major Tech Company Is Going Nuclear

Meta isn’t alone. Microsoft signed a 20-year power purchase agreement that underpins Constellation Energy’s $1.6 billion restart of Three Mile Island’s 835-megawatt Unit 1 reactor, targeting 2028 operation. Google signed what it calls the “world’s first corporate agreement to purchase nuclear energy from multiple small modular reactors,” to be developed by California-based Kairos Power, with the first reactor expected by the end of the decade. Amazon is investing at least $20 billion in Pennsylvania data centers, including a campus next to Talen Energy’s Susquehanna nuclear plant, and is partnering with X-energy on projects that could deliver 5,000+ megawatts by the late 2030s.

Hyperscalers are projected to spend $527 billion on AI infrastructure in 2026 alone, according to UBS estimates. Data centers equipped for AI processing loads could require $5.2 trillion in capital expenditures by 2030, according to McKinsey. NVIDIA CEO Jensen Huang called it the “largest infrastructure build-out in history,” requiring “trillions of dollars.”

When Google, Microsoft, Amazon, and Meta all agree on the same strategy, it’s not innovation—it’s cartel behavior. They’re not competing on AI capabilities anymore. They’re competing to lock out everyone else.

Barriers to Entry Are Rising for Everyone Else

For developers and companies that can’t afford gigawatt-scale infrastructure, options are shrinking. Cloud costs are rising while complexity increases. At least 15% of enterprises are shifting toward private AI deployments on private clouds in 2026, driven by cost pressures and data sovereignty concerns, according to Forrester Research.

The open-source AI movement promised democratization, but infrastructure costs tell a different story. A typical self-hosted Llama 70B deployment requires eight A100 GPUs, costing roughly $80,000 annually in cloud expenses plus engineering overhead. That only breaks even against paying for GPT-4 API access once monthly API spend would exceed about $6,700 (the $80,000 annual cost divided by 12), which takes a steady, high token volume. For smaller projects and startups, the math doesn’t work.
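The choice between self-hosting and an API comes down to a break-even calculation: divide the monthly cost of the dedicated deployment by the API's price per token. The Python sketch below does that. You have to supply the blended per-million-token price from the provider's current price list. Input and output tokens are usually priced differently, so any blended rate is itself an assumption. The sketch also leaves out the engineering overhead mentioned above, which makes self-hosting look cheaper than it is.

```python
def break_even_tokens_per_month(annual_self_host_cost: float,
                                api_price_per_million_tokens: float) -> float:
    """Monthly token volume at which self-hosting costs the same as paying per token.

    Below this volume the API is cheaper; above it, the fixed-cost deployment wins.
    Engineering overhead is not included.
    """
    monthly_cost = annual_self_host_cost / 12
    return monthly_cost / api_price_per_million_tokens * 1_000_000


# Hypothetical inputs for illustration only: $12,000/year of hardware rental
# against an API charging a blended $10 per million tokens.
volume = break_even_tokens_per_month(12_000, 10.0)
print(f"Break-even at about {volume / 1e6:.0f} million tokens per month")
```

If you plug in the $80,000 annual figure and the actual rate of the API being replaced, you get the volume above which self-hosting starts to pay off. The cheaper the API, the higher that threshold climbs.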

GPU utilization remains below 30 to 40 percent in many environments, forcing organizations to choose between wasting expensive resources and throttling innovation. Most startups won’t build a true moat around AI; many will be acquired, copied, or outpaced by incumbents with broader reach and faster scale, according to analysis from Latitude Media.
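Low utilization is expensive because idle capacity is still billed. One simple way to see this is to divide the hourly price by the fraction of time the GPU does useful work. The $2.00 per GPU-hour rate below is hypothetical, not a quoted price.

```python
def effective_cost_per_useful_hour(hourly_price: float, utilization: float) -> float:
    """Cost of one hour of useful GPU work when only `utilization` of paid time is used."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return hourly_price / utilization


# Hypothetical $2.00/GPU-hour rate at the utilization levels cited above.
for util in (0.30, 0.40, 1.00):
    cost = effective_cost_per_useful_hour(2.00, util)
    print(f"{util:.0%} utilization -> ${cost:.2f} per useful GPU-hour")
```

At 30 to 40 percent utilization, each hour of real work costs 2.5 to about 3.3 times the sticker price, whatever that price is.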

Infrastructure Is the New Oligopoly

The industry sold us on “AI democratization.” Open models, accessible APIs, developer-friendly tools—the narrative was that AI would level the playing field. But when energy access becomes the limiting factor, only companies with nuclear partnerships and gigawatt-scale infrastructure can compete at scale. That’s not democratization. That’s oligopoly.

We’re witnessing the creation of AI landlords. Meta, Google, Microsoft, and Amazon will own the physical infrastructure and rent access while locking out competitors. The question for everyone else isn’t “Can we build better AI?” It’s “Can we afford to run it?”

You can have the weights. You can have the code. But when infrastructure access requires government partnerships and multi-billion-dollar energy commitments, “open” becomes meaningless. The playing field isn’t level—and it’s getting steeper.