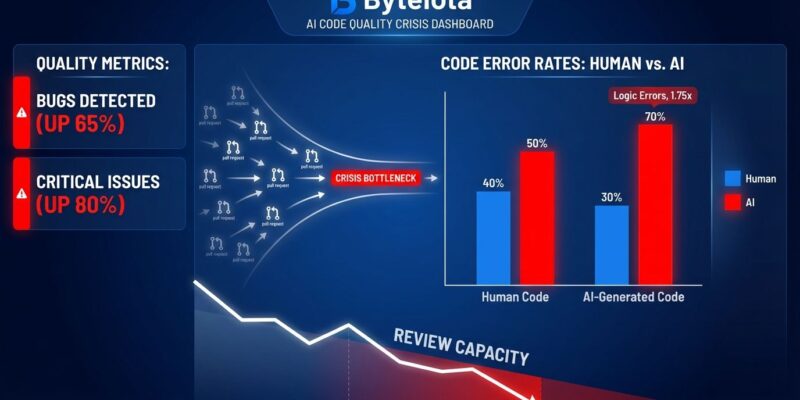

Developers are coding 20% faster with AI tools like GitHub Copilot and Cursor. But here’s the catch: while pull requests per author increased 20% year-over-year, incidents per pull request jumped 23.5%. The bottleneck didn’t disappear—it shifted from writing code to reviewing it. Engineering managers now face mountains of AI-generated diffs they can’t properly validate, and December 2025 data reveals a systemic quality crisis nobody’s addressing.

AI Code Contains 1.75× More Logic Errors

CodeRabbit’s December 2025 analysis of 470 GitHub pull requests found that AI-generated code produces about 1.7× more issues overall: 10.83 issues per PR versus 6.45 for human code. The study compared 320 AI-co-authored PRs against 150 human-only PRs using a structured issue taxonomy.

The breakdown is worse than most teams realize. Logic and correctness errors occur 1.75× more frequently in AI code. Code quality and maintainability issues jump 1.64×. Security vulnerabilities rise 1.57×. Performance problems increase 1.42×. Some categories spike even higher: XSS vulnerabilities are 2.74× more likely, and excessive I/O operations occur roughly 8× more often.

Severity follows the same pattern: AI code has 1.4× more critical issues and 1.7× more major issues. This isn’t a minor quality dip. It’s a measurable degradation across every category that matters: logic, security, performance, and maintainability.

The root causes are clear: AI doesn’t understand local business logic, produces surface-level correctness without control-flow protection, deviates from repository-specific conventions, and needs explicit constraints to avoid insecure patterns. It also favors clarity over computational efficiency because it doesn’t know your domain.

Teams Generate Code Faster Than They Can Review It

In 2025, monthly code pushes climbed past 82 million. Merged pull requests reached 43 million. 41% of new code originated from AI-assisted generation. Meanwhile, review capacity remained flat.

The numbers tell the story. PR volume increased 20% year-over-year. Median PR size grew 33%, from 57 to 76 lines changed between March and November 2025. Yet review throughput stayed constant. Engineering organizations report that review capacity, not developer output, is now the limiting factor in delivery.

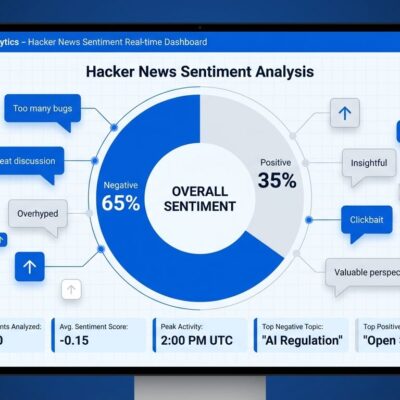

The context gap makes this worse. Among developers who say AI degrades code quality, 44% blame missing context. Even among developers who say AI improved quality, 53% still want better contextual understanding. 65% report that AI tools miss critical context during important tasks.

The #1 requested fix is “improved contextual understanding,” capturing 26% of all developer votes according to Qodo AI’s Code Quality Report. Without context, AI-generated code becomes a liability. Developers spend 60-70% of their time just understanding code, and AI tools aren’t solving that problem—they’re compounding it.

As the CodeRabbit report notes: “Human reviewers experienced fatigue handling elevated code volumes, potentially causing additional oversight issues.” When review capacity can’t scale with generation speed, quality suffers.

Speed Without Quality Is Just Technical Debt

Sonar’s 2026 State of Code survey found 96% of developers don’t fully trust the functional accuracy of AI-generated code. There’s a reason: as one industry observer noted, “Code looks fine until the review starts.”

The productivity narrative is broken. Teams using AI see code generation speed increase 30%, but actual velocity improves only 10-20%. The gap? Verification can’t keep pace with generation speed.
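One way to see why a 30% gain in generation speed shrinks to a 10-20% gain in delivery is an Amdahl-style estimate. The sketch below assumes that only the coding share of cycle time gets faster while review and verification stay fixed. The function name and the 40% and 70% coding shares are illustrative assumptions, not figures from any of the reports cited here.

```python
def overall_speedup(coding_share: float, coding_speedup: float) -> float:
    """Amdahl-style estimate: only the coding share of cycle time speeds up."""
    # Time left after speeding up coding, as a fraction of the original cycle time.
    new_time = coding_share / coding_speedup + (1 - coding_share)
    return 1 / new_time


# Hypothetical coding shares of total cycle time (assumed, not measured).
for share in (0.4, 0.7):
    gain = overall_speedup(share, 1.30) - 1
    print(f"coding share {share:.0%}: delivery gain {gain:.1%}")
```

Under these assumptions, a team that spends 40% of its cycle time writing code gains roughly 10% overall, and a team that spends 70% gains roughly 19%. The rest of the 30% is absorbed by the steps AI did not speed up.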

Google’s DORA 2024 report, in which 75.9% of respondents said they use AI for at least part of their work, estimated that a 25% increase in AI adoption is associated with a 1.5% decrease in delivery throughput and a 7.2% decrease in delivery stability. Faster coding doesn’t mean faster shipping when quality control becomes the bottleneck.

The math is stark: 20% more PRs, each carrying 23.5% more incidents on average, means bugs accumulate faster than features. AI-authored code contains worse bugs and requires more attention than human-written code. As a result, the long-term maintenance burden is exploding.
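To make the compounding explicit, here is a minimal sketch using the two figures quoted above. It assumes incidents per author equal PRs per author multiplied by incidents per PR, so the two growth rates multiply rather than add. The function name is illustrative.

```python
def incident_growth(pr_growth: float, incident_rate_growth: float) -> float:
    """Growth in incidents per author when both PR volume and incident rate rise."""
    # Incidents per author = PRs per author x incidents per PR,
    # so the growth factors multiply.
    return (1 + pr_growth) * (1 + incident_rate_growth) - 1


growth = incident_growth(pr_growth=0.20, incident_rate_growth=0.235)
print(f"Incidents per author: +{growth:.1%}")  # prints "Incidents per author: +48.2%"
```

Under that assumption, a 20% rise in output comes with roughly a 48% rise in incidents per author.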

This is technical debt at scale. Speed without quality control creates negative outcomes, not productivity gains.

Hybrid Review Models Are the Way Forward

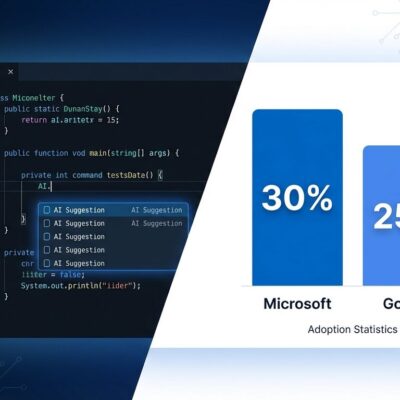

The solution isn’t abandoning AI—it’s hybrid human-AI workflows. Code review agent adoption surged from 14.8% in January 2025 to 51.4% in October, driven primarily by GitHub Copilot Code Review’s general availability.

What works: AI handles mechanical issues like syntax checking, formatting enforcement, common patterns, and security scanning. Humans review architecture decisions, business logic validation, context-dependent code, and security-critical changes. Teams using AI-assisted review tools report 30% faster merge request approvals for small and medium-sized changes.

Best practices are emerging. Smaller PRs reduce review burden per pull request. Better AI prompts with more context produce fewer review issues during generation. Context-aware tools like Qodo’s Context Engine learn repository-specific patterns instead of treating every codebase the same. Tiered review processes let AI handle simple PRs while humans focus on complex changes.
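A tiered review process can start as a simple routing rule. The sketch below is one possible shape, not a description of any vendor’s product: the `PullRequest` type, the 100-line threshold, and the sensitive path prefixes are all hypothetical and would need tuning per repository.

```python
from dataclasses import dataclass, field

# Hypothetical values; adjust them for your own codebase.
SMALL_PR_MAX_LINES = 100
SENSITIVE_PREFIXES = ("auth/", "payments/", "migrations/")


@dataclass
class PullRequest:
    lines_changed: int
    paths: list[str] = field(default_factory=list)


def review_tier(pr: PullRequest) -> str:
    """Route large or security-sensitive PRs to humans, the rest to AI-first review."""
    # str.startswith accepts a tuple and matches any of the prefixes.
    touches_sensitive = any(p.startswith(SENSITIVE_PREFIXES) for p in pr.paths)
    if touches_sensitive or pr.lines_changed > SMALL_PR_MAX_LINES:
        return "human"
    return "ai-first"


print(review_tier(PullRequest(20, ["docs/readme.md"])))   # ai-first
print(review_tier(PullRequest(20, ["auth/login.py"])))    # human
```

Keeping the rule this explicit makes it easy to audit which changes skipped full human review, and to tighten the thresholds if incidents cluster in the AI-first tier.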

The market is responding. Copilot and Cursor have 89% retention rates 20 weeks post-adoption. Claude Code maintains 81% retention. Nearly 50% of companies now have at least 50% AI-generated code, up from 20% at the start of 2025.

If AI speeds up coding, code review must speed up too. Hybrid models scale review capacity without sacrificing quality. That’s the 2026 imperative: solving the review bottleneck, not just celebrating generation speed.