Three AI giants launched competing healthcare platforms within six days in January 2026. OpenAI announced ChatGPT Health on January 7, followed by Anthropic’s Claude for Healthcare on January 11 at the J.P. Morgan Healthcare Conference, and Google’s MedGemma 1.5 on January 13. The clustered timing is no coincidence: it is strategic positioning for a healthcare AI market projected to hit $40-45 billion by 2026.

When three tech giants announce similar products within a week, that’s competitive strategy, not innovation. They’re racing to establish market dominance before regulation solidifies.

Three Strategies: Consumer, Enterprise, Open-Source

Each company picked a different lane. OpenAI targets consumers with ChatGPT Health, connecting personal medical records and wellness apps like Apple Health and MyFitnessPal. Over 230 million people already ask health questions on ChatGPT weekly—OpenAI is converting existing usage into a dedicated product.

Anthropic focuses on enterprises. Claude for Healthcare integrates with hospital systems via FHIR (Fast Healthcare Interoperability Resources), CMS databases, and ICD-10 coding. Early partners Novo Nordisk and Eli Lilly report reducing documentation time from weeks to minutes. This is enterprise software, not consumer tech—Claude targets the institutional buyers who control healthcare budgets.
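
To make the FHIR integration point concrete, here is a minimal sketch of reading a FHIR R4 Patient resource in Python. It is not taken from any vendor's SDK: the `PatientSummary` class, the `summarize_patient` helper, and the sample record are hypothetical names invented for illustration. Only the field layout (`resourceType`, `name`, `birthDate`, `gender`) comes from the FHIR specification.

```python
import json
from dataclasses import dataclass


@dataclass
class PatientSummary:
    """Hypothetical container for a few commonly used Patient fields."""
    patient_id: str
    full_name: str
    birth_date: str
    gender: str


def summarize_patient(resource: dict) -> PatientSummary:
    """Extract basic demographics from a FHIR R4 Patient resource."""
    if resource.get("resourceType") != "Patient":
        raise ValueError("expected a FHIR Patient resource")
    # Patient.name is a list of HumanName objects; use the first entry.
    names = resource.get("name") or [{}]
    first = names[0]
    given = " ".join(first.get("given", []))
    family = first.get("family", "")
    return PatientSummary(
        patient_id=resource.get("id", ""),
        full_name=f"{given} {family}".strip(),
        birth_date=resource.get("birthDate", ""),
        gender=resource.get("gender", "unknown"),
    )


# Synthetic sample record, not real patient data.
SAMPLE_PATIENT = json.loads("""
{
  "resourceType": "Patient",
  "id": "example-123",
  "name": [{"family": "Rivera", "given": ["Ana", "Maria"]}],
  "gender": "female",
  "birthDate": "1984-03-09"
}
""")

summary = summarize_patient(SAMPLE_PATIENT)
print(summary.full_name, summary.birth_date)
```

Real integrations would fetch such resources from a hospital's FHIR server over an authenticated connection. The parsing step is shown in isolation because it is the part that stays the same across vendors.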

Google took the open-source route. MedGemma 1.5 offers free models for 3D medical imaging (CT/MRI scans) and histopathology analysis. Millions of downloads on Hugging Face signal strong developer adoption. Google is betting on ecosystem lock-in—give away the models, capture the cloud infrastructure revenue.

Why Six Days? This Is a Land Grab

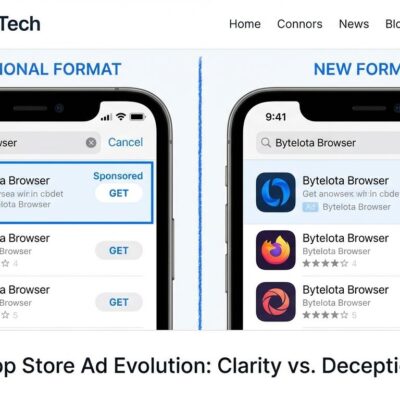

The timing reveals competitive dynamics. Launches this close together suggest the companies are responding to each other rather than innovating independently. Healthcare AI is advancing faster than regulatory frameworks, creating a window in which first movers can set de facto standards.

The healthcare AI market is projected to reach $40-45 billion by 2026 and to grow at 38-46% annually through 2030, with forecasts putting it at around $110 billion by decade’s end. Companies are positioning now because regulatory clarity is coming: HHS proposed new AI risk management rules in 2025, but full enforcement hasn’t arrived yet.
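
The market figures can be sanity-checked with compound-growth arithmetic. The sketch below assumes the 2026 estimate is the base and that growth compounds for four years through 2030. Both are assumptions, since the forecasts quoted here do not state their base year. Under those assumptions the quoted rates give roughly $145 billion to $204 billion, more than the $110 billion figure, which suggests the numbers come from forecasts with different baselines. The function names are illustrative.

```python
def project(base_billion: float, annual_rate: float, years: int) -> float:
    """Compound a starting market size forward by a fixed annual growth rate."""
    return base_billion * (1 + annual_rate) ** years


def implied_cagr(start_billion: float, end_billion: float, years: int) -> float:
    """Annual growth rate that turns start_billion into end_billion over `years`."""
    return (end_billion / start_billion) ** (1 / years) - 1


YEARS = 4  # assumed horizon: 2026 through 2030

low_2030 = project(40.0, 0.38, YEARS)   # low base, low rate
high_2030 = project(45.0, 0.46, YEARS)  # high base, high rate
rate_for_110 = implied_cagr(40.0, 110.0, YEARS)

print(f"Projected 2030 range: ${low_2030:.0f}B to ${high_2030:.0f}B")
print(f"Rate implied by $40B -> $110B: {rate_for_110:.1%}")
```

Reaching $110 billion from a $40 billion base over four years needs annual growth of only about 29%, so it is worth checking which base year and rate a given forecast uses before comparing them.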

“Artificial intelligence in healthcare is advancing faster than the law,” notes a Milbank Quarterly analysis. “Without one clear AI statute, clinicians are practicing in a legal environment where different rules overlap and evolve.” Translation: Move fast while the rules are still being written.

HIPAA Compliance: Critical But Confusing

All three platforms emphasize HIPAA compliance, but the requirements are easy to get wrong. Handling protected health information with AI typically calls for Business Associate Agreements (BAAs), zero-data-retention policies, encrypted isolated storage, and audit logging. Consumer AI plans such as ChatGPT Free/Plus or Claude Pro do not come with BAAs and are not HIPAA compliant. Only enterprise tiers qualify.
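
The four safeguards listed above can be treated as a pre-deployment checklist. The following is a minimal sketch of such a gate, not a compliance tool: `DeploymentProfile`, `missing_safeguards`, and the two sample profiles are hypothetical, and the boolean flags stand in for evidence a compliance team would have to verify.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeploymentProfile:
    """Hypothetical description of how an AI service is deployed."""
    name: str
    baa_signed: bool
    zero_data_retention: bool
    encrypted_isolated_storage: bool
    audit_logging: bool


def missing_safeguards(profile: DeploymentProfile) -> list[str]:
    """Return the safeguards from the checklist that the profile lacks."""
    checks = {
        "Business Associate Agreement": profile.baa_signed,
        "zero data retention": profile.zero_data_retention,
        "encrypted isolated storage": profile.encrypted_isolated_storage,
        "audit logging": profile.audit_logging,
    }
    return [label for label, present in checks.items() if not present]


# Illustrative profiles only; real plans must be checked against vendor terms.
consumer_plan = DeploymentProfile("consumer chat plan", False, False, False, False)
enterprise_tier = DeploymentProfile("enterprise tier with BAA", True, True, True, True)

for profile in (consumer_plan, enterprise_tier):
    gaps = missing_safeguards(profile)
    status = "ready for PHI" if not gaps else "blocked, missing: " + ", ".join(gaps)
    print(f"{profile.name}: {status}")
```

Encoding the checklist as data rather than prose makes it easy to run in a deployment pipeline, so a service without a BAA never receives patient data by accident.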

ChatGPT Health uses purpose-built encryption and isolation, keeping health conversations separate from regular ChatGPT usage. Claude for Healthcare offers HIPAA-ready infrastructure with BAAs, audit logs, and Constitutional AI tuned for clinical ethics. Both commit to zero-training policies: health data never trains future models.
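
Neither vendor's audit-log implementation is described here. One common, generic way to make such logs tamper-evident is hash chaining, where each entry stores the hash of the previous one. The sketch below uses only the Python standard library. The function names, the field names, and the sample actors are assumptions made for illustration.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder hash that precedes the first entry


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the entry body."""
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_entry(log: list, actor: str, action: str, record_ref: str) -> dict:
    """Append an access event that is chained to the previous entry's hash."""
    body = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "action": action,
        "record_ref": record_ref,  # an opaque reference, never the PHI itself
        "prev_hash": log[-1]["entry_hash"] if log else GENESIS_HASH,
    }
    entry = {**body, "entry_hash": _digest(body)}
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Recompute every hash and confirm each entry points at its predecessor."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if body["prev_hash"] != prev_hash or _digest(body) != entry["entry_hash"]:
            return False
        prev_hash = entry["entry_hash"]
    return True


audit_log = []
append_entry(audit_log, "dr.example", "read", "patient/example-123")
append_entry(audit_log, "ai-assistant", "summarize", "patient/example-123")
print("chain intact:", verify_chain(audit_log))
```

Editing any stored entry changes its hash and breaks the link to every later entry, so `verify_chain` fails. A production system would also need write-once storage and access controls, which this sketch leaves out.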

The common mistake? Putting patient data into public AI tools without a BAA can violate HIPAA, and a single breach can cost millions in fines. For developers entering healthcare AI, compliance isn’t optional; it’s table stakes.

Who’s Responsible When AI Makes Mistakes?

Healthcare AI creates a liability vacuum. When AI gives wrong medical advice, who’s legally responsible—the physician who relied on it, the hospital that deployed it, or the AI company that built it? U.S. malpractice law has no clear answer.

“Under current U.S. malpractice law, liability rests on the ‘reasonable physician under similar circumstances’ standard,” explains a Stanford HAI policy brief. “There is no doctrine assigning shared responsibility to AI systems, even when their recommendations directly influence patient care.”

The EU is exploring no-fault liability rules for high-risk AI failures, but the U.S. has no equivalent framework. This legal gray zone creates risk for everyone: developers, healthcare providers, and patients. Companies are deploying healthcare AI faster than liability frameworks can evolve.

What This Means for Developers

Healthcare AI is creating specialized roles—data scientists, ML engineers, clinical informaticists, AI product managers. But entry barriers are high. You need technical skills (Python, TensorFlow, FHIR integration) plus healthcare domain knowledge (medical terminology, HIPAA regulations, clinical workflows).

Job listings emphasize interdisciplinary requirements: programming languages like Python or R, database management with SQL, healthcare-specific software including EHR systems, and regulatory expertise around HIPAA and medical device standards. It’s not enough to know AI—you must understand healthcare systems.

This career path is less crowded than general software development and can pay more, but the learning curve is steep. For developers willing to invest in healthcare-specific education, opportunities exist now while the field is young and standards are still forming.

Key Takeaways

- Three approaches, one market: OpenAI targets consumers, Anthropic targets enterprises, Google offers open-source models. Pick your lane based on compliance tolerance and business model.

- Timing is strategy: The six-day launch window reveals competitive positioning before regulation solidifies. First movers are setting standards.

- Compliance is mandatory: HIPAA violations can cost millions. Consumer AI plans don’t qualify; only enterprise tiers with BAAs do.

- Liability is unclear: Legal frameworks haven’t caught up. When AI makes mistakes, responsibility remains undefined.

- Opportunities for specialists: Healthcare AI roles are emerging, but require technical skills plus healthcare domain knowledge.

Healthcare AI shifted from experimental to market-ready in January 2026. The race is on.