While the tech industry obsesses over bigger AI models and more GPUs, a counter-trend is gaining serious momentum in 2026. Dell, Gartner, Digitimes, and IBM have each called this year the inflection point at which edge AI moves from experimental deployments to production scale. Three developments drive the shift: small language models that deliver 80-90% of large-model capabilities on specific tasks while running entirely on-device, quantization breakthroughs that shrink models 4-8x, and hybrid edge-cloud architectures that distribute workloads strategically. This isn’t hype; it’s an architectural shift produced by the convergence of technical maturity, infrastructure readiness, and regulatory pressure.

The Small Language Model Revolution

Gartner predicts that by 2027 organizations will use task-specific small language models three times more than general-purpose LLMs, and the technical foundation for this shift is already here. SmoothQuant, published at ICML 2023 by researchers at MIT and NVIDIA, enables 8-bit weight and activation quantization (W8A8) with up to 1.56x speedup and 2x memory reduction while keeping accuracy nearly lossless. OmniQuant, presented at ICLR 2024, pushes further into aggressive 4-bit weight and activation quantization (W4A4), reporting accuracy improvements of +4.99% to +11.80% over baseline approaches.
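
To make "8-bit quantization" concrete, here is a minimal sketch of plain symmetric round-to-nearest quantization of a handful of weights. It is not the method of either paper, only the basic step they build on; the function names and the sample weights are made up for illustration.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: floats -> int8 codes plus one scale.

    Illustrative helper, not an API from SmoothQuant or OmniQuant.
    """
    max_abs = max(abs(v) for v in values)
    # One scale for the whole tensor maps the largest magnitude to 127.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float values from int8 codes."""
    return [c * scale for c in codes]


weights = [0.42, -1.27, 0.05, 0.90]  # hypothetical weights
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
# Worst-case rounding error is at most half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, max_error)
```

Each weight is stored in one byte instead of two or four, at the cost of a rounding error bounded by half the scale. A single outlier inflates the scale for every other value in the tensor, which is the kind of problem calibration-based methods try to reduce.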

The practical result? Models with millions to low billions of parameters running on laptops, mobile devices, and industrial edge nodes—delivering 80-90% of large model capabilities for specific tasks while consuming a fraction of the resources. Google’s Gemma-3n-E2B-IT demonstrates where this leads: a multimodal SLM handling text, image, audio, and video inputs entirely on-device.
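
A quick back-of-the-envelope calculation shows why bit width matters so much for on-device models. The sketch below counts weight storage only (it ignores activations, caches, and the small overhead of quantization scales), and the 2-billion-parameter model is a hypothetical example, not a specific product.

```python
def weight_memory_gb(num_params: int, bits_per_weight: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


params = 2_000_000_000  # hypothetical 2B-parameter model
for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_memory_gb(params, bits):.1f} GB")

# Size reduction relative to a 32-bit baseline (an assumed baseline).
reduction_int8 = weight_memory_gb(params, 32) / weight_memory_gb(params, 8)
reduction_int4 = weight_memory_gb(params, 32) / weight_memory_gb(params, 4)
```

Against a 32-bit baseline, 8-bit weights are 4x smaller and 4-bit weights are 8x smaller, which is one way to arrive at a 4-8x range. Against a 16-bit baseline the same formats give 2x and 4x.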

For developers, this changes what’s possible at the edge. Task-specific models can now match or outperform general-purpose LLMs on focused use cases without requiring constant cloud connectivity. The question is no longer whether SLMs work, but what new architectures they enable.

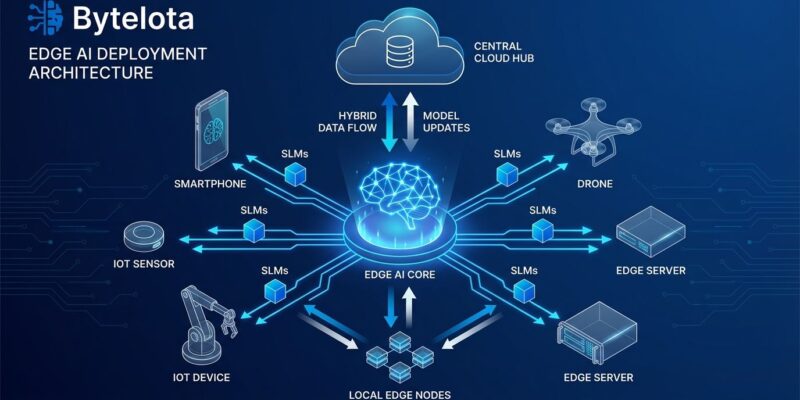

Hybrid Edge-Cloud Architecture Replaces Cloud-First

The industry is abandoning both “cloud-only” and “edge-only” extremes. Hybrid edge-cloud architectures place simple, frequent, latency-sensitive decisions at the edge while complex computations, model training, and aggregated analytics happen in the cloud. Architectural patterns include hub-and-spoke deployments where regional cloud hubs manage edge device clusters, and multi-tier systems with edge devices feeding fog servers that connect to cloud infrastructure.

Manufacturing offers clear examples: factory sensors perform real-time quality control at the edge with microsecond response times, on-premises fog servers handle preprocessing, and cloud infrastructure trains models and aggregates cross-facility analytics. Retail follows similar patterns—in-store cameras process customer behavior for immediate personalization while the cloud analyzes trends across locations. Healthcare deploys patient monitors that detect anomalies instantly without data leaving the device, then aggregates population health insights in the cloud.

This requires developers to rethink workload placement entirely. The question shifts from “edge vs cloud” to “which processing happens where, and why?” Infrastructure teams face new challenges: orchestrating thousands of distributed edge nodes while maintaining centralized control, managing hybrid deployments, and designing for eventual consistency. These aren’t theoretical concerns; they’re production requirements for 2026 edge AI deployments.

Production Reality: Where 70% of Pilots Fail

Despite industry enthusiasm, the numbers tell a harder story. Fewer than 33% of organizations have fully deployed edge AI, and approximately 70% of Industry 4.0 edge AI projects stall in the pilot phase. The gap between proof of concept and production reveals fundamental operational challenges.

Edge orchestration (deploying, updating, and securing AI models across thousands of diverse devices) is the primary production blocker. Hardware diversity compounds the problem: different edge devices use different communication protocols, which creates interoperability problems. Security vulnerabilities multiply because edge devices are physically accessible and often deployed in uncontrolled environments, without the dedicated protection teams that guard centralized cloud servers. The EU AI Act, with most of its obligations scheduled to apply from August 2026, requires high-risk AI systems to be auditable, traceable, and explainable, which is difficult to achieve across distributed edge fleets.

However, production-ready solutions are emerging. Self-healing infrastructure, in which AI agents autonomously restart services, reroute traffic, and isolate faulty sensors, is achieving 99.999% uptime in deployed systems from Scale Computing and telecommunications providers. The shift from experimental to production-grade edge AI is happening now, but it requires planning for device management, security patching, failure recovery, and monitoring at scale from day one.
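
It helps to translate an uptime percentage into a yearly downtime budget, since that is the number an operations team actually has to hit. The short calculation below assumes a 365-day year; the function name is illustrative.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def downtime_budget_minutes(availability_percent: float) -> float:
    """Minutes of downtime per year permitted at a given availability."""
    return (1 - availability_percent / 100) * MINUTES_PER_YEAR


for availability in (99.9, 99.99, 99.999):
    budget = downtime_budget_minutes(availability)
    print(f"{availability}% uptime allows about {budget:.2f} minutes of downtime per year")
```

At 99.999%, the budget is roughly five minutes per year across all causes. That leaves little room for a human to be paged, diagnose, and travel to a remote device, which is why automated recovery matters at this availability level.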

Developers building edge AI systems need to budget 30-40% of project effort for operational concerns, not just AI model development. The pilot-to-production gap is where most projects die—those that succeed treat infrastructure as a first-class concern, not an afterthought.

Real-World Proof Points: Manufacturing, Retail, Healthcare

Production deployments demonstrate measurable business value. In manufacturing, programmable logic controllers make microsecond adjustments for quality control, using edge AI to analyze equipment vibration, temperature, and pressure in real time. Predictive maintenance detects early signs of failure before major issues occur, reducing downtime and maintenance costs.
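
As a rough illustration of on-device anomaly detection, the sketch below flags vibration readings that deviate sharply from a rolling window of recent values. A rolling z-score is a simple statistical stand-in for the edge AI models described above, not what any particular vendor ships; the class name, window size, threshold, and sample readings are all assumptions.

```python
from collections import deque
from statistics import mean, stdev


class VibrationMonitor:
    """Flags readings far from the recent rolling average (hypothetical helper)."""

    def __init__(self, window: int = 20, z_threshold: float = 3.0, min_samples: int = 5):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def update(self, reading: float) -> bool:
        """Return True if the reading looks anomalous against recent history."""
        anomalous = False
        if len(self.history) >= self.min_samples:
            mu = mean(self.history)
            sigma = stdev(self.history)
            if sigma > 0 and abs(reading - mu) / sigma > self.z_threshold:
                anomalous = True
        # Keep anomalies out of the baseline so one spike does not mask the next.
        if not anomalous:
            self.history.append(reading)
        return anomalous


monitor = VibrationMonitor()
baseline = [1.0, 1.1, 0.9, 1.05, 0.95, 1.0, 1.02, 0.98]  # made-up readings
baseline_flags = [monitor.update(r) for r in baseline]
spike_flag = monitor.update(5.0)
recovery_flag = monitor.update(1.0)
print(baseline_flags, spike_flag, recovery_flag)
```

Everything here runs in constant memory on the device, and only the boolean alert needs to leave it. A real deployment would likely combine several signals (temperature and pressure as well as vibration) and a trained model rather than a fixed threshold.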

In retail, supermarkets deploy camera vision systems that monitor for empty shelves and notify staff instantly, analyze customer behavior to make personalized recommendations based on real-time in-store location, and run fraud detection at the point of sale, all while keeping customer data in-store for privacy compliance. Healthcare facilities use patient monitors that process vital signs locally and alert medical staff immediately when anomalies appear, without data leaving the room, which simplifies HIPAA compliance while enabling continuous monitoring.

These aren’t theoretical use cases. Edge AI delivers ROI when latency below 100ms is required, privacy regulations demand local processing, offline operation is needed, or bandwidth costs prohibit constant cloud communication. Knowing when edge makes sense versus cloud is critical for architects and infrastructure teams planning 2026 deployments.
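
The four criteria above can be written down as a simple placement rule. The sketch below is one possible encoding, not a standard API: the `Workload` fields, the `place` function, and the example workloads are hypothetical, and the 100 ms threshold is taken from the criterion stated above.

```python
from dataclasses import dataclass

EDGE_LATENCY_THRESHOLD_MS = 100.0  # "latency below 100ms" criterion from the text


@dataclass
class Workload:
    """Hypothetical description of a workload for placement decisions."""
    name: str
    latency_budget_ms: float
    data_must_stay_local: bool = False
    must_run_offline: bool = False
    bandwidth_cost_prohibitive: bool = False


def place(workload: Workload) -> tuple[str, list[str]]:
    """Return ("edge" or "cloud", reasons); any single criterion selects edge."""
    reasons = []
    if workload.latency_budget_ms < EDGE_LATENCY_THRESHOLD_MS:
        reasons.append("latency")
    if workload.data_must_stay_local:
        reasons.append("privacy")
    if workload.must_run_offline:
        reasons.append("offline")
    if workload.bandwidth_cost_prohibitive:
        reasons.append("bandwidth")
    return ("edge" if reasons else "cloud"), reasons


print(place(Workload("shelf-alert", latency_budget_ms=50.0)))
print(place(Workload("patient-vitals", latency_budget_ms=500.0, data_must_stay_local=True)))
print(place(Workload("cross-store-trends", latency_budget_ms=60_000.0)))
```

Returning the reasons alongside the decision keeps the placement auditable, which is useful when an architecture review asks why a given workload runs where it does. Workloads that meet none of the criteria default to the cloud in this sketch.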

Meanwhile, 5G infrastructure reality lags behind marketing promises. Empirical measurements in central Europe show 61-110 milliseconds of latency in production 5G environments—far from the 1-millisecond claims, and exceeding latency requirements for AI applications by approximately 270%. This makes edge processing critical even with 5G. However, 5G-Advanced and early 6G infrastructure targeting 100-microsecond latency are maturing through partnerships like Nvidia-Nokia’s AI-powered radio access network deployments.

Key Takeaways

- Small language models deliver 80-90% of LLM capabilities on specific tasks, and quantization breakthroughs (SmoothQuant W8A8, OmniQuant W4A4) that shrink models 4-8x make edge deployment practical

- Hybrid edge-cloud architectures replace cloud-first and edge-only approaches with intelligent workload distribution: simple/frequent decisions at edge, complex analysis in cloud

- Production deployment faces real challenges: 70% of Industry 4.0 pilots stall due to edge orchestration complexity, hardware diversity, security concerns, and regulatory compliance

- Self-healing infrastructure achieving 99.999% uptime signals production-ready edge AI, but requires budgeting 30-40% of effort for operational concerns from day one

- Real deployments in manufacturing (predictive maintenance), retail (inventory monitoring), and healthcare (patient monitoring) demonstrate measurable ROI when latency, privacy, or offline requirements justify edge deployment

The 2026 inflection point isn’t about hype cycles—it’s about convergence. Technical breakthroughs in SLMs and quantization, infrastructure maturity through 5G-Advanced rollout, and regulatory pressure from the EU AI Act are making edge AI pragmatic instead of aspirational. The shift from cloud-first to hybrid edge-cloud architectures is happening now, and developers who understand intelligent workload distribution will build the next generation of distributed AI systems.