Berkeley researchers released LEANN this week, an open-source RAG system claiming 97% storage savings—collapsing a 188 GB vector index into just 4 GB. However, the project rocketed to #6 on GitHub trending with 9,137 stars, capturing developer frustration with a harsh reality: enterprise RAG systems are hemorrhaging money. Consequently, one Fortune 500 CTO had to explain a $2.4M RAG investment delivering negative ROI. Meanwhile, industry research shows 72% of enterprise RAG implementations fail within their first year, with infrastructure costs as the primary killer.

Vector database bills ranging from $75K/month to millions annually are breaking enterprise AI budgets. Moreover, LEANN challenges the fundamental assumption that “you must store all embeddings,” offering a radical compute-for-storage trade-off that could reshape RAG architecture. Furthermore, AWS validated this trend in December 2025 by launching S3 Vectors with “storage-first architecture” claiming 90% cost reductions. This isn’t a research curiosity—it’s a response to an industry crisis.

Recomputing Embeddings Instead of Storing Them

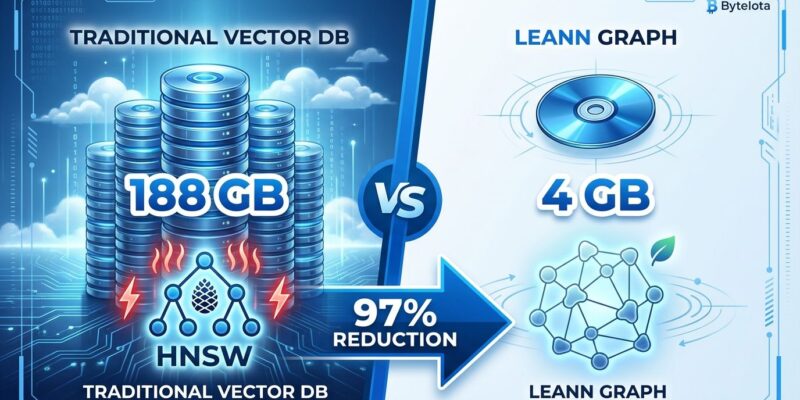

LEANN achieves 97% storage reduction by recomputing embeddings on-demand rather than storing them. Traditional vector databases like Pinecone and Weaviate store every 768-dimensional embedding vector, resulting in massive indexes. For instance, for a 76 GB dataset, HNSW (the standard approach) generates a 188 GB index. In contrast, LEANN stores only a compact proximity graph—just 4 GB—and recomputes embeddings during queries using the original encoder model.

The technical approach relies on two innovations. First, on-the-fly embedding recomputation eliminates the largest storage component (approximately 173 GB of embeddings). Second, high-degree preserving graph pruning keeps only the top 2% of “hub” nodes that matter for search accuracy. Notably, academic benchmarks from Berkeley SkyLab show LEANN maintains 90%+ recall and 2.48-second retrieval times on an RTX 4090 while achieving this dramatic compression.

This directly addresses the enterprise cost crisis. If you can cut storage by 97%, you eliminate one of the primary reasons RAG projects fail. For local or edge deployments, this makes RAG feasible on consumer hardware instead of requiring cloud vector databases costing thousands per month.

Why Vector Database Costs Are Breaking Enterprise Budgets

Vector database costs have become the “freight train hitting a brick wall” of enterprise AI. Pinecone charges $0.33/GB/month, translating to $3,500 monthly for 1 billion vectors. Similarly, Weaviate Cloud runs $2,200/month at billion-scale. However, real-world deployments report worse: a healthcare enterprise hit $75K/month by month six, while a Fortune 500 company burned $2.4M on a RAG implementation that delivered negative ROI.

The infrastructure economics are brutal. Traditional vector databases store full embeddings because retrieval speed demands it—sub-100ms queries require everything in memory or fast SSDs. Consequently, this creates runaway storage costs that scale linearly with corpus size. Add millions of documents, pay proportionally more. As a result, 72% of enterprise RAG implementations fail within their first year, with infrastructure costs identified as the primary killer.

AWS validated the “storage-first architecture” trend when S3 Vectors reached general availability in December 2025, claiming 90% cost reductions. In fact, in just four months, AWS reported 250,000+ vector indexes created, 40 billion vectors ingested, and 1 billion queries processed. Therefore, when the largest cloud provider launches a storage-optimized solution and Berkeley releases an open-source alternative claiming even better compression, the industry is signaling: the old model is too expensive.

The Compute-for-Storage Trade-Off: When 2 Seconds Beats 100 Milliseconds

LEANN trades search latency for storage efficiency. Traditional vector databases deliver sub-100ms queries but require massive storage. In contrast, LEANN takes approximately 2 seconds (on an RTX 4090) but uses 97% less storage. Nevertheless, the key insight: response generation dominates end-to-end latency in RAG pipelines. Specifically, LLM inference typically exceeds 20 seconds, so adding 2 seconds for search represents only 10% overhead.

Technical optimizations make this viable. For example, two-level search using Product Quantization for approximate filtering provides ~1.4× speedup by avoiding unnecessary recomputation. Additionally, dynamic batching aggregates embedding computations across graph traversal steps for ~1.8× speedup through better GPU utilization. The result: 2.48-second retrieval maintains 25.5% downstream QA accuracy—matching the HNSW baseline despite 97% compression.

This trade-off works for real-world RAG applications where storage is the bottleneck, not speed. For instance, local personal RAG systems, edge devices, IoT deployments, and low-QPS enterprise workloads can all accept 2-second search times if it means running on consumer hardware instead of paying $3,500/month for Pinecone. Therefore, the question isn’t “Is 2 seconds too slow?” but “Is storage your bigger problem than latency?”

When to Use LEANN (And When to Stick with Pinecone)

LEANN excels in scenarios where storage constraints outweigh latency requirements. Specifically, local-first deployments—semantic file system search replacing Spotlight, email archive search spanning years of Apple Mail, browser history recovery (“that Rust article I read 3 months ago”)—all accept 2-second latency. Additionally, privacy-critical applications benefit from 100% local execution with zero cloud dependency. Similarly, edge devices and IoT deployments gain RAG capabilities without massive storage overhead.

However, LEANN fails for real-time applications requiring sub-100ms responses. For example, user-facing chatbots, high-QPS workloads exceeding 100 queries per second, and production systems needing enterprise SLAs should stick with traditional vector databases. Moreover, the GPU requirement is non-negotiable—CPU-only environments see 10-60× slower recomputation, turning 2 seconds into minutes. Currently, Windows users must use WSL until native support arrives.

The decision tree is straightforward: If you’re building a chatbot with thousands of concurrent users, Pinecone remains the better choice. Conversely, if you’re indexing local documents for personal RAG, LEANN just saved you $2,000 yearly. The code example from the LEANN repository demonstrates its simplicity:

# Install LEANN

uv pip install leann

# Index your documents

leann index --path ~/Documents --output ~/leann-index

# Semantic search (not keyword-based)

leann search "contract renewals from Q4" --index ~/leann-indexIs This the End of Expensive Vector Databases?

LEANN signals a broader industry split: the vector database market is bifurcating into “hot data” (real-time, high-QPS, managed services like Pinecone) and “cold data” (infrequent queries, local/edge deployment, open-source alternatives like LEANN). Indeed, AWS S3 Vectors’ December 2025 launch with “storage-first architecture” validates this division. Furthermore, analysts predict vectors will transition from “a specific database type” to “a data type in multimodel databases,” suggesting traditional vector DB vendors must adapt or lose market share to cheaper alternatives.

GitHub momentum tells the story: 9,137 stars in seven months, #6 trending ranking as of January 19, 2026. This isn’t isolated enthusiasm—it’s market response to crisis. Consequently, companies are exploring graph-based architectures claiming 95% infrastructure cost reductions. Additionally, migration strategies now emphasize storing source-of-truth embeddings in cold storage (S3/GCS) before indexing in vector databases, precisely the approach LEANN embodies.

LEANN won’t replace Pinecone for every use case. In fact, real-time search engines and high-traffic APIs still need sub-100ms queries. However, when Berkeley researchers and AWS both release storage-optimized solutions within weeks of each other, and enterprise failure rates hit 72%, the industry is rethinking RAG architecture. Ultimately, LEANN proves there’s a better way for storage-constrained deployments—and that matters for a significant segment of RAG applications.

The Bottom Line

- 97% storage reduction is real: LEANN’s compute-for-storage trade-off works (188 GB → 4 GB), validated by academic benchmarks showing 90%+ recall maintained

- Vector DB costs are unsustainable: $3,500/month+ pricing drives 72% enterprise RAG failure rates, creating demand for alternatives

- Storage-first architecture is trending: AWS S3 Vectors (90% savings) and LEANN (97% savings) signal industry shift away from expensive storage models

- Choose based on constraints: LEANN excels for local/edge deployments with latency tolerance; Pinecone wins for real-time applications

- Open-source alternatives are viable: LEANN demonstrates that expensive vector databases aren’t the only path to production RAG systems

Storage-optimized RAG represents the future for cost-sensitive deployments. Notably, the 2-second latency trade-off makes economic sense when the alternative is $75K/month infrastructure bills or failed projects. LEANN isn’t perfect—it requires GPU infrastructure and doesn’t suit all use cases—but it proves the “store everything” model isn’t inevitable. Therefore, for developers facing vector database sticker shock, that’s a game-changing realization.