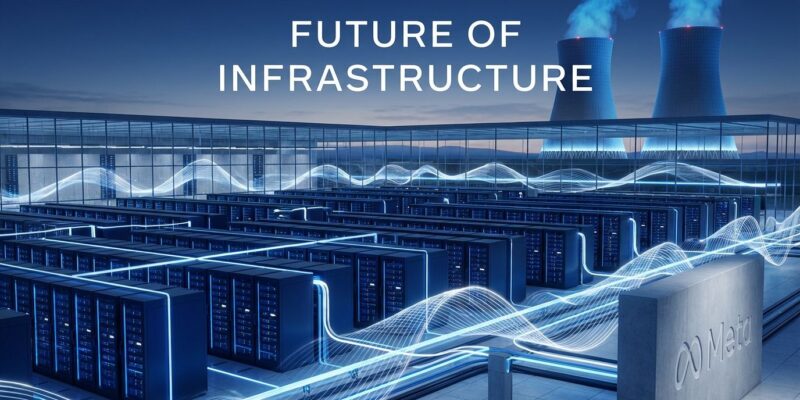

Meta just declared war in the AI infrastructure race. On January 12, CEO Mark Zuckerberg announced Meta Compute, a new organization to build “tens of gigawatts this decade, and hundreds of gigawatts or more over time.” To put that in perspective, Meta is targeting enough power to run millions of homes, all for training AI models. The company has already committed $600 billion by 2028 and signed the largest corporate nuclear deal in history (6.6 gigawatts by 2035). Zuckerberg is betting that infrastructure, not model architecture, decides who wins the superintelligence race.

Infrastructure as the New Moat

“How we engineer, invest, and partner to build this infrastructure will become a strategic advantage,” Zuckerberg wrote. That’s not corporate speak—it’s a strategic pivot. Meta is signaling that owning compute capacity matters more than renting it from AWS, Google Cloud, or Azure.

Meta is not alone in spending at this scale. OpenAI has committed more than $1 trillion over 10 years. Microsoft is spending $7 billion on a Wisconsin AI data center scheduled to come online in early 2026. Anthropic has pledged $50 billion for U.S. infrastructure. The pattern is clear: companies building their own infrastructure are betting they’ll control access to cutting-edge AI.

For developers, this creates a new reality. GPU scarcity isn’t going away—H100 rental prices jumped 10% in just four weeks in early 2026. Lambda Labs reports persistent capacity shortages. As hyperscalers race to lock down compute for themselves, third-party access becomes more constrained.

The Superintelligence Signal: Daniel Gross Joins Meta

Daniel Gross, CEO and co-founder of Safe Superintelligence (SSI), left the company in June 2025 to join Meta’s superintelligence lab. Gross co-founded SSI with Ilya Sutskever, OpenAI’s former chief scientist. The company’s mission was building safe AGI outside Big Tech’s pressures.

Meta tried to acquire SSI outright. When Sutskever declined, Zuckerberg recruited Gross anyway. Gross now leads Meta Compute’s long-term capacity strategy—essentially planning Meta’s path to superintelligence-scale infrastructure.

When the CEO of an AI safety startup focused on superintelligence joins your infrastructure team, you’re signaling serious AGI ambitions. The talent consolidation trend is unmistakable: Big Tech’s resources are winning over independent AI research.

The Energy Reality: Nuclear at Scale

Meta’s nuclear commitments reveal the true cost of the AI race. In January 2026, the company signed deals with Vistra, TerraPower, and Oklo for 6.6 gigawatts by 2035, the largest corporate nuclear commitment in history. One gigawatt powers roughly 750,000 homes, so 6.6 gigawatts is enough for about 5 million homes by 2035. The long-term goal of “hundreds of gigawatts” is well over an order of magnitude beyond that.
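The homes comparison is simple multiplication, and it is worth seeing how sensitive it is to the ratio used. Here is a minimal sketch in Python, assuming the article's rough figure of 750,000 homes per gigawatt (real household consumption varies by region and season). The function name `homes_powered` is made up for illustration.

```python
# Figures taken from the article; the homes-per-gigawatt ratio is a rough average.
HOMES_PER_GW = 750_000
NUCLEAR_GW_BY_2035 = 6.6


def homes_powered(gigawatts: float, homes_per_gw: int = HOMES_PER_GW) -> int:
    """Return the approximate number of homes a given capacity could supply."""
    return round(gigawatts * homes_per_gw)


if __name__ == "__main__":
    # The 6.6 GW nuclear commitment, then the low end of "tens of gigawatts".
    print(f"6.6 GW: about {homes_powered(NUCLEAR_GW_BY_2035):,} homes")
    print(f"10 GW:  about {homes_powered(10):,} homes")
```

At the stated ratio, 6.6 gigawatts comes to 4.95 million homes, which is where the "5 million" figure comes from.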

By late 2027, AI data centers in the U.S. are projected to need 20-30 gigawatts combined, according to MIT Technology Review. The U.S. power grid, built decades ago, wasn’t designed for this scale. More than 36 projects representing $162 billion in investment have been blocked or delayed due to power constraints.

Nuclear offers clean, reliable baseload power—something solar and wind can’t match for 24/7 AI training. But nuclear is slow to build. In the short term, fossil fuels are filling the gap.

What This Means for Developers

Short-term (2026-2027): Expect continued GPU scarcity and volatile pricing. H100 rental rates range from $1.45 to $3.29 per GPU-hour, but availability matters more than price when you can’t get an allocation. Smaller startups face the hardest challenge.

Medium-term (2028-2030): If infrastructure buildouts succeed, supply might catch up to demand. But companies building infrastructure will prioritize their own workloads. Meta’s internal AI teams get first access to Meta Compute. Infrastructure ownership creates moats.

Long-term (2030+): The AI industry consolidates around infrastructure owners. If you don’t own gigawatt-scale compute, you’re renting from someone who does. That changes power dynamics—and pricing leverage.
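To make the short-term price spread concrete, here is a rough Python sketch that turns the quoted H100 range ($1.45 to $3.29 per hour) into a cost range for a job. The job size (8 GPUs for one week) and the function name `job_cost_range` are hypothetical, chosen only for illustration. Real bills also include storage, networking, and idle time.

```python
# Hourly H100 rental range quoted in the article (USD per GPU-hour).
H100_LOW_USD_PER_HOUR = 1.45
H100_HIGH_USD_PER_HOUR = 3.29


def job_cost_range(gpus: int, hours: float) -> tuple[float, float]:
    """Return (low, high) rental cost in USD for a job of the given size."""
    gpu_hours = gpus * hours
    low = round(gpu_hours * H100_LOW_USD_PER_HOUR, 2)
    high = round(gpu_hours * H100_HIGH_USD_PER_HOUR, 2)
    return low, high


if __name__ == "__main__":
    # Hypothetical job: 8 GPUs running for one week (168 hours).
    low, high = job_cost_range(gpus=8, hours=24 * 7)
    print(f"Estimated cost: ${low:,.2f} to ${high:,.2f}")
```

For this hypothetical job, the same 1,344 GPU-hours cost roughly $1,949 at the low end and $4,422 at the high end. That is more than a 2x spread before availability is even considered.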

The Bottom Line

Meta Compute is a long-term strategic bet that infrastructure determines AI leadership. Zuckerberg is putting $600 billion by 2028 behind the idea that owning compute capacity matters more than model improvements. The timeline is 5-10 years for full scale, but the implications are immediate: GPU scarcity continues, cloud pricing remains volatile, and infrastructure access becomes gated by hyperscalers.

For developers, the takeaway is stark. The companies building gigawatt-scale infrastructure today will control access to cutting-edge AI tomorrow. That might be good for Meta’s strategic position. It’s less clear whether it’s good for innovation.