Your Kubernetes clusters are burning money, and you probably don’t even know it. A study analyzing 3,042 production clusters across 600+ companies exposed a hidden crisis: 68% of pods request 3-8x more memory than they actually use. The worst case? One company hemorrhaging $2.1 million annually on unused resources.

This isn’t a few misconfigured deployments. This is systematic waste driven by fear, outdated tutorials, and a culture that defaults to “better safe than sorry” at 3-8x the necessary cost.

The Scale of the Problem

The January 2026 study from Wozz tracked 847,293 pods across production environments and found the average company wastes $847 per month on memory over-provisioning alone. Real-world examples showed companies cutting costs from $47,200 to $11,100 monthly—a 76% reduction—simply by right-sizing memory requests.

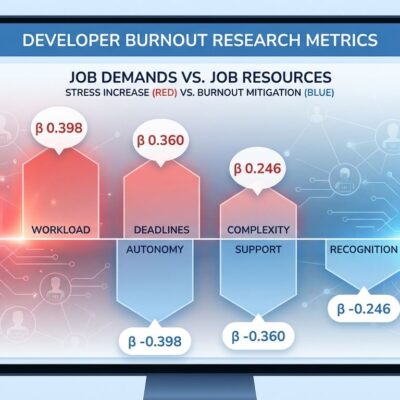

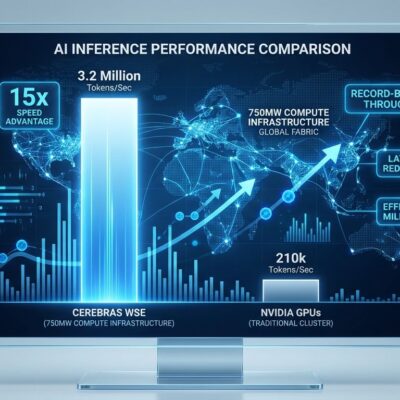

The waste spans every application type:

| Application Type | Avg Requested | Actual Used (P95) | Waste Rate |
|---|---|---|---|
| Node.js | 1.8 GiB | 587 MiB | 68% |
| Python/FastAPI | 2.1 GiB | 612 MiB | 72% |
| Java/Spring Boot | 3.2 GiB | 1.1 GiB | 66% |
| AI/ML Inference | 16.4 GiB | 2.7 GiB | 84% |

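AI and machine learning workloads are the worst offenders, requesting roughly 6x the memory they use at P95. Because the scheduler reserves node capacity based on requests, you effectively pay for requested memory, not used memory. Nothing on the bill shows the gap between the two, so there is little incentive to optimize and no feedback loop for waste.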
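
The waste rates in the table can be reproduced with simple arithmetic. The sketch below assumes waste rate means one minus the ratio of P95 usage to requested memory, which matches the published percentages; the function and variable names are illustrative and do not come from the study.

```python
# Figures copied from the table above, converted to MiB (1 GiB = 1024 MiB).
WORKLOADS = {
    "Node.js": (1.8 * 1024, 587),
    "Python/FastAPI": (2.1 * 1024, 612),
    "Java/Spring Boot": (3.2 * 1024, 1.1 * 1024),
    "AI/ML Inference": (16.4 * 1024, 2.7 * 1024),
}


def waste_rate(requested_mib: float, used_p95_mib: float) -> float:
    """Fraction of requested memory that sits above P95 usage (assumed definition)."""
    return 1 - used_p95_mib / requested_mib


for name, (requested, used) in WORKLOADS.items():
    print(
        f"{name}: {waste_rate(requested, used):.0%} wasted, "
        f"{requested / used:.1f}x requested vs. used"
    )
```

Running the same calculation on your own requested and P95 figures gives a directly comparable number.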

Why This Happens

Three factors drive the over-provisioning crisis, and they’re all rooted in human behavior, not technical limitations.

Tutorial cargo-culting. The study traced 73% of `memory: "2Gi"` configurations back to outdated documentation. Developers copy-paste resource specs from tutorials written years ago without customizing them for actual workload needs. That generic “2Gi” might have been arbitrary to begin with.

Trauma provisioning. After experiencing a single OOMKilled incident, 64% of teams admitted adding 2-4x headroom “just to be safe.” One failure creates lasting fear. Teams lack confidence in right-sizing, so they default to massive safety buffers that cost 3-8x more than necessary.

Visibility gap. Only 12% of teams can identify their actual P95 memory usage. Without monitoring, teams are flying blind. They guess at resource needs, err on the side of caution, and never revisit those decisions. The waste compounds over time as more workloads inherit the same inflated specs.

The Fix: Data Over Fear

The solution isn’t complex—it’s a formula and a principle.

The formula: memory_request = P95_actual_usage × 1.2

Monitor your actual memory consumption for 30 days to establish the 95th percentile usage. Multiply by 1.2 to add a 20% safety buffer. That’s it. Not 3x. Not 8x. Just 1.2x.
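
As a minimal sketch of the formula, the snippet below computes a nearest-rank P95 from a list of memory samples and applies the 1.2 multiplier. The sample data is made up, the helper names are hypothetical, and rounding up to a whole MiB is an assumption added here; in practice the samples would come from whatever monitoring system you already run.

```python
import math


def p95(samples_mib: list[float]) -> float:
    """Nearest-rank 95th percentile of memory samples, in MiB."""
    if not samples_mib:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_mib)
    # Integer ceiling of 0.95 * n, giving a 1-based rank.
    rank = (95 * len(ordered) + 99) // 100
    return ordered[rank - 1]


def recommended_request_mib(samples_mib: list[float], buffer: float = 1.2) -> int:
    """memory_request = P95_actual_usage x buffer, rounded up to a whole MiB."""
    # round() guards against float noise before taking the ceiling.
    return math.ceil(round(p95(samples_mib) * buffer, 6))


# Hypothetical samples: mostly around 600 MiB with occasional spikes.
samples = [600.0] * 90 + [900.0] * 6 + [1024.0] * 4
print(f"P95: {p95(samples)} MiB")
print(f"Recommended request: {recommended_request_mib(samples)}Mi")
```

Note that P95 deliberately ignores the top 5% of samples, so the rare spikes to 1024 MiB in this made-up data are meant to be absorbed by the 20% buffer rather than by a larger request.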

The principle: Set memory limit equal to memory request. Tim Hockin, a Kubernetes maintainer at Google, is blunt: “Always set memory limit == request.” Memory is non-compressible—once allocated to a pod, it can only be reclaimed by terminating the container. Setting limit equal to request prevents cascading failures and unpredictable resource contention.
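
If manifests are generated by a script rather than written by hand, the limit == request rule can be built into the generator so the two values cannot drift apart. This is a hypothetical helper, not part of any Kubernetes client library; it only builds the dictionary that would be serialized into a container's `resources` field.

```python
def memory_resources(request_mib: int) -> dict:
    """Build a container `resources` mapping with memory limit == request."""
    if request_mib <= 0:
        raise ValueError("request_mib must be positive")
    quantity = f"{request_mib}Mi"
    return {
        "requests": {"memory": quantity},
        "limits": {"memory": quantity},  # same string, so the values always match
    }


print(memory_resources(1229))
```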

```yaml
# ❌ DON'T: Fear-based over-provisioning
resources:
  requests:
    memory: "8Gi"   # Actual P95: 1Gi
  limits:
    memory: "16Gi"
---
# ✅ DO: Data-driven right-sizing
resources:
  requests:
    memory: "1.2Gi" # P95 (1Gi) × 1.2
  limits:
    memory: "1.2Gi" # Equal to request
```

Automation Replaces Manual Guesswork

Manual right-sizing is already obsolete. The 2026 tooling landscape offers continuous, automated optimization.

ScaleOps performs real-time right-sizing without pod restarts, leveraging Kubernetes’ native In-Place Pod Resizing feature to adjust resources based on live workload behavior. Kubecost, built by former Google engineers, provides real-time cost attribution and has expanded network cost allocation in 2026 to track egress and inter-AZ data transfer. CAST AI goes beyond recommendations—it monitors and adjusts clusters automatically using AI, claiming up to 70% cost reductions.

These tools integrate with GitOps workflows, maintaining Git as the source of truth while generating pull requests for optimization changes. No more periodic reviews. No more drift. Continuous optimization becomes the default.

The 2026 Context: FinOps Mandate

Memory over-provisioning isn’t happening in isolation. It’s colliding with the 2026 mandate for FinOps integration and platform engineering discipline.

The industry is shifting to “surface costs at the time of decision”—embedding cost awareness during development, not after deployment. Platform engineering teams are standardizing golden paths with pre-optimized resource templates, and policy engines are enforcing right-sizing at deployment time. AI workloads are changing traditional metrics, making GPU utilization as critical as CPU and memory.

2026 is the year teams stop tolerating waste. The “just to be safe” culture is being replaced by data-driven efficiency, and the tools to make it painless already exist.

Measure Your Waste This Week

Here’s your immediate action: start measuring P95 memory usage this week. Pick your highest-cost workload and monitor it for 30 days. Then calculate P95 × 1.2 and compare the result to your current request. Odds are high you’re over-provisioned by 3x or more.
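
The comparison step can be sketched as follows, using the 8Gi request and 1Gi P95 from the earlier example as inputs. The function name and return shape are illustrative assumptions, and the result is only as good as the P95 measurement behind it.

```python
import math


def compare_request(current_request_mib: float, p95_mib: float, buffer: float = 1.2) -> dict:
    """Compare a current memory request against P95 x buffer."""
    # round() guards against float noise before taking the ceiling.
    recommended = math.ceil(round(p95_mib * buffer, 6))
    return {
        "recommended_mib": recommended,
        "overprovision_factor": round(current_request_mib / recommended, 2),
        "reclaimable_mib": max(0, math.floor(current_request_mib) - recommended),
    }


# 8Gi requested, 1Gi measured at P95 (values from the example in this article).
print(compare_request(current_request_mib=8 * 1024, p95_mib=1024))
```

A factor near 1.0 means the workload is already right-sized; anything well above that is a candidate for a smaller request.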

The Wozz study found that 68% of pods request far more memory than they’ll ever use. Don’t be part of that statistic. Data beats fear. Measure, optimize, repeat.