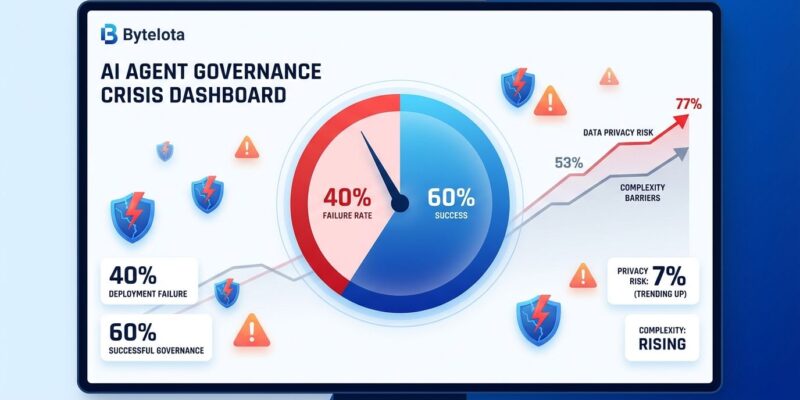

While $300 billion flows into AI agent deployments in 2026, a governance crisis is unfolding beneath the surface. Gartner predicts that 40% of agentic AI projects will be canceled by 2027, not because the technology doesn’t work, but because organizations lack the governance frameworks to deploy it safely. The numbers tell a stark story: 96% of leaders recognize that AI agents pose heightened security risks, yet fewer than half have implemented agent-specific governance policies. Companies are deploying agents faster than they can secure them.

The Deployment Crisis

The failure statistics are worse than most enterprises realize. According to an MIT study, only 5% of custom enterprise AI tools reach production. That’s not a typo: 95% fail before launch. Meanwhile, 42% of companies now abandon AI initiatives before production, up from 17% a year earlier. Overall, 80% of AI projects never reach production, nearly double the failure rate of typical IT projects.

Three specific barriers are killing projects. Integration complexity affects 46% of enterprises; the hardest part isn’t agent intelligence but secure access to production systems. Data privacy concerns jumped from 53% to 77% in a single quarter as agent-to-agent workflows expand the attack surface. Data quality concerns rose from 37% to 65% over the same period. These aren’t gradual trends. They’re rapid escalations that show organizations are overwhelmed.

The root cause isn’t technical incompetence. It’s governance. When organizations rush to implement AI agents without mature frameworks, they expose themselves to operational and existential risks. Deloitte’s Tech Trends 2026 report found that 65% of leaders have cited agentic system complexity as their top barrier for two consecutive quarters. The problem is structural, not temporary.

The Pilot Illusion

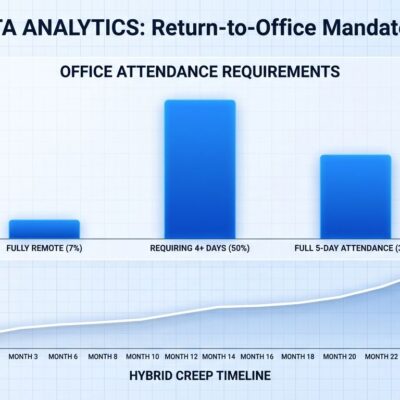

AI agents that work perfectly in controlled pilots collapse in production. The gap between demos and reality is brutal. Pilots test 50-500 controlled queries with predictable patterns. Production handles 10,000-100,000+ daily requests amid edge cases, concurrency, and real business stakes. A three-agent workflow that costs $5-50 to run in demos can generate monthly bills of $18,000-$90,000 at scale. Response times jump from 1-3 seconds to 10-40 seconds. Tool calling, the mechanism agents use to interact with external systems, fails 3-15% of the time in production.
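The scaling figures above follow from simple arithmetic. The sketch below is a back-of-the-envelope model, not data from the cited studies: the per-request costs ($0.06 and $0.03) and the ten-call workflow are assumptions chosen for illustration. It computes monthly cost as daily requests × cost per request × 30, and the share of workflows that finish without any tool-call failure as (1 - p)^n, assuming each of n calls fails independently with probability p.

```python
"""Back-of-the-envelope model of pilot-to-production scaling.

All inputs are illustrative assumptions, not measured values.
"""

DAYS_PER_MONTH = 30


def monthly_cost(daily_requests: int, cost_per_request: float) -> float:
    """Projected monthly spend for a workflow billed per request."""
    return daily_requests * cost_per_request * DAYS_PER_MONTH


def workflow_success_rate(tool_calls: int, failure_rate: float) -> float:
    """Probability that every tool call in one workflow run succeeds.

    Assumes failures are independent, so per-call success rates multiply.
    """
    return (1.0 - failure_rate) ** tool_calls


if __name__ == "__main__":
    # Hypothetical per-request costs for a three-agent workflow.
    low = monthly_cost(daily_requests=10_000, cost_per_request=0.06)
    high = monthly_cost(daily_requests=100_000, cost_per_request=0.03)
    print(f"monthly cost: ${low:,.0f} to ${high:,.0f}")

    # A hypothetical workflow that makes 10 tool calls per run.
    for p in (0.03, 0.15):
        rate = workflow_success_rate(tool_calls=10, failure_rate=p)
        print(f"{p:.0%} per-call failure -> {rate:.1%} of runs finish cleanly")
```

Under these assumptions, a 3% per-call failure rate lets about 74% of ten-call runs finish cleanly, and a 15% rate drops that to about 20%. Real failures are rarely fully independent, so treat the numbers as a rough guide to how per-call reliability that looks acceptable in a pilot compounds in production.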

The failure cases are high-profile. MD Anderson Cancer Center shelved its $62 million IBM Watson oncology project, which never reached clinical use. McDonald’s scrapped its AI drive-thru program after viral customer complaints. The ChatDev multi-agent framework achieves only 33% correctness on basic programming tasks despite dedicated verifier agents. These aren’t edge cases. They’re predictable outcomes when governance lags deployment.

Cost explosion alone kills projects. Organizations discover too late that multi-agent systems multiply costs through token consumption and API calls, with every agent handoff adding more of both. Production edge cases trigger failure modes never seen in pilots. Data quality problems that barely register in testing amplify catastrophically at scale. The pattern repeats: optimism in pilots, chaos in production.

The Bounded Autonomy Solution

Leading organizations aren’t giving up on AI agents. They’re implementing bounded autonomy, a framework in which systems operate independently within well-defined constraints. Agents keep freedom of action inside predetermined safe envelopes, while humans retain control over where those boundaries sit.

The architecture has four components. Clear operational limits define what agents can and cannot do. Escalation paths route high-stakes decisions to humans based on confidence thresholds. Comprehensive audit trails log every agent decision immutably. Programmatic guardrails enforce boundaries through technical controls—validation schemas for outputs, approval workflows for risky actions, and automatic circuit breakers when anomalous behavior is detected.
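A minimal Python sketch of how three of these components could combine: operational limits, confidence-based escalation, and programmatic guardrails (a basic input check standing in for a validation schema, plus a circuit breaker). `BoundedPolicy`, `ProposedAction`, the operation names, and all thresholds are hypothetical. The audit-trail component is left out for brevity, and treating the agent’s self-reported confidence as trustworthy is itself an assumption that would need calibration in practice.

```python
"""Minimal sketch of a bounded-autonomy guardrail.

All names and thresholds are hypothetical, for illustration only.
"""
from dataclasses import dataclass, field
from enum import Enum


class Decision(Enum):
    EXECUTE = "execute"    # inside the safe envelope: act autonomously
    ESCALATE = "escalate"  # route to a human reviewer
    REJECT = "reject"      # outside limits, invalid, or breaker open


@dataclass
class ProposedAction:
    operation: str
    amount: float
    confidence: float  # agent's self-reported confidence, 0.0 to 1.0


@dataclass
class BoundedPolicy:
    allowed_operations: set[str]   # operational limits
    max_amount: float              # above this, a human decides
    min_confidence: float = 0.8    # below this, a human decides
    breaker_threshold: int = 3     # consecutive anomalies before tripping
    _anomalies: int = field(default=0, init=False)

    @property
    def breaker_open(self) -> bool:
        return self._anomalies >= self.breaker_threshold

    def record_outcome(self, anomalous: bool) -> None:
        """Count consecutive anomalous outcomes; a normal outcome resets the count."""
        if self.breaker_open:
            return  # latched: only an explicit reset() closes the breaker
        self._anomalies = self._anomalies + 1 if anomalous else 0

    def reset(self) -> None:
        """Human-initiated reset after the anomalies have been investigated."""
        self._anomalies = 0

    def evaluate(self, action: ProposedAction) -> Decision:
        if self.breaker_open:
            return Decision.REJECT  # circuit breaker halts autonomous actions
        if action.operation not in self.allowed_operations:
            return Decision.REJECT  # hard operational limit
        if not 0.0 <= action.confidence <= 1.0 or action.amount < 0:
            return Decision.REJECT  # stand-in for output schema validation
        if action.amount > self.max_amount or action.confidence < self.min_confidence:
            return Decision.ESCALATE  # high stakes or low confidence
        return Decision.EXECUTE


if __name__ == "__main__":
    policy = BoundedPolicy(allowed_operations={"refund"}, max_amount=500.0)
    print(policy.evaluate(ProposedAction("refund", 120.0, 0.93)))
    print(policy.evaluate(ProposedAction("refund", 900.0, 0.93)))
    print(policy.evaluate(ProposedAction("close_account", 0.0, 0.99)))
```

The breaker latches on purpose: once it trips, normal-looking outcomes do not reopen autonomous execution, so a person has to look at what went wrong before calling `reset()`.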

This isn’t theory. The dominant 2026 model has AI proposing actions and humans approving them. The pattern is especially critical in finance, healthcare, and the legal sector, where autonomous errors carry regulatory and liability consequences. Bounded autonomy solves the AI agent governance problem without sacrificing agent capabilities.
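The propose-then-approve pattern can be sketched as a small state machine. In this hypothetical example (the names are illustrative, not taken from any product), an agent creates a `Proposal`, a reviewer other than the proposing agent approves or rejects it, and the action runs only after approval. Verifying that the reviewer is actually a human is assumed to be the identity layer’s job and is not shown.

```python
"""Sketch of a propose-then-approve workflow. All names are hypothetical."""
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"
    EXECUTED = "executed"


@dataclass
class Proposal:
    proposal_id: str
    proposed_by: str                  # identity of the proposing agent
    description: str
    status: Status = Status.PENDING
    reviewed_by: Optional[str] = None

    def review(self, reviewer: str, approve: bool) -> None:
        """Record a reviewer's decision; the proposer cannot review itself."""
        if self.status is not Status.PENDING:
            raise ValueError(f"{self.proposal_id} is already {self.status.value}")
        if reviewer == self.proposed_by:
            raise PermissionError("an agent cannot review its own proposal")
        self.reviewed_by = reviewer
        self.status = Status.APPROVED if approve else Status.REJECTED

    def execute(self, action: Callable[[], None]) -> None:
        """Run the action only if a reviewer approved it, and only once."""
        if self.status is not Status.APPROVED:
            raise PermissionError(f"{self.proposal_id} is {self.status.value}, not approved")
        action()
        self.status = Status.EXECUTED


if __name__ == "__main__":
    p = Proposal("p-1", proposed_by="agent:billing", description="Refund order 42")
    p.review(reviewer="human:alice", approve=True)
    p.execute(lambda: print("refund issued"))
    print(p.status, p.reviewed_by)
```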

Platform Standards Converge

The industry is converging on shared governance standards around three pillars: identity, permissions, and observability. Every AI agent needs a unique digital identity with ownership details, version history, and lifecycle status. Microsoft’s Entra Agent Identity distinguishes production, development, and test agents while tracking who owns what.
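One way to model such an identity record is sketched below. This is not Microsoft Entra’s schema or API; `AgentIdentity`, `AgentRegistry`, and the lifecycle states are hypothetical. The registry keeps each agent’s owner, version history, environment, and lifecycle status, and lets only active, production-tagged agents reach production systems.

```python
"""Hypothetical agent identity record and registry (not Entra's API)."""
from dataclasses import dataclass, field
from enum import Enum


class Environment(Enum):
    PRODUCTION = "production"
    DEVELOPMENT = "development"
    TEST = "test"


class Lifecycle(Enum):
    ACTIVE = "active"
    SUSPENDED = "suspended"
    RETIRED = "retired"


@dataclass(frozen=True)
class AgentIdentity:
    agent_id: str
    owner: str                 # accountable person or team
    version: str
    environment: Environment
    lifecycle: Lifecycle = Lifecycle.ACTIVE


@dataclass
class AgentRegistry:
    _current: dict[str, AgentIdentity] = field(default_factory=dict)
    _history: dict[str, list[str]] = field(default_factory=dict)

    def register(self, identity: AgentIdentity) -> None:
        """Store the latest record and append any new version to the history."""
        self._current[identity.agent_id] = identity
        history = self._history.setdefault(identity.agent_id, [])
        if not history or history[-1] != identity.version:
            history.append(identity.version)

    def versions(self, agent_id: str) -> list[str]:
        return list(self._history.get(agent_id, []))

    def may_touch_production(self, agent_id: str) -> bool:
        """Only known, active, production-tagged agents reach production systems."""
        identity = self._current.get(agent_id)
        return (
            identity is not None
            and identity.lifecycle is Lifecycle.ACTIVE
            and identity.environment is Environment.PRODUCTION
        )


if __name__ == "__main__":
    registry = AgentRegistry()
    registry.register(AgentIdentity("invoice-bot", "finance-ops", "1.0.0", Environment.TEST))
    print(registry.may_touch_production("invoice-bot"))
    registry.register(AgentIdentity("invoice-bot", "finance-ops", "1.1.0", Environment.PRODUCTION))
    print(registry.may_touch_production("invoice-bot"))
    print(registry.versions("invoice-bot"))
```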

Permission systems are shifting to just-in-time access that grants privileges only for as long as a task requires. Task-based authorization limits agents to specific operations. Context-aware policies adjust permissions based on data sensitivity. Role-based access control (RBAC) sets the baseline, and comprehensive audit logs record every access decision. The goal is to close the authorization bypass paths that turn agents into privilege-escalation vectors.
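The three permission ideas can be combined in one check, sketched here with hypothetical names (`Grant`, `Authorizer`, `Sensitivity`) and a four-level sensitivity scale chosen for illustration. Each grant covers a single operation on a single resource, carries a sensitivity ceiling, and expires after a time-to-live; every decision, allowed or denied, is appended to an audit log.

```python
"""Sketch of just-in-time, task-scoped, sensitivity-aware authorization.

Grant, Authorizer, and the Sensitivity levels are hypothetical names.
"""
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from enum import IntEnum
from typing import Optional


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


@dataclass(frozen=True)
class Grant:
    agent_id: str
    operation: str                # task-based: one operation, not a broad role
    resource: str
    max_sensitivity: Sensitivity  # context-aware: ceiling on data sensitivity
    expires_at: datetime          # just-in-time: the privilege lapses on its own


@dataclass
class Authorizer:
    grants: list[Grant] = field(default_factory=list)
    audit_log: list[tuple] = field(default_factory=list)

    def grant(self, agent_id: str, operation: str, resource: str,
              max_sensitivity: Sensitivity, ttl: timedelta) -> Grant:
        g = Grant(agent_id, operation, resource, max_sensitivity,
                  datetime.now(timezone.utc) + ttl)
        self.grants.append(g)
        return g

    def check(self, agent_id: str, operation: str, resource: str,
              sensitivity: Sensitivity, now: Optional[datetime] = None) -> bool:
        if now is None:
            now = datetime.now(timezone.utc)
        allowed = any(
            g.agent_id == agent_id
            and g.operation == operation
            and g.resource == resource
            and sensitivity <= g.max_sensitivity
            and now < g.expires_at
            for g in self.grants
        )
        # Record every decision, allowed or denied, for later review.
        self.audit_log.append((now.isoformat(), agent_id, operation, resource, allowed))
        return allowed


if __name__ == "__main__":
    auth = Authorizer()
    auth.grant("report-bot", "read", "sales_db", Sensitivity.INTERNAL, timedelta(minutes=15))
    print(auth.check("report-bot", "read", "sales_db", Sensitivity.INTERNAL))
    print(auth.check("report-bot", "write", "sales_db", Sensitivity.INTERNAL))
    print(auth.check("report-bot", "read", "sales_db", Sensitivity.RESTRICTED))
```

Denial is the default here: with no matching, unexpired grant, `any()` returns `False`, so an agent gains nothing it was not explicitly and recently given.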

Observability is non-negotiable. Real-time monitoring shows what agents do as they do it. Centralized logging aggregates decisions, tool calls, and data access across agent fleets. Audit trails must attribute actions to human requesters, not just agents—critical for accountability when things go wrong. IBM’s watsonx.governance and Microsoft Azure AI Foundry both implement these patterns, showing platform convergence is real.
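Attribution and tamper evidence can be sketched with a hash-chained log. In this hypothetical `AuditTrail`, each entry records both the agent and the human requester and stores the SHA-256 hash of the previous entry, so editing an earlier record breaks verification. A hash chain makes tampering detectable rather than impossible; truly immutable storage (for example, write-once object storage) is outside this sketch.

```python
"""Sketch of a tamper-evident, hash-chained audit trail with human attribution.

AuditTrail and its field names are hypothetical, for illustration only.
"""
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class AuditTrail:
    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(entry: dict) -> str:
        """SHA-256 over a canonical JSON encoding of every field except the hash."""
        body = {k: v for k, v in entry.items() if k != "hash"}
        canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def append(self, agent_id: str, requested_by: str, action: str, detail: dict) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "agent_id": agent_id,
            "requested_by": requested_by,  # the accountable human, not only the agent
            "action": action,
            "detail": detail,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Editing any entry, or removing one from the middle, breaks the chain.

        Silently dropping entries from the end is not detected unless the
        latest hash is also anchored somewhere outside this log.
        """
        prev = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True


if __name__ == "__main__":
    trail = AuditTrail()
    trail.append("invoice-bot", "alice@example.com", "read", {"table": "invoices"})
    trail.append("invoice-bot", "alice@example.com", "refund", {"amount": 120})
    print(trail.verify())
    trail.entries[0]["detail"]["table"] = "payroll"  # simulate tampering
    print(trail.verify())
```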

What Developers Need to Know

Governance isn’t a compliance checkbox. It’s what separates pilots from production. The data is clear: purchasing AI agent tools from specialized vendors succeeds about 67% of the time, while internal builds succeed only about 33% of the time. Governance is complex enough that building from scratch doubles your failure rate.

Focus on the three pillars: identity, permissions, observability. Implement bounded autonomy from day one—clear limits, escalation paths, audit trails. Don’t assume pilot performance predicts production behavior. Budget for 10-20x cost scaling and design your architecture accordingly. Test governance controls as rigorously as you test functionality.

KPMG’s AI at Scale report puts it bluntly: 2026 represents an inflection point where governance, security, and integration capabilities—not model performance—will determine which organizations successfully scale AI agents in production. The organizations that solve governance first create competitive advantage. The rest join the 40% failure statistic.