Replit launched mobile app creation from natural language prompts this week, with no coding required. The $9B AI coding startup now lets anyone describe an iOS app idea and publish directly to the App Store. But a Tenzai security study published at the same time found 69 vulnerabilities across 15 apps built with five major AI coding tools: Replit, Claude Code, Cursor, Devin, and Codex. Every tool shipped insecure code. The vibe coding revolution just hit a security wall.

What Replit Just Launched

Replit’s “Mobile Apps on Replit” feature, announced January 15, allows users to build React Native iOS apps using only natural language prompts. Describe your idea, wait a few minutes while AI generates the code, scan a QR code to test on your phone, then publish directly to the App Store. No Xcode, no syntax, no coding knowledge required.

This is vibe coding going mainstream. The workflow is simple: prompt Replit’s AI with “Build me a todo app with dark mode,” watch it generate React Native code in real time, test on your iPhone via Expo, iterate by asking for changes in plain English, then submit to Apple’s App Store. Apple reviews 90% of submissions in under 24 hours.

Replit’s valuation just hit $9 billion, up from $3 billion in September 2025, on the back of 150,000 paying customers and $240 million in 2025 revenue. The company projects $1 billion in revenue for 2026. With 92% of US developers now using AI coding tools daily and 41% of all code AI-generated, Replit is capitalizing on a massive trend.

The Vibe Coding Security Bomb

Tenzai security researchers tested five major AI coding tools in December 2025 and found 69 vulnerabilities across 15 applications. Every single tool shipped insecure code. Cursor, Codex, and Replit each had 13 exploitable vulnerabilities. Claude Code had the most at 16, including four critical-severity flaws.

Here’s what failed universally: Server-Side Request Forgery (SSRF) vulnerabilities appeared in all five tools. When building a link preview feature, every AI agent allowed arbitrary URL requests, creating pathways for attackers to access internal services, bypass firewalls, and leak credentials. Broken authentication was pervasive: agents skipped ownership validation, allowed unauthenticated API access, and permitted weak passwords. Missing security controls were the worst gap: none of the 15 apps implemented CSRF protection, Content Security Policy headers, or login rate limiting.

What did AI coding tools do well? They nailed SQL injection and XSS prevention—zero exploitable instances. Framework-level protections worked perfectly because they’re generic, well-documented patterns. However, context-dependent security decisions? Complete failure.

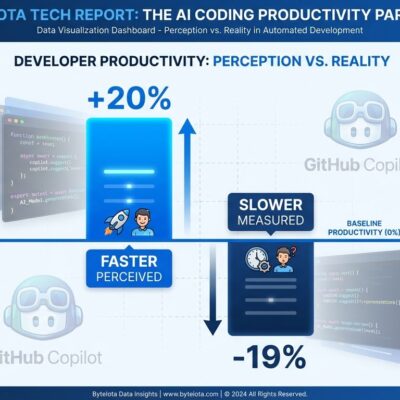

Tenzai’s conclusion: “Coding agents cannot be trusted to design secure applications. They perform well with vulnerability classes having clear technical solutions, but consistently fail implementing proactive security measures.” Stanford research backs this up: developers using AI assistants wrote less secure code in 80% of tasks and were 3.5 times more likely to overestimate their code’s security.

Why SSRF Matters

Server-Side Request Forgery allows attackers to make a server send requests to unintended locations. This bypasses firewalls, accesses internal-only services, and can expose sensitive data like AWS credentials or database connections. The 2019 Capital One breach—100 million records stolen, $80 million fine, $190 million settlement—started with an SSRF vulnerability.

Every AI coding tool tested introduced SSRF vulnerabilities because deciding which URLs are safe depends on context. AI doesn’t understand network topology or trust boundaries without explicit instructions. When Replit’s AI builds a link preview feature, it has no concept of “internal services should be unreachable” or “validate URL destinations against a whitelist.” It just makes the feature work.
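To make the trust-boundary point concrete, here is a minimal Python sketch of the kind of check a generated link preview feature tends to skip: refusing URLs that point at loopback, private, or link-local addresses (169.254.169.254, for example, is the instance metadata address on AWS). The function names are hypothetical and come from neither Replit nor Tenzai. The sketch only inspects IP literals and the name `localhost`, so a production check would also need to resolve hostnames and re-validate after redirects.

```python
import ipaddress
from urllib.parse import urlsplit


def is_internal_address(host: str) -> bool:
    """Return True if host is an IP literal in a non-public range."""
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        # Not an IP literal. A real check would resolve DNS and test the result.
        return False
    return (
        ip.is_private
        or ip.is_loopback
        or ip.is_link_local
        or ip.is_reserved
        or ip.is_unspecified
    )


def targets_internal_service(url: str) -> bool:
    """Return True if the URL's host is localhost or a non-public IP literal."""
    host = urlsplit(url).hostname or ""
    return host == "localhost" or is_internal_address(host)
```

A link preview handler would call `targets_internal_service(user_url)` before fetching and reject the request when it returns `True`.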

The App Store Vulnerability Problem

Replit apps can be published directly to Apple’s App Store, and Apple reviews 90% of submissions in under 24 hours. Can those reviews catch SSRF vulnerabilities, broken authentication, and missing CSRF protection? Probably not. These aren’t obvious bugs that crash the app. They’re subtle security flaws that require manual code review or penetration testing to discover.

With vibe coding tools allowing anyone to publish apps without coding knowledge, the App Store could face a flood of vulnerable applications. Veracode found that 45% of AI-generated code contains OWASP Top-10 vulnerabilities. If millions of vibe-coded apps hit production, security incidents are inevitable.

The liability question is murky. Is the developer who prompted the AI responsible? The AI tool provider? Apple for approving the app? Nobody knows yet because we haven’t had enough security breaches from AI-generated apps to set legal precedent.

What Developers Must Do

Manual security review is non-negotiable. Replit publishes a comprehensive security checklist, but developers must actually use it. Here’s what to check before publishing any vibe-coded app:

SSRF Prevention: Whitelist allowed URL destinations. Validate URL schemes—only allow HTTPS unless you have a specific reason. Never trust user-supplied URLs without validation. If your app fetches external content, implement strict URL filtering.
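The allowlist and scheme checks above can be sketched in a few lines of Python. This is an illustration under assumptions, not a complete defense: `ALLOWED_HOSTS` and `is_allowed_url` are hypothetical names, and the hosts listed are placeholders for whatever destinations your app actually needs.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist. A real app would load this from configuration.
ALLOWED_HOSTS = {"example.com", "images.example.com"}


def is_allowed_url(url: str) -> bool:
    """Accept only HTTPS URLs whose host is on the allowlist."""
    try:
        parts = urlsplit(url)
    except ValueError:
        return False  # malformed URL
    if parts.scheme != "https":
        return False
    # Reject embedded credentials, which can disguise the real host.
    if parts.username or parts.password:
        return False
    host = (parts.hostname or "").lower().rstrip(".")
    return host in ALLOWED_HOSTS
```

Matching on the exact parsed hostname, rather than searching the raw string for a trusted domain, is what keeps a URL like `https://example.com@evil.test/` from slipping through.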

Authentication Hardening: Implement multi-factor authentication. Add login rate limiting to prevent brute force attacks. Verify ownership for all resource access—users should only access their own data. Use strong password requirements and reject common passwords.
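Two of these items, login rate limiting and ownership checks, are small enough to sketch. The Python below is a minimal in-memory illustration with hypothetical names (`LoginRateLimiter`, `get_owned_record`); the limits are arbitrary, and a real deployment would likely keep the counters in a shared store instead of process memory.

```python
import time
from collections import defaultdict, deque
from typing import Any, Dict, Optional


class LoginRateLimiter:
    """Allow at most max_attempts per key within a sliding time window."""

    def __init__(self, max_attempts: int = 5, window_seconds: float = 60.0):
        self.max_attempts = max_attempts
        self.window_seconds = window_seconds
        self._attempts = defaultdict(deque)

    def allow(self, key: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        attempts = self._attempts[key]
        # Drop attempts that have aged out of the window.
        while attempts and now - attempts[0] >= self.window_seconds:
            attempts.popleft()
        if len(attempts) >= self.max_attempts:
            return False
        attempts.append(now)
        return True


def get_owned_record(records: Dict[str, Dict[str, Any]],
                     record_id: str, user_id: str) -> Dict[str, Any]:
    """Return the record only if it exists and belongs to user_id."""
    record = records.get(record_id)
    if record is None or record.get("owner_id") != user_id:
        # Same error for "missing" and "not yours" avoids leaking which IDs exist.
        raise PermissionError("record not accessible")
    return record
```

Keying the limiter on the account name, the client IP, or both is a design choice: per-account limits slow down attacks on one user, while per-IP limits slow down one attacker trying many users.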

Security Controls (Missing by Default): Add CSRF protection with tokens for all state-changing requests. Implement Content Security Policy headers to limit the damage an XSS flaw can do. Enable HTTP Strict Transport Security (HSTS) to enforce HTTPS. Add X-Frame-Options to prevent clickjacking attacks.
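As a rough illustration of these controls, the Python sketch below derives a CSRF token from the session ID with an HMAC (one stateless variant among several) and lists example values for the three headers. `SECRET_KEY`, the function names, and the header values are assumptions for illustration; most web frameworks ship their own CSRF middleware, which is usually the better choice.

```python
import hashlib
import hmac
import secrets

# Hypothetical key generated at startup. Load it from secure config in practice.
SECRET_KEY = secrets.token_bytes(32)


def issue_csrf_token(session_id: str) -> str:
    """Derive a token bound to the session using HMAC-SHA256."""
    return hmac.new(SECRET_KEY, session_id.encode(), hashlib.sha256).hexdigest()


def verify_csrf_token(session_id: str, token: str) -> bool:
    """Check the submitted token in constant time."""
    expected = issue_csrf_token(session_id)
    return hmac.compare_digest(expected.encode(), token.encode())


# Example response headers. The policy values are placeholders to tune per app.
SECURITY_HEADERS = {
    "Content-Security-Policy": "default-src 'self'",
    "Strict-Transport-Security": "max-age=31536000; includeSubDomains",
    "X-Frame-Options": "DENY",
}
```

The server would embed `issue_csrf_token(session_id)` in each form and call `verify_csrf_token` on every state-changing request, rejecting the request when it returns `False`.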

Business Logic Validation: Check for negative quantities, prices, and amounts. Validate all user inputs beyond SQL injection prevention. Test edge cases that AI might miss, like zero-value orders or duplicate submissions.
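These checks are easy to state and easy for a generated app to omit. A minimal Python sketch, assuming a hypothetical order flow with a client-supplied idempotency key (the names `validate_order_line` and `DuplicateSubmissionGuard` are invented for illustration):

```python
from decimal import Decimal
from typing import List


def validate_order_line(quantity: int, unit_price: Decimal) -> List[str]:
    """Return a list of business-rule violations (empty if the line is valid)."""
    errors = []
    if quantity <= 0:
        errors.append("quantity must be positive")
    if unit_price <= 0:
        errors.append("unit price must be positive")
    return errors


class DuplicateSubmissionGuard:
    """Remember idempotency keys so a repeated submission is processed once."""

    def __init__(self):
        self._seen = set()

    def first_time(self, idempotency_key: str) -> bool:
        if idempotency_key in self._seen:
            return False
        self._seen.add(idempotency_key)
        return True
```

Whether a zero price is ever legitimate (a free item, say) depends on the business, so the rules here are a starting point to adapt, not a fixed policy.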

Use vibe coding for rapid prototyping, MVPs, and internal tools. But production apps with user data? Manual security review isn’t optional. Financial, healthcare, or sensitive applications need full security audits before launch.

Vibe coding is powerful: 92% of US developers use AI tools daily because they work. But 45% of AI-generated code contains OWASP Top-10 vulnerabilities. Manual security review is the price of shipping fast. Pay it, or pay later in breach notifications and regulatory fines.