Superpowers, an agentic skills framework for AI coding agents, surged onto GitHub’s trending page today after gaining 1,406 stars, bringing disciplined software development workflows to Claude Code, Codex, and OpenCode. Created by Jesse Vincent in October 2025, the framework tackles a problem every developer using AI agents faces: agents jump straight to code without understanding the requirements, skip testing, and produce inconsistent results. Superpowers enforces mandatory workflows (design, planning, test-driven development, and systematic debugging), turning AI agents from reactive assistants into structured developers that can work autonomously for hours.

Mandatory Skills-Based Workflows: Beyond AI Suggestions

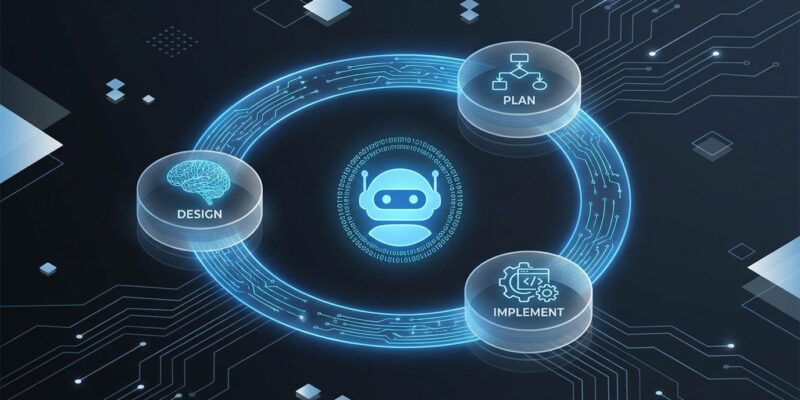

Superpowers uses composable “skills”, markdown files that trigger automatically based on context, to enforce a mandatory design → plan → implement workflow. Unlike optional AI assistance tools that politely suggest best practices, Superpowers makes structured processes mandatory. The TDD skill, for example, “actually deletes code written before tests exist.” No negotiation. No shortcuts.

Jesse Vincent describes the power of this approach: “When I wanted to add git worktree workflows to Superpowers, it was a matter of describing how I wanted the workflows to go…and then Claude put the pieces together.” He also reports that the system lets Claude “work autonomously for a couple hours at a time” without deviating from the approved plan. That’s not an assistant following prompts; it’s an agent executing a structured methodology.

Skills activate automatically based on task context. When Claude detects that you’re building something, the brainstorming skill triggers before a single line of code is written. It asks clarifying questions, explores alternatives, and refines the specification through Socratic dialogue. Only after the design is approved does the planning skill kick in, breaking the work into 2-5 minute tasks with complete implementation details. This isn’t gentle guidance; it’s enforced discipline.
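Because a skill is just a markdown file, its trigger lives in its own text. The sketch below writes a hypothetical brainstorming-style skill to a throwaway directory. It assumes the SKILL.md layout used by Claude Code skills, with YAML frontmatter whose `description` field tells the agent when the skill applies; the skill name, wording, and paths are illustrative and are not copied from the Superpowers repository.

```bash
#!/usr/bin/env bash
# Sketch: write a hypothetical skill file into a throwaway directory.
# Assumption: skills live at <skills-dir>/<skill-name>/SKILL.md, and the
# frontmatter "description" is what the agent matches against the task.
set -euo pipefail

skills_dir="$(mktemp -d)"
skill_name="clarify-before-coding"   # hypothetical skill name
skill_file="$skills_dir/$skill_name/SKILL.md"

mkdir -p "$(dirname "$skill_file")"
cat > "$skill_file" <<'EOF'
---
name: clarify-before-coding
description: Use when the user asks to build a feature, before writing any code
---

# Clarify Before Coding

1. Ask one clarifying question at a time until the goal is clear.
2. Propose two or three approaches with their trade-offs.
3. Present the design in short sections and get explicit approval.
4. Do not write implementation code until the design is approved.
EOF

echo "wrote $skill_file"
```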

Installation: Two Commands for Claude Code, Manual Setup for Others

Platform choice dramatically impacts onboarding complexity. Claude Code users get the easiest path—two commands via the built-in plugin marketplace:

```
/plugin marketplace add obra/superpowers-marketplace
/plugin install superpowers@superpowers-marketplace
# Quit and restart Claude Code
/help # Verify skills loaded
```

That’s it: under a minute from decision to a working agentic framework. OpenCode and Codex, however, require manual setup: cloning the repository, creating symlinks, and platform-specific configuration. For OpenCode:

```bash
git clone https://github.com/obra/superpowers ~/.config/opencode/superpowers
mkdir -p ~/.config/opencode/plugin
ln -s ~/.config/opencode/superpowers/.opencode/plugin/superpowers.js \
  ~/.config/opencode/plugin/superpowers.js
# Restart OpenCode
```

Codex support is experimental and uses a CLI-based integration. The installation gap matters: Claude Code’s plugin system removes most of the friction, while Codex and OpenCode users face a steeper setup. Weigh platform lock-in risk against setup convenience when choosing where to invest.
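For OpenCode, a quick way to confirm the install is to check that the plugin symlink created by the commands above exists and resolves. A minimal sketch; the function name `check_superpowers_link` is hypothetical, and the optional argument only exists so the check can be pointed at a different config root (it is not an OpenCode setting):

```bash
#!/usr/bin/env bash
# Sketch: verify the OpenCode plugin symlink from the install steps.
# Default root mirrors the install commands; $1 overrides it (hypothetical helper).
set -euo pipefail

check_superpowers_link() {
  local root="${1:-$HOME/.config/opencode}"
  local link="$root/plugin/superpowers.js"

  if [ ! -L "$link" ]; then          # -L: the path itself is a symlink
    echo "missing: $link is not a symlink"
    return 1
  fi
  if [ ! -e "$link" ]; then          # -e follows the link; false if the target is gone
    echo "broken: $link -> $(readlink "$link")"
    return 1
  fi
  echo "ok: $link -> $(readlink "$link")"
}
```

Running `check_superpowers_link` with no arguments checks `~/.config/opencode/plugin/superpowers.js` and prints an `ok:` line when the link resolves, or says whether it is missing or broken.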

From Brainstorming to Subagent-Driven Development: Core Skills

Superpowers ships with 10+ core skills covering the full development lifecycle. The brainstorming skill activates when Claude sees you’re building something: it “steps back and asks you what you’re really trying to do” instead of jumping to implementation. Once a design is approved, the planning skill breaks it into 2-5 minute tasks with exact file paths, complete code snippets, and verification steps.

TDD enforcement goes beyond reminders. The test-driven development skill follows the RED-GREEN-REFACTOR cycle (write a failing test, make it pass with minimal code, then clean up) and includes a testing anti-patterns reference. If code appears before tests, Superpowers deletes it. That’s not a suggestion; it’s a constraint. Even experienced developers skip TDD under pressure. Superpowers doesn’t.
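The delete-first rule is easy to picture as a toy gate. This shell function is only an analogy (Superpowers expresses the rule as skill instructions to the agent, not as a script), and both the function name and the `src/<name>.sh` / `tests/<name>_test.sh` layout are hypothetical:

```bash
#!/usr/bin/env bash
# Toy gate: implementation code without a matching test is deleted, not kept.
# Hypothetical layout: <project>/src/<name>.sh is covered by <project>/tests/<name>_test.sh.
set -euo pipefail

enforce_test_first() {
  local project="$1" name="$2"
  local impl="$project/src/$name.sh"
  local test_file="$project/tests/${name}_test.sh"

  if [[ -f "$impl" && ! -f "$test_file" ]]; then
    rm -- "$impl"   # no "keeping it as reference": start again from a failing test
    echo "deleted src/$name.sh: write tests/${name}_test.sh first"
    return 1
  fi
  echo "ok: $name"
}
```

Calling `enforce_test_first ./project parser` after `src/parser.sh` was written without `tests/parser_test.sh` removes the file and returns a non-zero status; once the test exists, the implementation is left alone.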

The systematic debugging skill implements a 4-phase root cause process that draws on root-cause tracing, defense-in-depth validation, and condition-based waiting techniques. Subagent-driven development, meanwhile, dispatches specialized agents for individual tasks, and each task’s output goes through a two-stage review: first for specification compliance, then for code quality. This supports parallel work on complex features and is designed to limit the drift that typically sets in when agents work unsupervised for extended periods.
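Condition-based waiting is the most concrete of the debugging techniques named above: instead of sleeping for a fixed interval and hoping an asynchronous operation has finished, poll for the condition you actually need, with a timeout. A minimal shell sketch; `wait_for` and its argument order are hypothetical:

```bash
#!/usr/bin/env bash
# Sketch of condition-based waiting: poll a condition instead of a fixed sleep.
# Usage: wait_for <timeout-seconds> <command...>
set -euo pipefail

wait_for() {
  local timeout="$1"; shift
  local deadline=$(( SECONDS + timeout ))   # SECONDS: bash's whole-second clock

  until "$@"; do                            # re-run the condition until it succeeds
    if (( SECONDS >= deadline )); then
      echo "timed out after ${timeout}s waiting for: $*" >&2
      return 1
    fi
    sleep 0.1
  done
}
```

Unlike a bare `sleep 5`, this returns as soon as the condition holds and fails loudly when it never does, which makes timing-dependent failures easier to diagnose.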

Git worktree skills automatically manage parallel branches, giving each agent its own working directory so that multiple agents can work on different features simultaneously without touching each other’s in-progress changes (merging the branches afterwards can still produce conflicts). The code-review skill launches a dedicated reviewer agent to catch issues before they land. These aren’t theoretical capabilities; they’re working skills that ship with the framework.
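The worktree mechanism itself is plain git. A minimal sketch of what the skill automates, run in a throwaway repository; the branch and directory names are hypothetical:

```bash
#!/usr/bin/env bash
# Sketch: give two agents isolated checkouts of one repository via git worktree.
set -euo pipefail

base="$(mktemp -d)"
repo="$base/app"
git init -q "$repo"
git -C "$repo" -c user.name=demo -c user.email=demo@example.com \
  commit -q --allow-empty -m "initial commit"

# One worktree (and branch) per feature; each is a separate working directory.
git -C "$repo" worktree add -b feature-auth "$base/app-auth"
git -C "$repo" worktree add -b feature-search "$base/app-search"

# An edit in one worktree does not appear in the other.
echo "login()" > "$base/app-auth/auth.txt"
if [ ! -e "$base/app-search/auth.txt" ]; then
  echo "feature-search checkout is unaffected"
fi

git -C "$repo" worktree list
```

When a feature is merged, `git worktree remove <path>` deletes its checkout. Merging still follows normal git rules, so two branches that edit the same lines can conflict at that point.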

Real-World Results: Explosive Growth, Mixed Reviews

Superpowers gained 27,000 stars in three months, roughly 9,000 stars per month, making it one of the fastest-growing developer tools in the AI coding space. The 1,406 stars gained today pushed it to #3 on GitHub trending. Still, Hacker News discussions reveal the complexity beneath the hype.

While Jesse Vincent reports successful multi-hour autonomous development sessions, critics argue that the framework lacks rigorous benchmarks. One commenter put it bluntly: “Seems cute, but ultimately not very valuable without benchmarks or some kind of evaluation.” Another noted that “using advanced agentic systems makes development ‘harder work’ cognitively”: the mental overhead of managing structured workflows can be exhausting, even when it improves code quality.

The “trivial task” problem persists across AI coding tool discussions. One observer captured the frustration: “The hardest problem in computer science in 2025 is presenting an example of AI-assisted programming that somebody won’t call trivial.” However, Simon Willison counters that Vincent’s approach is “wildly more ambitious than most other people” working with AI agents.

The cognitive load trade-off is real. Superpowers adds structure, which means more upfront thinking: design before code, planning before implementation, tests before features. That’s work, and whether it pays off depends on project complexity and quality requirements.

When Superpowers Makes Sense (and When It Doesn’t)

Superpowers excels at complex features requiring two or more hours of development, production code that needs high quality and test coverage, and teams frustrated with inconsistent AI agent behavior. The mandatory workflows shine when stakes are high: production systems, customer-facing features, compliance-critical code.

Skip Superpowers for quick bug fixes, single-file scripts, prototyping, and exploratory coding where structure limits creativity. The workflow overhead isn’t justified when you’re testing ideas, debugging edge cases, or making trivial changes. Using Superpowers for “hello world” examples is overkill, like bringing a full QA process to fixing a typo.

The decision comes down to project complexity versus overhead tolerance. Multi-file features with autonomous agent work? Superpowers delivers value. Production projects requiring systematic debugging and TDD enforcement? Superpowers is likely to pay for itself in reduced technical debt. Quick refactors where plain Claude Code or Codex works fine? Skip the framework and move fast.

Key Takeaways

- Superpowers enforces mandatory workflows (design, planning, TDD, systematic debugging) through composable skills that trigger automatically based on context, turning AI agents from assistants into disciplined developers

- Installation ranges from trivial (Claude Code: 2 commands, under 1 minute) to manual (OpenCode and Codex: repository cloning, symlinks, platform-specific configuration)

- The framework enables autonomous multi-hour development sessions where agents work independently while following approved plans, but the cognitive overhead is real: managing structured workflows requires mental effort

- With 27,000 stars in 3 months and 1,406 gained today, Superpowers shows explosive growth, but Hacker News critics correctly note the lack of rigorous benchmarks and question whether examples demonstrate real-world value

- Use Superpowers for complex features, production code, and team consistency. Skip it for quick fixes, prototypes, and exploratory coding where structure becomes overhead

Superpowers represents a fundamental shift in how we think about AI coding agents: not as assistants waiting for instructions but as junior developers who need a structured methodology. Whether that shift justifies the workflow overhead depends on what you’re building and the quality standards you hold yourself to.