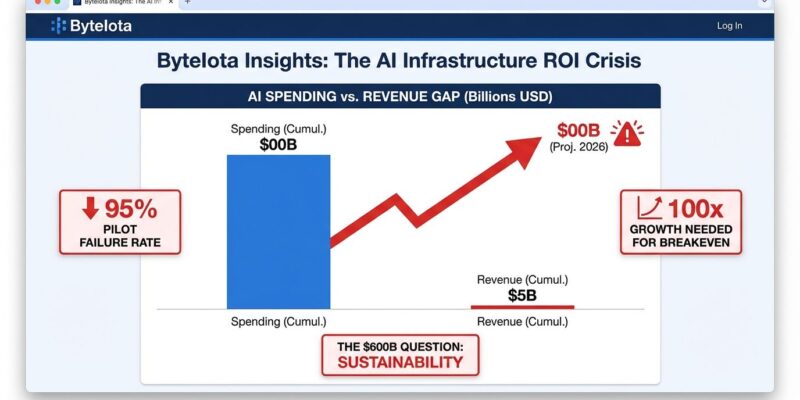

Tech’s biggest hyperscalers spent roughly $400 billion on AI infrastructure in 2025, with projections hitting $500 billion in 2026 and $600 billion in 2027. That is part of a $3 trillion investment Moody’s estimates is needed by 2030. But the numbers reveal a crisis: AI services generated only $25 billion in revenue in 2025, roughly 6% of what was spent. Goldman Sachs also found that data centers face $40 billion in annual depreciation costs while generating only $15-20 billion in revenue. The math doesn’t work. It’s the biggest investment gamble in tech history, and the cracks are starting to show.
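
These ratios are easy to check. The short Python sketch below uses only the figures quoted above, in billions of US dollars. It compares 2025 AI revenue with 2025 spending, then shows how much of the annual depreciation bill the Goldman Sachs revenue range actually covers.

```python
# Figures quoted above, in billions of US dollars.
CAPEX_2025_B = 400.0               # hyperscaler AI infrastructure spending, 2025
AI_REVENUE_2025_B = 25.0           # AI services revenue, 2025
DEPRECIATION_B = 40.0              # annual data center depreciation (Goldman Sachs)
DC_REVENUE_RANGE_B = (15.0, 20.0)  # annual data center revenue range (Goldman Sachs)

# Share of 2025 spending that 2025 AI revenue represents.
revenue_to_capex = AI_REVENUE_2025_B / CAPEX_2025_B

# Fraction of annual depreciation covered by each end of the revenue range,
# and the depreciation left uncovered in each case.
coverage = [r / DEPRECIATION_B for r in DC_REVENUE_RANGE_B]
shortfall_b = [DEPRECIATION_B - r for r in DC_REVENUE_RANGE_B]

print(f"AI revenue / capex: {revenue_to_capex:.2%}")
print(f"Revenue covers {coverage[0]:.1%} to {coverage[1]:.1%} of depreciation")
print(f"Uncovered depreciation: ${shortfall_b[1]:.0f}B to ${shortfall_b[0]:.0f}B per year")
```

$25 billion against $400 billion works out to about 6.25%. On the depreciation side, revenue covers only 37.5-50% of the bill. That leaves $20-25 billion a year uncovered before a single operating cost is counted.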

The MIT Reality Check: 95% of AI Pilots Fail

MIT’s “GenAI Divide” study, published last year, dropped a bomb: 95% of enterprise AI pilots delivered zero measurable P&L impact despite $30-40 billion in investment. The study drew on 52 executive interviews, 153 leader surveys, and an analysis of 300 public AI deployments. While 80% of organizations had piloted tools like ChatGPT or Copilot and 40% had deployed them, these tools mainly boost individual productivity, not business returns.

Only 5% of integrated systems created significant value. What separates the winners from the other 95%? Tools bought from external vendors succeed about twice as often as internal builds, yet most companies still sink resources into building in-house. The winners also target operations and finance, areas where AI delivers measurable ROI, while most budgets flow to sales and marketing, where returns are lowest.

Moreover, a “shadow AI economy” has emerged. MIT found that workers at over 90% of the companies surveyed regularly use personal AI tools like ChatGPT and Claude for work, while only 40% of companies have official LLM subscriptions. The infrastructure is being built for a corporate adoption path that employees are already bypassing.

Sequoia’s “$600B Question” Quantifies the Gap

Sequoia Capital has been tracking this gap closely. Its analysis evolved from “AI’s $200B question,” with a $125B hole, to “AI’s $600B question,” with a $500B hole. The gap is growing faster than revenue. Partner David Cahn calculated that AI companies must earn about $600 billion per year to pay for their infrastructure. The reality? OpenAI’s annualized revenue reached $3.4 billion in 2024 (up from $1.6 billion in late 2023), yet its losses exceeded $11.5 billion in a single quarter of 2025.
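
Sequoia’s two framings also reveal how much AI revenue each one credits. The sketch below backs that figure out from the numbers quoted above. It rests on one assumption, which is our reading rather than Sequoia’s stated formula: that the “hole” equals required revenue minus the revenue Sequoia expected to materialize.

```python
# Sequoia's two framings, as quoted above (billions of US dollars per year).
framings = {
    "AI's $200B question": {"required": 200.0, "hole": 125.0},
    "AI's $600B question": {"required": 600.0, "hole": 500.0},
}

# Assumption: hole = required revenue - expected revenue,
# so expected revenue can be backed out as required - hole.
implied_revenue = {name: v["required"] - v["hole"] for name, v in framings.items()}

old, new = "AI's $200B question", "AI's $600B question"
hole_growth = framings[new]["hole"] / framings[old]["hole"]
revenue_growth = implied_revenue[new] / implied_revenue[old]

for name, rev in implied_revenue.items():
    print(f"{name}: implied revenue ${rev:.0f}B, hole ${framings[name]['hole']:.0f}B")
print(f"Hole grew {hole_growth:.1f}x; implied revenue grew {revenue_growth:.2f}x")
```

Under that reading, the hole quadrupled while the revenue credited against it grew by only about a third. That is what “the gap is growing faster than revenue” looks like in numbers.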

For the AI infrastructure buildout to make economic sense, AI revenues must grow from $20 billion to $2 trillion annually by 2030, a 100-fold increase. So far, nobody is close to those numbers. The revenue gap between OpenAI and other AI startups continues to widen, and most of those startups are nowhere near profitability. In effect, Sequoia is warning investors that the emperor has no clothes.
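
A 100-fold increase is easier to grasp as an annual growth rate. The text does not state the base year for the $20 billion figure, so the sketch below tries two assumptions: a 2025 base, leaving five years to 2030, and a 2024 base, leaving six.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


START_B, END_B = 20.0, 2_000.0  # AI revenue in billions of US dollars

# Base year is an assumption: 2025 (5 years to 2030) or 2024 (6 years).
for years in (5, 6):
    rate = cagr(START_B, END_B, years)
    print(f"{years} years: {rate:.0%} growth per year")
```

Under either assumption, revenue would have to more than double every year (about 2.5x a year over five years, about 2.15x over six), with no slowdown along the way.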

The Spending Escalation Nobody Can Stop

Six US hyperscalers (Microsoft, Amazon, Google, Meta, Oracle, and CoreWeave) spent roughly $400 billion in 2025. They are tracking toward $500-600 billion in 2026 (a 36% year-over-year increase) and over $600 billion in 2027. Amazon and Microsoft now each exceed $100 billion in annual capital expenditure, at $125B and $140B (including leases) respectively, with Google at $93B and Meta at $72B. Some hyperscalers are dedicating 45-57% of revenues to infrastructure spending.

Roughly 75% of 2026 spending, about $450 billion, is tied directly to AI infrastructure rather than traditional cloud services. Moody’s projects that investment will peak in 2029 and then decline in 2030. But here’s the uncomfortable question: what happens between now and then if revenue doesn’t materialize?
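
The 2026 figures in this section sit at different points in the $500-600 billion range. The quick reconciliation below uses only the numbers quoted above, in billions of US dollars.

```python
CAPEX_2025_B = 400.0     # 2025 hyperscaler spending
YOY_GROWTH_2026 = 0.36   # quoted year-over-year increase for 2026
AI_SPEND_2026_B = 450.0  # 2026 spending tied directly to AI
AI_SHARE_2026 = 0.75     # that spending as a share of the 2026 total

# What a 36% increase on 2025 spending works out to.
capex_2026_from_growth = CAPEX_2025_B * (1 + YOY_GROWTH_2026)
# The 2026 total implied by $450B being 75% of it.
capex_2026_implied = AI_SPEND_2026_B / AI_SHARE_2026
# The AI-tied portion if the total lands at the growth-based figure instead.
ai_spend_at_growth_figure = capex_2026_from_growth * AI_SHARE_2026

print(f"36% growth on $400B: ${capex_2026_from_growth:.0f}B")
print(f"Total implied by $450B at 75%: ${capex_2026_implied:.0f}B")
print(f"75% of the growth-based total: ${ai_spend_at_growth_figure:.0f}B")
```

A 36% increase lands at about $544 billion. The $450 billion AI figure, however, implies a $600 billion total, the top of the range. If spending comes in at the growth-based figure, the AI-tied portion would be closer to $408 billion.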

Echoes of the Dot-Com Bubble

The parallels to the late 1990s are impossible to ignore. During the dot-com boom, telecom companies laid over 80 million miles of fiber optic cable on the strength of inflated demand forecasts, and most of it sat dark for years. Today’s $600 billion AI infrastructure buildout follows the same playbook: building massive capacity for demand that hasn’t materialized.

Valuations are more conservative today (the Nasdaq-100 trades at 28x forward earnings versus 89x at the 1999 peak), but the infrastructure overhang is comparable. Microsoft, Meta, Tesla, Amazon, and Google invested about $560 billion in AI infrastructure over two years but brought in just $35 billion in AI-related revenue combined. That is about 6 cents of revenue, not profit, for every dollar invested.

Physical infrastructure bubbles are harder to unwind than stock bubbles because hardware depreciates. Next-generation chips like Nvidia’s B100 will accelerate the obsolescence of current H100 GPUs (about $30K each, renting at $1.53-2.63 per hour), compounding losses if revenue doesn’t catch up.
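
How long does an H100 take to earn back its price? The rough sketch below works it out from the purchase price and rental range quoted above. It is deliberately optimistic: it ignores power, cooling, networking, staff, and financing costs. The 60% utilization case is a hypothetical comparison point, not a measured figure.

```python
HOURS_PER_YEAR = 24 * 365
H100_PRICE_USD = 30_000.0            # approximate purchase price quoted above
RENTAL_USD_PER_HOUR = (1.53, 2.63)   # rental range quoted above
UTILIZATIONS = (1.0, 0.6)            # 60% is hypothetical, for comparison only


def payback_years(price: float, hourly_rate: float, utilization: float) -> float:
    """Years of rental income needed to recover the purchase price.

    Ignores operating and financing costs, all of which would stretch
    the real payback period.
    """
    billable_hours = HOURS_PER_YEAR * utilization
    return price / (hourly_rate * billable_hours)


for rate in RENTAL_USD_PER_HOUR:
    for util in UTILIZATIONS:
        years = payback_years(H100_PRICE_USD, rate, util)
        print(f"${rate:.2f}/h at {util:.0%} utilization: {years:.1f} years")
```

Even on these generous assumptions, a GPU rented at the low end of the range needs more than two years of round-the-clock billing just to recover its sticker price. If a newer generation pushes rental rates down before then, the payback period stretches further.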

The Energy Wall Might Come First

Even if the revenue projections were accurate, physical constraints may prevent the infrastructure from being deployed. Multi-year grid connection delays are now standard, so power availability, rather than location or cost, has become the primary criterion for choosing data center sites. PJM Interconnection, the largest US grid operator, which serves 65 million people, projects a 6-gigawatt shortfall below reliability requirements by 2027.

Approximately 70% of the US electrical grid was built between the 1950s and 1970s and is approaching the end of its service life. Meanwhile, US electricity demand is projected to rise from 4,110 billion kilowatt-hours in 2024 to 4,260 billion kWh in 2026, and data center demand is expected to roughly triple by 2035 (from 200 TWh to 640 TWh). Hyperscalers are scrambling to build on-site power generation (natural gas, batteries, even nuclear partnerships) because the grid can’t scale fast enough.
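
Because 1 billion kWh equals 1 TWh, the figures above can be compared directly as annual growth rates. The text does not say which year the 200 TWh data center figure refers to. The sketch below assumes 2024, and the comparison shifts if that assumption is wrong.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


# 1 billion kWh = 1 TWh, so both series share a unit.
TOTAL_2024_TWH, TOTAL_2026_TWH = 4_110.0, 4_260.0
DC_START_TWH, DC_2035_TWH = 200.0, 640.0
DC_BASE_YEAR = 2024  # assumption: the text does not state the starting year

total_growth = cagr(TOTAL_2024_TWH, TOTAL_2026_TWH, 2)
dc_growth = cagr(DC_START_TWH, DC_2035_TWH, 2035 - DC_BASE_YEAR)
# Data center share of total demand in the (assumed) base year.
dc_share_start = DC_START_TWH / TOTAL_2024_TWH

print(f"Total demand: {total_growth:.1%} per year")
print(f"Data centers: {dc_growth:.1%} per year")
print(f"Data center share at the start: {dc_share_start:.1%}")
```

Under that assumption, total demand grows by under 2% a year, while data center demand compounds at roughly 11% a year. That is about six times faster, starting from under 5% of the total.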

What Developers Need to Know

Total Cost of Ownership for running production-grade AI models at scale has become what Deloitte calls “the single biggest barrier to adoption.” The narrative has fundamentally shifted from “what can AI do?” to “what can our infrastructure afford to power?”

Developers are responding. Brute-force scaling of frontier models is giving way to cost-aware architecture that pairs large central models with smaller, highly efficient domain-specific LLMs at the edge. AI FinOps, which combines financial observability with strategic restraint, is emerging as a critical skill. The consolidation signal is clear, too: ClickHouse’s $15 billion valuation and its January 16 acquisition of Langfuse, an open-source LLM observability platform, show the market is maturing rapidly.
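
One common shape for this kind of cost-aware architecture is a cascade. Every request goes to a small, cheap model first, and the large model is called only when the small one is unsure. Spend is logged per model along the way. The sketch below is a minimal, hypothetical version. The model names, per-token prices, and confidence scores (assumed to come from some upstream check) are placeholders, and this is not the design of any particular vendor or of Langfuse.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Model:
    name: str
    usd_per_1k_tokens: float  # hypothetical blended price per 1,000 tokens


@dataclass
class CostLedger:
    """Accumulates spend per model (the 'financial observability' side of FinOps)."""
    spend: dict[str, float] = field(default_factory=dict)

    def record(self, model: Model, tokens: int) -> float:
        cost = tokens / 1000 * model.usd_per_1k_tokens
        self.spend[model.name] = self.spend.get(model.name, 0.0) + cost
        return cost

    def total(self) -> float:
        return sum(self.spend.values())


# Hypothetical models and prices, for illustration only.
SMALL = Model("small-domain-llm", 0.0005)
LARGE = Model("large-frontier-llm", 0.03)


def route(tokens: int, small_confidence: float, ledger: CostLedger,
          threshold: float = 0.8) -> str:
    """Cascade routing: the small model handles every request first; the large
    model is called (and billed) only when the small model's confidence score,
    assumed to come from an upstream check, falls below `threshold`."""
    ledger.record(SMALL, tokens)
    if small_confidence >= threshold:
        return SMALL.name
    ledger.record(LARGE, tokens)
    return LARGE.name


ledger = CostLedger()
# (tokens, confidence) pairs standing in for real traffic.
requests = [(1200, 0.95), (800, 0.91), (2000, 0.40), (500, 0.88)]
served_by = [route(t, c, ledger) for t, c in requests]

# Baseline for comparison: send everything straight to the large model.
all_large = sum(t for t, _ in requests) / 1000 * LARGE.usd_per_1k_tokens

print(served_by)
print(f"cascade: ${ledger.total():.4f}  all-large: ${all_large:.4f}")
```

Escalated requests are billed twice, once by each model, so a cascade only pays off when most traffic stays on the small model. The per-model ledger is what makes that trade-off visible.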

The winners won’t be those with the most infrastructure. They’ll be the companies that deliver actual value efficiently while the hyperscalers figure out how to justify their spending.

The 2026 Reckoning

Moody’s warned in its January 14 report that “demonstrating actual revenue generation will become increasingly important in the AI ecosystem to silence growing chatter about an ‘AI bubble.’” The 2026-2027 window is the reckoning period. Either revenue accelerates dramatically, delivering the 100x growth described above, or we’re looking at the largest infrastructure write-down in tech history.

Watch these indicators: hyperscaler earnings calls (are they slowing capex?), enterprise pilot-to-production conversion rates (is that 5% improving?), and power grid availability (can infrastructure even be deployed?). The math currently doesn’t work. Something has to give.