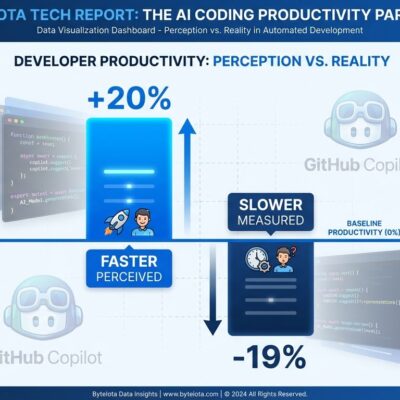

MIT Technology Review just crowned generative coding as a breakthrough technology for 2026, recognizing how AI tools like GitHub Copilot and Cursor are transforming software development. Microsoft says AI writes 30% of its code. Google reports 25%. Meta predicts 50% soon. But here’s the problem: a rigorous study from the nonprofit research organization METR found experienced developers are actually 19% slower when using AI tools—despite believing they’re 20% faster. The gap between breakthrough recognition and productivity reality has never been wider.

The 40-Point Perception Gap

METR’s randomized controlled trial studied 16 experienced developers across 246 tasks in mature projects where they had an average of five years’ experience. The results contradict the productivity gains the industry has been claiming.

Before starting, developers predicted they’d complete tasks 24% faster with AI. After finishing, they estimated AI had made them 20% faster. Objective measurement showed the opposite: completion times increased 19%. That’s a gap of 39 percentage points, roughly 40, between perception and reality. Economics experts predicted 39% faster completion. ML experts said 38% faster. Both were spectacularly wrong.
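The arithmetic behind the gap is easy to check. The sketch below uses only the figures quoted above. The names are invented for this illustration, and treating "X% faster" and "X% slower" as signed changes in completion time is an assumption made for the comparison, not METR's own analysis.

```python
# Figures quoted in the article, written as signed changes in completion time
# (negative = tasks finish sooner, positive = tasks take longer).
# Treating "faster" and "slower" as points on one signed scale is an assumption.
FORECAST_BEFORE = -24  # developers' prediction before starting
ESTIMATE_AFTER = -20   # developers' estimate after finishing
MEASURED = 19          # measured change in completion time


def perception_gap(believed: int, measured: int) -> int:
    """Gap in percentage points between what was believed and what was measured."""
    return measured - believed


gap_after = perception_gap(ESTIMATE_AFTER, MEASURED)
gap_before = perception_gap(FORECAST_BEFORE, MEASURED)

print(f"Gap vs. post-task estimate: {gap_after} points")
print(f"Gap vs. pre-task forecast: {gap_before} points")
```

On these inputs the gap against the post-task estimate is 39 points, and the gap against the pre-task forecast is 43.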

This isn’t some vendor-funded case study cherry-picking results. METR is a nonprofit research organization with no AI tools to sell. When independent measurement contradicts vendor claims this dramatically, developers need to pay attention.

Trust is Falling—For the First Time Ever

Stack Overflow’s 2025 developer survey captures the industry’s growing skepticism. Trust in AI accuracy dropped from 40% to 29%—the first decline since these tools launched. More developers now actively distrust AI (46%) than trust it (33%). Only 3% report “highly trusting” AI output.

The top frustration, cited by 45% of respondents, perfectly captures the problem: “AI solutions that are almost right, but not quite.” That phrase should haunt every AI coding company. Almost right means more debugging. Almost right means 66% of developers now spend more time fixing AI-generated code. Almost right is why 75% still ask another person when they need reliable help.

Experienced developers show the steepest skepticism: just 2.6% highly trust AI tools, while 20% highly distrust them. These are the developers who can spot when AI is confidently wrong.

The Hidden Quality Tax

GitClear’s analysis of 211 million changed lines of code reveals why that 19% slowdown matters less than what’s happening to code quality. Code duplication increased from 8.3% to 12.3% of changed lines between 2020 and 2024, a rise of 4 percentage points (about 48% in relative terms) over the period in which AI adoption took off. Refactoring collapsed from 25% of changed lines in 2021 to less than 10% in 2024.
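Percentage shares like these are easy to misread, so it can help to put them on a common footing. The sketch below is a minimal illustration using only the numbers quoted in this paragraph. The helper and constant names are made up for the example, and the refactoring figure uses 10% as an upper bound because the source only says "less than 10%".

```python
def relative_change(old: float, new: float) -> float:
    """Relative change from old to new, as a fraction of old."""
    return (new - old) / old


# Shares of changed lines, in percent, as quoted in the article.
DUP_2020, DUP_2024 = 8.3, 12.3
# "Less than 10%" is treated as exactly 10, so the decline below is a lower bound.
REFACTOR_2021, REFACTOR_2024_MAX = 25.0, 10.0

dup_points = DUP_2024 - DUP_2020                     # change in percentage points
dup_ratio = DUP_2024 / DUP_2020                      # multiple of the 2020 level
dup_relative = relative_change(DUP_2020, DUP_2024)   # relative growth
refactor_relative = relative_change(REFACTOR_2021, REFACTOR_2024_MAX)

print(f"Duplication: +{dup_points:.1f} points, {dup_ratio:.2f}x, {dup_relative:+.0%}")
print(f"Refactoring: at least {abs(refactor_relative):.0%} lower")
```

On these inputs the duplicated share ends at about 1.48 times its 2020 level, while refactoring's share of changed lines falls by at least 60%.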

Yes, developers produce 10% more durable code that doesn’t get deleted immediately. But durable doesn’t mean good. It means code that sticks around—accumulating technical debt, violating DRY principles, creating maintainability nightmares for future teams. GitClear’s assessment is blunt: AI-generated code “resembles an itinerant contributor, prone to violate the DRY-ness of the repos visited.”

Companies celebrating that 10% gain in durable code as a productivity boost aren’t accounting for the quality tax they’ll pay in twelve months when that duplicated, under-refactored code becomes unmaintainable.

Why MIT Technology Review Still Got It Right

None of this means MIT Technology Review made the wrong call. Generative coding absolutely deserves breakthrough recognition—just not for the reasons vendors are marketing.

The transformation is real: 90% of Fortune 100 companies have adopted GitHub Copilot. More than 50,000 enterprise organizations have deployed AI coding tools. Mark Zuckerberg predicts half of Meta’s development will be AI-generated within a year. Microsoft’s CTO projects 95% of all code will be AI-generated within five years. These aren’t marginal shifts.

The breakthrough isn’t that AI makes developers faster right now. It’s that AI is fundamentally changing what software development means—who can do it, how teams collaborate, what problems become tractable. That’s transformative even if current productivity claims don’t hold up.

Where AI Actually Delivers

Developers interviewed by MIT Technology Review agree on four use cases where AI genuinely helps: boilerplate code generation, writing tests, fixing bugs, and explaining unfamiliar code to new team members. GitHub’s own research reports 55% faster completion on this kind of repetitive, well-defined task.

The problem is net productivity—the entire workflow, not isolated moments of assistance. AI excels at churning out component templates and generating test cases. It fails at complex problem-solving requiring deep understanding, novel solutions to unfamiliar problems, and architectural decisions that determine long-term maintainability.
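A back-of-the-envelope model shows how a large speedup on isolated tasks can coexist with a net slowdown. The sketch below is purely illustrative: the function name, the 20% share of work, and the 30% overhead are hypothetical values chosen for the example, not measurements from any study. Only the 55% figure comes from the text, and reading "55% faster" as 55% less time is itself an assumption.

```python
def net_time_ratio(assisted_share: float,
                   assisted_time_cut: float,
                   other_time_increase: float) -> float:
    """Total time with AI divided by total time without AI.

    assisted_share:      fraction of baseline time spent on work AI handles well
    assisted_time_cut:   fractional time saved on that work (0.55 = 55% less time)
    other_time_increase: fractional time added to all remaining work
    """
    assisted = assisted_share * (1 - assisted_time_cut)
    other = (1 - assisted_share) * (1 + other_time_increase)
    return assisted + other


# Hypothetical scenario: 20% of the work is boilerplate-like and gets 55% quicker,
# while the other 80% takes 30% longer because of reviewing and fixing AI output.
universal_use = net_time_ratio(0.20, 0.55, 0.30)

# Same hypothetical split, but AI is used only where it helps (no overhead elsewhere).
selective_use = net_time_ratio(0.20, 0.55, 0.0)

print(f"AI everywhere:        {universal_use:.2f}x baseline time")
print(f"AI only where strong: {selective_use:.2f}x baseline time")
```

Under these made-up inputs, applying AI everywhere costs about 13% more time overall, while restricting it to the boilerplate-like share saves about 11%. The exact numbers matter less than the shape: the net result depends on how much of the workflow the fast cases cover and on what the tool costs everywhere else.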

Developers optimizing for AI’s strengths while avoiding its weaknesses see real gains. Those treating AI as a universal productivity multiplier end up 19% slower, confused about why their subjective experience doesn’t match objective reality.

The Honest Conversation 2026 Needs

Generative coding deserves its breakthrough status. The transformation is happening, the adoption is massive, the potential is enormous. But 2026 needs to move past vendor marketing toward honest measurement.

Independent research shows developers are slower, trust is falling, and code quality is declining. That’s not a reason to abandon AI tools; it’s a reason to use them better. Celebrate the boilerplate acceleration. Question the productivity claims. Demand transparency about the gap between perception and reality.

The future of development is human + AI, not AI replacing humans. MIT Technology Review recognized the transformation. Now the industry needs to recognize the limitations.