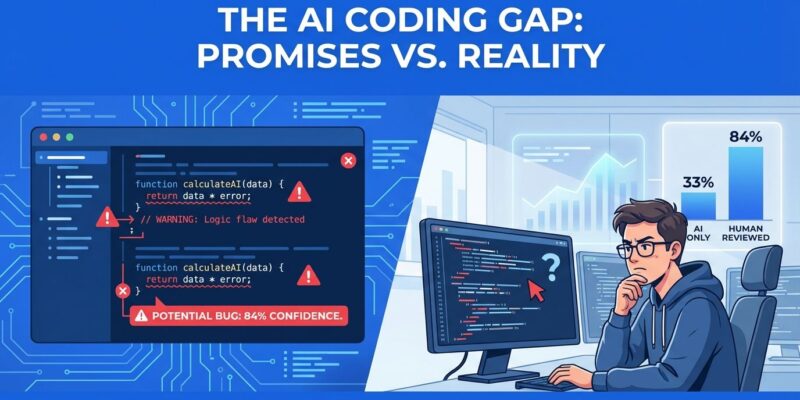

The AI coding honeymoon is over. Stack Overflow’s 2025 Developer Survey reveals a paradox: 84% of developers now use or plan to use AI coding tools, up from 76% last year. Yet only 33% trust the accuracy of what these tools produce. Even more telling, 46% actively distrust AI output. For the first time since the survey began tracking it, positive sentiment fell, from over 70% in 2023 and 2024 to just 60% in 2025.

This isn’t cautious optimism. It’s mass adoption without confidence. Developers are using tools they fundamentally don’t believe in.

The 51-Point Gap Nobody’s Talking About

The 51-point gap between usage and trust tells a story of necessity, not preference. Only 3% of developers “highly trust” AI output. Experienced developers are the most skeptical: just 2.6% report high trust, while 20% say they highly distrust the technology.

Stack Overflow’s characterization says it all: “Developers remain willing but reluctant to use AI.” This isn’t enthusiasm. It’s pragmatism mixed with resignation. Use AI tools or fall behind. Trust them? That’s optional.

A 2025 report from AI coding company Qodo found that 76% of developers operate in a “red zone”: frequent hallucinations combined with low confidence in AI-generated code. It’s the early Stack Overflow copy-paste era all over again: useful but unreliable, human verification required.

Quality Problems Justify the Skepticism

Developer skepticism isn’t paranoia; it’s pattern recognition. CodeRabbit’s 2025 report found that AI-generated pull requests averaged 10.83 issues, compared with 6.45 for human-written ones. That’s roughly 1.7 times as many defects, including 1.75x more logic errors, 1.57x more security vulnerabilities, 3x more readability problems, and 8x more performance inefficiencies.

The Stack Overflow survey shows where this hits hardest. The single biggest frustration, cited by 66% of developers, is AI solutions that are “almost right, but not quite.” That’s worse than obviously wrong code, because catching the flaw demands careful review. Forty-five percent say debugging AI-generated code is more time-consuming. And when they don’t trust an AI answer, 75% would still turn to a person for help.

Here’s the productivity paradox: a 2025 METR study found that experienced open-source developers expected AI to make them 24% faster. Reality? AI made them 19% slower. Any time saved by faster generation was consumed by verification and debugging. Even afterward, developers believed AI had sped them up by 20%, despite being measurably slower.

Forced Adoption in a Shrinking Market

The trust crisis intensifies in the context of the job market. A Stanford study of ADP payroll data found that employment for software developers aged 22 to 25 declined nearly 20% from late 2022 through mid-2025. The decline coincides with the surge in GenAI tools that followed ChatGPT’s November 2022 launch, and it was among the steepest of any occupation studied.

Meanwhile, developers over 30 in the same occupations saw employment grow 6-12%. The age split is stark: junior roles are shrinking while senior roles grow. Entry-level developers face a brutal double bind: adopt the tools that are replacing you, or fall behind.

This creates adoption without trust. It’s not “I believe in this technology.” It’s “I can’t afford not to use it.”

What 2026 Actually Looks Like

The first-ever decline in sentiment signals a market correction. Better to face AI’s limitations now than to accumulate quality debt while pretending the emperor has clothes.

Google CEO Sundar Pichai claims 25% of new code at Google is AI-generated. Microsoft CEO Satya Nadella puts the figure at 20-30%. What they don’t emphasize: all of it requires human review. “Generated” doesn’t mean “trusted”; it means “complementing human work under supervision.” As The New Stack notes, agentic coding “isn’t fully trusted at this point.” Trust remains the bottleneck.

Tool vendors need to address the 1.7x defect rate. Developers need tools with better context understanding, since current tools struggle with structural tasks. The industry needs transparency about limitations, not CEO soundbites optimized for earnings calls.

For developers, the skill set is shifting. Junior developers can’t compete on code production alone. Critical thinking matters more than typing speed. Code review, debugging AI output, and understanding the tools’ limitations are becoming core competencies. The employment gap shows where value lives: judgment, architecture, and verification, not generating boilerplate.

Trust Must Be Earned

Developers are testing marketing claims against operational reality and finding them lacking. Eighty-four percent adoption with 33% trust isn’t a success story; it’s a warning. The tools are here to stay, but the honeymoon is over.

This reality check is overdue. The faster the industry accepts AI coding tools as imperfect augmentation rather than reliable automation, the faster we can build workflows that account for their limitations. Developers get it. Now leadership needs to catch up.