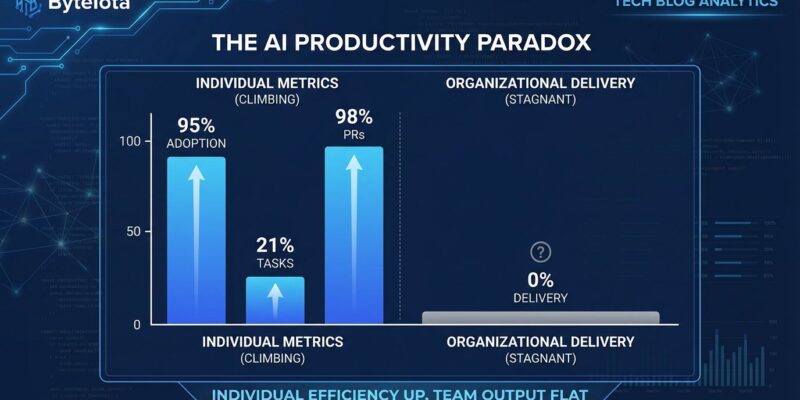

The 2025 DORA Report reveals a productivity paradox challenging billions in AI investments: 95% of developers use AI coding tools, individual task completion jumped 21%, and pull requests merged increased 98%—yet organizational delivery metrics remain completely flat. The disconnect exposes why most companies aren’t seeing ROI from AI tools.

Organizations poured billions into GitHub Copilot, Cursor, and ChatGPT expecting team-level gains. The authoritative DORA data shows individual developer speed doesn’t translate to organizational throughput. The problem isn’t the AI tool—it’s what the AI amplifies.

AI as Amplifier, Not Universal Solution

AI doesn’t fix organizational dysfunction—it magnifies it. The DORA Report states bluntly: “AI’s primary role is as an amplifier, magnifying an organization’s existing strengths and weaknesses.” High-performing teams with strong architecture and code review processes see AI multiply their advantages. Struggling teams with technical debt and siloed workflows find AI accelerates their problems.

The data exposes the mechanism. While developers merge 98% more pull requests with AI assistance, those PRs are 154% larger, take 91% longer to review, and introduce 9% more bugs. Code review becomes the bottleneck. Quality assurance capacity maxes out. Organizational throughput stays flat while individual developers sprint faster on a hamster wheel.
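A rough way to see why review becomes the bottleneck is to combine the figures above. The sketch below assumes total review demand scales as PR count multiplied by review time per PR. That multiplication is an illustrative assumption, not a formula from the report, and `review_load_multiplier` is a hypothetical helper name.

```python
# Back-of-the-envelope sketch: how review demand might scale when both
# PR count and per-PR review time rise. Multiplying the two factors is
# an assumption of this sketch, not a calculation from the DORA report.

def review_load_multiplier(pr_increase_pct: float,
                           review_time_increase_pct: float) -> float:
    """Return total review demand relative to a pre-AI baseline of 1.0."""
    pr_factor = 1 + pr_increase_pct / 100
    time_factor = 1 + review_time_increase_pct / 100
    return pr_factor * time_factor


# Figures quoted in the paragraph above: 98% more PRs, 91% longer reviews.
multiplier = review_load_multiplier(98, 91)
print(f"Review demand vs. baseline: {multiplier:.2f}x")  # prints 3.78x
```

Under that assumption, a team whose review capacity stays fixed faces nearly four times the review work, which is consistent with flat delivery metrics despite faster individual output.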

Thoughtworks engineering leader Chris Westerhold warned: “AI will help you build the wrong thing, faster.” That is exactly what happens when poorly defined architecture meets AI code generation at scale. Developers produce far more code and accumulate architectural debt at the same pace, all while metrics dashboards show green.

Seven Capabilities Separate Success From Failure

DORA 2025 introduces the AI Capabilities Model—seven organizational prerequisites that determine whether AI creates value or chaos. Organizations missing even one capability see AI amplify weaknesses instead of strengths.

The seven capabilities: clear AI governance, healthy data ecosystems, AI-accessible internal data, robust version control, small-batch delivery discipline, user-centric product focus, and quality internal developer platforms (IDPs). That last one matters most. The report found a direct correlation between high-quality IDPs and the ability to unlock AI value. Although 90% of organizations have adopted at least one platform, platform maturity determines outcomes.

Teams without user-centric focus experience negative impacts from AI adoption: AI accelerates feature velocity while simultaneously degrading product-market fit. Platform engineering emerges as the critical enabler. Organizations with mature IDPs see 20% productivity gains that actually show up in delivery metrics, not just individual dashboards. Those without platforms see AI gains absorbed by toolchain fragmentation and context-switching overhead.

The capability checklist gives engineering leaders clarity. Instead of asking “should we buy more AI seats?”, the question becomes “do we have the organizational foundation to make AI work?” Most don’t. The AI hype cycle sold tools when it should have sold architecture consulting.
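One way to make the checklist concrete is to encode the seven capabilities and report which ones a team has not yet put in place. This is a minimal sketch. The `Capability` enum and `missing_capabilities` function are hypothetical names, and the example team is invented for illustration.

```python
from enum import Enum


class Capability(Enum):
    """The seven capabilities as listed in this article."""
    AI_GOVERNANCE = "clear AI governance"
    DATA_ECOSYSTEM = "healthy data ecosystems"
    INTERNAL_DATA_ACCESS = "AI-accessible internal data"
    VERSION_CONTROL = "robust version control"
    SMALL_BATCHES = "small-batch delivery discipline"
    USER_FOCUS = "user-centric product focus"
    PLATFORM_QUALITY = "quality internal developer platforms"


def missing_capabilities(in_place: set[Capability]) -> list[Capability]:
    """Return the capabilities not yet in place, in declaration order."""
    return [cap for cap in Capability if cap not in in_place]


# Hypothetical team self-assessment (made-up data).
team = {
    Capability.AI_GOVERNANCE,
    Capability.VERSION_CONTROL,
    Capability.SMALL_BATCHES,
}

for gap in missing_capabilities(team):
    print(f"Gap: {gap.value}")
```

A plain yes/no checklist is deliberately coarse. A real assessment would probably grade maturity per capability rather than treat each as simply present or absent.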

Team Archetype Matters More Than Tool Choice

DORA 2025 replaces simplistic elite/high/medium/low classifications with seven team archetypes based on performance patterns. The framework reveals why AI works brilliantly for some teams and catastrophically for others.

At the top: “Harmonious High-Achievers” who accelerate without burning out. AI multiplies their existing excellence. At the bottom: “Foundational Challenges” where process gaps and cultural friction prevent AI from delivering anything beyond technical debt. In between: “Legacy Bottleneck,” “Stable and Methodical,” “Pragmatic Performers,” “High Impact Low Cadence,” and “Constrained by Process.”

A Legacy Bottleneck team with GitHub Copilot will underperform a Harmonious High-Achiever team with basic AI assistance. Archetype matters far more than tool selection. Organizations spending heavily on enterprise AI licenses would get better ROI by fixing their archetype first: refactoring legacy systems, streamlining bureaucracy, and strengthening code review culture.

The 12-Month Operationalization Window

Organizations have roughly 12 months to shift from AI experimentation to operationalization before competitive disadvantages compound irreversibly. That warning comes from Thoughtworks’ analysis of the DORA data, and it creates urgency engineering leaders can’t ignore.

Early movers who operationalized AI in 2024—not just bought licenses, but strengthened architecture, implemented platform engineering, established governance—are now seeing organizational-level gains translate to business outcomes. Late adopters still experimenting with AI find individual productivity increases absorbed by systemic dysfunction. The gap widens monthly as the AI amplifier effect compounds advantages for high-capability organizations.

The 12-month window isn’t arbitrary. It’s how long the competitive gap takes to become structural. After that, late movers face years of architectural rework under pressure from competitors shipping faster. The time to operationalize is now, not when “conditions are perfect.”

What Engineering Leaders Should Do Now

DORA 2025 and Thoughtworks provide specific action items. First: strengthen platform engineering. Treat internal infrastructure as a product with self-service tools, unified developer experience, and built-in quality guardrails. Platform engineering adoption is projected to hit 80% of large organizations by 2026, up from 45% in 2022. Organizations without mature platforms can’t operationalize AI—the toolchain fragmentation kills any productivity gains.

Second: operate as an optimization engine using evidence-based data, not intuition. Monitor whether AI amplifies strengths or weaknesses. Track instability metrics—bug rates, code review time, change failure rates—not just velocity metrics. Organizations measuring only PR count and task completion miss the dysfunction hiding beneath green dashboards.
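As an illustration of tracking instability alongside velocity, the sketch below computes change failure rate (failed changes divided by total changes) and median review time from a list of change records. The `Change` record shape and the sample values are assumptions made up for this example. Real data would come from your deployment and code review tooling.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Change:
    """One merged and deployed change (hypothetical record shape)."""
    review_hours: float   # time from review request to approval
    caused_failure: bool  # True if the change needed a rollback or hotfix


def change_failure_rate(changes: list[Change]) -> float:
    """Fraction of changes that caused a failure in production."""
    if not changes:
        return 0.0
    failures = sum(1 for c in changes if c.caused_failure)
    return failures / len(changes)


def median_review_hours(changes: list[Change]) -> float:
    """Median review time; less sensitive to a few very slow reviews."""
    return median(c.review_hours for c in changes)


# Made-up sample data for illustration only.
sample = [
    Change(review_hours=2.0, caused_failure=False),
    Change(review_hours=6.0, caused_failure=False),
    Change(review_hours=10.0, caused_failure=True),
    Change(review_hours=30.0, caused_failure=False),
]

print(f"Change failure rate: {change_failure_rate(sample):.0%}")
print(f"Median review time: {median_review_hours(sample):.1f} h")
```

Comparing these numbers before and after an AI rollout, next to PR count, helps show whether faster output is being paid for with slower reviews or more failed changes.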

Third: shift engineering value from “writing code” to “validating code and architecting systems.” Westerhold explains: “The real value of an engineer is no longer just in writing code. It’s in prompt engineering, solution architecture and validating AI-generated outputs.” That’s the mindset shift required. Junior developers become code validators. Senior developers become system architects. AI handles the typing.

Fourth: don’t wait for perfect conditions. Organizations delaying operationalization until they “fix everything first” will fall behind competitors who improved architecture while scaling AI simultaneously. The window closes faster than most engineering leaders expect.

Key Takeaways

- AI amplifies existing organizational strengths and weaknesses—tool choice matters far less than architectural and process foundations

- Seven capabilities determine success: AI governance, data ecosystems, internal data access, version control, small batches, user focus, and platform quality

- Team archetype predicts AI outcomes better than AI tool selection—Legacy Bottleneck teams should fix fundamentals before scaling AI

- Organizations have roughly 12 months to operationalize AI before competitive gaps become structural and irreversible

- Platform engineering, evidence-based optimization, and architectural focus matter far more than buying enterprise AI licenses

The DORA 2025 paradox challenges the fundamental assumption driving AI tool investment: that individual developer productivity translates to organizational outcomes. It doesn’t. Organizations win by strengthening the system AI amplifies, not by buying better amplifiers.