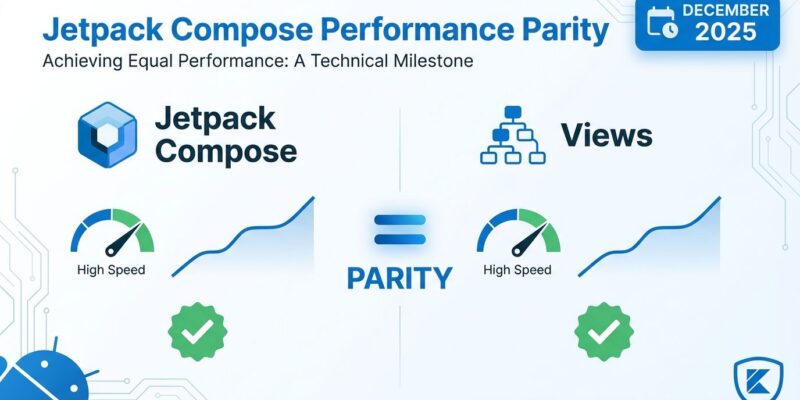

Google’s Jetpack Compose December 2025 release (BOM 2025.12.00) delivers the performance milestone Android developers have been waiting for: in Google’s internal scroll benchmarks, Compose now performs on par with Views. This closes a debate that has run since Compose 1.0 and removes the last major technical objection to adopting Compose. The “Compose is slow” argument is over.

Performance Parity Achieved After a Multi-Year Journey

The December release marks a turning point for Android UI development. Google’s official announcement doesn’t hedge: Compose now matches Views performance in internal scroll benchmarks, with scroll jank reduced to just 0.2% during long scrolling tests. Scroll jank is exactly the metric developers cite when arguing that Views are faster than Compose, and by that measure the gap has closed.

This milestone didn’t happen overnight. Since Compose 1.0 launched in 2021, the Android team has systematically addressed performance bottlenecks through runtime optimizations, compiler improvements, and architectural changes. The December 2025 release (Compose 1.10) represents the culmination of that work. Performance parity isn’t a claim anymore; it’s measured reality.

What changed? Most importantly, pausable composition is now enabled by default in lazy layout prefetch, and it is the technical change that makes parity possible.

How Pausable Composition Eliminates Frame Drops

Pausable composition is a fundamental change to how the Compose runtime schedules work. Previously, once a composition started, it had to run to completion. If you had complex UI—nested composables, heavy calculations, large lists—that work could block the main thread longer than a single frame budget (16.67ms at 60fps). Result: dropped frames, janky scrolling, frustrated users.
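To make the frame budget concrete, the arithmetic can be written out in a few lines. This is a simplified model with hypothetical function names (frameBudgetMs, minDeadlinesMissed): it assumes the blocking work starts at the beginning of a frame and ignores the layout and drawing time a real frame also needs, so its counts are lower bounds.

```kotlin
import kotlin.math.floor

// Time available per frame, in milliseconds, at a given display refresh rate.
fun frameBudgetMs(refreshRateHz: Int): Double = 1000.0 / refreshRateHz

// Simplified model: the number of frame deadlines that pass while one
// uninterruptible block of main-thread work runs, assuming it starts at the
// beginning of a frame. Layout and drawing time are ignored, so this is a
// lower bound on the frames that can't be produced on time.
fun minDeadlinesMissed(blockingWorkMs: Double, refreshRateHz: Int): Int =
    floor(blockingWorkMs / frameBudgetMs(refreshRateHz)).toInt()
```

At 60 Hz a 40 ms block of composition overruns at least two deadlines; at 120 Hz the budget shrinks to about 8.33 ms, and the same block overruns at least four.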

The new behavior is smarter. When the runtime prefetches upcoming items in a lazy layout, it now tracks the remaining frame time budget and can “pause” that composition work when time runs short, then resume it in a later frame, so no single composition monopolizes the main thread. This requires no code changes. The CacheWindow APIs introduced in Compose 1.9 complement it by letting you control how much content lazy layouts prefetch and keep composed ahead of and behind the visible viewport.
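The pause-and-resume idea can be illustrated with a toy scheduler. This is a conceptual model, not the Compose runtime’s implementation: the function name runComposition, the notion of fixed-cost work units, and the per-frame budget are all assumptions made for illustration.

```kotlin
// Conceptual model of pausable vs. run-to-completion composition.
// NOT the Compose runtime's scheduler; names and cost model are hypothetical.
//
// unitCostsMs: cost of each indivisible unit of composition work, in ms.
// budgetMs:    spare time available for this work in each frame.
// pausable:    if false, all units run back to back once started.
// Returns the time spent on this work in each successive frame.
fun runComposition(unitCostsMs: List<Double>, budgetMs: Double, pausable: Boolean): List<Double> {
    if (!pausable) {
        // Run to completion: everything lands in one frame, however long it takes.
        return listOf(unitCostsMs.sum())
    }
    val perFrame = mutableListOf<Double>()
    var usedThisFrame = 0.0
    for (cost in unitCostsMs) {
        // Pause point: if the next unit would overrun this frame's budget,
        // yield and resume in the next frame. A unit larger than the whole
        // budget still runs alone, since units can't be split in this model.
        if (usedThisFrame > 0.0 && usedThisFrame + cost > budgetMs) {
            perFrame += usedThisFrame
            usedThisFrame = 0.0
        }
        usedThisFrame += cost
    }
    if (usedThisFrame > 0.0) perFrame += usedThisFrame
    return perFrame
}
```

With five 4 ms units and an 8 ms budget, the run-to-completion version spends 20 ms in a single frame, while the pausable version spreads the same work over three frames of 8, 8, and 4 ms.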

Additional improvements stack on top. Background text prefetching offloads text measurement to background threads via LocalBackgroundTextMeasurementExecutor, warming text layout caches so the main thread finds results ready instead of measuring mid-frame. Optimizations to Modifier.onPlaced and Modifier.onVisibilityChanged reduce their overhead. Google’s performance deep-dive details the technical approach, but the developer experience is the point: Compose just got faster, and you didn’t have to change your code.
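The cache-warming pattern behind background text prefetching can be sketched in plain Kotlin. This is a conceptual model under stated assumptions: TextMeasureCache and its measure function are hypothetical, and the sketch does not use LocalBackgroundTextMeasurementExecutor or Compose’s real text layout cache. A background executor simply fills a thread-safe map that the UI thread reads later.

```kotlin
import java.util.concurrent.ConcurrentHashMap
import java.util.concurrent.ExecutorService
import java.util.concurrent.atomic.AtomicInteger

// Conceptual model only: TextMeasureCache is hypothetical, not a Compose API.
// `measure` stands in for an expensive text measurement.
class TextMeasureCache(private val measure: (String) -> Float) {
    private val cache = ConcurrentHashMap<String, Float>()

    // Counts measurements that had to run synchronously on the caller's
    // thread because the value was not prefetched (cache misses).
    val synchronousMeasurements = AtomicInteger(0)

    // Schedule measurement of upcoming text on a background executor,
    // warming the cache before the UI asks for it.
    fun prefetch(executor: ExecutorService, texts: List<String>) {
        for (text in texts) {
            executor.execute { cache.computeIfAbsent(text) { measure(it) } }
        }
    }

    // Called from the UI thread: use the warmed entry if present,
    // otherwise fall back to measuring right now.
    fun get(text: String): Float {
        cache[text]?.let { return it }
        synchronousMeasurements.incrementAndGet()
        return cache.computeIfAbsent(text) { measure(it) }
    }
}
```

If prefetching finishes before the UI needs the text, get never measures on the caller’s thread; a miss falls back to synchronous measurement, which is the cost prefetching tries to keep off the main thread.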

What This Means for Android Development

Performance parity shifts the Compose adoption calculus. The last major technical objection, “Views are faster,” no longer holds. New Android projects should strongly consider Compose the default choice, not the experimental alternative. Companies have already made this bet: Airbnb, Disney+, Meta (Threads), Spotify, ChatGPT, and Google Play all use Compose in production, reporting faster delivery cycles and fewer defects.

For teams still on Views, the migration cost/benefit analysis just changed. Performance was the most defensible reason to delay. What remains are adoption challenges, such as learning declarative UI paradigms, retraining teams, and integrating with legacy View code, but those are transition costs, not technical deal-breakers. Industry consensus in 2025 is that Compose has matured past the “wait and see” phase.

Developer skills are shifting too. Declarative UI and Kotlin expertise are becoming baseline expectations for Android roles. The debate is moving from “should we use Compose?” to “how do we migrate efficiently?”

The Ecosystem Effect: Kotlin 2.0 Strong Skipping

Compose didn’t achieve performance parity alone. Strong Skipping mode, introduced as an experimental option in Compose compiler 1.5.4 and enabled by default since Kotlin 2.0.20 (the Compose compiler now ships with Kotlin), largely eliminated what developers called “Stability Worry”: unnecessary recompositions caused by unstable parameters. Under Strong Skipping, composables with unstable parameters still become skippable, because unstable parameters are compared by instance equality and stable ones by equals(). This sharply reduces the number of unskippable composables without requiring code changes.
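The skip decision Strong Skipping makes can be modeled in a few lines. Param and canSkip are hypothetical names for illustration, not compiler output; the model captures only the comparison rule (equals() for stable parameters, instance identity for unstable ones), not the code the compiler actually generates.

```kotlin
// Conceptual model of the Strong Skipping comparison rule.
// Param and canSkip are hypothetical; the real compiler emits different code.
data class Param(val value: Any?, val isStable: Boolean)

// A composable can skip recomposition only if every parameter is "unchanged":
// stable parameters are compared with equals(), unstable ones with ===.
fun canSkip(previous: List<Param>, current: List<Param>): Boolean {
    require(previous.size == current.size) { "parameter lists must match" }
    return previous.zip(current).all { (before, after) ->
        if (after.isStable) before.value == after.value
        else before.value === after.value
    }
}
```

Passing a freshly created but equal MutableList therefore still triggers recomposition, while passing the same instance lets the composable skip.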

This is ecosystem maturity in action. Performance improvements come from compiler optimizations working in concert with runtime changes. Developers get the benefits for free, just by upgrading dependencies. The official performance documentation shows how Kotlin and Compose tooling evolved together to solve the performance problem systematically.

Beyond Performance: What’s Next?

With performance solved, the December release also ships features that signal Compose’s next phase. The new retain API keeps values across configuration changes without serializing them, unlike rememberSaveable, which needs values that can be saved to a Bundle (directly or through a Saver). That makes it useful for objects such as ExoPlayer instances, which can survive a device rotation without interrupting media playback. Material 3 1.4 adds components such as HorizontalCenteredHeroCarousel, along with mode switching for TimePicker. Animation improvements include dynamic shared elements and veiled transitions.
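Why avoiding serialization matters can be shown with a plain-Kotlin model. It neither uses nor reproduces the signature of Compose’s retain API; RetainedStore, FakePlayer, and Screen are hypothetical names. The store holds objects by reference and outlives the recreated UI, so the same player instance, with its playback position, comes back after a simulated rotation.

```kotlin
// Conceptual model only: not Compose's retain API.
// A retained value is held by reference in an owner that outlives the
// recreated UI, so the exact same instance comes back; nothing is serialized.
class RetainedStore {
    private val values = mutableMapOf<String, Any>()

    @Suppress("UNCHECKED_CAST")
    fun <T : Any> retain(key: String, factory: () -> T): T =
        values.getOrPut(key, factory) as T
}

// Stands in for an object like ExoPlayer that holds live state and
// can't reasonably be written into a Bundle.
class FakePlayer {
    var positionMs = 0L
}

// Each Screen instance plays the role of UI recreated after a configuration change.
class Screen(store: RetainedStore) {
    val player: FakePlayer = store.retain("player") { FakePlayer() }
}
```

A serialization-based approach would have to rebuild the player from saved data; here the second Screen simply receives the original object.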

These features point to Compose’s future: now that performance parity is achieved, development focus shifts to developer experience, tooling, and ecosystem richness. Performance was the last technical barrier. What comes next is making Compose the obvious choice for productivity, not just capability.

The Compose performance debate is over. Developers who dismissed it as “too slow” no longer have data to back that claim. New Android projects have zero performance-based justification for choosing Views. If you’re starting a project in 2025, Compose should be your default—and you won’t be giving up performance to get declarative UI benefits.