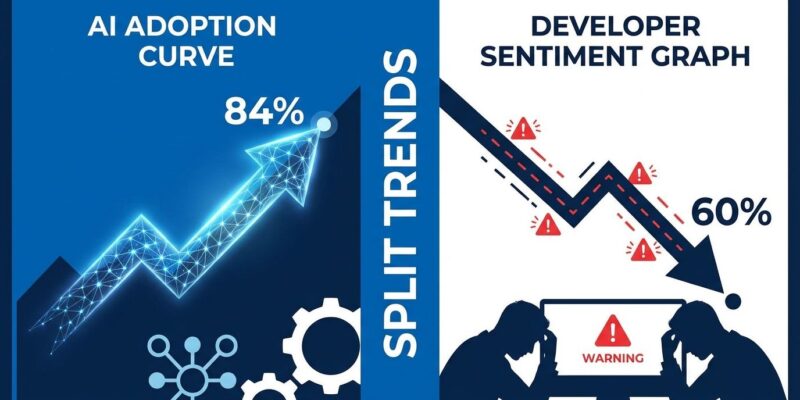

The 2025 Stack Overflow Developer Survey (49,000 developers) and JetBrains State of Developer Ecosystem 2025 (24,534 developers) reveal a critical paradox in the software industry: the share of developers using or planning to use AI coding tools rose from 76% to 84% year-over-year, while positive developer sentiment fell from 72% to just 60% over the same period. That is a 12-percentage-point drop in sentiment despite near-universal adoption, a steep decline for tools that nearly everyone now uses. Across the 73,534 developers surveyed, the message is clear: developers are using AI tools, but they’re not happy about it.

The Trust Collapse: Forced Adoption Meets Developer Reality

Developer trust in AI coding tools fell in 2025 on three key metrics. Positive sentiment dropped from 72% to 60%, trust in AI accuracy from 40% to 29%, and job security confidence from 68% to 64%. These aren’t minor fluctuations; they suggest a real shift in how developers perceive AI tools as real-world use accumulates.

The paradox deepens when you consider what is driving adoption. High usage doesn’t necessarily signal enthusiasm; it may partly reflect workplace pressure. According to the JetBrains survey, 68% of developers expect employers to require AI proficiency soon. When adoption is driven by expected mandates rather than organic enthusiasm, resentment is a more likely outcome than satisfaction, and developers end up checking compliance boxes rather than embracing transformative tools.

This is the gap between marketing hype and developer reality. AI tool vendors promised “10x developers.” Developers got tools that are sometimes helpful, frequently frustrating, and increasingly mandatory.

The “Almost Right” Problem: Why AI Can Make Developers Slower

The Stack Overflow survey identified developers’ top frustration: 66% cite “AI solutions that are almost right, but not quite,” and 45% say debugging AI-generated code is more time-consuming. The “almost right” problem is especially frustrating because you have to read and understand the generated code, then track down subtle issues, then often rewrite it, which can take longer than starting from scratch.
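To make the “almost right” failure mode concrete, here is a hypothetical illustration (not taken from either survey): a pagination helper of the kind an assistant might plausibly suggest. The function names are invented for this sketch. The first version passes a casual check but silently drops the final partial page; the fix is small, which is exactly why the bug is easy to miss in review.

```python
# Hypothetical "almost right" suggestion: looks correct, but floor division
# drops the final partial page (25 items at 10 per page -> 2 pages, not 3).
def page_count_almost_right(total_items: int, page_size: int) -> int:
    return total_items // page_size


# Corrected version: ceiling division, so a trailing partial page still counts.
def page_count(total_items: int, page_size: int) -> int:
    if page_size <= 0:
        raise ValueError("page_size must be positive")
    return -(-total_items // page_size)  # integer ceiling division, no floats


if __name__ == "__main__":
    # Both versions agree on evenly divisible inputs...
    print(page_count_almost_right(20, 10), page_count(20, 10))  # 2 2
    # ...and diverge only on the edge case a quick review is likely to skip.
    print(page_count_almost_right(25, 10), page_count(25, 10))  # 2 3
```

The two versions agree whenever the item count divides evenly, so a reviewer who only tries round numbers would never see the difference.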

A July 2025 randomized controlled trial by METR (Model Evaluation & Threat Research) exposed a stunning perception gap. The study tracked 16 experienced developers completing 246 tasks in mature projects. Objective measurement showed developers were 19% slower when using AI tools. However, those same developers believed they were 20% faster. That’s a 39-percentage-point gap between perception and reality.
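The 39-point figure comes from putting both numbers on one signed scale, where a speedup is positive and a slowdown is negative; naively subtracting 19 from 20 would suggest a 1-point gap. A minimal worked version of that arithmetic, using only the figures quoted above:

```python
# Signed scale: positive = faster with AI, negative = slower with AI.
perceived_change_pct = 20.0   # developers' self-estimate: "20% faster"
measured_change_pct = -19.0   # measured outcome: 19% slower

gap_points = perceived_change_pct - measured_change_pct
print(f"Perception gap: {gap_points:.0f} percentage points")  # Perception gap: 39 percentage points
```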

The productivity loss isn’t mysterious. Developers spend 9% of their time reviewing and cleaning AI outputs, plus another 4% waiting for AI generations: 13% of development time consumed by managing AI tools. Meanwhile, 75% of developers say they still ask another person for help when they don’t trust an AI answer, a sign that colleagues remain the fallback developers rely on.

Code Quality Crisis: Bugs, Security, and Technical Debt

Independent research points to measurable quality problems with AI-generated code. Uplevel’s study of developers using GitHub Copilot found a 41% higher bug rate, and Google’s 2024 DORA report associated a 25% increase in AI adoption with a 7.2% drop in delivery stability. GitClear’s analysis of code changes describes “downward pressure on code quality” as developers accept AI suggestions without fully understanding them. Acceptance rates are telling too: only 33% of AI suggestions are accepted overall, and just 20% of individual lines of code.

The JetBrains survey identified developers’ top five concerns about AI tools: inconsistent quality of generated code, limited understanding of complex logic, privacy and security risks, potential skill degradation, and lack of context awareness. These aren’t abstract worries; they line up with the quality problems documented above.

Security threats are evolving too. A new attack vector called “slopsquatting” exploits AI hallucinations: an AI tool suggests a package name that doesn’t exist, attackers register a malicious package under that name, and developers who trust the suggestion unknowingly pull malicious code into their projects. Black Duck’s director of product management warns that “hallucinations along with intentional malicious code injection are definitely a concern,” noting that hallucinations create unintended functionality while malicious injection creates security vulnerabilities. In a GitHub survey, 97% of developers reported having used AI coding tools, but widespread use doesn’t mean the risks are widely understood.
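One simple mitigation is to treat every AI-suggested dependency as unvetted until a person has checked it. Checking that a package exists on the registry isn’t enough, because slopsquatting works precisely by registering the hallucinated name. The sketch below instead compares requirements-style lines against a team-maintained allowlist. The function names and the allowlist are hypothetical; only the name-normalization rule (lowercase, with runs of `-`, `_` and `.` treated as equivalent) follows Python’s PEP 503. It ignores lines it can’t parse, such as URL and editable (`-e`) requirements, so a real tool would need to handle those separately.

```python
from __future__ import annotations

import re

# PEP 503 normalization: names are case-insensitive and runs of "-", "_", "."
# are equivalent, so "NumPy" and "numpy" compare equal.
def normalize(name: str) -> str:
    return re.sub(r"[-_.]+", "-", name).lower()


# Leading project name in a requirements-style line, e.g. "requests>=2.31".
_NAME = re.compile(r"^\s*([A-Za-z0-9][A-Za-z0-9._-]*)")


def requirement_name(line: str) -> str | None:
    """Return the normalized project name, or None for blank/comment/unparsed lines."""
    line = line.split("#", 1)[0]  # drop trailing comments
    match = _NAME.match(line)
    return normalize(match.group(1)) if match else None


def unvetted_dependencies(lines: list[str], allowlist: set[str]) -> list[str]:
    """Return names (in first-seen order) that are not on the vetted allowlist."""
    vetted = {normalize(n) for n in allowlist}
    flagged: list[str] = []
    for line in lines:
        name = requirement_name(line)
        if name is not None and name not in vetted and name not in flagged:
            flagged.append(name)
    return flagged


if __name__ == "__main__":
    vetted = {"requests", "numpy"}
    ai_suggested = [
        "requests>=2.31",
        "NumPy==1.26.4  # pinned",
        "hypothetical-json-helper==0.3",  # an invented name an assistant might suggest
    ]
    print(unvetted_dependencies(ai_suggested, vetted))
```

Running it prints `['hypothetical-json-helper']`: the two vetted packages pass despite differences in case and version pins, and the unfamiliar name is flagged for human review before anything is installed.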

Where AI Actually Helps: Realistic Expectations

Despite the problems, AI tools do provide value in specific scenarios. The JetBrains survey found that 85% of developers regularly use AI for coding, and 90% save at least one hour weekly through automation. A smaller subset, 20%, saves eight or more hours weekly, equivalent to a full workday. GitHub’s own Copilot research, meanwhile, reports that 73% of developers stay in flow state and 87% preserve mental effort during repetitive tasks.

The pattern is clear: AI excels at routine, repetitive tasks like boilerplate generation, scaffolding, and parsing documentation. However, it struggles with complex problem-solving requiring domain knowledge, business context, or institutional memory built over years. Tasks needing clear patterns and examples work well. Tasks requiring architectural decisions or deep system understanding don’t.

The industry needs to recalibrate expectations. AI delivers modest gains on specific tasks, on the order of 20% by some estimates, not the “10x developer” promise marketed by vendors. When expectations are realistic (a useful assistant for repetitive work) rather than overhyped (a revolutionary replacement for developer thinking), developers are less likely to feel disappointed. Much of the current trust crisis stems from the gap between what was promised and what was delivered.

The Vendor-Reality Gap and What Happens Next

Vendor-funded studies paint a very different picture from independent surveys. GitHub and Accenture report that 90% of developers feel more fulfilled and 95% enjoy coding more when using Copilot. The Stack Overflow survey, which isn’t run by a coding-assistant vendor, shows only 60% positive sentiment and 29% trust in accuracy. The questions aren’t identical, but a 30-35 percentage-point gap between the vendor figures and independent sentiment is hard to ignore.

The adoption-pressure problem compounds the trust issue. With 68% of developers expecting employers to require AI proficiency, and 72% saying that “vibe coding” (generating entire applications from prompts) isn’t part of their professional work, adoption risks becoming a checkbox exercise rather than a genuine productivity improvement. Notably, professional developers report more positive sentiment (61%) than those learning to code (53%), so the data doesn’t show that professional experience alone breeds disillusionment.

If positive sentiment falls another 10 points, to 50% or lower, a backlash against AI tool mandates looks likely: developers will resist, companies may face retention problems, and the AI coding tool market could face a reckoning. The industry may have about a year to prove that AI tools deliver on their promises, or to admit they were oversold and recalibrate.

Key Takeaways

- AI coding tool adoption hit 84% in 2025, but positive developer sentiment fell from 72% to 60%, a 12-point drop in a single year

- In METR’s trial, experienced developers were 19% slower with AI tools but believed they were 20% faster, a 39-point gap between perception and measured reality

- 66% of developers cite “almost right” AI solutions as their top frustration and 45% say debugging AI-generated code takes longer, while one study found a 41% higher bug rate among developers using AI tools

- Vendor studies report 90% of developers feeling more fulfilled, while independent surveys show 60% positive sentiment, a 30-point gap that highlights the trust divide between AI tool makers and developers

- Expected employer mandates (68% of developers anticipate AI proficiency requirements) risk breeding resentment rather than enthusiasm, as developers are pushed toward tools they’re losing faith in