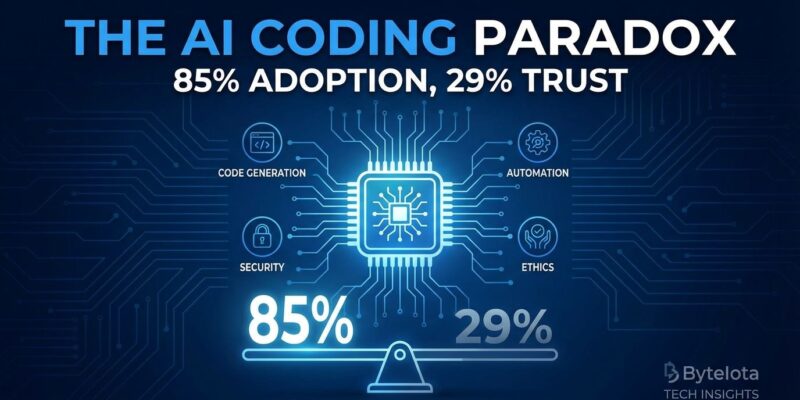

Eighty-five percent of developers now use AI coding tools regularly, yet trust in these tools has plummeted from 40% to 29% in just one year, an 11-percentage-point drop or a 27.5% decline in relative terms, according to JetBrains’ October 2025 State of Developer Ecosystem survey of 24,534 developers. Distrust is rising as well: 84% of developers use or plan to use AI tools, but 46% don’t trust the accuracy of what those tools produce, up from 31% last year. The industry is sleepwalking into mass adoption of technology it fundamentally distrusts.

You Think AI Makes You Faster. The Data Says You’re Wrong

Research from METR shows experienced open-source developers take 19% longer to complete coding tasks when using AI tools compared to working without them. Yet these same developers estimated they were sped up by 20% on average—a staggering 39-percentage-point gap between perception and reality.

The METR study involved 16 experienced developers from major open-source repositories averaging 22,000+ stars, who completed 246 issues using Cursor Pro with Claude 3.5/3.7 Sonnet models. Tasks averaged 2 hours each, and developers were paid $150/hour to ensure serious participation. Code quality was also similar in the AI-allowed and AI-disallowed conditions, so the slowdown cannot be explained as extra time spent producing better code.

This destroys the core premise driving AI tool adoption. If AI makes experienced developers slower rather than faster, the entire value proposition collapses. The perception gap also shows how far hype and marketing have drifted from measurable reality.

Trust Collapse Meets Adoption Explosion

While trust cratered, adoption exploded. Developer trust in AI coding accuracy dropped from 40% in 2024 to just 29% in 2025. Over the same period, regular AI tool usage jumped from 49% to 85%, a 36-percentage-point rise, or roughly 73% in relative terms. This creates a paradox: an industry adopting a technology even as its confidence in that technology evaporates.
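
The figures in this section mix two kinds of change: percentage-point differences and relative (percent-of-starting-value) changes. A minimal Python sketch, using only the survey numbers quoted above, shows how each is computed; the helper names `point_change` and `relative_change` are illustrative and not taken from any survey’s methodology.

```python
def point_change(before: float, after: float) -> float:
    """Absolute change between two percentages, in percentage points."""
    return after - before


def relative_change(before: float, after: float) -> float:
    """Change relative to the starting value, expressed as a percent."""
    return (after - before) / before * 100


# Survey figures quoted in the text (percent of respondents).
trust_2024, trust_2025 = 40, 29
usage_before, usage_after = 49, 85

print(point_change(trust_2024, trust_2025))                  # -11
print(round(relative_change(trust_2024, trust_2025), 2))     # -27.5
print(point_change(usage_before, usage_after))               # 36
print(round(relative_change(usage_before, usage_after), 2))  # 73.47
```

Stating both forms avoids ambiguity: trust fell by 11 points, which is 27.5% of where it started.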

The JetBrains survey reveals deeper concerns. Developers cite inconsistent code quality, limited understanding of complex logic, privacy risks, skill degradation, and lack of context awareness as top worries. Additionally, 68% of developers expect their employers will soon require AI tool proficiency, suggesting adoption is driven by fear and coercion rather than measured value.

Industries don’t normally adopt technology as trust declines—that’s backwards. This pattern suggests decisions driven by peer pressure (everyone else is using it), FOMO (falling behind), and hype (perceived necessity) rather than actual productivity gains.

Critical Thinking Breakdown

Research from Saarland University, presented at the 2025 IEEE/ACM International Conference on Automated Software Engineering, shows developers working with AI assistants are significantly more likely to accept AI-generated suggestions without critical evaluation. In contrast, developers working with humans ask critical questions and carefully examine contributions.

The study divided 19 programming students into pairs: 6 pairs of two students each, and 7 students each paired with GitHub Copilot. AI-assisted teams had less intense interactions covering a narrower range of topics, focused primarily on the code itself. Human pairs engaged in broader discussions with more digressions, which the researchers characterized as essential for knowledge exchange. Professor Sven Apel noted that programmers working with AI assistants “were more likely to accept AI-generated suggestions without critical evaluation” and assumed the code would function correctly.

This uncritical reliance creates technical debt—hidden future costs from undetected mistakes accumulating over time. Furthermore, developers aren’t just using AI tools; they’re changing how they evaluate code, shifting from critical analysis to passive acceptance. This cognitive shift may be more damaging than any individual bug.

The “Almost Right But Not Quite” Problem

GitHub Copilot quality issues have intensified. Some developers report the tool “changed from a reliable, productive and educational co-intelligence into a frantic, destructive and dangerous actor.” Research has also projected that code churn (lines reverted or updated within two weeks of being written) would double in 2024 compared to the 2021 pre-AI baseline.
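
Code churn, as defined here, is a simple ratio. The sketch below assumes a hypothetical per-line record (`LineChange`) holding the date a line was added and the date it was next reverted or modified; real analyses derive this from version-control history and may count lines and time windows differently.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class LineChange:
    # Hypothetical record: when a line was added, and when (if ever)
    # it was next reverted or modified.
    added: date
    next_modified: Optional[date] = None


def churn_rate(lines: List[LineChange], window: timedelta = timedelta(days=14)) -> float:
    """Fraction of added lines that were reverted or updated within `window`."""
    if not lines:
        return 0.0
    churned = sum(
        1
        for line in lines
        if line.next_modified is not None
        and line.next_modified - line.added <= window
    )
    return churned / len(lines)


history = [
    LineChange(date(2024, 3, 1), date(2024, 3, 5)),   # changed after 4 days: churn
    LineChange(date(2024, 3, 1), date(2024, 4, 20)),  # changed much later: not churn
    LineChange(date(2024, 3, 2)),                     # never touched again
    LineChange(date(2024, 3, 3), date(2024, 3, 10)),  # changed after 7 days: churn
]
print(churn_rate(history))  # 0.5
```

Under this definition, a doubling of churn means twice the share of new lines being rewritten or thrown away within two weeks.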

Developers report that Copilot’s initial outputs contain 60% speculative content and that revised versions still contain 30%; in these reports, model relationships were 90% guessed and database schemas 100% guessed. A 2025 study analyzing 2,025 coding problems found that Copilot’s first solution is less likely to be correct (the study advises skipping it), that novel problems are unlikely to be solved by Copilot, and that checking all solutions is necessary even though “the effort is usually not justified.”

“Almost right but not quite” is worse than completely wrong. Wrong code fails fast and gets caught in testing. “Almost right” code passes initial review, gets merged, and creates bugs discovered later—often in production. This means more time spent debugging than was saved initially, a net negative for productivity.
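
A hypothetical illustration of “almost right” code, written for this article rather than taken from actual Copilot output: a Python helper that splits a list into fixed-size chunks. Both versions pass a happy-path test with evenly divisible input, but the first silently drops the trailing partial chunk.

```python
def chunk_almost_right(items, size):
    # Looks plausible, but the range stops early: when len(items) is not
    # a multiple of size, the trailing partial chunk is silently dropped.
    return [items[i:i + size] for i in range(0, len(items) - size + 1, size)]


def chunk_correct(items, size):
    # Steps through every start index, so the final partial chunk is kept.
    return [items[i:i + size] for i in range(0, len(items), size)]


# A happy-path test that both versions pass: 6 items, chunks of 3.
assert chunk_almost_right([1, 2, 3, 4, 5, 6], 3) == [[1, 2, 3], [4, 5, 6]]
assert chunk_correct([1, 2, 3, 4, 5, 6], 3) == [[1, 2, 3], [4, 5, 6]]

# Uneven input exposes the bug: the 7th item vanishes without any error.
print(chunk_almost_right([1, 2, 3, 4, 5, 6, 7], 3))  # [[1, 2, 3], [4, 5, 6]]
print(chunk_correct([1, 2, 3, 4, 5, 6, 7], 3))       # [[1, 2, 3], [4, 5, 6], [7]]
```

Nothing crashes and no exception is raised; the data simply goes missing, which is why defects like this tend to surface late.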

The Adoption Trap

Despite declining trust and questionable productivity gains, developers can’t stop. The adoption trap is real: usage is driven by anxiety rather than value. When 68% expect employers will soon require AI proficiency, adoption becomes coercive, not voluntary. Four out of five companies now use third-party AI tools in their development workflows, leaving the skeptical 15% of developers who don’t use AI tools regularly increasingly isolated.

Developers do report saving time: nine in ten save at least one hour weekly, and one in five save eight or more hours (a full workday). However, these figures are self-reported, the same kind of estimate METR found to be off by 39 percentage points, and the gains likely come from simple boilerplate tasks rather than the complex work where METR measured a 19% slowdown. The industry is creating a bubble where usage and investment increase even as trust and actual productivity decline.

Key Takeaways

- AI coding tools don’t make experienced developers faster: in METR’s study they made experienced open-source developers 19% slower, while those developers believed they were 20% faster, a massive 39-percentage-point perception gap driven by hype

- Trust has collapsed from 40% to 29% in one year while regular usage exploded from 49% to 85%, creating a paradox in which 84% use or plan to use tools that 46% don’t trust; adoption is driven by fear and peer pressure, not measured value

- Developers working with AI assistants are more likely to accept suggestions without critical evaluation than those working with human colleagues, creating technical debt from undetected mistakes and degrading fundamental code review skills

- Code churn is projected to double compared to pre-AI baselines as “almost right but not quite” suggestions create bugs discovered later, making debugging time exceed initial time savings

- The adoption trap is real: 68% expect AI proficiency will be required, forcing adoption through coercion rather than demonstrated productivity—question the hype, trust the data, and it’s okay to be part of the skeptical 15%