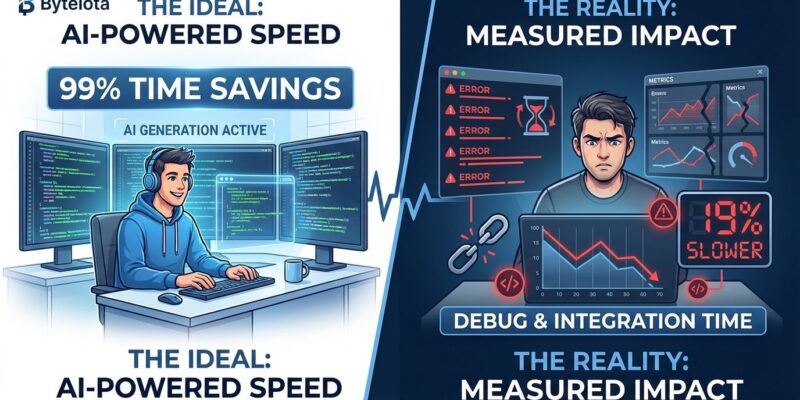

Ninety-nine percent of developers report time savings from AI coding tools, and 68% say they save more than 10 hours a week, according to major 2025 surveys from JetBrains (24,534 developers), Stack Overflow (49,000+), and Atlassian (3,500). Yet METR’s rigorous controlled study, published in July 2025, found the opposite: experienced developers took 19% longer to complete tasks when AI tools were allowed. The 43-percentage-point perception gap between what developers expected (a 24% reduction in completion time) and what was measured (a 19% increase) is not just a story about AI hype. It exposes a deeper crisis: 66% of developers don’t believe the productivity metrics used to evaluate their work reflect their real contributions.

This measurement crisis affects everything from performance reviews and compensation to AI investment ROI and engineering management strategy. In response, the industry is scrambling to replace broken DORA metrics with holistic alternatives, but no consensus exists yet.

99% Report Time Savings. Reality: 19% Slower.

METR’s July 2025 study stands out as one of the few rigorous randomized controlled trials of AI coding productivity on real-world work. Researchers recruited 16 experienced open-source developers, contributors to repositories averaging 22,000+ stars and 1 million+ lines of code, who supplied 246 real issues from their own repositories. Each issue was randomly assigned to allow or disallow AI tools, mainly Cursor Pro with Claude 3.5/3.7 Sonnet. The finding: developers took 19% longer to complete issues when AI was allowed.

The perception gap is staggering. Before starting tasks, developers forecast that AI would reduce completion time by 24%. After finishing, they still estimated it had saved them 20%. The measured effect was a 19% increase in completion time. Comparing the forecast with the measurement gives a 43-percentage-point gap between belief and reality; even the after-the-fact estimate misses by 39 points.
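The gap figures are plain percentage-point arithmetic. This sketch reproduces them from the three METR numbers quoted above, using a signed convention chosen for this example (negative means less time with AI, positive means more). The function names and the 120-minute baseline task are hypothetical illustrations, not part of the study.

```python
# Perception-gap arithmetic from the METR figures quoted in the text.
# Convention (assumed for this sketch): change in completion time, in percent;
# negative = AI saved time, positive = AI cost time.
FORECAST = -24.0  # expected before the tasks
POST_HOC = -20.0  # estimated after the tasks
MEASURED = 19.0   # measured in the trial

def gap_points(believed_pct: float, measured_pct: float) -> float:
    """Distance between a believed and a measured change, in percentage points."""
    return abs(measured_pct - believed_pct)

def task_minutes(baseline_min: float, change_pct: float) -> float:
    """Completion time after applying a percentage change to a baseline."""
    return baseline_min * (1 + change_pct / 100)

print(gap_points(FORECAST, MEASURED))  # 43.0
print(gap_points(POST_HOC, MEASURED))  # 39.0

# A hypothetical 120-minute task, as developers expected it vs. as measured.
print(round(task_minutes(120, FORECAST), 1))  # 91.2
print(round(task_minutes(120, MEASURED), 1))  # 142.8
```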

Despite being measurably slower, 69% of participants kept using AI tools after the study ended. This suggests something beyond productivity: AI tools can feel productive even when they aren’t. Developers may get psychological benefits, such as faster initial code generation and reduced cognitive load during boilerplate work, that don’t translate into faster task completion.

The discrepancy likely stems from hidden costs that METR examined but most studies ignore: time spent crafting prompts, reviewing AI-generated code, debugging “almost right” outputs, and context-switching between human and AI workflows. Stack Overflow’s 2025 survey points the same way: 66% of developers cite AI solutions that are “almost right, but not quite” as a top frustration, and 45% say debugging AI-generated code is more time-consuming.
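One way to see how a tool can speed up code generation yet slow down the whole task is to add up every component of task time. The sketch below is a toy accounting model, not METR’s methodology: the `TaskTime` fields mirror the hidden costs listed above, and every minute value is invented for illustration.

```python
from dataclasses import dataclass

@dataclass
class TaskTime:
    """Minutes spent on one task; the categories follow the hidden costs above."""
    writing: float          # typing code, or generating it with AI
    prompting: float = 0.0  # crafting and refining prompts
    reviewing: float = 0.0  # reading AI output before accepting it
    debugging: float = 0.0  # fixing code that is "almost right"
    switching: float = 0.0  # context-switching between human and AI workflows

    def total(self) -> float:
        return (self.writing + self.prompting + self.reviewing
                + self.debugging + self.switching)

def change(before: float, after: float) -> float:
    """Percent change from before to after; positive means more time."""
    return 100.0 * (after - before) / before

# Invented numbers: the visible step (writing) shrinks while the overhead grows.
manual = TaskTime(writing=80, debugging=20)
assisted = TaskTime(writing=20, prompting=15, reviewing=25, debugging=40, switching=15)

print(f"writing: {change(manual.writing, assisted.writing):+.1f}%")  # writing: -75.0%
print(f"total:   {change(manual.total(), assisted.total()):+.1f}%")  # total:   +15.0%
```

In this toy model the step that feels fastest is the only one that improved, which is one plausible reading of the perception gap rather than a measured result.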

66% Don’t Believe Metrics Reflect Their Work

Two-thirds of developers don’t believe current productivity metrics reflect their true contributions, according to JetBrains’ 2025 State of Developer Ecosystem survey. This represents a collapse of confidence in how developer work is measured, evaluated, and compensated.

Traditional DORA metrics (deployment frequency, lead time for changes, change failure rate, and mean time to recovery) were designed for pre-AI DevOps workflows. They primarily reward velocity (how fast code ships), and even their two stability measures track whether deployments break, not value (the quality of outcomes). In AI-assisted development, DORA can’t distinguish between human-written and AI-assisted code, can’t measure time spent debugging AI outputs, and can’t account for the 40% increase in delivery instability that comes with AI adoption.
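To make this concrete, here is a minimal sketch of how the four DORA metrics can be derived from deployment records. The `Deployment` record and `dora_metrics` function are hypothetical, not part of any DORA tool, and time to restore is simplified to run from the deploy rather than from incident detection. Notice what the record lacks: no field says whether a change was AI-assisted or how long someone spent debugging it.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from statistics import median
from typing import Optional

@dataclass
class Deployment:
    deployed_at: datetime
    commit_times: list[datetime] = field(default_factory=list)  # commits shipped
    failed: bool = False                    # did this deploy cause a failure?
    restored_at: Optional[datetime] = None  # when service was restored, if failed
    # Deliberately absent: AI-assisted or not, debugging time, review effort.

def hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600

def dora_metrics(deploys: list[Deployment], window_days: int) -> dict[str, float]:
    """Compute simplified DORA metrics over a window of `window_days` days."""
    if not deploys or window_days <= 0:
        raise ValueError("need at least one deployment and a positive window")
    lead_times = [hours(d.deployed_at - c) for d in deploys for c in d.commit_times]
    failures = [d for d in deploys if d.failed]
    restores = [hours(d.restored_at - d.deployed_at)
                for d in failures if d.restored_at is not None]
    return {
        "deploys_per_day": len(deploys) / window_days,
        "median_lead_time_h": median(lead_times) if lead_times else 0.0,
        "change_failure_rate": len(failures) / len(deploys),
        "median_time_to_restore_h": median(restores) if restores else 0.0,
    }

# Three hypothetical deployments over three days; the second one fails.
t0 = datetime(2025, 7, 1, 9, 0)
deploys = [
    Deployment(t0 + timedelta(hours=4), [t0]),
    Deployment(t0 + timedelta(days=1, hours=2), [t0 + timedelta(days=1)],
               failed=True, restored_at=t0 + timedelta(days=1, hours=3)),
    Deployment(t0 + timedelta(days=2, hours=6), [t0 + timedelta(days=2)]),
]
print(dora_metrics(deploys, window_days=3))
```

Every input is a timestamp or a pass/fail flag, which is why the debugging burden described above never shows up in these numbers.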

The 2025 DORA report itself acknowledges this limitation, finding “zero evidence that speed gains are worth” the instability trade-off. Teams deploy more frequently with AI (velocity up), but change failure rates spike 40% (quality down). DORA optimizes for the wrong outcome: shipping fast, not shipping well.

Developers also weigh non-technical work heavily: 62% rate non-technical factors such as collaboration, communication, and context-switching as critical to their performance, compared with 51% for technical factors such as CI pipeline speed. DORA measures none of this. As a result, the metric system incentivizes behavior (commit frequently, deploy often) that doesn’t correlate with the work developers find most valuable: solving complex problems, collaborating effectively, and delivering quality outcomes.

Leadership Doesn’t Understand: 63%, Up From 44%

The leadership-developer gap widened sharply in 2025. JetBrains found 63% of developers say leadership doesn’t understand their pain points, up from 44% in 2024—a 19-percentage-point increase in one year. This coincides with aggressive AI tool adoption driven by executive decisions, often without developer input.

Positive sentiment toward AI tools dropped from 70%+ in 2023-2024 to 60% in 2025 as the honeymoon period ends and frustrations surface. Stack Overflow’s survey reveals stark trust erosion: 46% of developers actively distrust AI accuracy, and only 3% report “highly trusting” AI outputs. Experienced developers show the greatest caution, with the lowest trust rates and highest distrust rates across all experience levels.

The disconnect creates a vicious cycle. Leaders invest in AI based on vendor promises of 25% productivity gains. Developers experience the “almost right” problem—code that looks correct but fails in edge cases, requiring more debugging than writing from scratch. Metrics fail to capture this reality because DORA doesn’t measure debugging time or quality trade-offs. Meanwhile, Gartner’s 2025 analysis confirms the gap: while companies claim 25% productivity improvements on paper, actual net gains range from 1-14% after accounting for hidden costs.

When 63% of developers believe leadership doesn’t grasp their reality, and metrics can’t bridge that gap, organizations risk massive misallocation of capital. The METR finding—19% slower—contradicts executive assumptions, but without trusted measurement systems, these insights can’t inform better decisions.

DORA Measures Velocity, Not Value

DORA’s limitations in the AI era have accelerated an industry-wide shift toward holistic measurement frameworks. SPACE (Satisfaction and well-being, Performance, Activity, Communication and collaboration, Efficiency and flow) adds developer well-being and collaboration to traditional velocity metrics. DX Core 4 balances Speed, Effectiveness, Quality, and Business Impact. DevEx focuses specifically on developer friction points, organized around three dimensions: feedback loops, cognitive load, and flow state.

More than 360 organizations now use DX Core 4, and DX, the company behind the framework, reports measurable results: 3-12% efficiency gains, a 14% increase in R&D focus, and a 15% improvement in developer engagement. These frameworks acknowledge what DORA misses: productivity is multidimensional, not just speed.

The shift represents an industry-wide acknowledgment that velocity-focused metrics optimize for the wrong outcomes. However, adoption remains fragmented. Most companies still rely on DORA supplemented with ad-hoc developer surveys, creating a measurement patchwork that prevents cross-company comparisons and evidence-based tool decisions.

DORA co-creator Nicole Forsgren acknowledges the paradox: “Developers are using Generative AI to produce code faster than ever before, but metrics aren’t showing an overall productivity improvement. Developers become faster but do not necessarily create better software or feel more fulfilled.” The emphasis on fulfillment is telling—productivity isn’t just output, it’s sustainable output that doesn’t burn out teams.

No Consensus on How to Measure Reality

The industry has diagnosed the problem—productivity measurement is broken in the AI era—but lacks consensus on solutions. While SPACE has academic backing from Microsoft and GitHub researchers, DX Core 4 has enterprise adoption, and DevEx has developer community support, no single framework dominates. Most organizations still lean on DORA despite acknowledging its shortcomings.

This fragmentation creates a measurement vacuum. Without standardized metrics, organizations can’t compare approaches, learn from peers, or make evidence-based decisions about AI tool adoption. Developers remain stuck in a system where they feel productive (99% report time savings), controlled measurement contradicts that feeling (19% slower in METR’s trial), leadership doesn’t understand the gap (63%), and no one agrees on how to measure ground truth.

The measurement crisis will likely worsen before it resolves. AI tools continue evolving faster than metrics can adapt. The 2025 baseline—90% AI adoption, 60% positive sentiment, 66% metric distrust—establishes a new normal where productivity gains are assumed but unproven.

One trend looks durable: 68% of developers expect employers to require AI proficiency soon, regardless of productivity evidence. The question isn’t whether AI becomes mandatory, but whether we’ll ever accurately measure its impact.

Key Takeaways

- The perception gap is massive: Developers expected AI to cut completion time by 24% (and estimated 20% afterward); a controlled study measured them 19% slower (a 39-43 point gap)

- Metrics are broken: 66% don’t trust productivity metrics; DORA measures velocity, not value

- Leadership is disconnected: 63% say leaders don’t understand pain points, up from 44% in one year

- No measurement consensus: SPACE, DX Core 4, DevEx emerging, but DORA still dominates despite acknowledged failures

- The real crisis: Organizations can’t measure productivity accurately, making evidence-based AI decisions impossible

- AI adoption accelerates anyway: 90% using tools, 68% expect mandatory proficiency—measurement crisis won’t slow adoption