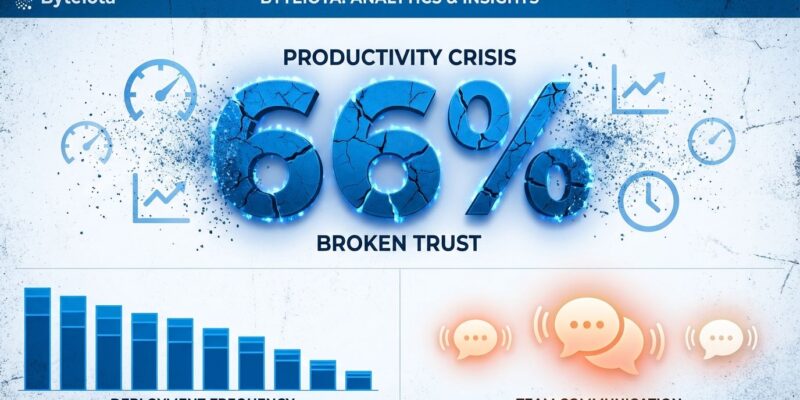

JetBrains surveyed 24,534 developers for their State of Developer Ecosystem 2025 report (published October 2025) and uncovered a productivity measurement crisis: 66% don’t believe current metrics accurately reflect their work. Companies track DORA metrics—deployment frequency, lead time for changes, change failure rate, and time to restore service—obsessing over shipping velocity. Meanwhile, developers report that non-technical factors like communication clarity, transparent goals, and collaboration quality matter more than how fast code deploys.

This validates what many developers feel but can’t prove: the systems measuring their performance are broken. Organizations quantify what’s easy to track (deployments, cycle time, story points) while ignoring what actually drives effectiveness (clear requirements, manageable technical debt, autonomy to make decisions). The 66% distrust rate isn’t noise. It’s evidence that productivity measurement, as most companies practice it, has failed.

The 66% Crisis: Communication Beats Velocity

The JetBrains data exposes a stark disconnect. While 62% of developers cite non-technical elements (internal collaboration, communication effectiveness, and clarity of objectives) as critical to their productivity, only 51% point to technical factors like CI/CD pipeline speed or tooling quality. Technical managers see the gap too: they want roughly twice as much investment in communication infrastructure and technical debt reduction as companies currently make.

Most organizations lack dedicated roles for developer productivity. Responsibility falls to team leads juggling delivery deadlines, or individual contributors measuring themselves—an obvious conflict of interest. Nobody owns the question: “Are we measuring the right things?” Meanwhile, executives worship DORA dashboards that tell them deployments are up 40% while developers burn out building features nobody uses.

The problem isn’t that companies measure. It’s that they measure what’s convenient instead of what matters. Deployment frequency is easy to count. Clarity on project goals? That requires surveys, conversations, and qualitative feedback—messy, time-consuming, and impossible to graph.

What DORA Measures (And What It Ignores)

DORA tracks four metrics designed for DevOps pipeline optimization: deployment frequency (how often code ships), lead time for changes (time from commit to production), change failure rate (percentage of deployments that cause a failure in production), and time to restore service (how fast teams recover from failures). These metrics were popularized by “Accelerate” (2018), the book Dr. Nicole Forsgren co-authored with Jez Humble and Gene Kim, and the DORA research has categorized teams as Elite, High, Medium, or Low performers based on delivery speed and stability. The official DORA documentation explains the four key metrics in detail.
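To make the four definitions concrete, here is a minimal Python sketch that computes them from a list of deployment records. The `Deployment` record shape, the `dora_metrics` function, and the choice of medians are assumptions made for illustration; they are not taken from DORA’s own tooling, and real pipelines will define “failure” and “restored” in their own ways.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """One production deployment (hypothetical record shape)."""
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service recovered, if it failed


def dora_metrics(deployments: list[Deployment], window_days: int) -> dict:
    """Compute the four DORA metrics over one reporting window."""
    if not deployments:
        raise ValueError("need at least one deployment")
    failures = [d for d in deployments if d.failed]
    restore_times = [
        d.restored_at - d.deployed_at for d in failures if d.restored_at is not None
    ]
    return {
        # Deployment frequency: deployments per week in the window.
        "deployments_per_week": len(deployments) / (window_days / 7),
        # Lead time for changes: median commit-to-production time.
        "median_lead_time": median(d.deployed_at - d.committed_at for d in deployments),
        # Change failure rate: share of deployments that caused a failure.
        "change_failure_rate": len(failures) / len(deployments),
        # Time to restore service: median recovery time for failed deployments.
        "median_time_to_restore": median(restore_times) if restore_times else None,
    }


# Made-up sample data for a single week.
t0 = datetime(2025, 1, 6, 9, 0)
sample = [
    Deployment(t0, t0 + timedelta(hours=4)),
    Deployment(t0 + timedelta(days=1), t0 + timedelta(days=1, hours=8)),
    Deployment(
        t0 + timedelta(days=2),
        t0 + timedelta(days=2, hours=6),
        failed=True,
        restored_at=t0 + timedelta(days=2, hours=7),
    ),
    Deployment(t0 + timedelta(days=3), t0 + timedelta(days=3, hours=2)),
]
metrics = dora_metrics(sample, window_days=7)
print(metrics["deployments_per_week"])    # 4.0
print(metrics["median_lead_time"])        # 5:00:00
print(metrics["change_failure_rate"])     # 0.25
print(metrics["median_time_to_restore"])  # 1:00:00
```

Every input here is a timestamp or a pass/fail flag from the delivery pipeline. Nothing in the record says whether the change was the right thing to build.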

DORA answers “did the code ship fast and reliably?” but ignores “did developers have clarity on what to build?” and “was the work valuable?” That’s the design—DORA was built to identify CI/CD bottlenecks, not measure human effectiveness. The metrics work brilliantly for optimizing deployment pipelines. They fail catastrophically when applied to human performance.

Developers aren’t assembly line workers. Knowledge work requires collaboration on unclear problems, communication across teams, and time to understand complex systems. None of that shows up in deployment frequency. A team shipping 50 deployments per week looks elite on dashboards but might be thrashing on poorly defined requirements while ignoring critical technical debt.

Gaming the System: When Metrics Become Targets

Goodhart’s Law states: “When a measure becomes a target, it ceases to be a good measure.” DORA metrics are textbook examples. Once organizations tie metrics to performance reviews or team comparisons, gaming becomes rational behavior. Developers optimize for the measurement instead of the outcome. Research from Jellyfish explains how Goodhart’s Law applies to software engineering metrics.

The patterns are predictable. Teams break features into micro-deployments (whitespace changes, comment updates, config tweaks), inflating deployment frequency while feature velocity stays flat. Change failure rate gets gamed by defining “incidents” so narrowly that degraded performance, data inconsistencies, and user-reported bugs don’t count. Lead time manipulation involves keeping pull requests open for days while work continues elsewhere, then rushing approval and merge right before deployment. If the clock starts at merge rather than at the first commit, the reported lead time looks artificially short.
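The micro-deployment pattern is easy to see in a toy example. The sketch below is illustrative only: the `Deploy` record, the `feature_id` field, and both helper functions are hypothetical, and the data is invented. It compares a week of three feature deployments with the same week padded by trivial changes.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Deploy:
    """One deployment (hypothetical record shape)."""
    description: str
    feature_id: Optional[str] = None  # None means no user-facing feature shipped


def deployment_count(deploys: list[Deploy]) -> int:
    """What a deployment-frequency dashboard counts."""
    return len(deploys)


def features_shipped(deploys: list[Deploy]) -> int:
    """Distinct user-facing features delivered by these deployments."""
    return len({d.feature_id for d in deploys if d.feature_id is not None})


honest_week = [
    Deploy("checkout redesign", "F-1"),
    Deploy("search filters", "F-2"),
    Deploy("export to CSV", "F-3"),
]

# Same three features, plus twelve trivial deployments.
padded_week = honest_week + [
    Deploy("fix whitespace"),
    Deploy("update comment"),
    Deploy("tweak config"),
] * 4

print(deployment_count(honest_week), features_shipped(honest_week))  # 3 3
print(deployment_count(padded_week), features_shipped(padded_week))  # 15 3
```

Deployment frequency rises fivefold while the number of features delivered does not move, which is exactly the gap a frequency-only dashboard cannot show.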

The most insidious gaming: teams avoid risky but valuable work to protect metrics. Refactoring legacy code, experimenting with new approaches, or tackling technical debt all risk increasing change failure rates. So teams stick to safe, incremental changes. The dashboard looks great. Innovation dies.

Better Alternatives: SPACE, DevEx, and Holistic Measurement

The industry knows DORA isn’t enough. Alternative frameworks explicitly address the human factors DORA ignores. SPACE (2021), created by researchers at Microsoft, GitHub, and the University of Victoria, adds five dimensions: Satisfaction and Well-being, Performance, Activity, Communication and Collaboration, and Efficiency and Flow. DevEx (2023) focuses on three developer-centric factors: Feedback Loops, Cognitive Load, and Flow State.

Dr. Nicole Forsgren, who created DORA metrics, co-authored the SPACE framework. That’s not a rejection of DORA—it’s an acknowledgment that pipeline metrics alone can’t capture developer productivity. The SPACE paper explicitly states: “Developer productivity is multidimensional, and must be measured using multiple metrics capturing workflow, communication, and well-being.” Even DORA’s creator knows DORA isn’t sufficient.

Google, Microsoft, and Spotify have used survey-based developer productivity metrics (satisfaction surveys, clarity assessments, autonomy ratings) for years alongside technical metrics. They recognize that fast deployment pipelines don’t matter if developers lack clear goals, drown in technical debt, or quit from burnout. High-performing organizations use DORA for pipeline optimization AND SPACE or DevEx for human factors. ByteIota recently covered another framework gaining traction in “DX Core 4: How 300+ Companies Measure Developer Productivity.”

What Developers and Managers Should Do

For developers: advocate for qualitative feedback alongside metrics. Document time spent on unmeasured but valuable work—mentoring juniors, clarifying vague requirements, untangling legacy code, improving team documentation. Push back when metrics drive counterproductive behaviors. If your team is gaming deployment frequency instead of shipping value, say so. The 66% statistic gives you cover: “Two-thirds of developers agree these metrics don’t reflect our work.”

For managers: use DORA for pipelines, not people. Track deployment frequency to find slow CI/CD processes. Use lead time to identify approval bottlenecks. But never tie metrics to performance reviews or compensation—that guarantees gaming. Instead, combine quantitative metrics with regular developer satisfaction surveys. Ask “why is deployment frequency down?” not “how do we increase deployment frequency?” The first question finds root causes. The second triggers gaming.
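One way to pair the two kinds of data is to look for teams whose delivery numbers look healthy while their survey answers do not. The following is a rough sketch under stated assumptions: the `TeamSnapshot` shape, the 1-to-5 `goal_clarity` survey scale, and both thresholds are hypothetical and would need to be chosen per organization. The output is meant as a prompt for a conversation, not a score for a review.

```python
from dataclasses import dataclass


@dataclass
class TeamSnapshot:
    """Per-team summary for one period (hypothetical shape)."""
    team: str
    deploys_per_week: float
    goal_clarity: float  # mean survey score, 1 (unclear) to 5 (clear); assumed scale


def dashboard_blind_spots(
    snapshots: list[TeamSnapshot],
    min_deploys: float = 10.0,  # assumed cutoff for "looks healthy" on a dashboard
    min_clarity: float = 3.0,   # assumed cutoff for acceptable survey clarity
) -> list[str]:
    """Return teams that ship often but report low clarity on goals."""
    return [
        s.team
        for s in snapshots
        if s.deploys_per_week >= min_deploys and s.goal_clarity < min_clarity
    ]


snapshots = [
    TeamSnapshot("payments", deploys_per_week=50, goal_clarity=2.1),
    TeamSnapshot("search", deploys_per_week=12, goal_clarity=4.2),
    TeamSnapshot("platform", deploys_per_week=4, goal_clarity=3.8),
]
print(dashboard_blind_spots(snapshots))  # ['payments']
```

The flagged team is where “why?” is worth asking: the pipeline numbers alone would have ranked it first.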

Prioritize communication clarity and technical debt reduction over shipping velocity. The Pragmatic Engineer’s analysis of 17 tech companies found that only Microsoft fully embraces standardized frameworks like DORA and SPACE. Most companies recognize context matters: what works for web app teams doesn’t work for infrastructure, embedded systems, or R&D teams. Measurement must fit the work, not the other way around.

Stop optimizing for deployment frequency. Start optimizing for clarity, communication, and value delivered. That’s harder to measure, requires qualitative feedback, and doesn’t produce pretty dashboards. But it’s what the 66% have been trying to tell us.

Key Takeaways

- 66% of developers don’t trust current productivity metrics (JetBrains 2025, 24,534 developers)—this is a measurement crisis, not a developer problem

- DORA metrics were designed for DevOps pipeline optimization, not human performance—they answer “did we ship fast?” not “did we ship value?”

- Non-technical factors (communication clarity, goal transparency, collaboration quality) are cited as critical to productivity by 62% of developers, versus 51% who cite technical factors

- Gaming behaviors are inevitable when metrics become targets—teams optimize for dashboards instead of outcomes, killing innovation and value delivery

- Better approach: use DORA for pipeline bottlenecks, SPACE/DevEx for human factors, and qualitative feedback for context—combine all three instead of worshiping deployment frequency alone

The productivity measurement crisis won’t be solved by better metrics. It requires recognizing that knowledge work is multidimensional, context-dependent, and fundamentally human. Stop measuring developers like factory workers. Start measuring what actually drives effectiveness.