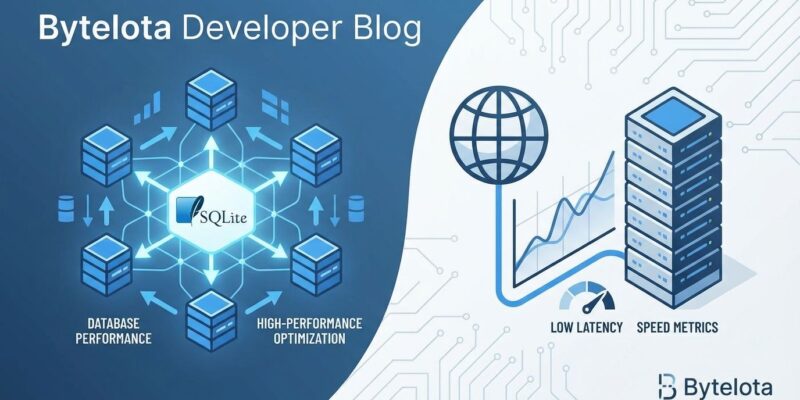

SQLite just hit 102,545 transactions per second over a billion-row database, outperforming Postgres by 30x in comparable conditions. Developer Anders Murphy published the benchmark on December 2, 2025, sparking fierce debate on Hacker News (341 points, 123 comments). But the real story isn’t about SQLite’s speed. It’s about network latency: Murphy demonstrated that when network round-trips dominate transaction time, database engine choice matters less than architectural decisions.

Amdahl’s Law strikes again. Postgres dropped from 13,756 TPS to as low as 348 TPS once network delays and serializable isolation entered the picture. SQLite’s embedded architecture, with no network round-trips between application and database, eliminates the bottleneck that plagues client-server databases.

Amdahl’s Law: When Network Latency Dominates Performance

Murphy’s benchmark revealed a fundamental insight most developers overlook: network latency, not the database engine, is often the real bottleneck. Even 5 ms per round-trip adds up fast, because an interactive transaction typically makes several round-trips and that wait is paid again by every one of thousands of transactions. The combination of serializable isolation and network delay is what drove Postgres from 13,756 TPS down to 348 TPS.
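A back-of-the-envelope sketch of why latency compounds. It assumes one common mechanism, not a figure from Murphy’s benchmark: if a transaction holds a lock on a contended row while it waits on the network, every other transaction touching that row waits too, so that row’s throughput is capped at roughly 1 / (lock-hold time). The round-trip count and timings are illustrative assumptions.

```python
def max_tps_on_hot_row(round_trips: int, rtt_ms: float, local_work_ms: float = 0.0) -> float:
    """Rough ceiling on commits/second for transactions that all lock the same row.

    Assumptions (illustrative, not from the benchmark): the row lock is held
    across every network round trip of an interactive transaction, and
    conflicting transactions therefore run one after another.
    """
    lock_hold_ms = round_trips * rtt_ms + local_work_ms
    return 1000.0 / lock_hold_ms


if __name__ == "__main__":
    # Hypothetical interactive transfer: BEGIN, SELECT, UPDATE, UPDATE, COMMIT = 5 round trips.
    for rtt_ms in (0.1, 1.0, 5.0):
        print(f"RTT {rtt_ms} ms -> at most {max_tps_on_hot_row(5, rtt_ms):,.0f} TPS on that row")
```

Under these assumptions, moving from 0.1 ms to 5 ms of round-trip time cuts the ceiling for that row from 2,000 to 40 TPS, no matter how fast the database engine itself is.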

SQLite’s embedded architecture sidesteps the network entirely. There are no network round-trips and no client-server protocol: the database lives in the same process as your application code, so a query is a library call. Murphy’s tests showed SQLite hitting 102,545 TPS with batching and concurrent reads, roughly 7x Postgres’s best result (13,756 TPS) and 295x its worst (348 TPS).

This reframes the database choice question. It’s not “which is faster?” It’s “where does my bottleneck live?” For single-server deployments where network latency dominates transaction time, switching to embedded SQLite could deliver 10-30x performance gains, although Murphy’s headline numbers also depended on the batching techniques described below, not just on removing the network.
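Amdahl’s Law makes the “where does my bottleneck live?” question concrete. If a fraction p of each transaction’s latency is made s times faster, the overall speedup is 1 / ((1 - p) + p / s). The sketch below assumes a hypothetical split of 20% engine time and 80% network time to compare a faster engine with removing the network.

```python
def amdahl_speedup(p: float, s: float) -> float:
    """Overall speedup when a fraction p of the time is made s times faster (Amdahl's Law)."""
    if not 0.0 <= p <= 1.0 or s <= 0:
        raise ValueError("p must be in [0, 1] and s must be positive")
    return 1.0 / ((1.0 - p) + p / s)


if __name__ == "__main__":
    # Assumed split of one transaction's latency: 20% engine work, 80% network round trips.
    faster_engine = amdahl_speedup(p=0.2, s=10)         # engine 10x faster, network unchanged
    no_network = amdahl_speedup(p=0.8, s=float("inf"))  # network time removed entirely
    print(f"10x faster engine: {faster_engine:.2f}x, network removed: {no_network:.2f}x")
```

With that assumed split, a 10x faster engine buys only about 1.22x, while eliminating the network buys about 5x. The exact numbers depend entirely on the split, which is why measuring it comes first.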

How Murphy Hit 102K TPS: Batching, SAVEPOINT, Read Pools

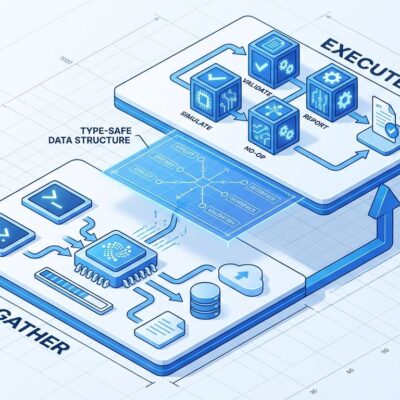

Murphy combined three optimization techniques to hit 102K TPS. First, dynamic batching: SQLite’s single-writer architecture lets you batch hundreds of transactions together and commit them at once (161,868 TPS without concurrent reads). Second, SAVEPOINTs nested inside each batch, so an individual failure doesn’t abort the entire batch. Third, separate read pools to prevent write starvation.
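A minimal sketch of the single-writer / separate-reader layout, using Python’s built-in sqlite3 module and assuming WAL journal mode (the file, table, and pool size are hypothetical; this is not Murphy’s code). In WAL mode a reader works from the last committed snapshot, so it neither waits for nor blocks the writer’s open transaction.

```python
import os
import sqlite3
import tempfile
from pathlib import Path


def open_writer(path: str) -> sqlite3.Connection:
    # The one connection allowed to write; isolation_level=None means we issue BEGIN/COMMIT ourselves.
    conn = sqlite3.connect(path, isolation_level=None)
    conn.execute("PRAGMA journal_mode=WAL")
    conn.execute("CREATE TABLE IF NOT EXISTS users (id INTEGER PRIMARY KEY, name TEXT)")
    return conn


def open_read_pool(path: str, size: int) -> list[sqlite3.Connection]:
    # Read-only connections (hypothetical pool); mode=ro rejects any write attempt.
    uri = Path(path).as_uri() + "?mode=ro"
    return [sqlite3.connect(uri, uri=True, isolation_level=None) for _ in range(size)]


def demo() -> tuple[int, int]:
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "app.db")
        writer = open_writer(path)
        readers = open_read_pool(path, size=2)
        writer.execute("BEGIN IMMEDIATE")  # take the write lock
        writer.execute("INSERT INTO users (name) VALUES ('Alice')")
        # Uncommitted write: a reader still sees the last committed snapshot, without waiting.
        before = readers[0].execute("SELECT count(*) FROM users").fetchone()[0]
        writer.execute("COMMIT")
        after = readers[1].execute("SELECT count(*) FROM users").fetchone()[0]
        for conn in (*readers, writer):
            conn.close()
        return before, after


if __name__ == "__main__":
    print(demo())
```

A real pool would hand reader connections out to request handlers. Note that Python’s sqlite3 connections are tied to the thread that created them unless opened with `check_same_thread=False`.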

The SAVEPOINT technique is particularly clever. With plain batching, a single failing transaction rolls back the whole batch. SAVEPOINT enables fine-grained rollback within a batch:

```sql
BEGIN TRANSACTION;

SAVEPOINT tx_1;
INSERT INTO users (id, name) VALUES (1, 'Alice');
RELEASE tx_1;

SAVEPOINT tx_2;
UPDATE accounts SET balance = balance - 100 WHERE id = 1;
-- If the UPDATE fails, the application issues ROLLBACK TO tx_2 (undoing only
-- tx_2's changes) and then RELEASE tx_2; tx_1's insert stays in the batch.
RELEASE tx_2;

COMMIT;
```

Performance breakdown: simple batching hit 161K TPS, adding SAVEPOINT dropped that to 121K TPS (about 25% paid for fine-grained rollback), and adding concurrent reads yielded the final 102K TPS. These patterns aren’t SQLite-specific; they apply to any database. But SQLite’s single-writer architecture makes batching especially effective because there’s no lock contention between writers.
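The same pattern driven from application code, as a minimal sketch with Python’s built-in sqlite3 module: one outer transaction per batch, one savepoint per logical transaction, and a failing item rolled back to its own savepoint while the rest of the batch commits. The `accounts` schema, the `apply_batch` helper, and the CHECK constraint used to provoke a failure are illustrative assumptions.

```python
import sqlite3


def apply_batch(conn: sqlite3.Connection, debits: list[tuple[int, int]]) -> list[bool]:
    """Apply (account_id, amount) debits in ONE transaction, each isolated by a SAVEPOINT.

    Hypothetical helper. Returns one flag per debit: True if kept, False if rolled back.
    """
    results = []
    conn.execute("BEGIN")
    try:
        for i, (account_id, amount) in enumerate(debits):
            sp = f"tx_{i}"  # savepoint names are generated here, never taken from user input
            conn.execute(f"SAVEPOINT {sp}")
            try:
                conn.execute(
                    "UPDATE accounts SET balance = balance - ? WHERE id = ?",
                    (amount, account_id),
                )
                conn.execute(f"RELEASE {sp}")
                results.append(True)
            except sqlite3.IntegrityError:
                # Undo only this debit; ROLLBACK TO leaves the savepoint open, so release it too.
                conn.execute(f"ROLLBACK TO {sp}")
                conn.execute(f"RELEASE {sp}")
                results.append(False)
        conn.execute("COMMIT")  # one commit for the whole batch
    except Exception:
        if conn.in_transaction:
            conn.execute("ROLLBACK")
        raise
    return results


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:", isolation_level=None)  # manual transaction control
    conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER CHECK (balance >= 0))")
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 50)])
    print(apply_batch(conn, [(1, 30), (2, 80), (1, 20)]))  # [True, False, True]
    print(conn.execute("SELECT id, balance FROM accounts ORDER BY id").fetchall())  # [(1, 50), (2, 50)]
```

The second debit would drive account 2 negative, so only that debit is undone; the other two share one commit. The RELEASE after ROLLBACK TO matters because in SQLite, ROLLBACK TO undoes the changes but leaves the named savepoint on the stack.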

Hacker News Erupts: 123 Comments Split Three Ways

The community reaction split into three camps. Enthusiasts validated Murphy’s findings with production success stories: “We run SQLite in production handling millions of requests/day. It just works.” One developer reported 10x speedups migrating from Postgres to embedded SQLite for edge workers.

Skeptics questioned the methodology. “5ms latency is unrealistic. Unless you use wifi or your database is in another datacenter.” Others argued Murphy weakened Postgres’s configuration to make SQLite look better—an unfair comparison.

Operations engineers raised real-world concerns. “We hit WAL checkpointing failures that paralyzed our app for minutes. SQLite’s operational maturity isn’t there yet.” Another warned about durability risks: “We lost a day’s worth of data after a power outage using synchronous=NORMAL. Learned our lesson—now we use FULL for critical data.”
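One hedged way to catch the checkpointing trouble quoted above before it stalls an app is to run and inspect checkpoints explicitly. This sketch uses SQLite’s `PRAGMA wal_checkpoint`, which returns (busy flag, frames in the WAL, frames checkpointed). The `checkpoint` helper and when to call it are assumptions. SQLite’s WAL documentation notes that if readers are always active, checkpoints may never complete and the WAL can grow without bound.

```python
import os
import sqlite3
import tempfile


def checkpoint(conn: sqlite3.Connection, mode: str = "PASSIVE") -> tuple[int, int, int]:
    """Hypothetical helper: run a WAL checkpoint, return (busy, wal_frames, checkpointed_frames)."""
    if mode not in {"PASSIVE", "FULL", "RESTART", "TRUNCATE"}:
        raise ValueError(f"unknown checkpoint mode: {mode}")
    busy, wal_frames, done = conn.execute(f"PRAGMA wal_checkpoint({mode})").fetchone()
    return busy, wal_frames, done


def demo() -> tuple[int, int, int]:
    with tempfile.TemporaryDirectory() as tmp:
        conn = sqlite3.connect(os.path.join(tmp, "app.db"), isolation_level=None)
        conn.execute("PRAGMA journal_mode=WAL")
        conn.execute("BEGIN")
        conn.execute("CREATE TABLE t (x INTEGER)")
        conn.executemany("INSERT INTO t VALUES (?)", [(i,) for i in range(1000)])
        conn.execute("COMMIT")
        result = checkpoint(conn, "PASSIVE")  # no other readers here, so it should complete
        conn.close()
        return result


if __name__ == "__main__":
    print(demo())
```

A nonzero busy flag, or checkpointed frames persistently lagging the WAL size, is a signal worth alerting on.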

The debate reveals SQLite’s true position: not a Postgres replacement, but a legitimate choice for specific architectures. Both sides make valid points—the key is understanding where each database excels.

The Honest Trade-Offs: Durability, HA, and Murphy’s Warning

Murphy’s benchmark initially used PRAGMA synchronous=NORMAL, which is fast but can lose the most recently committed transactions on power failure. Community criticism prompted an epilogue testing synchronous=FULL (an fsync on every commit). SQLite still dominates Postgres with the stronger durability guarantee, though absolute numbers drop. There’s no free lunch: analytics logs and caches can tolerate NORMAL, while financial transactions and user data require FULL.
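A minimal sketch of choosing durability per use case with Python’s sqlite3 module. The `critical` flag and the policy behind it are hypothetical. The PRAGMA semantics in the comments follow SQLite’s documentation for WAL mode, and `synchronous` is a per-connection setting, so every connection has to set it.

```python
import sqlite3


def open_db(path: str, critical: bool) -> sqlite3.Connection:
    """Open a WAL-mode connection whose durability matches the data (hypothetical policy)."""
    conn = sqlite3.connect(path, isolation_level=None)
    conn.execute("PRAGMA journal_mode=WAL")
    # FULL: in WAL mode the WAL is synced on every commit, so committed data survives power loss.
    # NORMAL: syncs happen around checkpoints instead; the database stays consistent,
    # but the most recent commits may roll back after a power failure or OS crash.
    conn.execute(f"PRAGMA synchronous={'FULL' if critical else 'NORMAL'}")
    return conn


def sync_level(conn: sqlite3.Connection) -> int:
    """Current setting for this connection: 0=OFF, 1=NORMAL, 2=FULL, 3=EXTRA."""
    return conn.execute("PRAGMA synchronous").fetchone()[0]


if __name__ == "__main__":
    ledger = open_db(":memory:", critical=True)   # e.g. financial data
    events = open_db(":memory:", critical=False)  # e.g. analytics logs
    print(sync_level(ledger), sync_level(events))
```

Either way, application crashes alone do not lose committed transactions in WAL mode; the difference is only in power-loss and OS-crash scenarios.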

Murphy explicitly warns: “If you are not in the business of running on a single monolithic server…DO NOT USE IT.” SQLite has strict limitations. Its single-writer architecture can’t scale concurrent writes. There is no built-in high availability or replication (solutions like rqlite, dqlite, and LiteFS exist but add complexity). And its operational tooling is less mature than Postgres’s: WAL checkpointing and lock debugging are less refined.
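A minimal sketch of what “single-writer” means in practice, using Python’s sqlite3 module and a throwaway file (the path, table, and helper names are hypothetical). While one connection holds the write lock, a second connection’s `BEGIN IMMEDIATE` fails with “database is locked”. With a busy timeout it would wait instead, but either way writes are serialized, which is why batching through a single writer is the usual way to get write throughput.

```python
import os
import sqlite3
import tempfile


def second_writer_blocked(path: str) -> bool:
    """True if a second connection cannot begin a write while another connection holds the write lock."""
    first = sqlite3.connect(path, isolation_level=None)
    second = sqlite3.connect(path, isolation_level=None, timeout=0)  # fail fast instead of waiting
    try:
        first.execute("PRAGMA journal_mode=WAL")
        first.execute("CREATE TABLE IF NOT EXISTS t (x INTEGER)")
        first.execute("BEGIN IMMEDIATE")  # first writer acquires the database's single write lock
        try:
            second.execute("BEGIN IMMEDIATE")  # second writer cannot acquire it
        except sqlite3.OperationalError:  # raised as "database is locked"
            return True
        second.execute("ROLLBACK")
        return False
    finally:
        first.close()  # closing with an open transaction discards it
        second.close()


def demo() -> bool:
    with tempfile.TemporaryDirectory() as tmp:
        return second_writer_blocked(os.path.join(tmp, "app.db"))


if __name__ == "__main__":
    print(demo())
```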

When SQLite wins: single-server monolithic apps, read-heavy workloads, edge computing scenarios where you can’t rely on remote databases, embedded applications. When Postgres wins: high concurrent writes, multi-region replication and failover, complex analytical queries requiring parallel execution, teams needing mature operational tooling.

Murphy’s honesty elevates the benchmark’s credibility. He’s not advocating SQLite-for-everything—he’s demonstrating it dominates in specific scenarios while acknowledging real limitations. This helps developers make architecture decisions based on actual constraints, not hype.

Key Takeaways

- Network latency is often the bottleneck, not the database engine: Amdahl’s Law shows how 5 ms per round-trip, compounded across thousands of transactions, can dominate total time

- For single-server deployments, SQLite can deliver 10-30x speedups over Postgres by eliminating network overhead entirely: an embedded database means no network round-trips between application and database

- Batching + SAVEPOINT patterns apply to any database, but SQLite’s single-writer makes batching especially effective—161K TPS simple batching, 121K TPS with fine-grained rollback

- Trade-offs matter: synchronous=NORMAL is fast but risks losing recent commits on power failure, while synchronous=FULL guarantees durability at a throughput cost; choose based on use case (analytics vs financial data)

- Murphy’s warning stands: SQLite dominates for single-server deployments only. If you need high concurrent writes, multi-region HA, or complex analytics, Postgres remains the better choice

The lesson isn’t “use SQLite everywhere.” It’s “measure where your bottleneck lives.” Developers default to Postgres without questioning whether network latency is silently destroying their performance. For the right architecture, SQLite isn’t a compromise—it’s the faster choice.