Samsung just announced it’s deploying 50,000 Nvidia GPUs to build an “AI Megafactory” where artificial intelligence manufactures the very chips that power AI. It’s a $2.5 billion bet on a recursive technology cycle. The scale is staggering. Meta’s AI Research SuperCluster launched with 6,080 GPUs; this factory will have roughly eight times as many, all dedicated to manufacturing semiconductors. Samsung isn’t tinkering with AI tools on the production line. It’s rewiring chip-making from the ground up around a single intelligent network that analyzes, predicts, and optimizes production in real time, millisecond by millisecond.

The timing isn’t coincidental. It’s desperation masked as innovation.

Samsung’s Market Share Is Collapsing

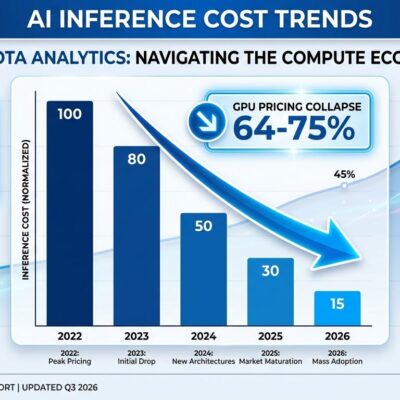

Samsung’s foundry business is hemorrhaging market share. In Q3 2024, Samsung held 12% of the foundry market. By Q1 2025, that had dropped to 7.7%. Over the same period, TSMC climbed from 64% to 67.6% and keeps pulling away. Intel isn’t faring better: in April 2024 it disclosed that its foundry unit lost $7 billion in 2023. The traditional playbook – shrink nodes, improve yields, repeat – isn’t working anymore.

TSMC dominates the leading edge: it reached 2nm first, with yields reportedly around 60%, while Intel’s 18A process reportedly struggles at 10-30%. Samsung can’t win a pure process technology race. So Samsung is betting on different territory: AI-driven manufacturing efficiency. Not better nodes – better intelligence. If traditional chip-making is hitting physics limits, maybe the next battleground is how smart your manufacturing process can get.

It’s a forced move. And it might be brilliant.

What 50,000 GPUs Actually Do in a Chip Factory

This isn’t “AI helps with design.” This is AI as the nervous system of semiconductor manufacturing.

Computational lithography gets 20x faster. Processing a photomask – the template used to etch circuits onto silicon wafers – used to take two weeks. With Nvidia’s cuLitho library accelerating optical proximity correction, Samsung now does it overnight. Five hundred DGX H100 systems do the work of 40,000 CPU systems, with physics simulations, electromagnetic modeling, and iterative optimization all parallelized across GPUs at unprecedented scale.
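A quick back-of-the-envelope check of those lithography figures. It assumes (this is not stated in the article) that each DGX H100 system holds eight H100 GPUs, Nvidia's standard configuration, and reads "two weeks" as 14 days of continuous processing.

```python
# Back-of-the-envelope check of the cuLitho figures quoted above.
# Assumption (not from the article): each DGX H100 system contains 8 H100 GPUs.

BASELINE_DAYS = 14          # "two weeks" per photomask job on CPUs
SPEEDUP = 20                # claimed computational-lithography speedup
DGX_SYSTEMS = 500
GPUS_PER_DGX = 8            # assumed standard DGX H100 configuration
CPU_SYSTEMS_REPLACED = 40_000


def accelerated_hours(baseline_days: float, speedup: float) -> float:
    """Wall-clock hours for a job after applying a uniform speedup."""
    return baseline_days * 24 / speedup


def cpu_systems_per_gpu(cpu_systems: int, dgx_systems: int, gpus_per_dgx: int) -> float:
    """How many CPU systems each GPU stands in for."""
    return cpu_systems / (dgx_systems * gpus_per_dgx)


if __name__ == "__main__":
    hours = accelerated_hours(BASELINE_DAYS, SPEEDUP)
    print(f"Mask job: {hours:.1f} hours instead of {BASELINE_DAYS} days")
    total_gpus = DGX_SYSTEMS * GPUS_PER_DGX
    ratio = cpu_systems_per_gpu(CPU_SYSTEMS_REPLACED, DGX_SYSTEMS, GPUS_PER_DGX)
    print(f"{total_gpus} GPUs replace {CPU_SYSTEMS_REPLACED} CPU systems ({ratio:.0f} per GPU)")
```

About 17 hours is consistent with "overnight", and under the eight-GPU assumption the 500 systems amount to 4,000 GPUs, each standing in for about ten CPU systems.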

Digital twins virtualize the entire factory. Using Nvidia Omniverse, Samsung builds a virtual representation of its fab. Test production changes virtually before touching physical equipment. Run predictive maintenance algorithms to catch failures days before they happen. Identify anomalies in real-time across thousands of manufacturing steps. If you’re about to waste $500,000 in silicon on a bad process run, the digital twin flags it before you commit.
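The article doesn't describe the models Samsung runs inside its digital twin, and the sketch below is not Omniverse code. As a minimal stand-in for "identify anomalies in real time", here is a rolling z-score check that flags a sensor reading when it strays too far from its recent history. The function name, window size, threshold, and temperature trace are all hypothetical.

```python
from statistics import mean, stdev


def flag_anomalies(readings: list[float], window: int = 5, threshold: float = 3.0) -> list[int]:
    """Return indices of readings more than `threshold` standard deviations
    away from the mean of the preceding `window` readings."""
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu = mean(history)
        # Floor sigma so a perfectly flat history doesn't divide by zero.
        sigma = max(stdev(history), 1e-9)
        if abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    # Hypothetical chamber-temperature trace (°C) with one excursion at index 7.
    trace = [350.1, 350.3, 349.9, 350.0, 350.2, 350.1, 350.0, 357.5, 350.1, 350.2]
    print(flag_anomalies(trace))  # [7]
```

One weakness is visible even in this toy: after the excursion, the spike inflates the trailing standard deviation for the next few readings and dulls the detector. That is one reason production systems lean on more robust statistics or learned models.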

Real-time process control replaces batch optimization. AI adjusts parameters millisecond by millisecond across every stage: design, fabrication, testing, quality control. The result: 30% better defect detection, 15% higher wafer yields, and a single intelligent network connecting processes that were previously siloed.
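The article doesn't say which control algorithms Samsung uses. A classic building block for feedback control in fabs is the EWMA run-to-run controller, sketched below under simplifying assumptions: a linear process model, a steady drift, and an update once per run rather than every millisecond. All numbers and names are hypothetical; the point is the feedback idea of measuring, re-estimating, and adjusting the next recipe.

```python
from typing import Optional


def simulate_runs(target: float, gain: float, drift_per_run: float,
                  runs: int, lam: Optional[float] = None) -> list[float]:
    """Simulate a process whose output is offset + gain * recipe, where the
    offset drifts by `drift_per_run` before every run.

    lam=None keeps the recipe fixed (open loop); otherwise an EWMA
    run-to-run controller re-estimates the offset after each run.
    """
    offset = 0.0      # true process offset (unknown to the controller)
    offset_hat = 0.0  # controller's running estimate of the offset
    outputs = []
    for _ in range(runs):
        recipe = (target - offset_hat) / gain  # invert the process model
        offset += drift_per_run                # e.g. chamber wear between runs
        y = offset + gain * recipe             # metrology result for this run
        outputs.append(y)
        if lam is not None:
            # EWMA update: weight the newest offset observation by lam.
            offset_hat = lam * (y - gain * recipe) + (1 - lam) * offset_hat
    return outputs


if __name__ == "__main__":
    # Hypothetical: 100 nm target thickness, 2 nm per recipe unit, 0.1 nm drift per run.
    open_loop = simulate_runs(100.0, 2.0, 0.1, runs=20)
    closed_loop = simulate_runs(100.0, 2.0, 0.1, runs=20, lam=0.3)
    print(f"open-loop error after 20 runs:   {open_loop[-1] - 100.0:.3f} nm")
    print(f"closed-loop error after 20 runs: {closed_loop[-1] - 100.0:.3f} nm")
```

Without feedback the error grows by the drift every run (2 nm after 20 runs). With EWMA feedback it settles near drift/λ, here about 0.33 nm. Double-EWMA variants add a drift estimate to remove that residual.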

Samsung’s HBM4 memory, which hits 11 gigabits per second per pin versus the 8 Gbps industry standard, is being manufactured on this AI-optimized production line. The chips AI produces will power the next generation of AI data centers.
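To translate the per-pin rate into per-stack bandwidth, this sketch assumes the 2048-bit interface width from the JEDEC HBM4 standard. That width does not appear in the article, so treat it as an input you can change.

```python
HBM4_INTERFACE_BITS = 2048  # assumed JEDEC HBM4 interface width per stack


def stack_bandwidth_gbytes(pin_rate_gbps: float, interface_bits: int = HBM4_INTERFACE_BITS) -> float:
    """Peak per-stack bandwidth in GB/s: pins x per-pin rate / 8 bits per byte."""
    return interface_bits * pin_rate_gbps / 8


if __name__ == "__main__":
    standard = stack_bandwidth_gbytes(8)
    samsung = stack_bandwidth_gbytes(11)
    gain_pct = (samsung / standard - 1) * 100
    print(f"8 Gbps:  {standard:.0f} GB/s per stack")
    print(f"11 Gbps: {samsung:.0f} GB/s per stack (+{gain_pct:.1f}%)")
```

Under that assumption, the jump works out to about 2.8 TB/s versus 2 TB/s of peak bandwidth per stack, a 37.5% increase. Sustained bandwidth in real workloads is lower than these peak figures.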

The Recursive AI Paradox

AI chips are manufacturing the chips that make AI smarter. Which makes the manufacturing AI smarter. Which produces better chips. Which makes the AI smarter.

It’s a feedback loop that compounds. Each generation of AI-optimized chips accelerates the next manufacturing cycle. Samsung’s HBM4 memory will power Nvidia’s next-gen GPUs. Those GPUs will train more advanced AI models. Those models will optimize Samsung’s manufacturing even further, creating HBM5, then HBM6.

Traditional semiconductor manufacturing was linear: better tools led to better chips. AI manufacturing is exponential. Better chips train smarter AI, which produces better chips, which train smarter AI. The gap between AI-optimized manufacturing and traditional methods doesn’t just grow. It accelerates. Companies that get on this curve early pull away from everyone else, and the distance increases every cycle.
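To make the linear-versus-exponential claim concrete, here is a toy model with made-up numbers. One process improves by a fixed 10 units per cycle, and the other improves by 10% per cycle. It illustrates the shape of the argument, not Samsung's actual trajectory; whether manufacturing AI really compounds this way is the bet itself.

```python
def linear_path(start: float, step: float, cycles: int) -> list[float]:
    """Capability that improves by a fixed amount each cycle."""
    return [start + step * k for k in range(cycles + 1)]


def compounding_path(start: float, rate: float, cycles: int) -> list[float]:
    """Capability that improves by a fixed percentage each cycle."""
    return [start * (1 + rate) ** k for k in range(cycles + 1)]


if __name__ == "__main__":
    # Hypothetical numbers: both start at 100 and gain 10 in the first cycle.
    linear = linear_path(100, 10, 8)
    compounding = compounding_path(100, 0.10, 8)
    gaps = [c - l for c, l in zip(compounding, linear)]
    for k, gap in enumerate(gaps):
        print(f"cycle {k}: gap {gap:6.2f}")
```

The gap does more than grow: its per-cycle increase also grows (about 1, then 2.1, then 3.3 units, and so on), which is what "accelerates" means in the paragraph above.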

There’s something unsettling about it. We’re building systems that improve themselves, and the rate of improvement is itself improving.

Does $2.5 Billion Make Sense?

Let’s do the math. 50,000 Nvidia H100 GPUs cost $25,000 to $40,000 each. That’s $1.25 billion to $2 billion just for the silicon. Packaged as complete DGX H100 systems with supporting infrastructure, that rises to $1.875 billion to $2.8 billion. Then come electrical upgrades – 35 megawatts of continuous power requires substantial infrastructure – at another $500 million to $750 million. Total: roughly $2.4 billion to $3.6 billion.
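Tallying the line items makes the estimate checkable. Every dollar figure below comes from this paragraph. One interpretation is ours: the DGX system figure is treated as already including the GPUs, so only the electrical work is added on top.

```python
GPU_COUNT = 50_000
GPU_PRICE_USD = (25_000, 40_000)              # per-H100 price range, low/high
SYSTEMS_USD = (1_875_000_000, 2_800_000_000)  # complete DGX H100 systems + infrastructure
ELECTRICAL_USD = (500_000_000, 750_000_000)   # power-delivery upgrades for ~35 MW


def cost_breakdown() -> dict[str, tuple[int, int]]:
    """Low/high estimates (USD) for each line item in the paragraph above."""
    low_price, high_price = GPU_PRICE_USD
    silicon = (GPU_COUNT * low_price, GPU_COUNT * high_price)
    # Assumption: the system figure already includes the GPUs,
    # so only the electrical upgrades are added on top of it.
    total = (SYSTEMS_USD[0] + ELECTRICAL_USD[0], SYSTEMS_USD[1] + ELECTRICAL_USD[1])
    return {"gpu_silicon": silicon, "systems": SYSTEMS_USD, "total": total}


if __name__ == "__main__":
    costs = cost_breakdown()
    for item, (low, high) in costs.items():
        print(f"{item:12s} ${low / 1e9:.3f}B to ${high / 1e9:.3f}B")
    silicon_low, silicon_high = costs["gpu_silicon"]
    print(f"system/silicon markup: {SYSTEMS_USD[0] / silicon_low:.2f}x "
          f"to {SYSTEMS_USD[1] / silicon_high:.2f}x")
```

The components sum to $2.375 billion to $3.55 billion. The system-level figures imply a 1.4x to 1.5x markup over the bare GPUs.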

Sounds astronomical. Until you compare alternatives.

Samsung’s semiconductor revenue hit roughly $60 billion in 2024. If this AI factory improves wafer yields by just 5% – conservative next to the 15% improvement cited above – and that gain translates proportionally into revenue, it’s worth about $3 billion a year. The investment pays for itself in roughly a year. If it accelerates time-to-market by even three months, the competitive advantage is worth billions more.
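The payback claim rests on one simplifying assumption: a yield gain of N% adds N% of annual revenue. This sketch makes that assumption explicit and shows how sensitive the result is to it. The function name is ours, the 1% and 15% cases are added for comparison, and the dollar figures come from the article.

```python
def payback_months(investment_usd: int, revenue_usd: int, yield_gain_pct: float) -> float:
    """Months to recoup `investment_usd`, assuming a yield gain of N% adds N% of annual revenue."""
    annual_gain_usd = revenue_usd * yield_gain_pct / 100
    return investment_usd * 12 / annual_gain_usd


if __name__ == "__main__":
    revenue = 60_000_000_000  # ~2024 semiconductor revenue, per the article
    for gain in (1, 5, 15):
        low = payback_months(2_500_000_000, revenue, gain)
        high = payback_months(3_500_000_000, revenue, gain)
        print(f"{gain:>2}% yield gain: payback in {low:.1f} to {high:.1f} months")
```

At 5%, payback lands between 10 and 14 months, which fits "roughly a year". At 1%, it stretches past four years, so the conclusion leans heavily on the yield figure.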

Now compare building a new advanced fabrication plant: $15 billion to $20 billion, three to four years to come online, and you’re still running traditional manufacturing processes. The AI Megafactory is 25-30% of one year’s R&D budget and deploys in under two years. Unlike a traditional fab, the returns compound – every chip it produces makes the manufacturing AI smarter.
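The fab comparison can be expressed as a simple ratio. This sketch uses the headline $2.5 billion figure and the $15 billion to $20 billion range for a new fab, both taken from the article. The function name is illustrative.

```python
AI_FACTORY_USD = 2_500_000_000                  # headline figure for the AI Megafactory
NEW_FAB_USD = (15_000_000_000, 20_000_000_000)  # new advanced fab, low/high


def share_of_fab_cost(project_usd: int, fab_range_usd: tuple[int, int]) -> tuple[float, float]:
    """Project cost as a fraction of the most and least expensive fab estimate."""
    low_fab, high_fab = fab_range_usd
    return project_usd / high_fab, project_usd / low_fab


if __name__ == "__main__":
    lo, hi = share_of_fab_cost(AI_FACTORY_USD, NEW_FAB_USD)
    print(f"AI factory costs {lo:.1%} to {hi:.1%} of one new fab")
```

That puts the deployment at roughly one-eighth to one-sixth of a single new fab's cost, before counting the shorter deployment time.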

This isn’t expensive. It’s the cheapest way to stay competitive.

What Happens Next

If Samsung succeeds, everyone follows or falls behind. TSMC will scale AI manufacturing to defend its position. Intel, whose foundry business lost $7 billion in 2023, may see AI optimization as a survival strategy. The competitive dynamic shifts from “who has the best 2nm process” to “whose AI makes chips most efficiently.”

Samsung plans to roll this out globally. The Taylor, Texas facility gets AI manufacturing. Other Samsung fabs worldwide follow. This becomes the blueprint. If 50,000 GPUs produce the expected results, TSMC and Intel start their own multi-billion-dollar GPU deployments.

For developers, this means faster hardware iterations. The 20x speedup in computational lithography translates to shorter product cycles. AI-optimized chip designs reach production faster. HBM4 memory built with AI manufacturing powers the next wave of AI data centers. The recursive loop accelerates everything downstream.

The challenges are real. Operating 50,000 GPUs in a manufacturing context isn’t the same as running a data center. Power requirements are massive: 35 megawatts is on the scale of a small power plant’s output. Integration complexity is brutal when you’re connecting AI across design, fabrication, testing, and quality control. Samsung just locked itself into deep dependency on Nvidia’s ecosystem.
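The 35-megawatt figure matches 50,000 GPUs at 700 W each, the maximum TDP of an H100 SXM module. That is our inference about where the number comes from, not something the article states. It also covers the GPUs alone, before host CPUs, networking, and cooling.

```python
GPU_COUNT = 50_000
H100_SXM_TDP_WATTS = 700  # assumed: H100 SXM maximum TDP
HOURS_PER_YEAR = 8_760


def gpu_power_mw(gpus: int, watts_per_gpu: int) -> float:
    """Aggregate GPU power draw in megawatts at full TDP."""
    return gpus * watts_per_gpu / 1_000_000


def annual_energy_gwh(power_mw: float) -> float:
    """Energy in gigawatt-hours if that load runs around the clock for a year."""
    return power_mw * HOURS_PER_YEAR / 1_000


if __name__ == "__main__":
    mw = gpu_power_mw(GPU_COUNT, H100_SXM_TDP_WATTS)
    print(f"{mw:.0f} MW at full load, about {annual_energy_gwh(mw):.0f} GWh per year")
```

Running flat out all year, the GPUs alone would draw about 307 GWh. Real utilization, and therefore real consumption, will vary.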

But the alternative is worse. Stand still, and TSMC or an AI-optimized competitor pulls ahead every manufacturing cycle.

The Real Bet

Samsung isn’t betting $2.5 billion that AI helps make chips. It’s betting that AI-driven manufacturing efficiency matters more than incremental node improvements: that the next competitive edge isn’t 2nm vs 1.5nm, but how intelligently you use the nodes you have.

It’s a forced move born from losing ground to TSMC. But forced moves sometimes reveal better strategies. If Samsung pulls this off, we’ll look back at 2025 as the year chip manufacturing became an AI problem. And 50,000 GPUs in a single factory will seem quaint compared to what comes next.