Databricks just closed a $5 billion funding round at a staggering $134 billion valuation, 32 times its expected revenue. Revenue is growing 55% year-over-year, and AI products alone have hit a $1 billion annual run rate. But here’s the catch investors are downplaying: gross margins are slipping from a 77% target to 74%, and the culprit is clear. AI products are more compute-intensive than traditional analytics, and the cost of running real-time inference at scale is rising faster than the revenue it brings in. This is the first major signal that AI infrastructure isn’t just expensive to build; it’s expensive to run.

The Margin Squeeze: A Warning Sign

On paper, Databricks looks unstoppable. A $5 billion raise, explosive growth, and a valuation that makes most tech companies jealous. But zoom into the financials, and you’ll find something troubling. Gross margins have dropped from an internal target of 77% to 74%—a seemingly small decline that reveals a much bigger problem.
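To see why three points is not a rounding error, it helps to put dollars on it. The sketch below backs an approximate revenue figure out of the valuation and the 32x multiple quoted above, then compares gross profit at 77% and 74%. The implied revenue is a derived estimate, not a reported number, and the function names are purely illustrative.

```python
# Back-of-the-envelope arithmetic using only figures quoted in this article.
# The revenue number is implied by valuation / multiple; it is an estimate,
# not a figure Databricks has reported.

VALUATION_USD = 134e9      # round valuation
REVENUE_MULTIPLE = 32      # valuation as a multiple of expected revenue
TARGET_MARGIN = 0.77       # internal gross margin target
ACTUAL_MARGIN = 0.74       # current gross margin


def implied_revenue(valuation: float, multiple: float) -> float:
    """Revenue implied by a valuation and a revenue multiple."""
    return valuation / multiple


def gross_profit(revenue: float, margin: float) -> float:
    """Gross profit at a given gross margin."""
    return revenue * margin


revenue = implied_revenue(VALUATION_USD, REVENUE_MULTIPLE)
gap = gross_profit(revenue, TARGET_MARGIN) - gross_profit(revenue, ACTUAL_MARGIN)

# Cost of revenue is (1 - margin) of revenue, so compare 26% against 23%.
cogs_increase = (1 - ACTUAL_MARGIN) / (1 - TARGET_MARGIN) - 1

print(f"Implied revenue:    ${revenue / 1e9:.2f}B")
print(f"Gross profit gap:   ${gap / 1e6:.0f}M per year")
print(f"Cost of revenue is {cogs_increase:.0%} higher than at the target margin")
```

On these assumptions the gap works out to roughly $126 million a year in gross profit, and the cost of revenue is about 13% higher than it would be at the target margin. That second figure is the more telling one: a three-point margin move hides a double-digit jump in what it costs to serve each dollar of revenue.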

Traditional SaaS companies like Adobe and GitLab maintain gross margins around 88%, and the average SaaS business sits comfortably at 80-90%. AI-native companies, however, are struggling. According to Bessemer’s 2025 data, fast-growing AI startups average just 25% gross margins early on, with more mature ones hovering around 60%. Databricks at 74% is performing well above the AI average, but the trend line is moving in the wrong direction.

This matters because it challenges the prevailing narrative that scale solves everything in AI infrastructure. Databricks is one of the most successful AI platforms in the world, and even it can’t escape the fundamental cost structure of real-time AI workloads.

Why AI Products Cost More to Run

The margin pressure isn’t a mystery. It’s baked into how AI infrastructure works. Real-time inference requires dedicated GPU resources running 24/7, and those resources are expensive. Industry data shows that real-time AI processing costs 2-3 times as much as batch processing. Put the other way, batch jobs can be 30-70% cheaper because they don’t need instant availability, but they’re useless for interactive AI products where users expect immediate responses.
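The two ranges quoted above describe the same gap from opposite directions, and a few lines of arithmetic show how they line up. This is a minimal sketch that reads "2-3 times" as real-time costing two to three times as much as batch for the same work. The function names are made up for illustration.

```python
# Convert between "real-time costs N times as much as batch" and
# "batch is X% cheaper than real-time". Both describe the same ratio.


def batch_discount_from_multiple(realtime_multiple: float) -> float:
    """Fraction saved by running in batch, given the real-time cost multiple."""
    return 1 - 1 / realtime_multiple


def multiple_from_batch_discount(discount: float) -> float:
    """Real-time cost multiple implied by a batch discount (0 <= discount < 1)."""
    return 1 / (1 - discount)


for multiple in (2, 3):
    saved = batch_discount_from_multiple(multiple)
    print(f"real-time at {multiple}x batch  -> batch is {saved:.0%} cheaper")

for discount in (0.30, 0.70):
    multiple = multiple_from_batch_discount(discount)
    print(f"batch {discount:.0%} cheaper     -> real-time is {multiple:.2f}x batch")
```

A 2-3x multiple corresponds to batch savings of roughly 50-67%, which sits inside the 30-70% range. The wider range simply covers workloads where the real-time premium is smaller (about 1.4x) or larger (about 3.3x).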

Here’s the kicker: inference accounts for 80-90% of production AI compute costs. Even when models sit idle, they incur charges if they’re loaded in memory and ready to serve requests. Databricks’ serverless architecture auto-scales to meet demand, which is great for user experience but brutal for margins. Every additional query adds compute costs that don’t exist in traditional SaaS, where serving one more user is essentially free.

This is the core difference between AI products and traditional software. In SaaS, marginal costs approach zero. In AI, every request carries a real marginal cost, so total costs scale linearly, or worse, with usage.
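A toy model makes the difference concrete. The sketch below assumes a customer paying a flat $100 a month, a fixed $12 serving cost, and, for the AI product only, $0.002 of compute per query. All of these numbers are hypothetical, chosen so the results land near the margins discussed in this article. They are not Databricks figures.

```python
# Toy gross-margin model: flat subscription revenue, fixed serving cost,
# plus an optional per-query compute cost. All inputs are hypothetical.


def gross_margin(revenue: float, fixed_cost: float,
                 cost_per_query: float, queries: int) -> float:
    """Gross margin when cost of revenue = fixed cost + per-query cost * usage."""
    cost_of_revenue = fixed_cost + cost_per_query * queries
    return (revenue - cost_of_revenue) / revenue


MONTHLY_REVENUE = 100.0    # hypothetical flat subscription price
FIXED_COST = 12.0          # hypothetical hosting/support cost per customer
AI_COST_PER_QUERY = 0.002  # hypothetical inference cost per query

print(f"{'queries':>8}  {'SaaS':>6}  {'AI':>6}")
for queries in (1_000, 7_000, 20_000, 40_000):
    saas = gross_margin(MONTHLY_REVENUE, FIXED_COST, 0.0, queries)
    ai = gross_margin(MONTHLY_REVENUE, FIXED_COST, AI_COST_PER_QUERY, queries)
    print(f"{queries:>8}  {saas:>6.0%}  {ai:>6.0%}")
```

In this model the SaaS margin stays at 88% no matter how heavily the customer uses the product, while the AI margin falls from 86% at 1,000 queries to 74% at 7,000 and into single digits at 40,000. Usage-based pricing changes the picture, since revenue then rises with queries too, but the per-query cost never goes away.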

What This Means for Developers

If you’re building with AI infrastructure, Databricks’ margin squeeze is your wake-up call. You can’t treat AI compute costs like traditional cloud hosting and hope for the best. Every architectural decision—real-time vs batch, GPU vs CPU, serverless vs reserved capacity—has massive cost implications.

The data backs this up. Companies that strategically mix batch and real-time processing report 45% fewer infrastructure scaling events and 28% lower cost variability. Databricks’ own pricing reflects this reality: job cluster DBUs cost about half as much as all-purpose clusters. If you’re not optimizing for cost from day one, you’re building on a foundation that won’t scale economically.
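As a rough illustration of what the job-cluster discount means in practice, the sketch below estimates a monthly DBU bill as work shifts from all-purpose clusters to job clusters. It takes the "about half" ratio from above at face value and uses a placeholder rate of $0.50 per DBU, which is not a quoted Databricks price. It also ignores the underlying cloud VM charges, which are billed separately.

```python
# Estimate a monthly DBU bill as a share of the workload moves from
# all-purpose clusters to job clusters. Rates here are placeholders.


def monthly_dbu_cost(total_dbus: float, share_on_jobs: float,
                     all_purpose_rate: float, jobs_ratio: float = 0.5) -> float:
    """DBU cost when `share_on_jobs` of the DBUs run on job clusters.

    jobs_ratio is the job-cluster rate as a fraction of the all-purpose
    rate (0.5 reflects the "about half" figure; check current pricing).
    """
    if not 0.0 <= share_on_jobs <= 1.0:
        raise ValueError("share_on_jobs must be between 0 and 1")
    jobs_dbus = total_dbus * share_on_jobs
    interactive_dbus = total_dbus - jobs_dbus
    jobs_rate = all_purpose_rate * jobs_ratio
    return jobs_dbus * jobs_rate + interactive_dbus * all_purpose_rate


TOTAL_DBUS = 10_000        # hypothetical monthly consumption
ALL_PURPOSE_RATE = 0.50    # placeholder $/DBU, not a list price

baseline = monthly_dbu_cost(TOTAL_DBUS, 0.0, ALL_PURPOSE_RATE)
for share in (0.0, 0.3, 0.6, 0.9):
    cost = monthly_dbu_cost(TOTAL_DBUS, share, ALL_PURPOSE_RATE)
    print(f"{share:>4.0%} on job clusters: ${cost:,.0f} "
          f"({1 - cost / baseline:.0%} saved)")
```

With these placeholder inputs, moving 60% of the workload to job clusters cuts the DBU bill by 30%. The saving is always the share moved times the discount, so the real question is how much of your workload can tolerate running as a scheduled job.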

This also changes build-vs-buy calculations. Platforms like Databricks are absorbing margin pressure to deliver premium performance, which means they’re investing heavily in infrastructure efficiency. That’s actually a point in their favor for developers who need reliability and scale without managing it themselves.

The Sustainability Question

Here’s the uncomfortable question no one wants to ask: Can AI infrastructure companies sustain 74% margins when traditional SaaS standards sit at 85%+? Right now, investors are fine with it because growth is exceptional and market positioning matters more than profitability. But what happens when that 55% year-over-year growth inevitably slows?

There are three possible outcomes. AI platforms raise prices, which kills adoption. They cut costs by reducing infrastructure quality, which kills competitive advantage. Or they accept permanently lower margins than SaaS, which redefines investor expectations for the entire sector. None of these is great for developers betting their stack on these platforms.

The Optimistic Counter-Narrative

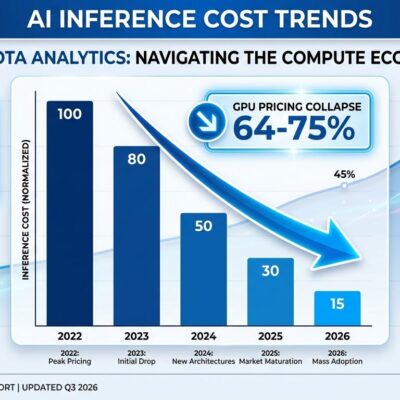

Not everyone thinks margin pressure is a crisis. Some VCs argue that gross margins don’t matter for high-growth AI companies, and that market capture and competitive moats are what count. And there’s evidence that AI infrastructure costs are improving. According to an analysis by Mayfield, inference costs for GPT-3.5-level models have dropped 280-fold over two years, hardware costs are declining 30% annually, and energy efficiency is improving 40% per year.

The question is whether these efficiency gains can outpace the shift toward more expensive real-time AI workloads. So far, the answer for Databricks appears to be no.
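The race between falling unit costs and rising usage can be put in numbers. The sketch below annualizes the 280-fold figure, then asks how fast usage can grow before a 30% annual decline in unit cost stops lowering the total bill. Using the 55% revenue growth rate as a stand-in for usage growth is an assumption made here for illustration, since the two need not move together.

```python
# How fast can usage grow before falling unit costs stop helping?
# Total cost next year = this year's cost * (1 - decline) * (1 + usage growth).


def annualized_factor(total_factor: float, years: float) -> float:
    """Per-year improvement factor implied by a multi-year total."""
    return total_factor ** (1 / years)


def breakeven_usage_growth(annual_unit_cost_decline: float) -> float:
    """Usage growth at which total cost stays flat."""
    return 1 / (1 - annual_unit_cost_decline) - 1


def total_cost_change(annual_unit_cost_decline: float,
                      annual_usage_growth: float) -> float:
    """Year-over-year change in total cost (0.10 means +10%)."""
    return (1 - annual_unit_cost_decline) * (1 + annual_usage_growth) - 1


per_year = annualized_factor(280, 2)
breakeven = breakeven_usage_growth(0.30)
change = total_cost_change(0.30, 0.55)  # 55% is revenue growth used as a proxy

print(f"280-fold over two years is about {per_year:.1f}x per year")
print(f"With unit costs falling 30%/yr, costs stay flat at {breakeven:.0%} usage growth")
print(f"At 55% usage growth, total cost still changes by {change:+.1%}")
```

A 30% annual decline in unit cost only holds the bill flat if usage grows by no more than about 43% a year. At 55%, total cost still rises by roughly 8.5%, and that is before accounting for any shift from batch to more expensive real-time workloads.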

What to Watch

Databricks isn’t failing. Far from it. A $5 billion raise at a $134 billion valuation signals that investors still believe deeply in AI infrastructure. But the margin trends matter, and they’re worth watching. If other major AI platforms start reporting similar compression, that would confirm this is a structural issue, not a Databricks-specific problem, and it would force a reckoning about AI economics that the industry has been avoiding.

For now, developers should take note: AI infrastructure costs are real, they scale with usage, and even the best platforms can’t engineer their way out of the fundamental economics. Build accordingly.