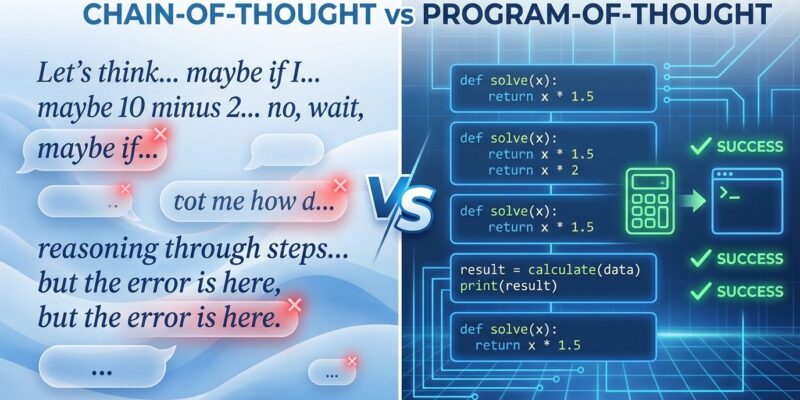

A research paper trending on Hacker News today reports that Program-of-Thought (PoT) prompting beats Chain-of-Thought (CoT) by 12-20% on numerical reasoning tasks, just as separate Wharton research shows CoT’s value declining. For developers working with AI on computational tasks, the timing is a signal that the prompting landscape is shifting and that blanket reliance on Chain-of-Thought is no longer the default playbook.

The performance gap is significant: PoT achieves 15-20% gains on financial datasets like FinQA and ConvFinQA, while Wharton’s June 2025 study found that CoT adds 20-80% to response time for gains as low as 2.9%. The question isn’t whether PoT works; it’s when to use it instead of the prompting techniques you already know.

What Is Program-of-Thought Prompting?

Program-of-Thought separates reasoning from computation. Unlike Chain-of-Thought, which uses natural language for both thinking and calculating, PoT has the LLM express reasoning steps as executable Python code while an external interpreter handles the actual math.

Here’s the difference in action. Consider a word problem: “Seattle has 20 sheep. Charleston has 4 times as many. Toulouse has twice as many as Charleston. How many sheep total?”

Chain-of-Thought approach (error-prone):

Charleston has 4 × 20 = 80 sheep.

Toulouse has 2 × 80 = 160 sheep.

Total: 20 + 80 + 160 = 260 sheep.

The LLM does the arithmetic. If it miscalculates, the answer is wrong.

Program-of-Thought approach (accurate):

    def solver():
        seattle_sheep = 20
        charleston_sheep = seattle_sheep * 4
        toulouse_sheep = charleston_sheep * 2
        total_sheep = seattle_sheep + charleston_sheep + toulouse_sheep
        return total_sheep

The LLM generates the logic. Python executes the code. Consequently, the answer is precise.

This separation reduces the error surface. LLMs are comparatively strong at laying out reasoning steps but unreliable at exact arithmetic, so delegating computation to an interpreter that doesn’t make math mistakes lets PoT achieve measurably better results on quantitative tasks.
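To make the hand-off between model and interpreter concrete, here is a minimal sketch of the execution step. The `generated_program` string is a hypothetical stand-in for what an LLM might return for the sheep problem, and `run_program` is an illustrative helper, not part of the paper's published code.

```python
# Hypothetical stand-in for the text an LLM would return for the sheep problem.
generated_program = """
def solver():
    seattle_sheep = 20
    charleston_sheep = seattle_sheep * 4
    toulouse_sheep = charleston_sheep * 2
    return seattle_sheep + charleston_sheep + toulouse_sheep
"""


def run_program(source: str):
    """Execute generated source in a fresh namespace and call its solver()."""
    namespace = {}
    # exec() is unsafe for untrusted code; this sketch assumes trusted input.
    exec(source, namespace)
    return namespace["solver"]()


answer = run_program(generated_program)
print(answer)  # 260
```

The model never computes 20 + 80 + 160 itself; it only has to get the relationships between the variables right.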

Performance: 12-20% Gains on Financial Datasets

The original research, published in Transactions on Machine Learning Research (October 2023), evaluated Program-of-Thought across eight datasets: five math word problem sets and three financial question-answering benchmarks. The average improvement over CoT was 12%, but the real story is in the breakdown.

On financial datasets (FinQA, ConvFinQA, TATQA), PoT outperformed CoT by 15-20%. These datasets involve large numerical values, multi-step calculations, and complex financial reasoning—exactly where LLM arithmetic errors compound. In contrast, on simpler math datasets (GSM8K, AQuA, SVAMP), the gains were smaller: around 8%.

The pattern is clear: PoT’s advantage scales with computational complexity. The harder the math, the wider the gap.

One standout result: Zero-Shot PoT scored 66.5 on TabMWP, beating Few-Shot CoT at 63.4. This means PoT without any examples outperformed CoT with examples—a strong signal that the technique’s core strength isn’t just in prompting finesse but in structural advantage.

When combined with self-consistency decoding (generating multiple solutions and selecting the most common answer), PoT achieved state-of-the-art performance on all evaluated math benchmarks. The GitHub implementation is available at TIGER-AI-Lab/Program-of-Thoughts for developers who want to test it.
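Self-consistency pairs naturally with PoT because each sampled program yields a concrete value that can be counted. The sketch below shows only the voting step, under the assumption that the programs have already been sampled and executed. `majority_answer` and `sampled_results` are illustrative names, and the rounding rule is an assumption rather than something taken from the paper.

```python
from collections import Counter


def majority_answer(candidate_answers):
    """Return the most common answer among programs that ran successfully."""
    valid = [a for a in candidate_answers if a is not None]
    if not valid:
        return None
    # Round floats so tiny floating-point differences do not split the vote.
    normalized = [round(a, 6) if isinstance(a, float) else a for a in valid]
    return Counter(normalized).most_common(1)[0][0]


# Hypothetical results from five sampled programs; None marks a failed run.
sampled_results = [260, 260, 240, None, 260]
print(majority_answer(sampled_results))  # 260
```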

Chain-of-Thought’s Declining Value

The timing of PoT’s emergence matters because Chain-of-Thought isn’t performing as advertised. Wharton’s Generative AI Labs published research in June 2025 titled “The Decreasing Value of Chain of Thought in Prompting,” and the findings challenge conventional wisdom.

For reasoning models (like o3-mini and o4-mini), CoT produced minimal accuracy improvements: just 2.9-3.1%. It also increased response times by 20-80%, adding 10-20 seconds per query. For latency-sensitive applications, that’s a cost developers can’t ignore.

For non-reasoning models, CoT showed small average improvements but introduced higher variability, sometimes triggering errors on questions the model would otherwise answer correctly. The Wharton team’s conclusion: “Chain-of-Thought prompting is not universally optimal. Its effectiveness depends significantly on model type and specific use case.”

This validates PoT’s relevance. If CoT’s gains are shrinking while its costs remain high, developers need task-specific alternatives. PoT fills that gap for computational tasks—not as a universal replacement, but as a specialized tool that delivers measurable gains where CoT no longer does.

When to Use Program-of-Thought vs CoT

Program-of-Thought isn’t a blanket replacement for Chain-of-Thought. It’s a specialized technique for a specific problem class. Here’s the decision framework.

Use PoT when:

- The task involves numerical computation (math, finance, statistics)

- Accuracy is critical (financial analysis, scientific calculations)

- The problem includes large numbers or iterative calculations

- You can safely execute generated code (controlled environment, sandboxed execution)

Stick with CoT when:

- The task is qualitative (summarization, explanation, creative writing)

- There’s no computational component

- Question diversity is high (PoT struggled on the AQuA dataset due to unpredictable question types)

- Code execution poses security risks (untrusted environments, production systems without sandboxing)

The key differentiator: if the bottleneck is LLM arithmetic, PoT wins. If the bottleneck is reasoning quality, CoT still has a role—though Wharton’s research suggests that role is shrinking.
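The decision framework above can be sketched as a small routing function. This is one possible encoding, not a standard API: the `Task` fields and the returned labels are hypothetical, and a real system would need some way to classify tasks before routing them.

```python
from dataclasses import dataclass


@dataclass
class Task:
    needs_computation: bool  # arithmetic, statistics, financial math
    needs_reasoning: bool    # multi-step qualitative reasoning
    sandbox_available: bool  # generated code can be executed safely


def choose_technique(task: Task) -> str:
    """Pick a prompting technique following the checklist in this section."""
    if task.needs_computation and task.sandbox_available:
        return "pot"
    if task.needs_computation or task.needs_reasoning:
        # No safe way to run code, or the bottleneck is reasoning quality.
        return "cot"
    return "direct"


print(choose_technique(Task(True, True, True)))     # pot
print(choose_technique(Task(True, False, False)))   # cot
print(choose_technique(Task(False, False, False)))  # direct
```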

One practical example: financial earnings analysis. If you’re parsing quarterly reports and calculating year-over-year growth rates, PoT is the better fit; the 15-20% gains reported on financial benchmarks come from exactly this kind of multi-step arithmetic. If you’re summarizing the CEO’s strategic vision from the same report, CoT is fine (or skip prompting techniques entirely and let the model work naturally).
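For the earnings case, a PoT-style response might look like the sketch below. The revenue figures are invented for illustration and do not come from any real report. The point is that the model names the quantities and states the formula, while the interpreter does the division.

```python
def solver():
    # Hypothetical figures, in millions of dollars, for illustration only.
    revenue_q3_2024 = 1250.0
    revenue_q3_2023 = 1000.0
    # Year-over-year growth = (current - prior) / prior.
    growth = (revenue_q3_2024 - revenue_q3_2023) / revenue_q3_2023
    return round(growth * 100, 2)


print(solver())  # 25.0
```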

Security Risks: Code Execution Isn’t Free

Program-of-Thought requires executing LLM-generated code. That’s a security risk you can’t ignore.

Malicious code is a real possibility. A PoT-generated script could include import shutil; shutil.rmtree('/') or SQL queries that leak confidential data. If you execute this code blindly in production, the consequences range from data loss to full system compromise.

Mitigation strategies:

- Sandboxed execution: Run generated code in Docker containers or isolated VMs

- Static code analysis: Scan for dangerous patterns before execution

- Library whitelisting: Only allow approved imports and functions

- Monitoring and logging: Track all executions, flag anomalies

- Timeout limits: Prevent infinite loops and resource exhaustion
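As one illustration of the static-analysis and allowlisting items above, the following sketch uses Python's standard `ast` module to reject programs that import unapproved modules or call a few risky builtins. The `ALLOWED_IMPORTS` and `BANNED_CALLS` sets are assumptions chosen for this example. A check like this is easy to bypass on its own, so treat it as a first filter in front of a sandbox, not a replacement for one.

```python
import ast

ALLOWED_IMPORTS = {"math", "statistics", "decimal", "fractions"}  # assumed allowlist
BANNED_CALLS = {"exec", "eval", "open", "__import__", "compile"}  # assumed denylist


def find_violations(source: str) -> list[str]:
    """Return a list of policy violations found in the generated source."""
    try:
        tree = ast.parse(source)
    except SyntaxError as err:
        return [f"syntax error: {err.msg}"]

    violations = []
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                if alias.name.split(".")[0] not in ALLOWED_IMPORTS:
                    violations.append(f"import of '{alias.name}' is not allowed")
        elif isinstance(node, ast.ImportFrom):
            if (node.module or "").split(".")[0] not in ALLOWED_IMPORTS:
                violations.append(f"import from '{node.module}' is not allowed")
        elif isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in BANNED_CALLS:
                violations.append(f"call to '{node.func.id}' is not allowed")
    return violations


print(find_violations("import os\nos.remove('data.csv')"))
print(find_violations("import math\nresult = math.sqrt(16)"))
```

The first call reports the disallowed `os` import; the second returns an empty list, meaning the program passes this particular filter.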

Published guidance explicitly warns about this. LearnPrompting.org’s PoT guide states that malicious snippets “could harm the machine running the snippets” and recommends treating code execution as a controlled, monitored operation rather than an automated pipeline.
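A timeout is the simplest of these controls to demonstrate. The sketch below runs generated code in a separate Python process using the standard `subprocess` module and gives up after a fixed number of seconds. `run_with_timeout` is an illustrative helper, and a child process by itself is not a sandbox: it still has the parent's file and network access unless it runs inside a container or VM.

```python
import subprocess
import sys


def run_with_timeout(source: str, timeout_seconds: float = 5.0):
    """Run source in a child Python process; return stdout, or None on failure."""
    try:
        completed = subprocess.run(
            # -I starts Python in isolated mode (ignores env vars and user site).
            [sys.executable, "-I", "-c", source],
            capture_output=True,
            text=True,
            timeout=timeout_seconds,
        )
    except subprocess.TimeoutExpired:
        # The child is killed when the timeout expires.
        return None
    if completed.returncode != 0:
        return None
    return completed.stdout.strip()


print(run_with_timeout("print(20 + 80 + 160)"))                   # 260
print(run_with_timeout("while True: pass", timeout_seconds=1.0))  # None
```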

If you can’t implement these safeguards, PoT isn’t appropriate for your use case. The performance gains don’t justify the security exposure.

The Broader Shift: Task-Specific Prompting

Program-of-Thought fits into a larger industry trend: the move from ad-hoc prompting to rigorous, task-specific context engineering.

In 2024, developers used blanket prompting strategies—mostly variants of “think step by step” tacked onto queries. In 2025, the approach is structured. Production-level AI systems now include versioned prompt templates, monitoring dashboards tracking latency and success rates, and model-specific optimization (because GPT-4o, Claude, and Gemini respond differently to identical prompts).

Related developments signal this shift:

- Model Context Protocol (MCP): Thousands of servers launched in under a year, providing standardized context management

- Google Antigravity: An agent-first IDE where surfaces embed into agents, not the other way around

- Agentic workflows: Systems that autonomously choose prompting techniques based on task type (PoT for computation, CoT for reasoning, direct prompts for simple queries)

A 243-user survey found that 55% of AI developers regularly revise prompts based on feedback—evidence that iterative refinement is now standard practice, not an edge case.

Program-of-Thought represents the future: different reasoning methods for different problem classes. The days of using one prompting technique for everything are ending.

Key Takeaways

Program-of-Thought prompting delivers 12-20% accuracy gains on computational tasks by separating reasoning from execution. It’s not a universal replacement for Chain-of-Thought, but it solves a specific problem class where CoT’s value is declining.

Use PoT for numerical reasoning (finance, math, statistics) where accuracy is critical and you can safely execute code. Stick with CoT for qualitative reasoning or skip prompting tricks entirely for simple tasks. The decision comes down to whether the bottleneck is LLM arithmetic or reasoning quality.

Security awareness is non-negotiable. Executing LLM-generated code without sandboxing, monitoring, and static analysis is a production risk. Implement safeguards or avoid PoT in untrusted environments.

The broader lesson: prompting is becoming a discipline. PoT is part of a shift toward task-specific, rigorously tested techniques that replace blanket strategies. As the Wharton research shows, not all prompting methods work for all tasks—and some are no longer worth the time cost.

For developers working with AI on computational workloads, Program-of-Thought is worth testing. The GitHub implementation is open-source, the research is peer-reviewed, and the performance data is consistent. If you’re still using Chain-of-Thought for everything, you’re leaving accuracy on the table.