Microsoft Azure’s serverless GPUs hit general availability in March 2025, and the timing tells you everything. The serverless computing market is racing toward $52 billion by 2030 while over 70% of developers now use AI tools daily. Azure’s answer – NVIDIA A100 and T4 GPUs with scale-to-zero capability and per-second billing – sits at the convergence point of these two mega-trends. This isn’t just another cloud service. It’s the infrastructure finally catching up to how developers actually want to work with AI.

The GPU Provisioning Tax Nobody Talks About

Here’s the reality most AI tutorials skip: you provision an A100 GPU instance at $17.50 per hour, your model runs inference for 12 minutes total that day, and you just burned $402 for 288 minutes of idle silicon. The math doesn’t work for teams without venture capital or enterprise budgets.

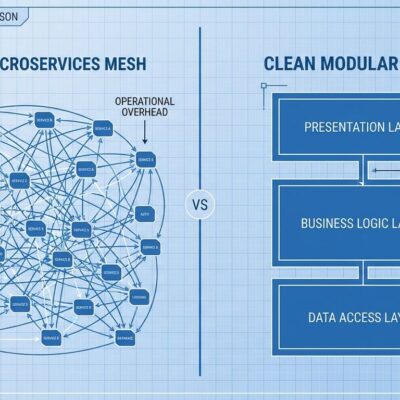

The traditional pain points compound quickly. GPU provisioning requires dedicated infrastructure knowledge – driver setup, CUDA configuration, manual scaling policies. Your ML model that processes user uploads three times per hour needs the same heavyweight infrastructure as a model serving millions of requests. Variable AI workloads hit a wall: either over-provision and waste money, or under-provision and drop requests.

Azure’s serverless GPUs eliminate this tax entirely. Scale-to-zero means GPUs spin up on-demand and disappear when idle. Per-second billing charges for actual compute time, not the hourly blocks you hoped to fill. The platform handles driver setup, CUDA plugins, and automatic scaling. Your infrastructure complexity just dropped to near-zero.

What You Can Actually Build

The use cases cluster around variable-load AI workloads where serverless economics shine. Real-time ML inferencing with custom models gets fast startup times and automatic scaling without provisioning headaches. Batch LLM processing – classification, summarization, structured extraction – runs 10x to 100x faster than traditional approaches, with thousands of organizations already deploying these pipelines.

AI chatbots and assistants scale seamlessly through traffic spikes using natural language processing at scale. Computer vision applications handle image and video analysis with edge integration for low-latency requirements. The technical foundation supports both NVIDIA A100 GPUs for high-performance workloads and T4 GPUs for cost-effective inferencing, with NVIDIA NIM microservices baked in at general availability. The service now spans 11 regions after March’s GA expansion.

Three Trends Colliding

The serverless GPU story makes sense only when you zoom out to the broader infrastructure shift happening right now. The serverless computing market exploded from $24.5 billion in 2024 to a projected $52 billion by 2030 – that’s 14.1% compound annual growth driven by event-driven microservices and edge-native deployments.

AI adoption accelerated in parallel. AI appears in 25% of current job postings. Over 70% of developers use AI coding assistants regularly. AI integration shifted from experimental to infrastructure default. The third trend – edge computing converging with serverless – pushes processing closer to end users for IoT and autonomous vehicles requiring sub-second response times.

Serverless GPUs sit at the intersection. This is infrastructure adapting to how developers actually deploy AI in 2025, not how textbooks said it should work in 2020.

The Challenges Nobody’s Solved Yet

Cold start latency remains real. Azure delivers approximately 5-second container cold starts – competitive with Google Cloud Run’s 4-6 seconds but slower than specialized providers like Modal Labs (2-4 seconds) or RunPod (48% of starts under 200ms). For ultra-low latency requirements, those five seconds matter. For most production workloads processing user requests or batch jobs, they don’t.

The deeper architectural tension involves state management. AI inference is inherently stateful – LLM chat applications rely on key-value caches to accelerate performance, while serverless architectures traditionally assume stateless execution. As SIGARCH research notes, “serverless AI magnifies existing inefficiencies in serverless computing, especially when deploying large AI models on specialized hardware.” The industry is adapting with better state management solutions, but the friction hasn’t disappeared.

Cost modeling requires actual usage patterns, not assumptions. Per-second billing wins decisively for variable workloads. High-utilization scenarios with consistent GPU usage might favor dedicated instances. The answer depends on your specific traffic profile, which means you need to measure before migrating.

What This Means For Developers

The practical question is whether your AI workload belongs on serverless GPUs. Variable traffic patterns with unpredictable spikes – yes, absolutely. Consistent high utilization running inference 24/7 – probably not, run the cost comparison first. Test whether 5-second cold starts fit your latency requirements. Model your costs honestly by comparing per-second serverless against hourly dedicated instances using realistic traffic patterns.

The infrastructure landscape is shifting fast. Watch for sub-second cold start improvements, H100 GPU availability in serverless offerings, and better state management for AI workloads. Multi-cloud serverless GPU strategies will emerge as providers compete on price and performance.

2025 marks the year serverless AI transitions from experimental to production-ready for mainstream developers. The GPU provisioning complexity that blocked smaller teams from deploying AI is evaporating. The infrastructure is finally catching up to the use cases. If you’ve been putting off AI deployment because the infrastructure overhead seemed overwhelming, that excuse just expired.