Anthropic released the latest Model Context Protocol specification update today, introducing async operations that let AI agents handle long-running tasks without blocking. The November 25 release follows a 14-day testing window and marks MCP’s transition from emerging standard to production-ready backbone for enterprise AI integrations.

Async Operations Solve Production’s Biggest Blocker

The headline feature is async support for long-running tasks. Until now, MCP has operated synchronously: when an agent calls a tool, everything stops and waits. Try running a 30-minute data processing job and watch your agent time out.

The new implementation allows servers to kick off long-running tasks while clients check back later for results. Fire off an ETL pipeline syncing 10,000 records, let the agent continue other work, and poll for completion. This unlocks data pipelines, batch API operations, and report generation that previously hit timeout walls.

The design is tracked in SEP-1391. The client starts a task without waiting on it, then either polls for the result or receives a callback when it is ready. Operations can now run for minutes or hours without blocking the agent’s main thread.
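The start-now, collect-later flow can be sketched in plain Python `asyncio`. This is a minimal illustration under stated assumptions, not the SEP-1391 wire format or any MCP SDK API. `TaskRunner`, `start`, `status`, `sync_records`, and the `"working"`/`"completed"`/`"failed"` status strings are hypothetical names chosen for the example, and only the polling path is shown (no callbacks).

```python
import asyncio
import uuid
from dataclasses import dataclass
from typing import Any, Awaitable, Callable, Optional


@dataclass
class TaskRecord:
    # Hypothetical bookkeeping for one long-running job.
    status: str = "working"  # "working" | "completed" | "failed"
    result: Any = None
    error: Optional[str] = None


class TaskRunner:
    """Hypothetical server-side helper: start jobs, hand back an ID, report status."""

    def __init__(self) -> None:
        self._tasks: dict[str, TaskRecord] = {}
        self._handles: dict[str, asyncio.Task] = {}

    def start(self, job: Callable[..., Awaitable[Any]], *args: Any) -> str:
        # Schedule the job and return an ID immediately instead of awaiting it.
        task_id = str(uuid.uuid4())
        record = TaskRecord()
        self._tasks[task_id] = record

        async def run() -> None:
            try:
                record.result = await job(*args)
                record.status = "completed"
            except Exception as exc:  # record the failure instead of crashing the runner
                record.error = str(exc)
                record.status = "failed"

        self._handles[task_id] = asyncio.create_task(run())
        return task_id

    def status(self, task_id: str) -> TaskRecord:
        return self._tasks[task_id]


async def sync_records(count: int) -> int:
    # Stand-in for an ETL job: "syncs" records in batches of 1,000.
    synced = 0
    for _ in range(0, count, 1000):
        await asyncio.sleep(0.01)
        synced += min(1000, count - synced)
    return synced


async def agent(runner: TaskRunner) -> int:
    task_id = runner.start(sync_records, 10_000)
    other_steps = 0
    # Poll until the job finishes; the loop body stands in for other agent work.
    while runner.status(task_id).status == "working":
        other_steps += 1
        await asyncio.sleep(0.005)
    record = runner.status(task_id)
    if record.status == "failed":
        raise RuntimeError(record.error)
    return record.result


if __name__ == "__main__":
    print(asyncio.run(agent(TaskRunner())))
```

Because `start` returns an ID before the job has run, the agent stays free between status checks. A production server would likely also persist task records so a client that reconnects can still find its results.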

GitHub Proves It Works at Scale

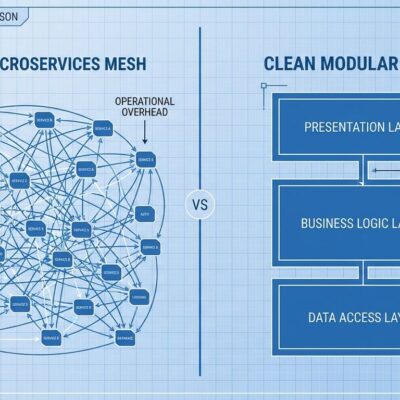

The code-first pattern GitHub validated shows what production MCP deployment looks like. Its MCP server cut token usage from 150,000 to 2,000, a 98.7% reduction that carries through to cost and latency.

The trick is filtering data in the execution environment rather than passing everything through the model’s context. Anthropic’s engineering approach presents MCP servers as code APIs, loading tool definitions on demand instead of up front. Progressive disclosure means the agent discovers what it needs when it needs it, not before.
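A rough sketch of both ideas follows: filtering inside the execution environment, and loading tool definitions on demand. It is not GitHub’s or Anthropic’s code. The tool names, schemas, row counts, and the `summarize_pending` helper are hypothetical, and sizes are compared in characters of JSON rather than real model tokens.

```python
import json
from functools import lru_cache

# Hypothetical tool registry held by the server. Only names go to the model up front.
_SCHEMAS = {
    "sheets.get_rows": {"description": "Return every row of a sheet", "params": {"sheet_id": "string"}},
    "crm.update_record": {"description": "Update one CRM record", "params": {"id": "string", "fields": "object"}},
}


def list_tool_names() -> list[str]:
    # Cheap listing: names only, no schemas.
    return sorted(_SCHEMAS)


@lru_cache(maxsize=None)
def describe_tool(name: str) -> str:
    # Progressive disclosure: a full schema is serialized only when the agent asks for it.
    return json.dumps(_SCHEMAS[name])


def get_rows(sheet_id: str) -> list[dict]:
    # Stand-in for a tool with a large result: 10,000 rows, 1 in 100 still pending.
    return [{"id": i, "sheet": sheet_id, "status": "pending" if i % 100 == 0 else "done"}
            for i in range(10_000)]


def summarize_pending(sheet_id: str, preview: int = 5) -> str:
    # Runs in the execution environment; only this short summary reaches the model.
    pending = [row for row in get_rows(sheet_id) if row["status"] == "pending"]
    return json.dumps({"pending_count": len(pending),
                       "preview": [row["id"] for row in pending[:preview]]})


if __name__ == "__main__":
    full = json.dumps(get_rows("sheet-1"))
    summary = summarize_pending("sheet-1")
    print(list_tool_names())
    print(describe_tool("sheets.get_rows"))
    print(f"unfiltered: {len(full):,} chars; filtered: {len(summary):,} chars")
```

The model sees a short list of tool names and a compact summary. The 10,000-row table never leaves the sandbox.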

This isn’t theoretical optimization. GitHub runs this in production, and the 98% token reduction translates directly to lower API costs and faster response times. When a major platform validates your architecture, it’s no longer an experiment.

Discovery Gets Easier with Well-Known URLs

Server discovery now works through .well-known URLs, following RFC 8615—the established standard for metadata exposure. Servers advertise their capabilities at /.well-known/mcp/ without requiring connections or authentication first.

Think of it as the server’s business card that anyone can read without knocking on the door. This enables MCP registries to auto-catalog available servers, developers to browse capabilities before committing, and debugging tools to inspect servers without credentials. The improvement might seem minor until you’ve manually tested 20 servers to find the one that does what you need.
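Under RFC 8615, well-known URIs sit at the root of an origin, so a client can derive the discovery address from any server URL. The sketch below assumes the `/.well-known/mcp/` path given above and a JSON document with `name` and `capabilities.tools` fields. Those field names are hypothetical, so check the specification for the actual document format before relying on them.

```python
import json
from urllib.parse import urlsplit
from urllib.request import urlopen

# Path as described in the article; treat it and the fields below as assumptions.
WELL_KNOWN_PATH = "/.well-known/mcp/"


def discovery_url(server_url: str) -> str:
    # RFC 8615: well-known URIs live at the origin root, whatever path the server uses.
    parts = urlsplit(server_url)
    return f"{parts.scheme}://{parts.netloc}{WELL_KNOWN_PATH}"


def parse_capabilities(body: str) -> dict:
    # Hypothetical schema: keep only what a registry might index.
    doc = json.loads(body)
    tools = doc.get("capabilities", {}).get("tools", [])
    return {"name": doc.get("name", "unknown"), "tools": sorted(tools)}


def fetch_capabilities(server_url: str, timeout: float = 5.0) -> dict:
    # Plain unauthenticated GET: no MCP session, no credentials.
    with urlopen(discovery_url(server_url), timeout=timeout) as resp:
        return parse_capabilities(resp.read().decode("utf-8"))


if __name__ == "__main__":
    print(discovery_url("https://mcp.example.com/v1/mcp?tenant=acme"))
    sample = '{"name": "reports", "capabilities": {"tools": ["run_report", "list_reports"]}}'
    print(parse_capabilities(sample))
```

A registry crawler could call `fetch_capabilities` across a list of hosts and index the results without opening a single MCP session.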

Horizontal Scaling for Enterprise Workloads

The Transport Working Group addressed the pain points around server startup and session handling that prevented enterprise deployments. Stateless operation support now enables horizontal scaling across multiple MCP server instances with load balancing and graceful upgrades.

This matters when you’re handling thousands of concurrent AI agents in production. Prototype code that runs synchronously for 10 test users looks fine. Scale to 1,000 users and response times can balloon from 3 seconds to 45. Async operations combined with horizontal scaling make production SLAs achievable.
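One common way to get stateless replicas is to keep session data out of each server’s memory, so any instance behind the load balancer can take any request. The sketch below assumes that design. It is not necessarily what the Transport Working Group specified. A dict stands in for an external store such as Redis, and `SharedStore`, `ServerInstance`, and `RoundRobinBalancer` are hypothetical names, not MCP SDK classes.

```python
import itertools


class SharedStore:
    """Stand-in for an external session store; a dict keeps the sketch self-contained."""

    def __init__(self) -> None:
        self._data: dict[str, list[str]] = {}

    def append(self, session_id: str, item: str) -> list[str]:
        self._data.setdefault(session_id, []).append(item)
        return list(self._data[session_id])


class ServerInstance:
    """Hypothetical MCP server replica that keeps no per-session state in memory."""

    def __init__(self, name: str, store: SharedStore) -> None:
        self.name = name
        self.store = store

    def handle(self, session_id: str, tool_call: str) -> dict:
        # All session context comes from (and goes back to) the shared store.
        history = self.store.append(session_id, tool_call)
        return {"served_by": self.name, "calls_in_session": len(history)}


class RoundRobinBalancer:
    """Sends each request to the next replica, with no session affinity."""

    def __init__(self, instances: list[ServerInstance]) -> None:
        self._cycle = itertools.cycle(instances)

    def route(self, session_id: str, tool_call: str) -> dict:
        return next(self._cycle).handle(session_id, tool_call)


if __name__ == "__main__":
    store = SharedStore()
    lb = RoundRobinBalancer([ServerInstance(f"replica-{i}", store) for i in range(3)])
    for call in ["search", "fetch", "summarize"]:
        print(lb.route("session-42", call))
    # Simulated rolling upgrade: brand-new replicas, same shared store.
    upgraded = RoundRobinBalancer([ServerInstance(f"replica-v2-{i}", store) for i in range(2)])
    print(upgraded.route("session-42", "export"))  # calls_in_session continues at 4
```

Three consecutive calls in one session land on three different replicas, yet the session history stays intact. Replacing every replica, as in a rolling upgrade, loses nothing because no replica owned the state.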

From Experiment to Standard in 12 Months

MCP’s trajectory tells the story. Anthropic launched it as an open standard in November 2024. OpenAI officially adopted it in March 2025, integrating MCP into ChatGPT’s desktop app and the Agents SDK. By November 2025, the community has built thousands of MCP servers bridging everything from GitHub to SAP systems.

The network effects are kicking in. More servers mean more value for everyone implementing MCP. Official integrations from GitHub and WooCommerce signal enterprise commitment. SDKs covering Python, TypeScript, C#, Java, and Swift make adoption language-agnostic.

Thoughtworks called MCP “the best example of a standard evolving to control context effectively.” When industry observers and major platforms align on one protocol, developers win. Write your integration once, and it works with any MCP-compatible client.

What This Means for Developers

The November 25 release completes MCP’s evolution from interesting experiment to production infrastructure. Async operations eliminate the synchronous blocking that killed real-world agent deployments. GitHub’s 98% token reduction shows the architecture holds up in production. Horizontal scaling and discovery improvements check the enterprise requirements boxes.

If you’re building AI agents or evaluating integration standards, MCP just became the safe bet. The protocol handles the plumbing—authentication, discovery, state management, async execution—so you can focus on what your agent actually does. That’s the promise of any good standard: solve the common problems once, then move on to the interesting work.