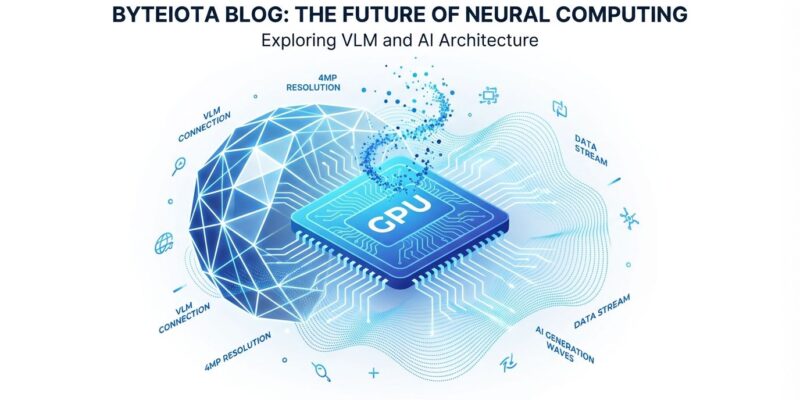

Black Forest Labs released FLUX.2 on November 25, pairing a 24-billion-parameter vision-language model (VLM) with image generation for the first time in the FLUX line. NVIDIA’s FP8 optimization work cut VRAM requirements by 40% and boosted performance by 40%, bringing the 32-billion-parameter model to consumer RTX GPUs. A model that needed datacenter infrastructure before the release now runs on gaming GPUs, with weight streaming covering cards that lack enough memory.

The release marks a shift from pure image generation toward “reasoning” visual AI. Integrating Mistral-3’s VLM improves prompt adherence, world knowledge, and compositional logic compared with models that only generate pixels. Arriving five days after Google launched Gemini 3 Pro Image with similar reasoning claims, FLUX.2 sends a clear message: the industry is moving beyond impressive demos toward production-ready reliability.

Optimization Engineering Beats Bigger Models

Fully loaded, FLUX.2 demands 90GB of VRAM, which rules out consumer hardware. NVIDIA’s quantization work changed that: the FP8 implementation cuts VRAM to roughly 54GB while maintaining quality, and performance improved 40% on RTX GPUs. ComfyUI’s weight streaming feature goes further, letting systems with less than 32GB of VRAM offload model components to system RAM, trading some speed for the ability to run the model at all.
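
To make the FP8 step concrete, here is a minimal sketch of generic per-tensor FP8 quantization. It assumes the E4M3 format (4 exponent bits, 3 mantissa bits, maximum 448) and a single scale per tensor; the article does not describe NVIDIA’s actual recipe (scaling granularity, which layers stay in higher precision), so treat `round_to_e4m3` and `quantize_per_tensor` as illustrative helpers, not NVIDIA’s or Black Forest Labs’ code.

```python
import math

# Assumption: weights use FP8 E4M3, whose largest finite magnitude is 448.
E4M3_MAX = 448.0


def round_to_e4m3(x: float) -> float:
    """Round x to the nearest E4M3-representable value, saturating at +/-448."""
    if x == 0.0:
        return 0.0
    sign = -1.0 if x < 0 else 1.0
    a = abs(x)
    if a >= E4M3_MAX:
        return sign * E4M3_MAX
    # With 3 mantissa bits, values in [2^e, 2^(e+1)) are spaced 2^(e-3) apart.
    # Below the smallest normal exponent (-6), subnormals keep a 2^-9 spacing.
    e = max(math.floor(math.log2(a)), -6)
    step = 2.0 ** (e - 3)
    q = round(a / step) * step  # Python's round() breaks ties to even
    return sign * min(q, E4M3_MAX)


def quantize_per_tensor(weights):
    """Scale so the largest |w| maps to E4M3_MAX, then round each value to FP8."""
    amax = max(abs(w) for w in weights)
    scale = amax / E4M3_MAX if amax > 0 else 1.0
    codes = [round_to_e4m3(w / scale) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map FP8 codes back to real values using the stored per-tensor scale."""
    return [c * scale for c in codes]


# Hypothetical weights, chosen only to span several orders of magnitude.
weights = [0.012, -0.53, 0.0007, 1.9, -0.2]
codes, scale = quantize_per_tensor(weights)
for w, r in zip(weights, dequantize(codes, scale)):
    print(f"{w:+.4f} -> {r:+.5f}")

# Storage: BF16 uses 2 bytes per weight, FP8 uses 1 (plus one scale per tensor).
params = 32e9
print(f"32B weights: BF16 {params * 2 / 1e9:.0f} GB, FP8 {params * 1 / 1e9:.0f} GB")
```

Halving bytes per weight is where the savings come from; the rounding keeps each value within about 6% of the original for normal-range values, which is why quality can hold up.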

This matters because optimization made a datacenter-class model usable on consumer hardware. The same 32 billion parameters that required cloud infrastructure yesterday run locally today. Day-0 ComfyUI support means developers don’t wait for tooling: the model shipped with integration ready. As a result, developers with 24GB cards such as the RTX 3090 or 4090 can run frontier visual AI, using weight streaming, without recurring cloud costs or vendor dependencies.
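
Running a roughly 54GB FP8 model on a 24GB card means most weights must live in system RAM and be copied in as needed. The sketch below shows the basic planning idea behind that kind of weight streaming. It is not ComfyUI’s algorithm: the 48-block layout, the `Block` type, `plan_offload`, and the 4GB activation reserve are all hypothetical numbers chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Block:
    name: str
    size_gb: float


def plan_offload(blocks, vram_gb, activation_reserve_gb):
    """Split blocks into GPU-resident and streamed-from-RAM sets.

    Reserves room for activations plus one staging buffer as large as the
    biggest block (so a streamed block can be copied in before it runs), then
    keeps blocks resident first-fit in execution order.
    """
    staging_gb = max(b.size_gb for b in blocks)
    usable = vram_gb - activation_reserve_gb - staging_gb
    resident, streamed, used = [], [], 0.0
    for b in blocks:
        if used + b.size_gb <= usable:
            resident.append(b)
            used += b.size_gb
        else:
            streamed.append(b)
    return resident, streamed


# Hypothetical layout: ~54 GB of FP8 weights split into 48 equal blocks.
blocks = [Block(f"block_{i}", 1.125) for i in range(48)]
resident, streamed = plan_offload(blocks, vram_gb=24.0, activation_reserve_gb=4.0)
streamed_gb = sum(b.size_gb for b in streamed)
print(f"resident: {len(resident)} blocks, streamed: {len(streamed)} blocks")
print(f"weights copied from system RAM per forward pass: {streamed_gb:.1f} GB")
```

The streamed portion has to cross the PCIe bus on every forward pass, and a sampler runs at least one forward pass per step, which is the speed cost the weight streaming trade-off refers to.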

The lesson: engineering work on quantization and memory management democratizes frontier models as effectively as architectural innovation. FLUX.2’s accessibility breakthrough came from NVIDIA’s optimization, not just Black Forest Labs’ model design.

VLM Integration Brings Reasoning to Visual AI

FLUX.2 couples Mistral-3’s 24-billion-parameter VLM with a rectified flow transformer. The VLM provides real-world knowledge and contextual understanding. The transformer handles spatial relationships, material properties, and compositional logic. Together, they deliver better prompt adherence for complex, multi-part instructions compared to pure generation models.
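
A rectified flow model generates an image by integrating a learned velocity field from noise toward data along (ideally) straight paths. The toy sketch below shows only that sampling loop. It assumes an oracle velocity in place of the trained transformer, and the `cond` vector is a hypothetical stand-in for “the latent the prompt asks for”; in FLUX.2 the transformer instead predicts velocity from the noisy latent, the timestep, and the VLM’s prompt embeddings. Time conventions differ between implementations; here t = 0 is noise and t = 1 is data.

```python
import random


def oracle_velocity(x, t, cond):
    """Toy stand-in for the transformer's velocity prediction.

    On the straight path x_t = (1 - t) * noise + t * cond, the exact velocity
    (cond - x_t) / (1 - t) equals cond - noise at every t < 1.
    """
    return [(c - xi) / (1.0 - t) for xi, c in zip(x, cond)]


def sample(cond, steps=8, seed=0):
    """Integrate dx/dt = v(x, t, cond) from t = 0 (noise) to t = 1 with Euler steps."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in cond]  # t = 0: pure Gaussian noise
    dt = 1.0 / steps
    for i in range(steps):
        t = i * dt  # always < 1, so the division in oracle_velocity is safe
        v = oracle_velocity(x, t, cond)
        x = [xi + dt * vi for xi, vi in zip(x, v)]  # Euler step along the flow
    return x


cond = [0.5, -1.0, 2.0, 0.0]  # hypothetical target latent
print(sample(cond, steps=8))
print(sample(cond, steps=1))  # a perfectly straight path needs only one step
```

Because the oracle path is exactly straight, even one Euler step lands on the target; a learned velocity field is only approximately straight, so real samplers use more steps.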

This marks an industry pivot point. DALL-E 3, Midjourney, and Stable Diffusion excel at visually impressive outputs but have struggled with compositional accuracy and world knowledge. FLUX.2 and Gemini 3 Pro Image, released five days earlier, both emphasize reasoning capabilities, a sign that vendors are racing toward enterprise reliability over pure aesthetic quality.

The trade-off is model size. FLUX.2’s 32 billion parameters dwarf most competitors with published sizes, driving the VRAM requirements that made NVIDIA’s optimization work necessary. For professional workflows where prompt accuracy matters more than generation speed, the reasoning capability justifies the size.

Professional Features Hit Consumer Hardware

FLUX.2 handles 4-megapixel output, multi-reference consistency across up to 10 images, and complex typography that “works reliably in production,” according to Black Forest Labs. Text rendering has been a persistent weakness of visual AI; FLUX.2 makes infographics, UI mockups, and memes with legible fine text practical.
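
Hitting a 4-megapixel budget at a non-square aspect ratio takes a little arithmetic. The sketch below assumes “4 megapixels” means 4,000,000 pixels (some tools treat it as 2048 × 2048, about 4.19 million) and assumes each side must be a multiple of 16; latent image models usually require some divisibility factor, but FLUX.2’s exact constraint is not stated in this article. `dims_for_megapixels` is a hypothetical helper.

```python
import math


def dims_for_megapixels(megapixels, aspect_w, aspect_h, multiple=16):
    """Width x height near the target pixel count at the given aspect ratio,
    with each side rounded down to a multiple of `multiple` so the total
    never exceeds the target."""
    target_pixels = megapixels * 1_000_000
    height = math.sqrt(target_pixels * aspect_h / aspect_w)
    width = height * aspect_w / aspect_h
    w = int(width // multiple) * multiple
    h = int(height // multiple) * multiple
    return w, h


for ratio in [(1, 1), (16, 9), (3, 2)]:
    w, h = dims_for_megapixels(4, *ratio)
    print(f"{ratio[0]}:{ratio[1]} -> {w}x{h} ({w * h / 1e6:.2f} MP)")
```

Rounding both sides down trades a sliver of resolution for never overshooting the budget; rounding to the nearest multiple instead would land closer to 4MP but could exceed it.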

The multi-reference feature combines up to 10 images to keep characters, products, and styles consistent across generations. Marketing teams can generate brand-compliant campaign assets, product teams get mockups with accurate UI text, and designers maintain style across iterations. These aren’t demo features but production workflow capabilities, now accessible on consumer GPUs.

Brand guideline adherence and lighting and layout control target enterprise creative workflows. Organizations hesitant to adopt visual AI because of inconsistency or brand-compliance concerns get tools built for reliability. This likely explains the timing relative to Gemini 3 Pro Image: vendors see enterprise adoption as the next growth phase, which demands production-grade features rather than impressive one-off outputs.

Open Weights vs Closed Alternatives

FLUX.2 ships in three variants: [pro] and [flex] are closed commercial models, while [dev] offers open weights for non-commercial use. A fourth variant, [klein], is a size-distilled model under the Apache 2.0 license and is due soon. The open [dev] variant contrasts with closed competitors such as DALL-E, Midjourney, and Gemini.

Open weights enable community inspection, custom fine-tuning, and self-hosting without vendor lock-in. Black Forest Labs states visual intelligence “should be shaped by researchers, creatives, and developers everywhere, not just a few.” For organizations that require model transparency or data privacy through self-hosting, FLUX.2 [dev] provides an option absent from closed alternatives.

The team’s track record reinforces this approach. Black Forest Labs’ founders, including Robin Rombach, Andreas Blattmann, and Patrick Esser, created Stable Diffusion at Ludwig Maximilian University before joining Stability AI. Five of the ten co-founders came from Stability’s exodus in March 2024. They have disrupted visual AI with open models before, and FLUX.2 continues that pattern, backed by a $31 million seed round led by Andreessen Horowitz.

Key Takeaways

- NVIDIA’s FP8 quantization cuts FLUX.2’s VRAM needs by 40% (from 90GB to roughly 54GB), bringing 32-billion-parameter visual AI from datacenters to consumer RTX GPUs

- Mistral-3’s 24B VLM integration delivers reasoning capabilities—better prompt adherence and world knowledge compared to pure generation models

- Professional features (4MP output, 10-image consistency, production-ready typography) now run on consumer RTX hardware

- Released five days after Gemini 3 Pro Image, signaling an industry shift toward enterprise reliability over aesthetic demos

- The open-weight [dev] variant enables community inspection and self-hosting, contrasting with closed alternatives like DALL-E and Midjourney